Introduction

Large Language Models (LLMs) have revolutionized our day-to-day productivity with their ability to understand and generate text across a wide range of topics. But while LLMs excel at conversation, summarization, and content generation, they have a key limitation: they stop at language. They can tell you what to do, but they can’t do it for you.

This is where Language Action Models (LAMs) come in. LAMs build on the foundation of LLMs but add a crucial new capability: action. Instead of just producing responses, LAMs can understand intent, plan steps, and interact with tools or systems to carry out tasks. Whether the task is automating database updates or driving language-powered robotics, LAMs are designed to turn natural language into meaningful, real-world outcomes.

What is a Language Action Model (LAM)?

A Language Action Model (LAM), also referred to as a Large Action Model, is an AI system that not only understands language but uses it to perform actions. Unlike traditional LLMs that focus on generating text, LAMs are built to understand human intentions, plan next steps, and translate those intentions into actions within a given environment or system.

LAMs combine language understanding with tool use, decision-making, and memory. They can call APIs, interact with apps, and reference past context to follow through on complex tasks — all while communicating naturally with users.
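As a rough illustration of tool use combined with memory, the sketch below routes a structured tool call (the kind of JSON a model might emit) to a registered Python function and records the exchange. The tool names, the JSON shape, and the `dispatch` helper are all hypothetical and not tied to any particular LAM framework.

```python
import json
from typing import Callable

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: dict[str, Callable[..., str]] = {}

def tool(fn):
    """Register a function so it can be called by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def create_reminder(text: str, time: str) -> str:
    return f"Reminder set for {time}: {text}"

@tool
def lookup_order(order_id: int) -> str:
    return f"Order {order_id}: shipped"

def dispatch(model_output: str, memory: list) -> str:
    """Parse a JSON tool call, run the tool, and store the exchange in memory."""
    call = json.loads(model_output)
    name, args = call["tool"], call.get("arguments", {})
    if name not in TOOLS:
        result = f"Unknown tool: {name}"
    else:
        result = TOOLS[name](**args)
    memory.append({"call": call, "result": result})
    return result

memory: list = []
# In a real system this string would come from the model; here it is hard-coded.
print(dispatch('{"tool": "lookup_order", "arguments": {"order_id": 7}}', memory))
```

Keeping the registry explicit means the model can only reach functions that were deliberately exposed, and the `memory` list gives later turns something to reference.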

Key Use Cases

LAMs are useful anywhere natural language can drive real action. Common applications include:

- Workflow Automation: Triggering sequences like generating reports, updating databases, or managing emails, all through a chat interface.

- Personal Assistants & Robotics: Scheduling meetings, setting reminders, or controlling smart devices by interpreting natural commands.

- Developer Tools: Writing, editing, and running code within an integrated development environment (IDE) in response to text-based instructions.

- Research Agents: Searching, reading, summarizing, and compiling insights from large volumes of documents or data. A research agent can also fill in labeled data fields based on what the documents contain.

These use cases show how LAMs bridge the gap between communication and execution, making AI more practical and hands-on.

How do LAMs Work?

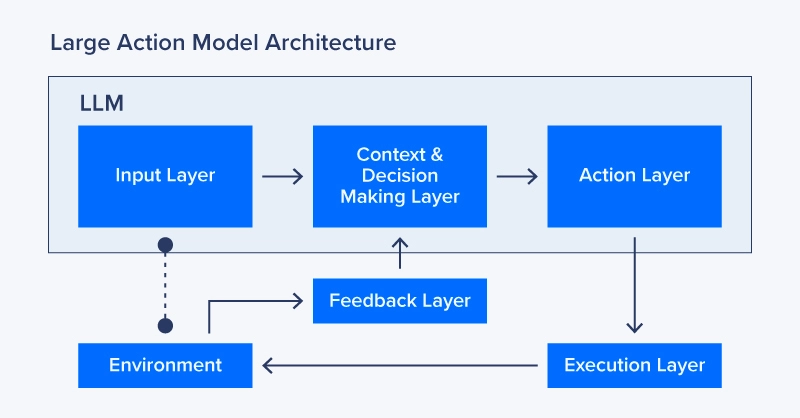

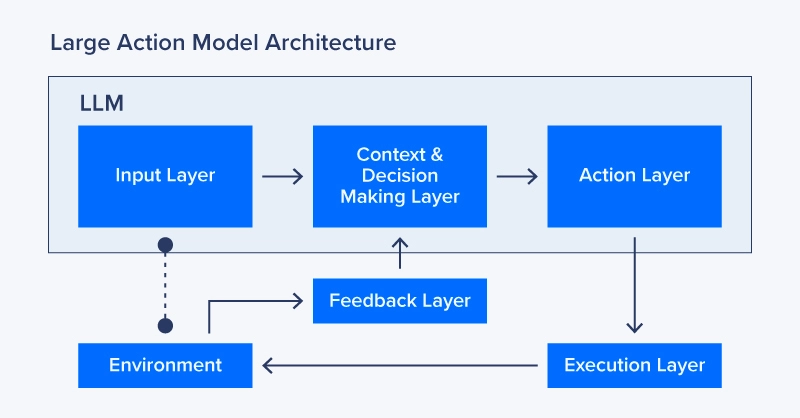

LAMs operate through a series of interconnected components that enable them to understand language, make decisions, and perform tasks:

- Input Layer: Powered by a foundational LLM, the input layer receives text or voice inputs to understand the context of the user request.

- Context & Decision Making Layer: Interprets goals, loads context into memory, and evaluates the situation and environment to determine the best approach to the task.

- Action Layer: Takes the output of the Context & Decision Making Layer and maps it to a concrete action plan. The plan can include API calls, system commands, a text response, or other mapped functions.

- Execution Layer: Executes the action plan laid out by the Action Layer, such as calling APIs, using a tool, or querying a database.

- Feedback Layer: Feeds the outcome of the process back to the model so it can evaluate success or failure. This layer can trigger retries, adjustments, and learning updates.
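The five layers above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the function names mirror the layer names, a keyword rule stands in for the LLM and planner, and execution is simulated rather than calling real systems. The action names `query_db`, `send_email`, and `reply` are invented for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    goal: str
    memory: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

def input_layer(utterance: str) -> str:
    # Stand-in for an LLM: normalize the request into a goal string.
    return utterance.strip().lower()

def decision_layer(goal: str, memory: dict) -> Context:
    # Load stored context alongside the interpreted goal.
    return Context(goal=goal, memory=memory)

def action_layer(ctx: Context) -> list:
    # Map the goal to a concrete plan; a rule stands in for a real planner.
    if "report" in ctx.goal:
        recipient = ctx.memory.get("manager", "unknown")
        return [("query_db", {"table": "sales"}),
                ("send_email", {"to": recipient})]
    return [("reply", {"text": "Sorry, I cannot do that yet."})]

def execution_layer(step: tuple) -> dict:
    # Simulated execution; a real system would call an API or tool here.
    name, args = step
    return {"action": name, "args": args, "ok": True}

def feedback_layer(ctx: Context, result: dict) -> bool:
    # Record the outcome and report whether the loop should continue.
    ctx.history.append(result)
    return result["ok"]

def run(utterance: str, memory: dict) -> list:
    ctx = decision_layer(input_layer(utterance), memory)
    for step in action_layer(ctx):
        if not feedback_layer(ctx, execution_layer(step)):
            break  # stop on the first failed step
    return ctx.history

for outcome in run("Generate the weekly report", {"manager": "dana@example.com"}):
    print(outcome["action"], outcome["args"])
```

Each layer is an ordinary function, so any one of them can be swapped for a real model call or tool client without touching the others.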

Here's an example of a LAM in a personal robot, a setting where computer vision is also necessary. NVIDIA has teased the ability to talk to and interact with humanoid robots. If you ask your robot to place an apple in a basket or bring you a drink, a Large Action Model can turn that request into action. For a request to fetch a soda, the layers break down as follows:

- Input Layer: Voice or text prompts are fed to the model to understand the user's instruction, for example, "Can you bring me a soda from the kitchen?" The input layer uses an LLM to parse the command, recognize the objects involved ("soda", "kitchen"), and translate this into a structured goal.

- Context + Decision Layer: Determines the high-level plan. It first settles on the intent ("Navigate to the kitchen, locate a soda, grasp it, return to user") and may also query memory: Where is the kitchen? What does a soda can look like?

- Action Layer: Converts the plan into concrete action steps, generating specific commands such as:

  - `NavigateTo(location="kitchen")`

  - `DetectObject(label="soda can")`

  - `GraspObject(object_id=42)`

  - `NavigateTo(location="user")`

  - `ReleaseObject(object_id=42)`

- Execution Layer: Executes each command using low-level robot control and sensors:

  - Controls the motors to move to the kitchen.

  - Runs object detection via camera.

  - Commands the arm to grasp and move.

  - Monitors sensors for collision avoidance, object grip feedback, etc.

- Feedback Layer: Evaluates whether each step succeeded. If the soda is not detected, retry detection or escalate an error. If the grip fails, adjust position and try again. If the user has moved, recalculate the route.
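The execution and feedback steps of the robot walkthrough can be sketched as a retry loop. This is a simulation only: `SimulatedRobot` is a hypothetical stand-in whose first detection attempt fails on purpose, and `max_retries` is an assumed parameter. A real robot stack would replace `execute` with motor and perception calls.

```python
from dataclasses import dataclass

@dataclass
class Command:
    name: str
    args: dict

class SimulatedRobot:
    """Fake robot: the first detection attempt fails, everything else succeeds."""

    def __init__(self):
        self.detect_attempts = 0
        self.log = []

    def execute(self, cmd: Command) -> bool:
        self.log.append(cmd.name)
        if cmd.name == "DetectObject":
            self.detect_attempts += 1
            return self.detect_attempts > 1
        return True

# The plan from the walkthrough above, expressed as data.
PLAN = [
    Command("NavigateTo", {"location": "kitchen"}),
    Command("DetectObject", {"label": "soda can"}),
    Command("GraspObject", {"object_id": 42}),
    Command("NavigateTo", {"location": "user"}),
    Command("ReleaseObject", {"object_id": 42}),
]

def run_plan(robot: SimulatedRobot, plan: list, max_retries: int = 2) -> bool:
    """Run each command, retrying failures; escalate if retries run out."""
    for cmd in plan:
        for _attempt in range(1 + max_retries):
            if robot.execute(cmd):
                break  # step succeeded, move on to the next command
        else:
            print(f"Escalating: {cmd.name} failed after {1 + max_retries} attempts")
            return False
    return True

robot = SimulatedRobot()
print(run_plan(robot, PLAN))
print(robot.log)
```

The log shows `DetectObject` twice, which is the feedback layer retrying after the first failed detection before the plan continues.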

Considerations for Deploying a LAM

While Language Action Models (LAMs) unlock advanced capabilities, their deployment requires deliberate engineering to ensure reliability, safety, and performance. Below are critical factors to consider:

Key Deployment Factors

- Tool and API Integration: LAMs must interface with external systems to perform tasks. This requires robust API connectors, clear input/output schemas, and secure authentication protocols.

- System Complexity: A LAM pipeline may include intent parsing, planning modules, tool execution, memory management, and monitoring. Orchestrating these components demands modular design and clear system boundaries.

- Compute Requirements: Depending on model size and task complexity, real-time LAM inference may require GPU acceleration, especially if reasoning or multi-step planning is involved.

- Memory and Context: Persistent user and task context improve performance and personalization. Securely storing and retrieving relevant session or user history is essential for continuity.

- Security and Governance: LAMs can take real-world actions, so it's important to define strict execution permissions, track decisions, and build in override mechanisms to prevent misuse or failure.

- Performance and Latency: Each LAM loop — intent recognition, planning, execution — adds latency. Low-latency planning, caching, and pre-processing can improve responsiveness.
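Two of the factors above, clear input schemas and caching for latency, lend themselves to a short sketch. The schema format, the `update_record` argument names, and `fetch_table_columns` are assumptions made for illustration. Only `functools.lru_cache` is a real standard-library facility.

```python
from functools import lru_cache

# Hypothetical schema: argument name -> required Python type.
UPDATE_RECORD_SCHEMA = {"table": str, "record_id": int, "fields": dict}

def validate_args(args: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the call is well-formed."""
    errors = [f"missing argument: {k}" for k in schema if k not in args]
    errors += [f"unexpected argument: {k}" for k in args if k not in schema]
    errors += [
        f"{k} should be {t.__name__}, got {type(args[k]).__name__}"
        for k, t in schema.items()
        if k in args and not isinstance(args[k], t)
    ]
    return errors

@lru_cache(maxsize=256)
def fetch_table_columns(table: str) -> tuple:
    """Stand-in for a slow, read-only metadata lookup that is safe to cache."""
    return ("id", "name", "status") if table == "customers" else ()

# A model-proposed call with a missing field and a wrongly typed ID.
proposed = {"table": "customers", "record_id": "7"}
for problem in validate_args(proposed, UPDATE_RECORD_SCHEMA):
    print(problem)
```

Rejecting malformed calls before execution keeps bad model output away from external systems, and caching is best reserved for read-only lookups whose results do not change between steps.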

Best Practices for LAM Deployment

To build a robust and scalable LAM-powered system, consider the following best practices:

- Start with a Controlled Scope: Limit the number of tools or APIs the LAM can access early on. Focus on stable, well-documented endpoints and expand gradually.

- Modular Architecture: Separate model inference, planning logic, tool execution, and memory handling into clear components. This aids in debugging, scaling, and maintenance.

- Implement Role-Based Access Control (RBAC): Use RBAC and scopes to restrict the LAM’s capabilities based on the task or user role. This prevents accidental or unauthorized actions.

- Fallback and Confirmation Mechanisms: For high-impact actions, implement user confirmation steps or verification prompts before execution.

- Monitor and Log Actions: Maintain detailed logs of actions taken, decision traces, and user input to support observability, audits, and incident response.

- Use Lightweight Planning When Possible: Avoid complex reasoning when rules or heuristics suffice. Streamlined planning can significantly reduce latency and error risk.

- Design for Human-in-the-Loop (HITL): In early deployments, give humans oversight on key decisions or plans generated by the LAM, especially in sensitive applications.
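Several of these practices (RBAC, confirmation for high-impact actions, and action logging) can be combined in one guard function. The sketch below is one possible shape, not a prescribed design: the role names, the action names, and the `guarded_execute` helper are hypothetical.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("lam.actions")

# Hypothetical role -> allowed actions mapping.
PERMISSIONS = {
    "viewer": {"read_report"},
    "operator": {"read_report", "update_record"},
    "admin": {"read_report", "update_record", "delete_record"},
}
# Actions that need explicit human confirmation before they run.
HIGH_IMPACT = {"delete_record"}

def guarded_execute(
    role: str,
    action: str,
    run: Callable[[], str],
    confirm: Callable[[str], bool] = lambda action: False,
) -> str:
    """Check permissions, require confirmation if needed, then run and log."""
    if action not in PERMISSIONS.get(role, set()):
        log.warning("denied role=%s action=%s", role, action)
        return "denied"
    if action in HIGH_IMPACT and not confirm(action):
        log.info("awaiting confirmation role=%s action=%s", role, action)
        return "needs_confirmation"
    result = run()
    log.info("executed role=%s action=%s", role, action)
    return result

print(guarded_execute("viewer", "update_record", lambda: "done"))
print(guarded_execute("admin", "delete_record", lambda: "done"))
print(guarded_execute("admin", "delete_record", lambda: "done", confirm=lambda a: True))
```

The default `confirm` callback refuses, so a high-impact action runs only when the caller explicitly wires in a human approval step.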

Conclusion

Language Action Models (LAMs) build on traditional language models by transforming natural language into executable actions. With capabilities like intent recognition, planning, and tool use, LAMs enable systems to move toward autonomous task completion.

This shift addresses a core limitation of LLMs — their inability to act — and opens the door to smarter, more efficient automation. As AI adoption grows, LAMs are poised to become foundational in developing agents that can operate independently and productively.

LAMs represent the infrastructure needed to build systems that not only understand instructions but also respond with meaningful, goal-directed action.

