Maximizing AI Training Efficiency - Selecting the Right Model

Introduction

Training models quickly and accurately is essential for building trust in AI-powered workflow tools. As AI-powered applications become capable of executing increasingly complex tasks, data scientists and machine learning engineers can explore more innovative approaches.

Choosing the appropriate model, dataset, and deployment for a specific use case streamlines the AI development process and yields better results.

Selecting the Right Model

Choosing the right model architecture is key to getting good results on your specific task, because different kinds of problems call for different architectures:

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs)

- Transformer Models

- GANs and Diffusion Models

- Reinforcement Learning

- Autoencoders

When choosing a model architecture, consider the kind of data you have, the complexity of your task, and the resources available to you. It is often a good idea to start with a simpler model and add complexity only as needed. Beyond the six families listed above, there are many other architectures worth exploring.

Convolutional Neural Networks (CNNs)

CNNs are ideal for image processing tasks. They excel at extracting patterns such as edges, textures, and objects from visual data by sliding learned filters across the image to detect spatial relationships.

- Use Cases: Image classification, object detection

- Compute Requirements: High GPU compute, since applying filters across large batches of high-resolution images is computationally intensive

- Notable Architectures: EfficientNet, ResNet, CNNs with attention mechanisms
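To make the filter idea concrete, here is a minimal pure-Python sketch of the 2D operation a convolutional layer applies (deep learning libraries actually compute a cross-correlation and call it convolution). The `conv2d` helper, the toy image, and the hand-picked Sobel-style `VERTICAL_EDGE` filter are illustrative assumptions; a real CNN learns many such filters from data and runs them on a GPU through a framework such as PyTorch or TensorFlow.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter with the image patch under it and sum the products.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hand-picked Sobel-style vertical-edge filter; a trained CNN learns its own filters.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy 5x6 grayscale image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

feature_map = conv2d(image, VERTICAL_EDGE)
for row in feature_map:
    print(row)
```

Each output row comes out as `[0.0, 4.0, 4.0, 0.0]`: the filter responds only where its window straddles the dark-to-bright boundary, which is the kind of spatial pattern a CNN layer is built to detect.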

CNNs have been a mainstay of computer vision for years, learning filter weights that let them classify and detect objects in images. With the rise of the transformer architecture, Vision Transformers (ViTs) have also become a strong alternative.

Recurrent Neural Networks (RNNs)

RNNs are best suited for sequential or time-dependent data, where the order of information is crucial. They are widely used in applications like language modeling, speech recognition, and time-series forecasting because an RNN carries a hidden state from one step to the next, which lets it capture dependencies within a sequence.

- Use Cases: Sequential data, time series analysis, speech recognition, forecasting

- Compute Requirements: Moderate to high GPU compute

- Notable Architectures: LSTM, GRU, bidirectional RNNs
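The hidden-state mechanism can be sketched with a single-unit, Elman-style RNN in plain Python. The weights `w_x`, `w_h`, and `b` are arbitrary illustrative constants rather than trained values; LSTMs and GRUs add gating on top of this basic recurrence.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.9, b=0.0):
    """Run a single-unit RNN over a sequence and return every hidden state.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    The default weights are illustrative, not learned.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state,
        # which is how information from earlier steps is carried forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


print(rnn_forward([1.0, 0.0, 0.0]))  # the first input still influences later states
print(rnn_forward([0.0, 0.0, 1.0]))  # same values in a different order
```

Feeding the same values in a different order produces a different final hidden state, which is why RNNs suit data where order matters.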

RNNs were once the go-to architecture for natural language processing but have largely been superseded by transformer models like BERT and GPT. RNNs remain relevant, however, for highly sequential tasks and real-time analysis such as weather modeling and stock forecasting.

Transformer Models

Transformer models revolutionized AI for sequence data, especially in natural language processing. Transformers process entire text sequences in parallel, using self-attention to weigh the importance of each token (a word or word piece) relative to every other token in context. Parallel processing makes training on large datasets efficient, and self-attention lets the model capture long-range context, which boosts performance on complex language tasks. Transformers can still perform poorly if training is badly tuned, uses low-quality data, or is insufficient, resulting in hallucinations or false positives.

- Use Cases: Language processing, text generation, chatbots, knowledge bases

- Compute Requirements: Training requires extreme GPU compute; inference requires moderate to high GPU compute

- Popular Architectures: BERT and GPT
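A minimal single-head version of scaled dot-product self-attention might look like the following. This is a sketch assuming identity projection matrices and tiny hand-written token vectors; real transformers learn the query, key, and value projections, use many heads, and compute all positions at once with batched matrix multiplications on the GPU rather than Python loops.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output = attention-weighted average of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)])
    return outputs, all_weights


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of attention weights sums to 1, and tokens whose keys align with a query receive more weight; here the first token attends equally to itself and to the third token, and less to the second.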

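Transformer models can also be extended beyond their base training. Retrieval-Augmented Generation (RAG) inserts relevant external documents into the prompt at inference time, while Mixture of Experts (MoE) routes each token through a small subset of specialized sub-networks, increasing model capacity without a proportional increase in compute per token. Both are ways to enhance the functionality of a highly generalized AI model.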
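The retrieval half of RAG can be sketched without a language model. In this hypothetical example, `build_rag_prompt` ranks documents by bag-of-words cosine similarity (a stand-in for the embedding-based vector search that production systems typically use) and prepends the best match to the question. Sending the resulting prompt to an LLM is left out, and the documents are toy data.

```python
import math
from collections import Counter


def bag_of_words(text):
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_rag_prompt(question, documents, top_k=1):
    """Retrieve the top_k most similar documents and place them before the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    context = "\n".join(ranked[:top_k])
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical knowledge base.
docs = [
    "RNNs carry a hidden state across time steps",
    "CNNs apply learned filters to images",
]
print(build_rag_prompt("what do cnns apply to images", docs))
```

The model itself is untouched; only the prompt changes, which is what makes RAG a lightweight way to ground a general model in specific data.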

Image Generation Models: Diffusion & GANs

Diffusion models and GANs generate new, realistic data. These generative models are popular in creative fields for producing images, video, and even music, and they are also used for data augmentation when training other models.

- Use Cases: Image generation by prompt, image augmentation, artistic ideation, 3D model generation, image upscaling, denoising

- Compute Requirements: A GAN generates an image in a single forward pass of its generator, whereas a diffusion model generates one through many sequential denoising steps, so diffusion sampling is typically slower. Both demand high GPU compute, especially for higher-fidelity generation.

- Popular Architectures: Stable Diffusion, Midjourney, StyleGAN, DCGAN

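Diffusion models learn to reverse a gradual noising process. Starting from pure random noise, a trained denoising network removes a little noise at each step, guided by a conditioning signal such as a text prompt, until a convincing image emerges. Tens to hundreds of these passes turn static into an original piece of art.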
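The forward (noising) half of a diffusion model has a simple closed form, sketched below with the linear beta schedule popularized by the DDPM paper (1,000 steps, beta from 1e-4 to 0.02). The function names and the four-value "image" are illustrative. Generation runs the process in reverse with a trained neural network that predicts and removes the noise at each step; that network is omitted here.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the signal fraction left at step t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise in one shot."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule())
x0 = [1.0, -1.0, 0.5, 0.0]  # tiny stand-in for image pixels
for t in (0, 250, 999):
    noisy = add_noise(x0, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 5), [round(v, 2) for v in noisy])
```

`alpha_bar` falls from about 0.9999 at the first step to well below 0.001 at the last, so the final sample is essentially pure noise: the static that generation starts from.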

Generative adversarial networks (GANs) pit two competing models against each other: a generator that creates images and a discriminator that judges whether a given image is real or generated. Training alternates between the two so that both keep improving, ideally until the generator's output is realistic enough that the discriminator can no longer reliably tell it apart from real data.
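The adversarial game is usually expressed as a pair of binary cross-entropy losses. The sketch below computes them from discriminator output probabilities, using the common non-saturating form of the generator loss. The networks themselves and the alternating gradient updates are omitted, and the probability values are made up for illustration.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy that pushes D(real) toward 1 and D(fake) toward 0."""
    n = len(d_real)
    return -sum(math.log(r) + math.log(1.0 - f) for r, f in zip(d_real, d_fake)) / n


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1, i.e. fool the discriminator."""
    return -sum(math.log(f) for f in d_fake) / len(d_fake)


# At the ideal equilibrium the discriminator outputs 0.5 for everything.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln(2), about 1.386
print(generator_loss([0.5, 0.5]))                  # ln(2), about 0.693
# A discriminator that easily spots fakes gives the generator a large loss.
print(generator_loss([0.05, 0.1]))
```

When the discriminator can no longer tell real from fake and outputs 0.5 everywhere, neither side can lower its loss by guessing, which is the equilibrium GAN training aims for.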

Reinforcement Learning

Reinforcement Learning (RL) is well-suited for decision-making tasks that involve interacting with an environment to achieve a specific objective. RL models learn by trial and error, making them ideal for applications in robotics, game playing, and autonomous systems, where the model receives feedback from its actions to progressively improve its performance. RL shines in scenarios where the AI must develop strategies over time, balancing short-term actions with long-term goals.

- Use Cases: Gameplay optimization, exploit finding, creating competitive computer-controlled opponents, decision making

- Compute Requirements: Varies with task complexity, but RL benefits from additional GPU compute

- Popular Algorithms: Q-Learning, Deep Q-Network (DQN), Soft Actor-Critic (SAC)
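Trial-and-error learning can be illustrated with tabular Q-learning on a tiny, hypothetical corridor environment: the agent starts at state 0 and receives a reward of 1 only when it reaches state 4. The environment, `step` function, and hyperparameters (`alpha`, `gamma`, `epsilon`, episode counts) are illustrative choices. DQN replaces the table with a neural network, which is where GPU compute starts to matter.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Toy corridor: reward 1 for reaching the goal, 0 otherwise."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q_row, rng):
    best = max(q_row)
    # Break ties randomly so the untrained agent does not always pick the same action.
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q[state], rng)
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train_q_learning()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # learned greedy action for each non-goal state
```

With these settings the learned greedy policy moves right (action 1) from every non-goal state, even though the agent is never told where the goal is; it discovers this purely from the reward signal.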

Hobbyists have trained many RL-based agents to play games. Tuning and training an RL model requires careful thought about the environment and rewards so that the agent does not learn unintended behavior. For example, one practitioner training an agent on the driving game Trackmania removed its ability to brake, encouraging it to carry speed through turns. He did not want the agent to learn to take turns successfully only by braking constantly.

Autoencoders

Autoencoders are a type of unsupervised neural network designed to learn efficient codings of input data by compressing it into a lower-dimensional representation and then reconstructing it. This involves an encoder compressing the input and a decoder reconstructing it. Autoencoders are particularly well-suited for tasks such as dimensionality reduction, data denoising, and anomaly detection. They excel in applications like image and signal processing, where they can remove noise from data or detect unusual patterns that deviate from the norm. Additionally, they are used in generating synthetic data and feature extraction, making them versatile tools in various machine learning and data preprocessing tasks.

- Use Cases: Data compression, anomaly detection, and noise reduction.

- Compute Requirements: Moderate compute; can run on mid-range GPUs for smaller data.

- Popular Architectures: Vanilla autoencoders, variational autoencoders (VAE).
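The compress-then-reconstruct idea, and its use for anomaly detection, can be sketched with a one-unit linear autoencoder trained by gradient descent in plain Python. The toy data, tied encoder/decoder weights, learning rate, and epoch count are illustrative assumptions; practical autoencoders are deeper, nonlinear networks built in a deep learning framework. A linear autoencoder like this one ends up learning the same subspace that principal component analysis would find.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def encode(w, x):
    return dot(w, x)  # 2-D input -> 1-D code


def decode(w, code):
    return [wi * code for wi in w]  # 1-D code -> 2-D reconstruction (tied weights)


def reconstruction_error(w, x):
    x_hat = decode(w, encode(w, x))
    return sum((xi - hi) ** 2 for xi, hi in zip(x, x_hat))


def train_linear_autoencoder(data, lr=0.02, epochs=500):
    """Full-batch gradient descent on the mean squared reconstruction error."""
    w = [1.0, 0.0]  # arbitrary starting weights
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for x in data:
            s = encode(w, x)
            r = [xi - wi * s for xi, wi in zip(x, w)]  # residual x - x_hat
            rw = dot(r, w)
            # Gradient of ||x - w (w . x)||^2 with respect to w: -2 * (s * r + (r . w) * x)
            for j in range(2):
                grad[j] += -2.0 * (s * r[j] + rw * x[j]) / len(data)
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


# "Normal" data lies on the line y = 2x; the autoencoder learns to compress onto it.
normal_data = [[t, 2 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w = train_linear_autoencoder(normal_data)

print(reconstruction_error(w, [0.3, 0.6]))   # on the learned line: near zero
print(reconstruction_error(w, [2.0, -1.0]))  # off the line: large error, flagged as an anomaly
```

The point on the learned line reconstructs almost perfectly, while the off-line point loses most of its information in the 1-D bottleneck and produces a reconstruction error close to 5; thresholding that error is a common way to flag anomalies.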

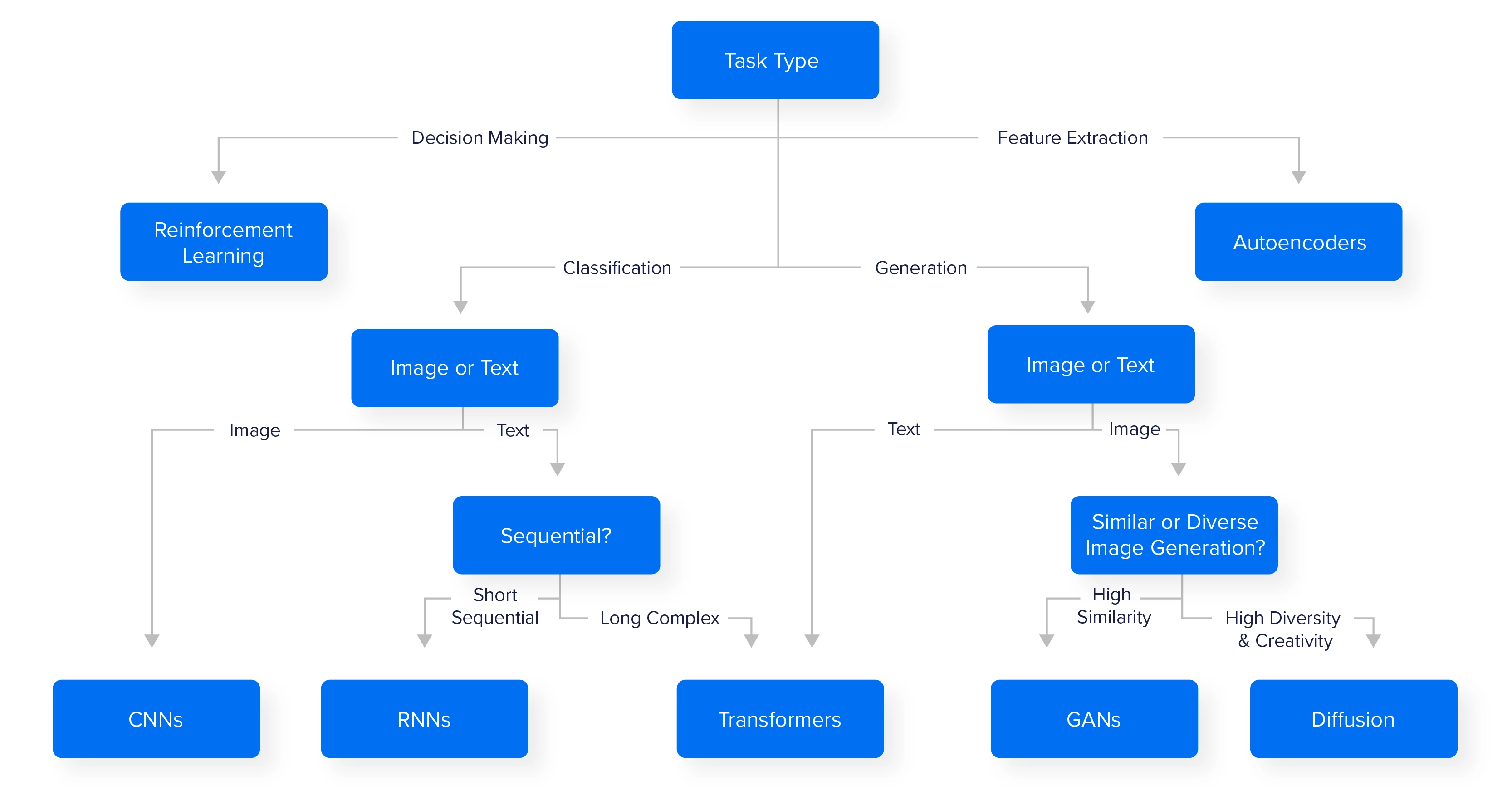

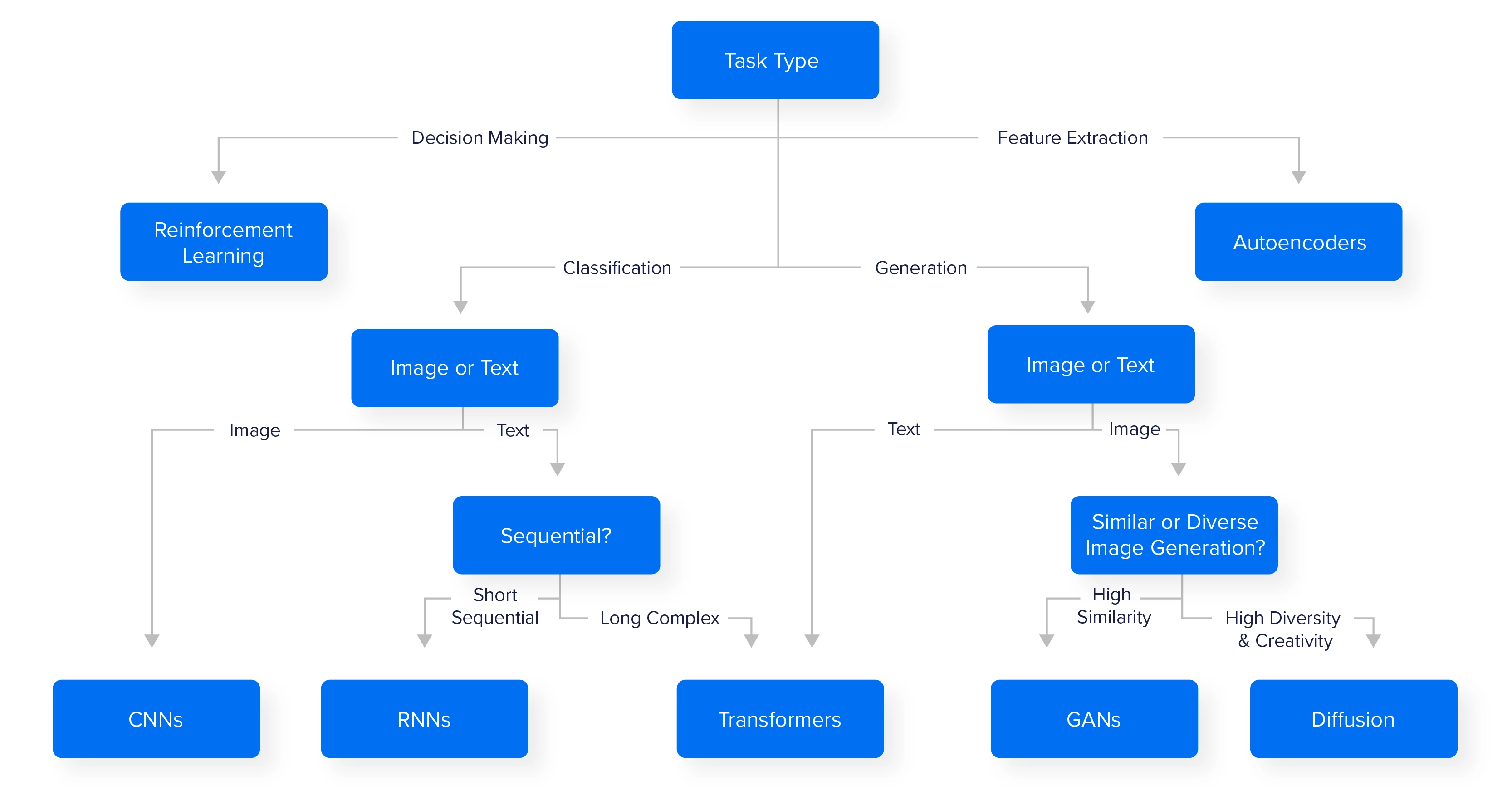

Model Selection Guideline

We developed a table and a rough flow chart to help you choose the appropriate AI model for your use case. These are just suggestions, and there are numerous other models to choose from, but this can get you started.

| Model | Use Case | Relative GPU Compute Requirement |
| --- | --- | --- |
| Convolutional Neural Network | Image Processing, Classification and Detection | ⭐⭐⭐⭐ |
| Recurrent Neural Network | Sequential Data, Time Series | ⭐⭐⭐ |
| Transformers | Complex Natural Language, Chatbots, Knowledge Bases | ⭐⭐⭐⭐⭐ |
| Generative Adversarial Networks | Data Generation | ⭐⭐⭐⭐ |
| Diffusion Models | Image Generation | ⭐⭐⭐⭐ |
| Reinforcement Learning | Decision Making, Robotics, Games | ⭐⭐⭐ |
| Autoencoders | Data Compression, Anomaly Detection | ⭐⭐⭐ |
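For quick reference, the table can be encoded as a simple keyword lookup. This is a hypothetical helper, not the authors' flow chart: `suggest_models` returns every row whose use-case keywords (lowercased and lightly adapted from the table and the sections above) appear in a task description, filtered by a maximum star rating and sorted from least to most compute.

```python
# Rows transcribed from the model selection table; stars = relative GPU compute (1-5).
MODEL_TABLE = [
    ("Convolutional Neural Network", ["image processing", "image classification", "object detection"], 4),
    ("Recurrent Neural Network", ["sequential data", "time series"], 3),
    ("Transformers", ["natural language", "chatbot", "knowledge base"], 5),
    ("Generative Adversarial Networks", ["data generation"], 4),
    ("Diffusion Models", ["image generation"], 4),
    ("Reinforcement Learning", ["decision making", "robotics", "games"], 3),
    ("Autoencoders", ["data compression", "anomaly detection"], 3),
]


def suggest_models(task, max_stars=5):
    """Return (model, stars) rows whose keywords appear in the task, cheapest first."""
    task = task.lower()
    matches = [
        (name, stars)
        for name, keywords, stars in MODEL_TABLE
        if stars <= max_stars and any(kw in task for kw in keywords)
    ]
    return sorted(matches, key=lambda m: m[1])  # stable sort keeps table order on ties


print(suggest_models("Anomaly detection on sensor time series"))
```

Substring matching is deliberately crude; it is only meant as a starting point for the experimentation the guideline recommends.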

Model Selection Decision Tree

Vision Transformers (ViTs) offer a transformer-based alternative to CNNs, and other models may perform better still for your specific use case. We encourage practitioners to experiment with different architectures to achieve the desired result.
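The key idea that lets a transformer handle images is turning an image into a sequence of tokens. The original ViT splits the image into fixed-size patches (16×16 pixels), flattens each patch, and linearly projects it into an embedding before adding position information. The sketch below covers only the patch-splitting step on a toy 4×4 image; `patchify` is an illustrative name, and the projection and transformer encoder are omitted.

```python
def patchify(image, patch_size):
    """Split an H x W image into non-overlapping patches, each flattened into a token."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten the patch row by row into one vector.
            tokens.append([
                image[top + i][left + j]
                for i in range(patch_size)
                for j in range(patch_size)
            ])
    return tokens


image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image, pixel = index
tokens = patchify(image, patch_size=2)
print(len(tokens))  # 4 patches
print(tokens[0])    # top-left patch: [0, 1, 4, 5]
```

Once the image is a sequence of patch tokens, the same self-attention machinery used for text applies unchanged, which is what makes ViTs a drop-in alternative to CNNs.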

However, training these models efficiently, running exploratory analysis, and benchmarking different approaches is computationally expensive. High-performance hardware is necessary to complete training runs faster.

If you are looking to upgrade your computing infrastructure and boost productivity, Exxact empowers AI engineers and data scientists with custom-built hardware optimized for your use case. Increasing GPU compute is a worthwhile investment for any operation in today's AI landscape.

Accelerate Training with an Exxact Multi-GPU Workstation

With the latest CPUs and most powerful GPUs available, accelerate your deep learning and AI projects with a system optimized for your deployment, budget, and desired performance!
