NVIDIA RTX A5000 Benchmarks

For this blog article, we benchmarked TensorFlow deep learning performance on NVIDIA RTX A5000 GPUs.

Our deep learning server was fitted with eight RTX A5000 GPUs, and we ran the standard “tf_cnn_benchmarks.py” benchmark script from the official TensorFlow GitHub repository. We tested the following networks: ResNet50, ResNet152, Inception v3, and GoogLeNet. Each test was run on 1, 2, 4, and 8 GPUs, with a batch size of 128 for FP32 and 256 for FP16.
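The test matrix described above can be sketched as a small script that builds one command line per configuration. This is a minimal sketch, not the exact invocation behind the published numbers: the flag names (`--model`, `--num_gpus`, `--batch_size`, `--use_fp16`) and the model identifiers are assumed to match what `tf_cnn_benchmarks.py` accepts, and `build_commands` is a hypothetical helper introduced only for illustration.

```python
from itertools import product

# Model identifiers assumed to match the names tf_cnn_benchmarks.py accepts.
MODELS = ["resnet50", "resnet152", "inception3", "googlenet"]
GPU_COUNTS = [1, 2, 4, 8]
# Batch size per precision, as stated in the article.
BATCH_SIZES = {"fp32": 128, "fp16": 256}


def build_commands(script="tf_cnn_benchmarks.py"):
    """Return one argv list per (precision, model, GPU count) combination."""
    commands = []
    for precision, model, num_gpus in product(BATCH_SIZES, MODELS, GPU_COUNTS):
        argv = [
            "python", script,
            f"--model={model}",
            f"--num_gpus={num_gpus}",
            f"--batch_size={BATCH_SIZES[precision]}",
        ]
        # Assumed flag for enabling half-precision training.
        if precision == "fp16":
            argv.append("--use_fp16=True")
        commands.append(argv)
    return commands


if __name__ == "__main__":
    for argv in build_commands():
        print(" ".join(argv))
```

Running the script prints 32 command lines (2 precisions x 4 models x 4 GPU counts), which can be reviewed before launching any of them.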

Key Points and Observations

- The NVIDIA RTX A5000 exhibits near-linear scaling up to 8 GPUs: in the benchmark tables below, 8 GPUs deliver roughly 6.7x to 7.4x the single-GPU throughput.

- The RTX A5000 is expandable up to 48GB of memory using NVIDIA NVLink® to connect two GPUs.

- PCIe Gen 4: Doubles the bandwidth of the previous generation and speeds up data transfers for data-intensive tasks such as AI, data science, and creating 3D models.

- Supports NVIDIA RTX vWS (virtual workstation software) so it can deliver multiple high-performance virtual workstation instances that enable remote users to share resources.

NVIDIA RTX A5000 Highlights:

| GPU Features | NVIDIA RTX A5000 |
| --- | --- |
| CUDA Cores | 8192 |
| Tensor Cores & Performance | 256 / 222.2 TFLOPS |
| RT Cores & Performance | 64 / 54.2 TFLOPS |
| Single Precision Performance | 27.8 TFLOPS |
| GPU Memory | 24GB GDDR6 with ECC |
| Memory Interface & Bandwidth | 384-bit / 768 GB/sec |
| System Interface | PCI Express 4.0 x16 |
| Display Connectors | 4x DisplayPort 1.4a |
| Maximum Power Consumption | 230 W |
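A few figures in the highlights table can be cross-checked against each other with simple arithmetic. The sketch below uses only values from the table plus one labeled assumption: that each CUDA core retires one fused multiply-add (2 floating-point operations) per clock. The derived clock and per-pin data rate are implied values, not official specifications.

```python
# Values copied from the highlights table above.
CUDA_CORES = 8192
FP32_TFLOPS = 27.8
BUS_WIDTH_BITS = 384
BANDWIDTH_GB_S = 768
MAX_POWER_W = 230

# Assumption: one fused multiply-add (2 FLOPs) per CUDA core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2

# Clock speed implied by the single-precision figure, in GHz.
implied_clock_ghz = FP32_TFLOPS * 1e12 / (CUDA_CORES * FLOPS_PER_CORE_PER_CLOCK) / 1e9

# Memory data rate per bus pin implied by the bandwidth figure, in Gbit/s.
per_pin_gbit_s = BANDWIDTH_GB_S * 8 / BUS_WIDTH_BITS

# Peak single-precision throughput per watt at maximum board power.
gflops_per_watt = FP32_TFLOPS * 1000 / MAX_POWER_W

print(f"Implied clock:      {implied_clock_ghz:.3f} GHz")
print(f"Per-pin data rate:  {per_pin_gbit_s:.1f} Gbit/s")
print(f"Peak FP32 per watt: {gflops_per_watt:.1f} GFLOPS/W")
```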

Interested in getting faster results?

Learn more about Exxact deep learning workstations starting at $3,700

Exxact RTX A5000 System Specs:

| Workstation Feature | Specification |
| --- | --- |
| Make/Model | Supermicro AS-4124GS-TN |
| Nodes | 1 |
| Processor/Count | 2x AMD EPYC 7552 |
| Total Logical Cores | 48 |
| Memory | DDR4 512GB |
| Storage | NVMe 3.84TB |
| OS | Ubuntu 18.04 |
| CUDA Version | 11.2 |
| TensorFlow Version | 2.31 |

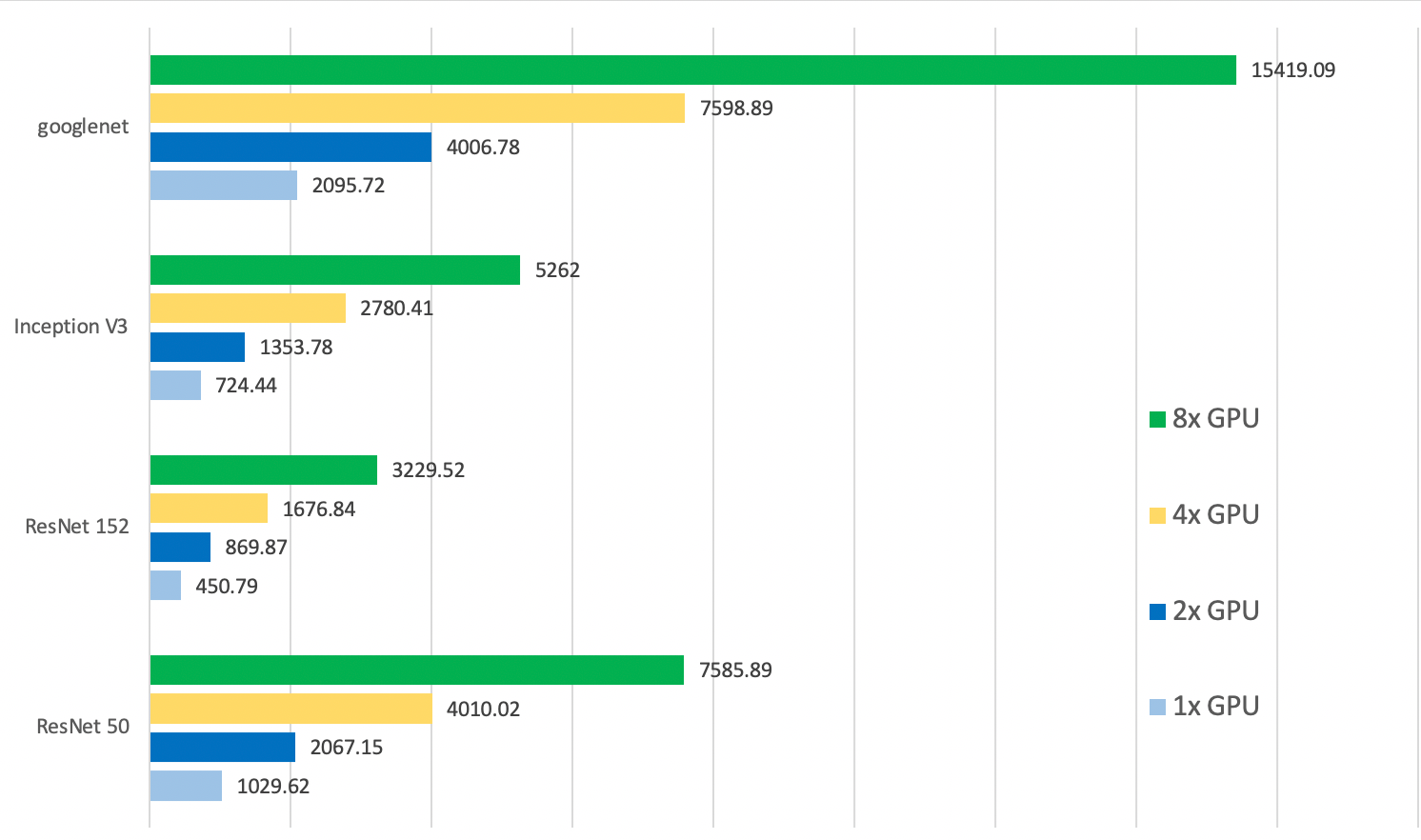

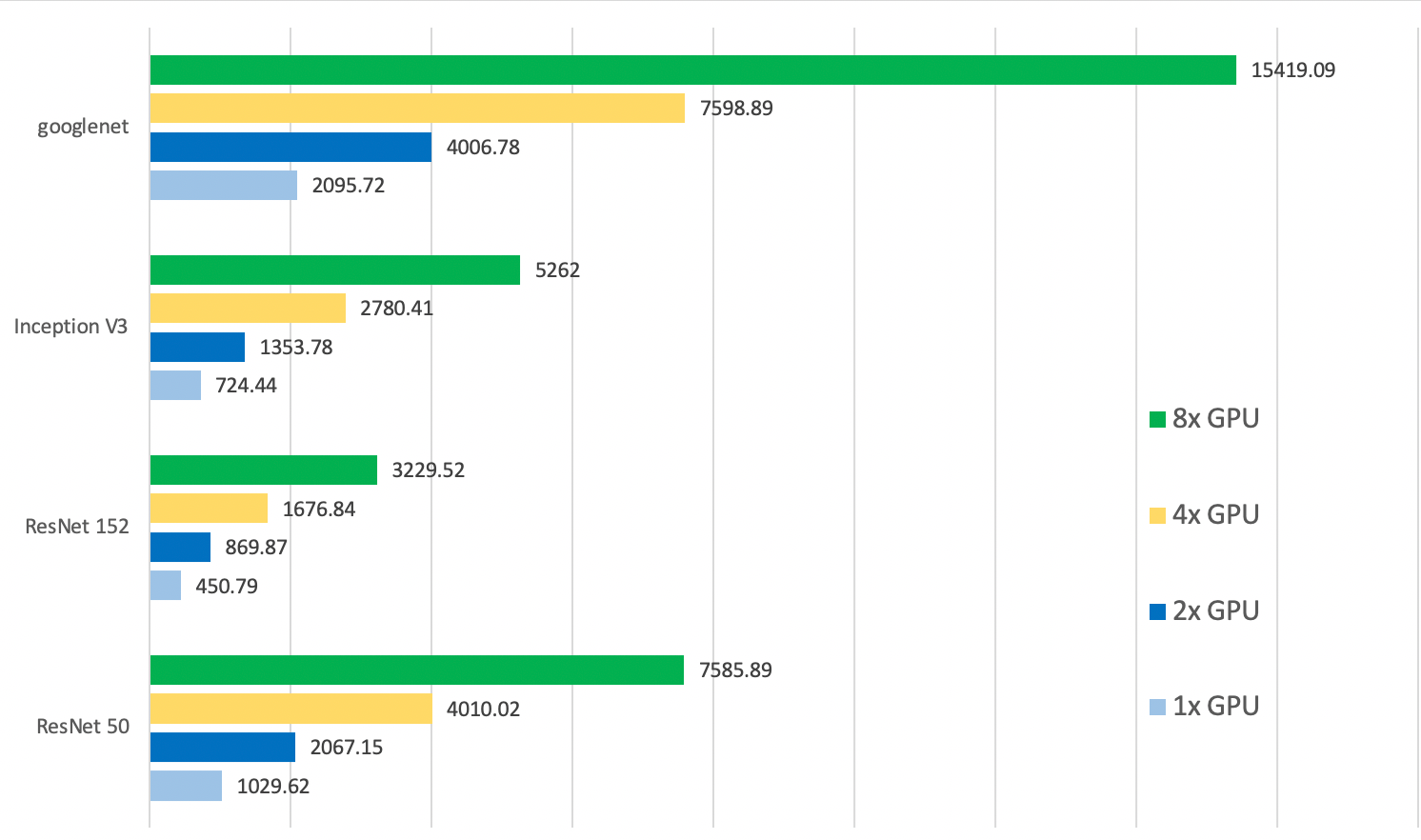

NVIDIA RTX A5000 TensorFlow FP16 Benchmarks

| Model Type | 1x GPU | 2x GPU | 4x GPU | 8x GPU |
| --- | --- | --- | --- | --- |
| ResNet 50 | 1029.62 | 2067.15 | 4010.02 | 7585.89 |
| ResNet 152 | 450.79 | 869.87 | 1676.84 | 3229.52 |
| Inception V3 | 724.44 | 1353.78 | 2780.41 | 5262 |
| GoogLeNet | 2095.72 | 4006.78 | 7598.89 | 15419.09 |

Throughput is in images/sec. Batch size is 256 for all FP16 tests.
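To put a number on the near-linear scaling claim, the FP16 results above can be converted into speedup and scaling efficiency relative to the single-GPU run. The sketch below is illustrative only: the data is copied from the table, and `scaling` is a hypothetical helper name. Efficiency here means speedup divided by GPU count, so 1.0 would be perfectly linear scaling.

```python
GPU_COUNTS = [1, 2, 4, 8]

# FP16 throughput (images/sec) copied from the table above.
FP16 = {
    "ResNet 50": [1029.62, 2067.15, 4010.02, 7585.89],
    "ResNet 152": [450.79, 869.87, 1676.84, 3229.52],
    "Inception V3": [724.44, 1353.78, 2780.41, 5262.0],
    "GoogLeNet": [2095.72, 4006.78, 7598.89, 15419.09],
}


def scaling(throughputs, gpu_counts=GPU_COUNTS):
    """Return (speedup, efficiency) pairs relative to the single-GPU run."""
    base = throughputs[0]
    return [(t / base, t / base / n) for t, n in zip(throughputs, gpu_counts)]


for model, throughputs in FP16.items():
    speedup, efficiency = scaling(throughputs)[-1]
    print(f"{model}: {speedup:.2f}x on 8 GPUs ({efficiency:.0%} efficiency)")
```

The same helper can be applied to any row of the FP32 table by passing its four throughput values.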

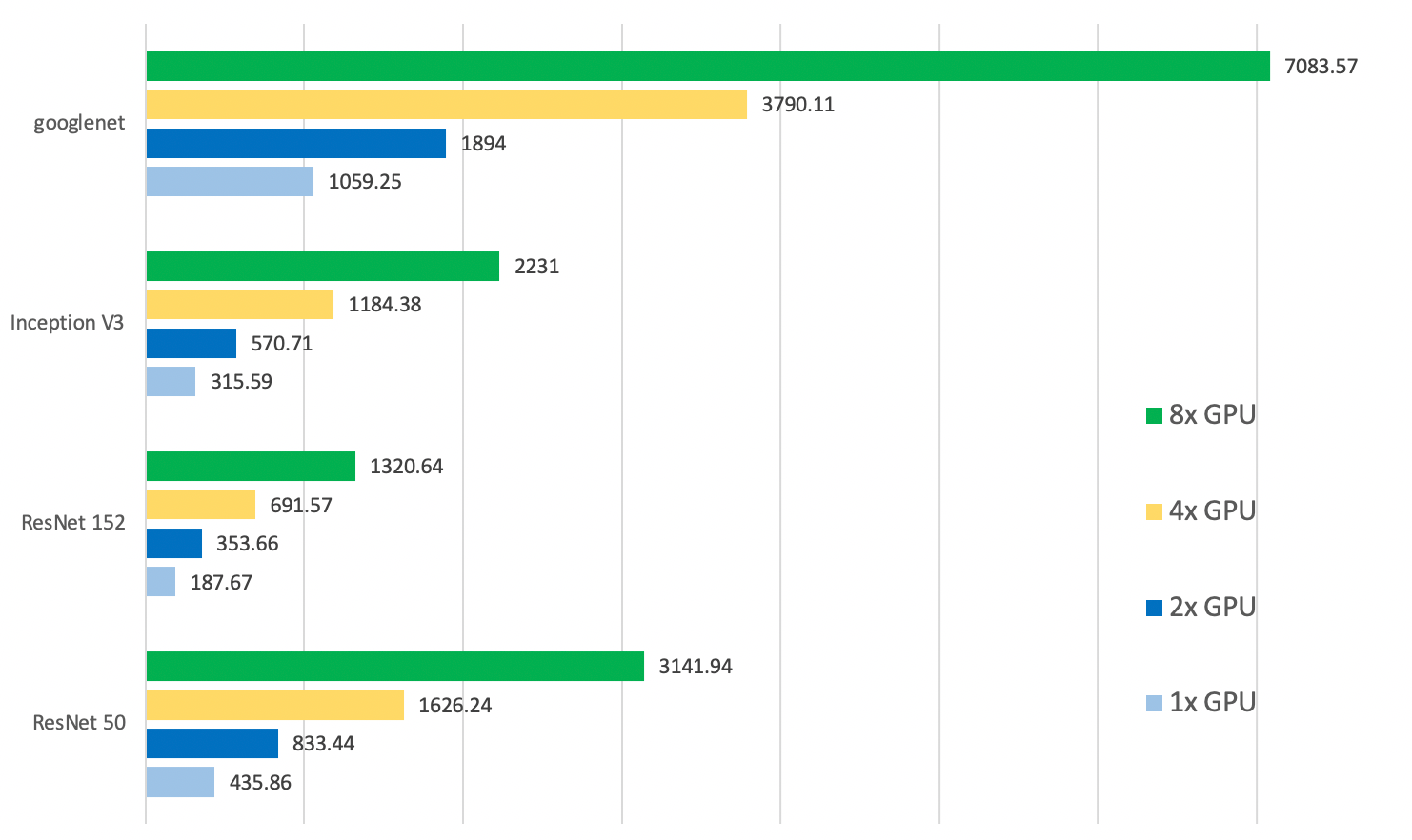

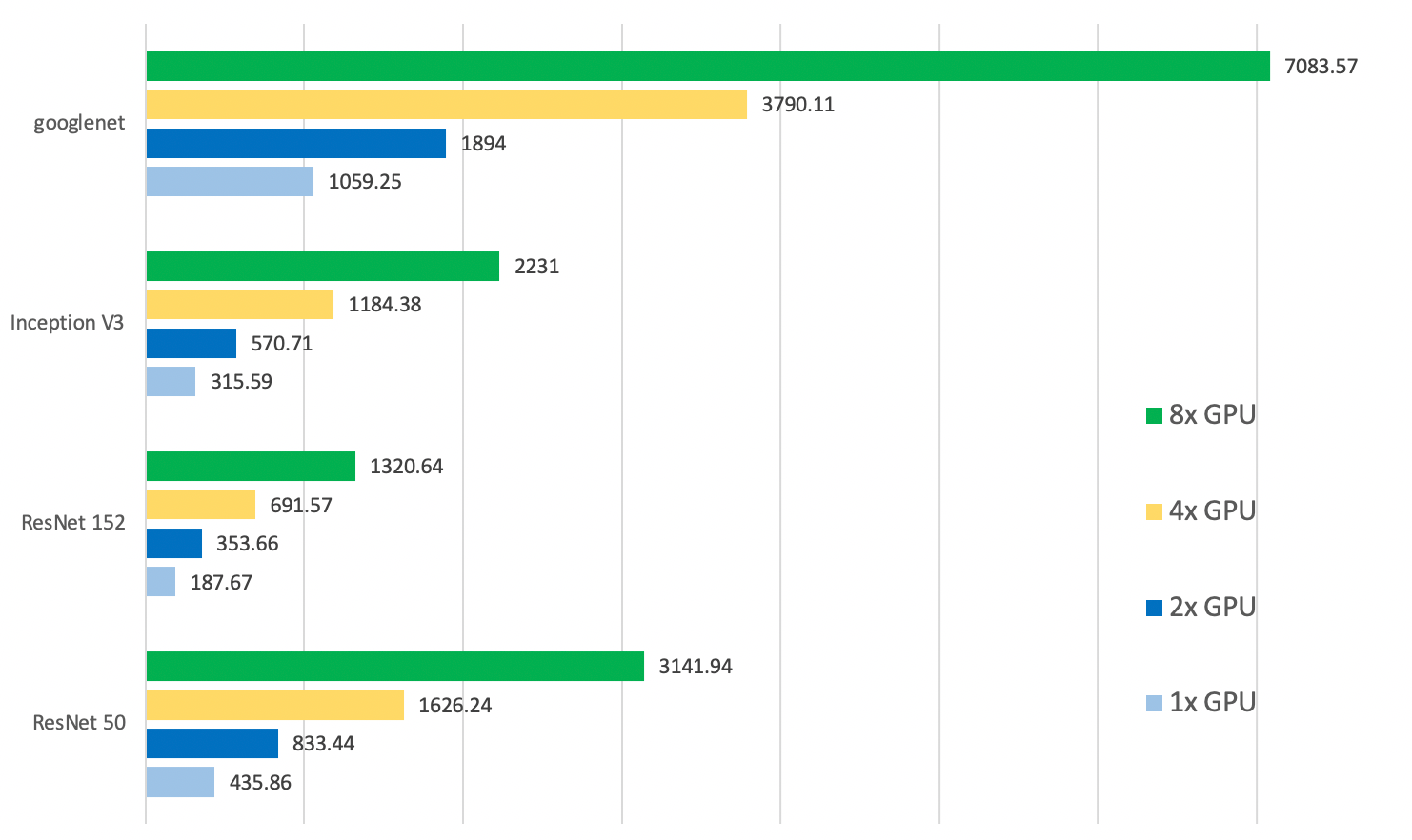

NVIDIA RTX A5000 TensorFlow FP32 Benchmarks

| Model Type | 1x GPU | 2x GPU | 4x GPU | 8x GPU |
| --- | --- | --- | --- | --- |
| ResNet 50 | 435.86 | 833.44 | 1626.24 | 3141.94 |
| ResNet 152 | 187.67 | 353.66 | 691.57 | 1320.64 |
| Inception V3 | 315.59 | 570.71 | 1184.38 | 2231 |
| GoogLeNet | 1059.25 | 1894 | 3790.11 | 7083.57 |

Throughput is in images/sec. Batch size is 128 for all FP32 tests.
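With both tables in hand, the FP16 gain over FP32 can be computed per model and GPU count. This is a rough sketch using the published numbers only; `fp16_speedup` is a hypothetical helper name. Keep in mind that the FP16 runs used a batch size of 256 and the FP32 runs used 128, so the ratio reflects both the precision change and the larger batch.

```python
# Throughput in images/sec for 1, 2, 4, and 8 GPUs, copied from the two tables above.
FP16 = {
    "ResNet 50": [1029.62, 2067.15, 4010.02, 7585.89],
    "ResNet 152": [450.79, 869.87, 1676.84, 3229.52],
    "Inception V3": [724.44, 1353.78, 2780.41, 5262.0],
    "GoogLeNet": [2095.72, 4006.78, 7598.89, 15419.09],
}
FP32 = {
    "ResNet 50": [435.86, 833.44, 1626.24, 3141.94],
    "ResNet 152": [187.67, 353.66, 691.57, 1320.64],
    "Inception V3": [315.59, 570.71, 1184.38, 2231.0],
    "GoogLeNet": [1059.25, 1894.0, 3790.11, 7083.57],
}


def fp16_speedup(fp16=FP16, fp32=FP32):
    """Return FP16/FP32 throughput ratios per model, one per GPU count."""
    return {m: [h / s for h, s in zip(fp16[m], fp32[m])] for m in fp16}


for model, ratios in fp16_speedup().items():
    print(model, " ".join(f"{r:.2f}x" for r in ratios))
```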

More About NVIDIA RTX A5000's Features

- NVIDIA Ampere Architecture-Based CUDA Cores: Accelerate graphics workflows with the latest CUDA® cores for up to 2.5X single-precision floating-point (FP32) performance compared to the previous generation.

- Second-Generation RT Cores: Produce more visually accurate renders faster with hardware-accelerated motion blur and up to 2X faster ray-tracing performance than the previous generation.

- Third-Generation Tensor Cores: Boost AI and data science model training with up to 10X faster training performance compared to the previous generation, with hardware support for structural sparsity.

- 24GB of GPU Memory: Tackle memory-intensive workloads, from virtual production to engineering simulation, with 24 GB of GDDR6 memory with ECC.

- Virtualization Ready: Repurpose your personal workstation into multiple high-performance virtual workstations with support for NVIDIA RTX Virtual Workstation (vWS) software.

- Third-Generation NVIDIA NVLink: Scale memory and performance across multiple GPUs with NVIDIA® NVLink™ to tackle larger datasets, models, and scenes.

- PCI Express Gen 4: Improve data-transfer speeds from CPU memory for data-intensive tasks with support for PCI Express Gen 4.

- Power Efficiency: Leverage a dual-slot design that is up to 2.5X more power-efficient than the previous generation and crafted to fit a wide range of workstations.

Have any questions about NVIDIA GPUs or AI workstations and servers?

Contact Exxact Today
