.jpg?format=webp)

Deep Learning Benchmarks for TensorFlow

For this blog article, we conducted deep learning performance benchmarks for TensorFlow comparing the NVIDIA RTX A4000 to NVIDIA RTX A5000 and A6000 GPUs.

Our Deep Learning Server was fitted with four RTX A4000 GPUs and we ran the standard “tf_cnn_benchmarks.py” benchmark script found in the official TensorFlow GitHub. We tested on the following networks: ResNet50, ResNet152, Inception v3, and Inception v4. Furthermore, we ran the same tests using 1, 2, and 4 GPU configurations with largest batch size for FP16.

Key Points and Observations

- In our benchmarks the NVIDIA RTX A4000 exhibits near linear scaling up to 4 GPUs.

- The RTX A4000 GPU memory foot print of 16GB limited our batch size to 256 against much larger datasets.

- Powered by the NVIDIA Ampere architecture, the RTX A4000 features 3rd gen Tensor Core, 2nd gen RT Cores. Plus 16GB of superfast GDDR6X memory, as well as Single Slot form factor and PCI Express Gen 4.

- The A4000 offers full support for all our latest and greatest technologies.

NVIDIA RTX A4000 Highlights:

| Spec | |

| CUDA Cores | 6144 |

| Tensor Cores | 192 |

| RT Cores | 48 |

| Single Precision Performance | 19.2 TFLOPS |

| RT Core Performance | 37.4 TFLOPS |

| Tensor Performance | 153.4 TFLOPS |

| GPU Memory | 16 GB GDDR6 with ECC |

| Memory Interface | 256-bit |

| Memory Bandwidth | 448 GB/sec |

Interested in getting faster results?

Learn more about Exxact deep learning workstations starting at $3,700

Exxact RTX A4000 Workstation System Specs:

| Spec | |

| Nodes | 1 |

| Processor / Count | 2x AMD EPYC 7552 |

| Total Logical Cores | 48 |

| Memory | DDR4 512 GB |

| Storage | NVMe 3.84 TB |

| OS | Ubuntu 18.04 |

| CUDA Version | 11.2 |

| Tensorflow Version | 2.40 |

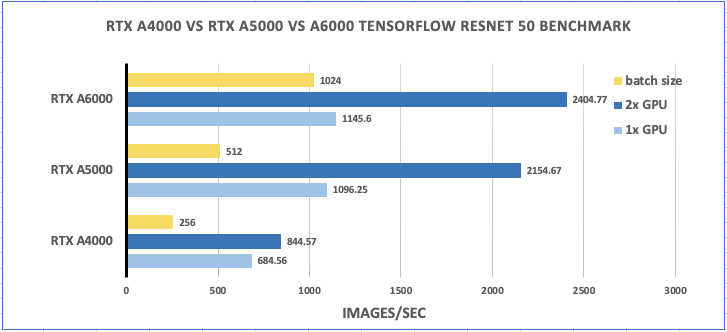

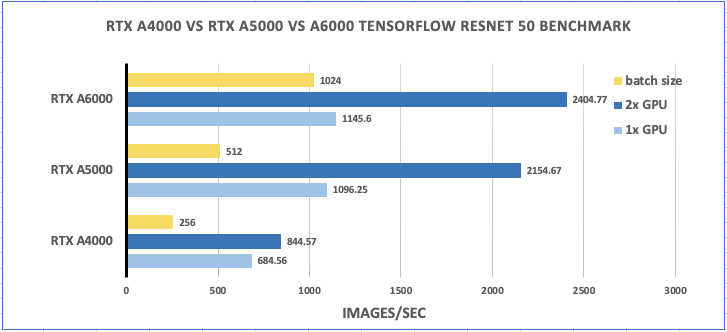

TensorFlow FP16 Benchmarks comparison on ResNet50

| GPUType | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 684.56 | 844.57 | 256 |

| RTX A5000 | 1096.25 | 2154.67 | 512 |

| RTX A6000 | 1145.6 | 2404.77 | 1024 |

Largest Batch Size for all FP16 tests.

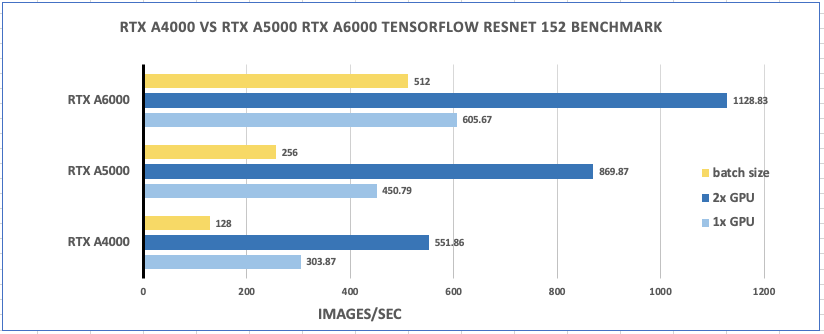

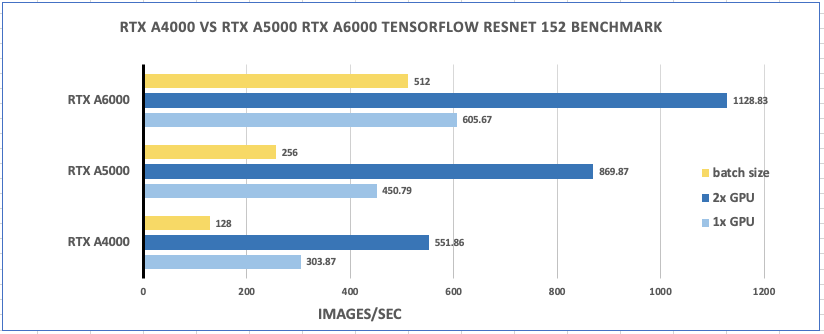

TensorFlow FP16 Benchmarks comparison on ResNet152

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 303.87 | 551.86 | 128 |

| RTX A5000 | 450.79 | 869.87 | 256 |

| RTX A6000 | 605.67 | 1128.83 | 512 |

Largest Batch Size for all FP16 tests.

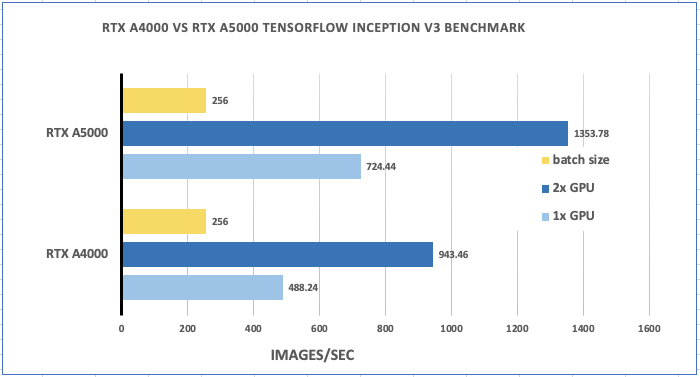

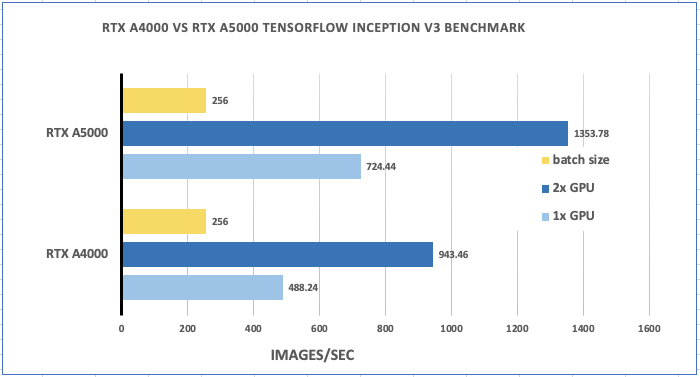

TensorFlow FP16 Benchmarks comparison on Inception3

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 488.24 | 943.46 | 256 |

| RTX A5000 | 724.44 | 1353.78 | 256 |

Largest Batch Size for all FP16 tests.

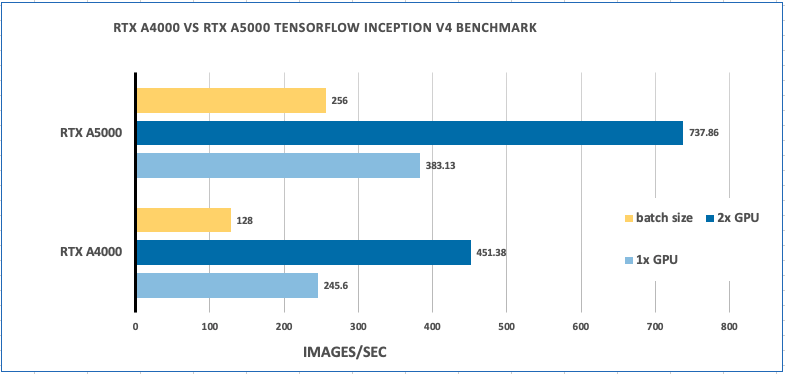

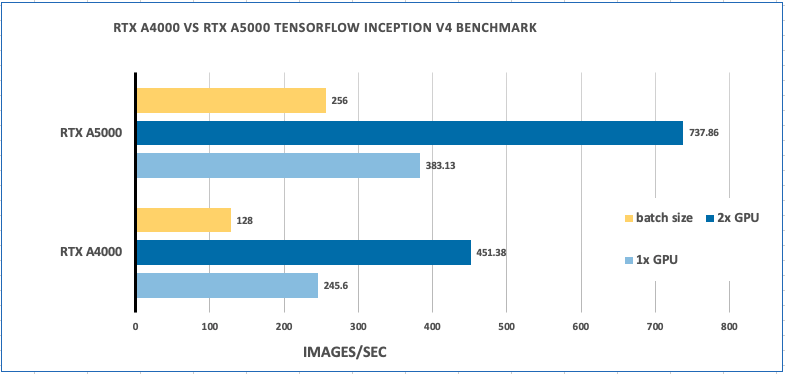

TensorFlow FP16 Benchmarks comparison on Inception4

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 245.6 | 451.38 | 128 |

| RTX A5000 | 383.13 | 737.86 | 256 |

Largest Batch Size for all FP16 tests.

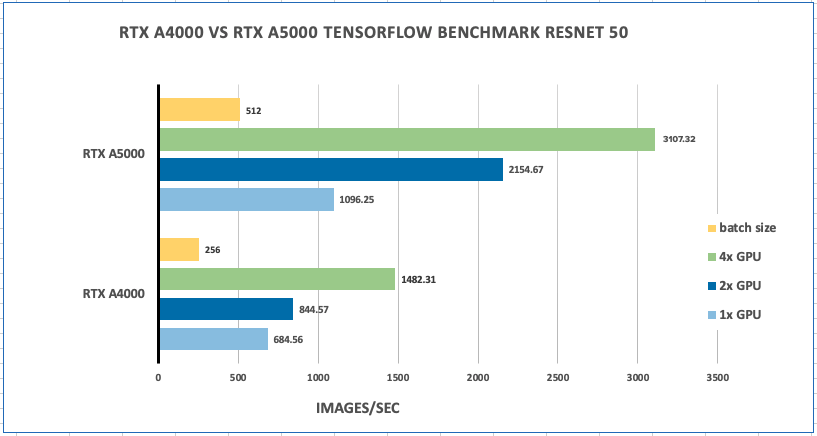

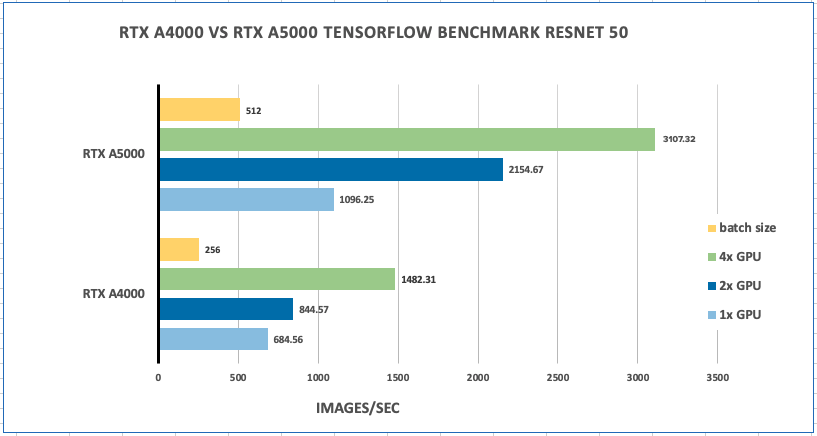

4x GPU TensorFlow FP16 Benchmarks comparison on ResNet50

| GPU Type | 1x GPU | 2x GPU | 4x GPU | Batch Size |

| RTX A4000 | 684.56 | 844.57 | 1482.31 | 256 |

| RTX A5000 | 1096.25 | 2154.67 | 3107.32 | 512 |

Largest Batch Size for all FP16 tests.

NVIDIA RTX (Ampere) / Quadro RTX (Turing) comparison

| RTX A4000 | RTX A5000 | RTX A6000 | Quadro RTX 4000 | Quadro RTX 5000 | Quadro RTX 6000 | |

| Architecture | Ampere | Ampere | Ampere | Turing | Turing | Turing |

| GPU memory | 16 GB GDDR6 | 24 GB GDDR6 | 48 GB GDDR6 | 8 GB GDDR6 | 16 GB GDDR6 | 24 GB GDDR6 |

| ECC memory | Yes | Yes | Yes | No | Yes | Yes |

| CUDA cores | 6,144 | 8,192 | 10,752 | 2304 | 3,072 | 4,608 |

| Tensor Cores | 192 | 256 | 336 | 288 | 384 | 576 |

| RT Cores | 48 | 64 | 84 | 36 | 48 | 72 |

| SP perf | 19.2 TFLOPS | 27.8 TFLOPS | 38.7 TFLOPS | 7.1 TFLOPS | 11.2 TFLOPS | 16.3 TFLOPS |

| RT Core perf | 37.4 TFLOPS | 54.2 TFLOPS | 75.6 TFLOPS | N/A | N/A | N/A |

| Tensor perf | 153.4 TFLOPS | 222.2 TFLOPS | 309.7 TFLOPS | 57.0 TFLOPS | 89.2 TFLOPS | 130.5 TFLOPS |

| Max Power | 140W | 230W | 300W | 160W | 265W | 295W |

| Graphic bus | PCI-E 4.0 x16 | PCI-E 4.0 x16 | PCI-E 4.0 x16 | PCI-E 3.0 x16 | PCI-E 3.0 x16 | PCI-E 3.0 x16 |

| Connectors | DP 1.4 (4) | DP 1.4 (4) | DP 1.4 (4) | DP 1.4 (3), USB-C | DP 1.4 (4), USB-C | DP 1.4 (4), USB-C |

| Form Factor | Single slot | Dual Slot | Dual Slot | Single slot | Dual Slot | Dual Slot |

| vGPU Software | N/A | NVIDIA RTX vWS | NVIDIA RTX vWS | N/A | N/A | NVIDIA RTX vWS |

| Nvlink | N/A | 2x RTX A5000 | 2x RTX A6000 | N/A | 2x RTX 5000 | 2x RTX 6000 |

| Power Connector | 1x 6-pin PCIe | 1x 8-pin PCIe | 1x 8-pin CPU | 1x 6-pin PCIe | 1x 8-pin PCIe | 2x 8-pin PCIe |

Additional GPU Benchmarks

- NVIDIA A5000 Deep Learning Benchmarks for TensorFlow

- NVIDIA A30 Deep Learning Benchmarks for TensorFlow

- NVIDIA RTX A6000 Deep Learning Benchmarks for TensorFlow

- NVIDIA RTX A6000 Benchmarks for RELION Cryo-EM

- NVIDIA A100 Deep Learning Benchmarks for TensorFlow

Final Thoughts for NVIDIA RTX A4000

The NVIDIA RTX A4000 is the most powerful single-slot GPU for professionals, delivering real-time ray tracing, AI-accelerated compute, and high-performance graphics performance to your desktop.

Built on the NVIDIA Ampere architecture, the RTX A4000 combines 48 second-generation RT Cores, 192 third-generation Tensor Cores, and 6144 CUDA cores with 16 GB of graphics memory. So you can engineer products and solutions from your desktop workstation.

Have any questions?

Contact Exxact Today

.jpg?format=webp)

NVIDIA RTX A4000, A5000 and A6000 Comparison: Deep Learning Benchmarks for TensorFlow

Deep Learning Benchmarks for TensorFlow

For this blog article, we conducted deep learning performance benchmarks for TensorFlow comparing the NVIDIA RTX A4000 to NVIDIA RTX A5000 and A6000 GPUs.

Our Deep Learning Server was fitted with four RTX A4000 GPUs and we ran the standard “tf_cnn_benchmarks.py” benchmark script found in the official TensorFlow GitHub. We tested on the following networks: ResNet50, ResNet152, Inception v3, and Inception v4. Furthermore, we ran the same tests using 1, 2, and 4 GPU configurations with largest batch size for FP16.

Key Points and Observations

- In our benchmarks the NVIDIA RTX A4000 exhibits near linear scaling up to 4 GPUs.

- The RTX A4000 GPU memory foot print of 16GB limited our batch size to 256 against much larger datasets.

- Powered by the NVIDIA Ampere architecture, the RTX A4000 features 3rd gen Tensor Core, 2nd gen RT Cores. Plus 16GB of superfast GDDR6X memory, as well as Single Slot form factor and PCI Express Gen 4.

- The A4000 offers full support for all our latest and greatest technologies.

NVIDIA RTX A4000 Highlights:

| Spec | |

| CUDA Cores | 6144 |

| Tensor Cores | 192 |

| RT Cores | 48 |

| Single Precision Performance | 19.2 TFLOPS |

| RT Core Performance | 37.4 TFLOPS |

| Tensor Performance | 153.4 TFLOPS |

| GPU Memory | 16 GB GDDR6 with ECC |

| Memory Interface | 256-bit |

| Memory Bandwidth | 448 GB/sec |

Interested in getting faster results?

Learn more about Exxact deep learning workstations starting at $3,700

Exxact RTX A4000 Workstation System Specs:

| Spec | |

| Nodes | 1 |

| Processor / Count | 2x AMD EPYC 7552 |

| Total Logical Cores | 48 |

| Memory | DDR4 512 GB |

| Storage | NVMe 3.84 TB |

| OS | Ubuntu 18.04 |

| CUDA Version | 11.2 |

| Tensorflow Version | 2.40 |

TensorFlow FP16 Benchmarks comparison on ResNet50

| GPUType | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 684.56 | 844.57 | 256 |

| RTX A5000 | 1096.25 | 2154.67 | 512 |

| RTX A6000 | 1145.6 | 2404.77 | 1024 |

Largest Batch Size for all FP16 tests.

TensorFlow FP16 Benchmarks comparison on ResNet152

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 303.87 | 551.86 | 128 |

| RTX A5000 | 450.79 | 869.87 | 256 |

| RTX A6000 | 605.67 | 1128.83 | 512 |

Largest Batch Size for all FP16 tests.

TensorFlow FP16 Benchmarks comparison on Inception3

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 488.24 | 943.46 | 256 |

| RTX A5000 | 724.44 | 1353.78 | 256 |

Largest Batch Size for all FP16 tests.

TensorFlow FP16 Benchmarks comparison on Inception4

| GPU Type | 1x GPU | 2x GPU | Batch Size |

| RTX A4000 | 245.6 | 451.38 | 128 |

| RTX A5000 | 383.13 | 737.86 | 256 |

Largest Batch Size for all FP16 tests.

4x GPU TensorFlow FP16 Benchmarks comparison on ResNet50

| GPU Type | 1x GPU | 2x GPU | 4x GPU | Batch Size |

| RTX A4000 | 684.56 | 844.57 | 1482.31 | 256 |

| RTX A5000 | 1096.25 | 2154.67 | 3107.32 | 512 |

Largest Batch Size for all FP16 tests.

NVIDIA RTX (Ampere) / Quadro RTX (Turing) comparison

| RTX A4000 | RTX A5000 | RTX A6000 | Quadro RTX 4000 | Quadro RTX 5000 | Quadro RTX 6000 | |

| Architecture | Ampere | Ampere | Ampere | Turing | Turing | Turing |

| GPU memory | 16 GB GDDR6 | 24 GB GDDR6 | 48 GB GDDR6 | 8 GB GDDR6 | 16 GB GDDR6 | 24 GB GDDR6 |

| ECC memory | Yes | Yes | Yes | No | Yes | Yes |

| CUDA cores | 6,144 | 8,192 | 10,752 | 2304 | 3,072 | 4,608 |

| Tensor Cores | 192 | 256 | 336 | 288 | 384 | 576 |

| RT Cores | 48 | 64 | 84 | 36 | 48 | 72 |

| SP perf | 19.2 TFLOPS | 27.8 TFLOPS | 38.7 TFLOPS | 7.1 TFLOPS | 11.2 TFLOPS | 16.3 TFLOPS |

| RT Core perf | 37.4 TFLOPS | 54.2 TFLOPS | 75.6 TFLOPS | N/A | N/A | N/A |

| Tensor perf | 153.4 TFLOPS | 222.2 TFLOPS | 309.7 TFLOPS | 57.0 TFLOPS | 89.2 TFLOPS | 130.5 TFLOPS |

| Max Power | 140W | 230W | 300W | 160W | 265W | 295W |

| Graphic bus | PCI-E 4.0 x16 | PCI-E 4.0 x16 | PCI-E 4.0 x16 | PCI-E 3.0 x16 | PCI-E 3.0 x16 | PCI-E 3.0 x16 |

| Connectors | DP 1.4 (4) | DP 1.4 (4) | DP 1.4 (4) | DP 1.4 (3), USB-C | DP 1.4 (4), USB-C | DP 1.4 (4), USB-C |

| Form Factor | Single slot | Dual Slot | Dual Slot | Single slot | Dual Slot | Dual Slot |

| vGPU Software | N/A | NVIDIA RTX vWS | NVIDIA RTX vWS | N/A | N/A | NVIDIA RTX vWS |

| Nvlink | N/A | 2x RTX A5000 | 2x RTX A6000 | N/A | 2x RTX 5000 | 2x RTX 6000 |

| Power Connector | 1x 6-pin PCIe | 1x 8-pin PCIe | 1x 8-pin CPU | 1x 6-pin PCIe | 1x 8-pin PCIe | 2x 8-pin PCIe |

Additional GPU Benchmarks

- NVIDIA A5000 Deep Learning Benchmarks for TensorFlow

- NVIDIA A30 Deep Learning Benchmarks for TensorFlow

- NVIDIA RTX A6000 Deep Learning Benchmarks for TensorFlow

- NVIDIA RTX A6000 Benchmarks for RELION Cryo-EM

- NVIDIA A100 Deep Learning Benchmarks for TensorFlow

Final Thoughts for NVIDIA RTX A4000

The NVIDIA RTX A4000 is the most powerful single-slot GPU for professionals, delivering real-time ray tracing, AI-accelerated compute, and high-performance graphics performance to your desktop.

Built on the NVIDIA Ampere architecture, the RTX A4000 combines 48 second-generation RT Cores, 192 third-generation Tensor Cores, and 6144 CUDA cores with 16 GB of graphics memory. So you can engineer products and solutions from your desktop workstation.

Have any questions?

Contact Exxact Today