NVIDIA Titan RTX Benchmarks

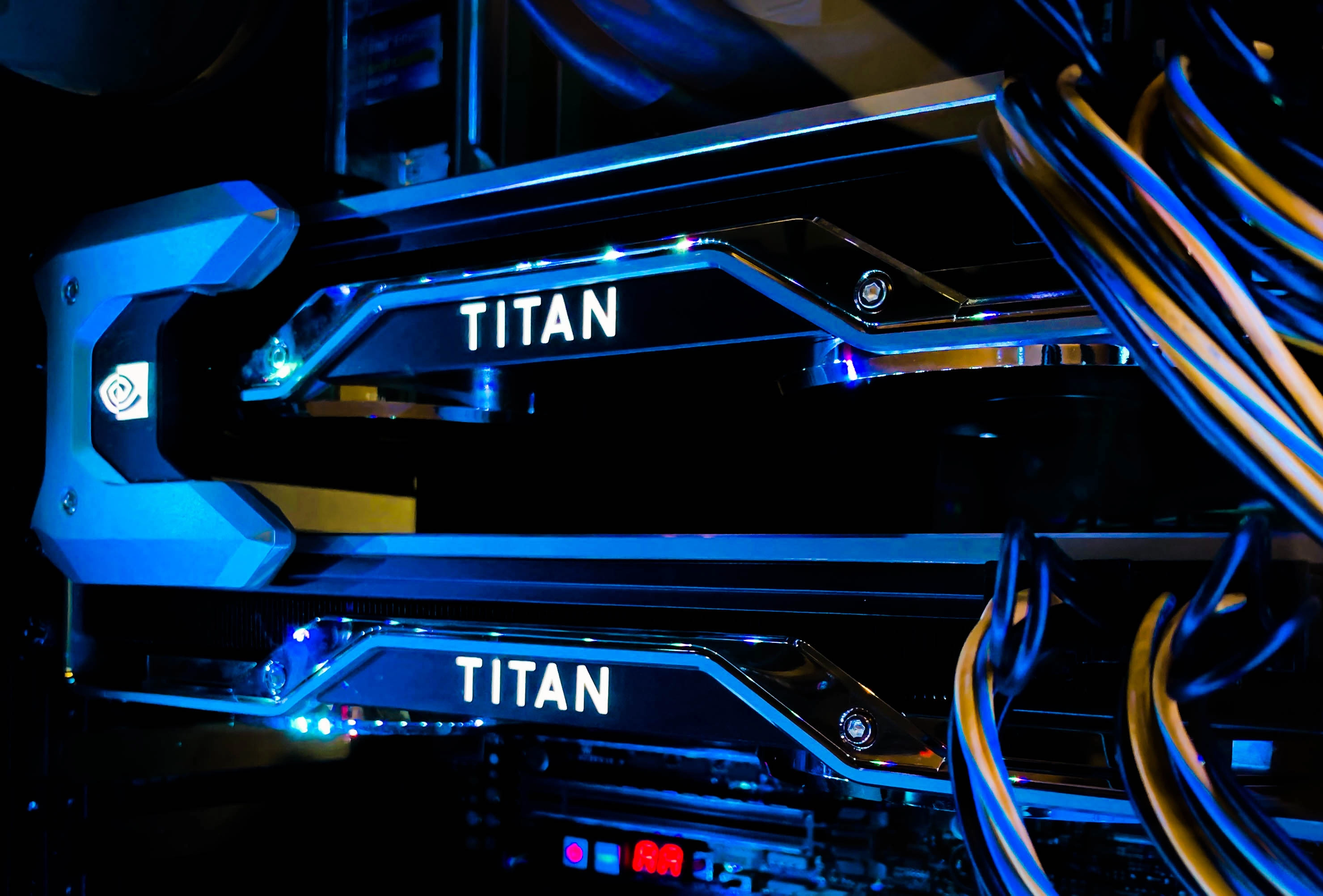

For this blog article, we conducted deep learning performance benchmarks for TensorFlow using NVIDIA TITAN RTX GPUs. Tests were conducted using an Exxact TITAN Workstation outfitted with 2x TITAN RTXs with an NVLink bridge. We ran the standard "tf_cnn_benchmarks.py" benchmark script found here in the official TensorFlow github.

We ran tests on the following networks: ResNet50, ResNet152, Inception v3, Inception v4, VGG-16, AlexNet, and Nasnet. Furthermore, we compared FP16 to FP32 performance, and compared numbers using XLA. The same tests were conducted using 1 and 2 GPU configurations, and batch size used was the largest that could fit in memory (powers of two).

Key Points and Observations

- The TITAN RTX an excellent choice if you will need large batch size for training while keeping costs within decent price point.

- Performance (img/sec) is comparable to Quadro RTX 6000 Benchmark Performance in most instances.

- Observing this dual GPU configuration, the workstation ran silently, and very cool during training workloads (Note: The chassis offers a lot of airflow).

- Significant gains were made using XLA in most cases, especially when in FP16.

TITAN RTX Benchmark Snapshot, All Models, XLA on/off, FP32, FP16

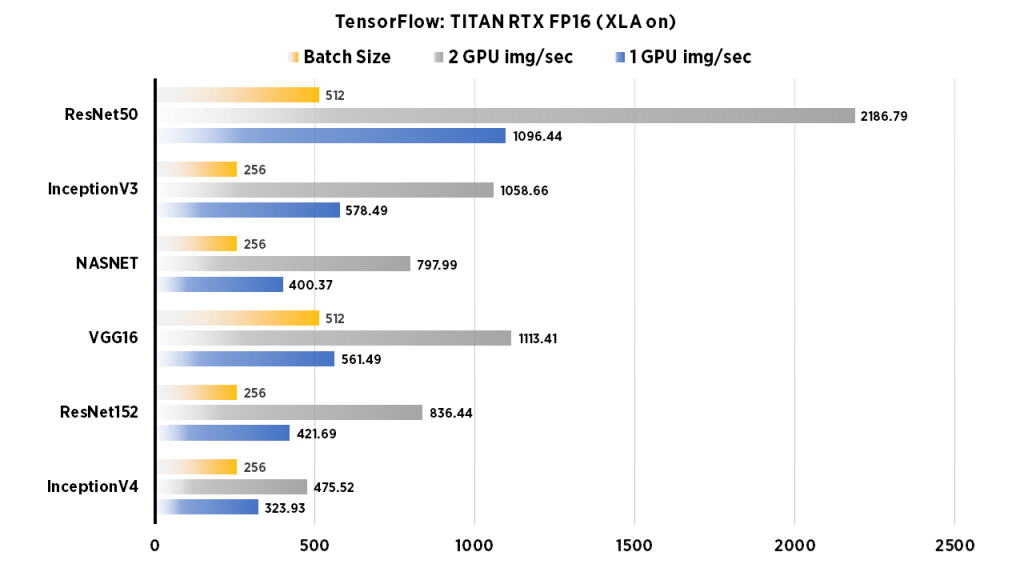

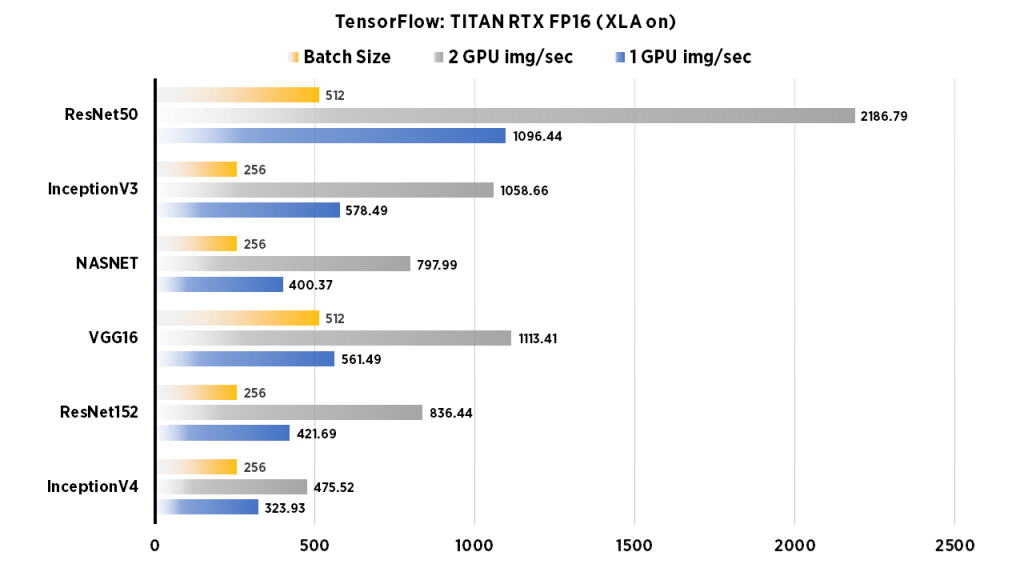

TITAN RTX Deep Learning Benchmarks: FP16 (XLA on)

[supsystic-tables id=31]

Run these benchmarks

Configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=256 --num_batches=100 --model=inception4 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --use_fp16=True --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

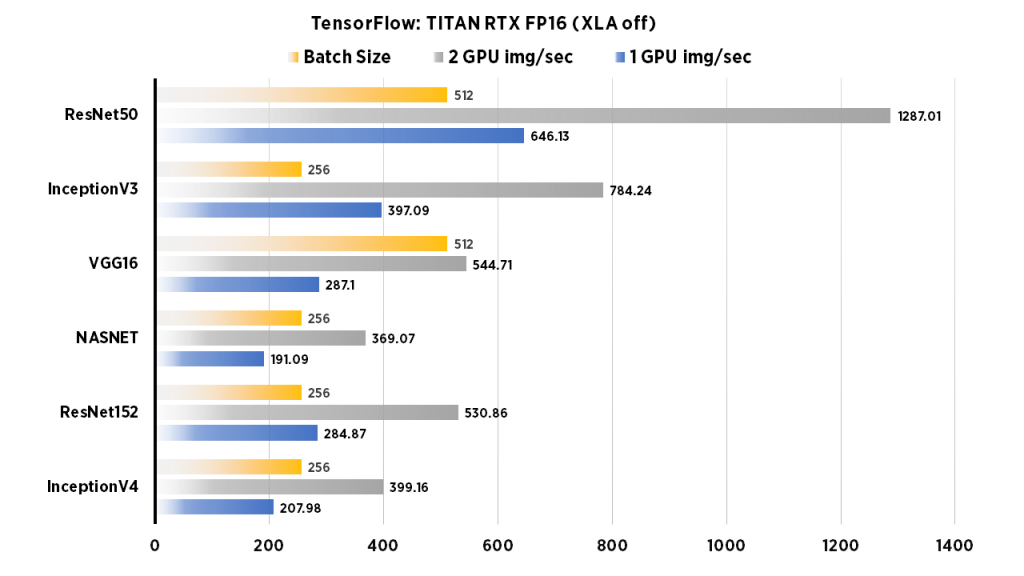

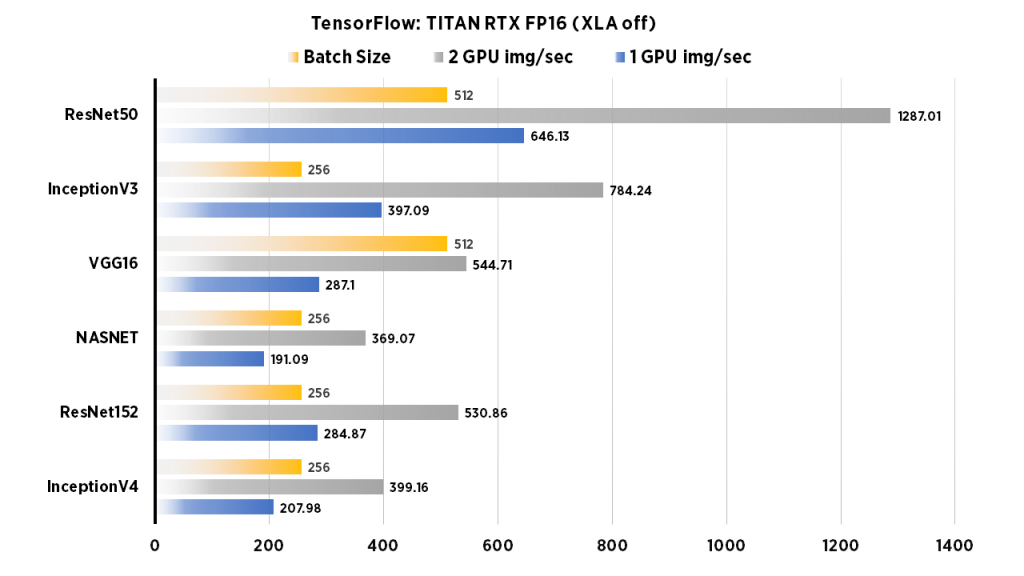

TITAN RTX Deep Learning Benchmarks: FP16 (XLA off)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| InceptionV4 | 323.93 | 475.52 | 256 |

| ResNet152 | 421.69 | 836.44 | 256 |

| VGG16 | 561.49 | 1113.41 | 512 |

| NASNET | 400.37 | 797.99 | 256 |

| InceptionV3 | 578.49 | 1058.66 | 256 |

| ResNet50 | 1096.44 | 2186.79 | 512 |

Run these benchmarks

Apply the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=512 --model=resnet50 --variable_update=parameter_server --use_fp16=TrueTITAN RTX Deep Learning Benchmarks: FP32 (XLA on)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| InceptionV4 | 207.98 | 399.16 | 256 |

| ResNet152 | 284.87 | 530.86 | 256 |

| NASNET | 191.09 | 369.07 | 256 |

| VGG16 | 287.1 | 544.71 | 512 |

| InceptionV3 | 397.09 | 784.24 | 256 |

| ResNet50 | 646.13 | 1287.01 | 512 |

Run these benchmarks

Change the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=256 --num_batches=100 --model=resnet50 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

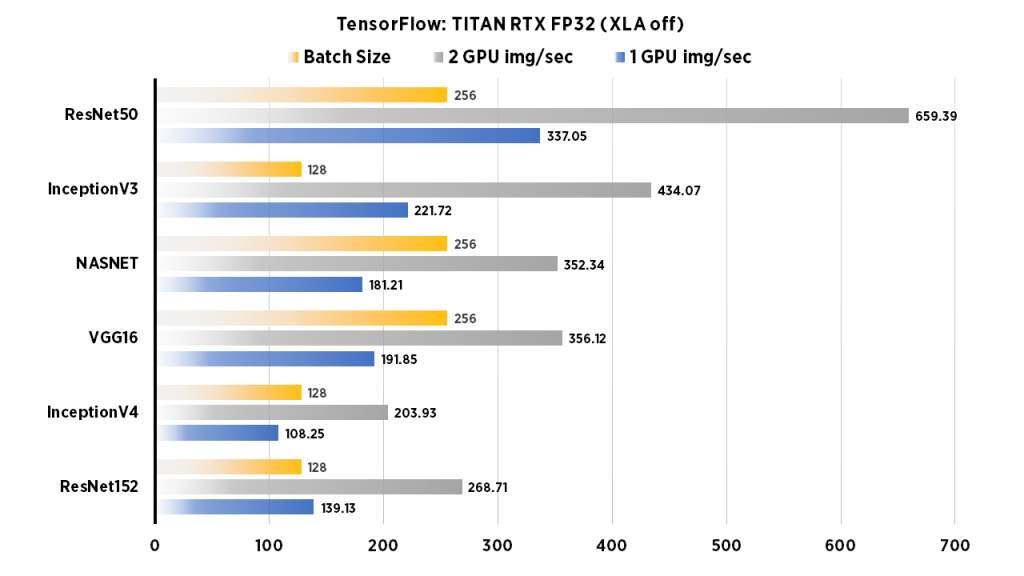

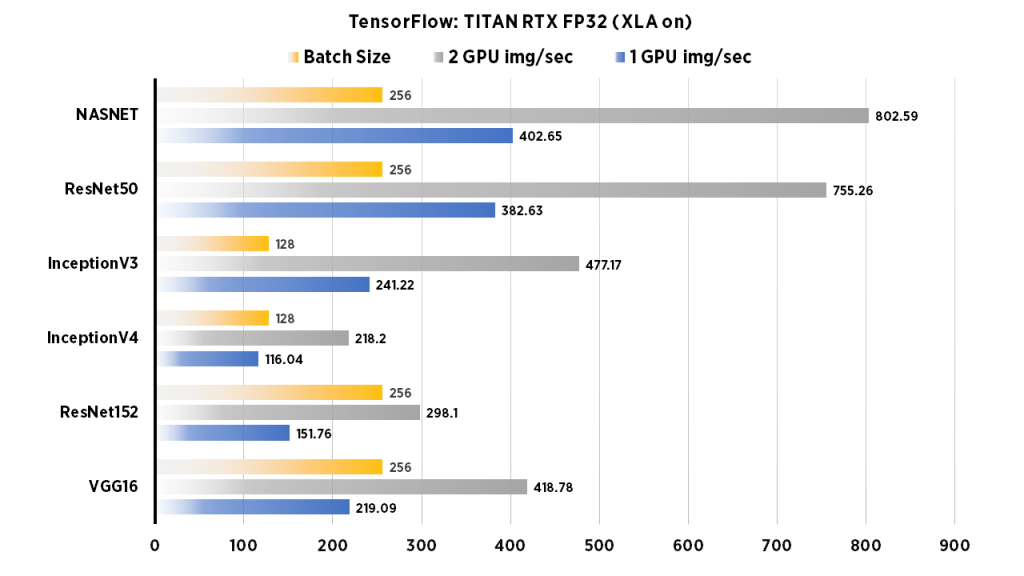

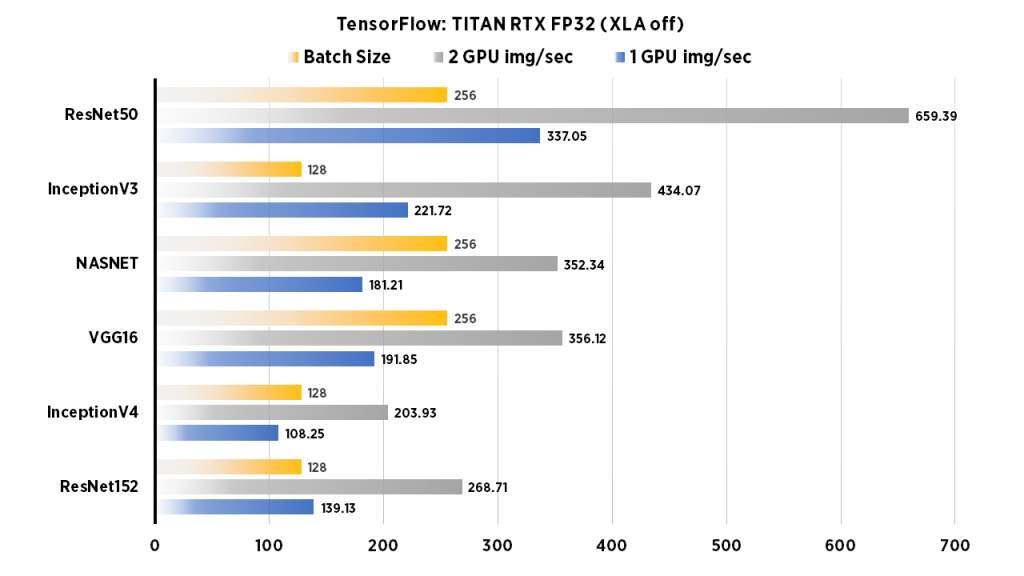

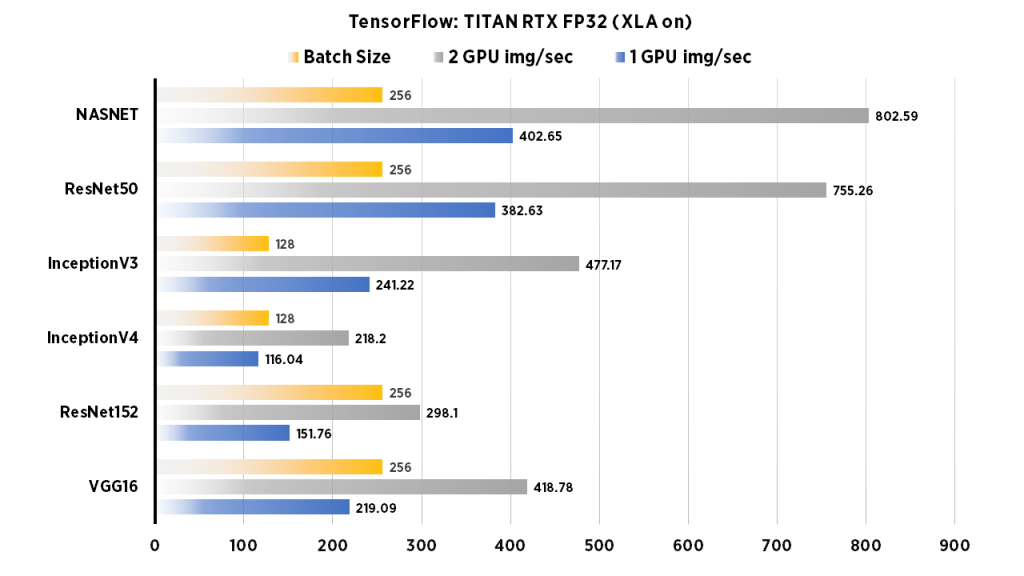

TITAN RTX Deep Learning Benchmarks: FP32 (XLA off)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| VGG16 | 219.09 | 418.78 | 256 |

| ResNet152 | 151.76 | 298.1 | 256 |

| InceptionV4 | 116.04 | 218.2 | 128 |

| InceptionV3 | 241.22 | 477.17 | 128 |

| ResNet50 | 382.63 | 755.26 | 256 |

| NASNET | 402.65 | 802.59 | 256 |

Run these benchmarks

Set the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=256 --model=resnet50 --variable_update=parameter_server

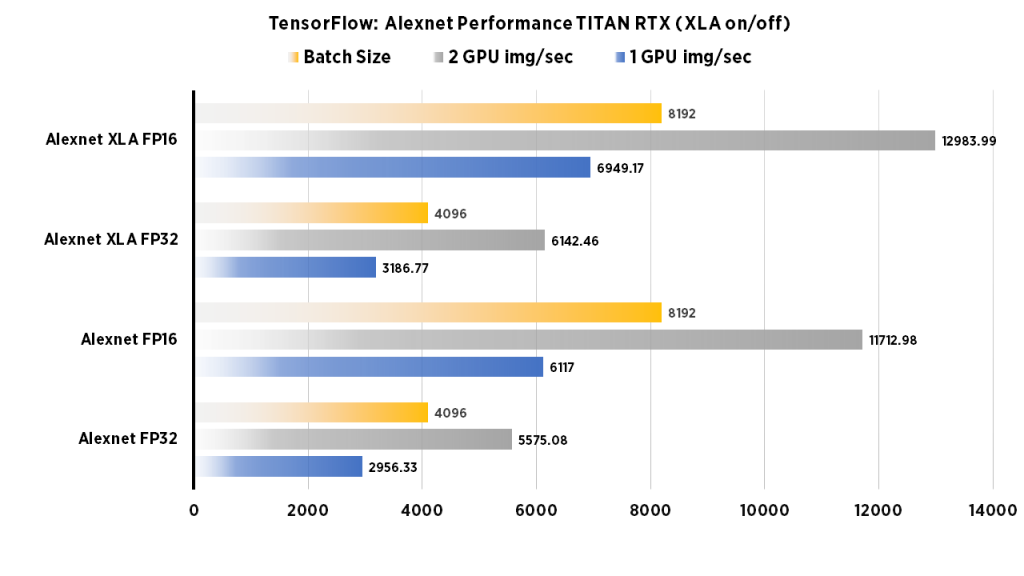

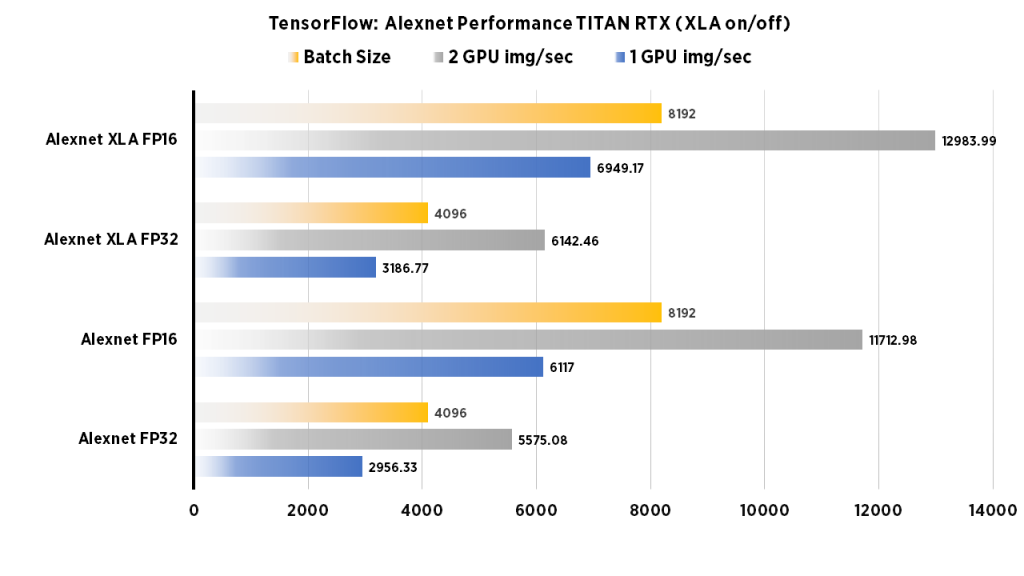

TITAN RTX Deep Learning Benchmarks: Alexnet (FP32, FP16, XLA FP16, XLA FP32)

Run these benchmarks

Configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=4096--model=alexnet --variable_update=parameter_server --use_fp16=True

Run these benchmarks with XLA

To run with XLA, configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=8192 --num_batches=100 --model=alexnet --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --use_fp16=True --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

System Specifications:

| System | Exxact TITAN Workstation |

| GPU | 2 x NVIDIA TITAN RTX |

| CPU | Intel CORE I7-7820X 3.6GHZ |

| RAM | 32GB DDR4 |

| SSD | 480 GB SSD |

| HDD (data) | 10 TB HDD |

| OS | Ubuntu 18.04 |

| NVIDIA DRIVER | 418.43 |

| CUDA Version | 10.1 |

| Python | 2.7, 3.7 |

| TensorFlow | 1.14 |

| Docker Image | tensorflow/tensorflow:nightly-gpu |

Training Parameters (non XLA)

| Dataset: | Imagenet (synthetic) |

| Mode: | training |

| SingleSess: | False |

| Batch Size: | Varied |

| Num Batches: | 100 |

| Num Epochs: | 0.08 |

| Devices: | ['/gpu:0']...(varied) |

| NUMA bind: | False |

| Data format: | NCHW |

| Optimizer: | sgd |

| Variables: | parameter_server |

Training Parameters (XLA)

| Dataset: | Imagenet (synthetic) |

| Mode: | training |

| SingleSess: | False |

| Batch Size: | Varied |

| Num Batches: | 100 |

| Num Epochs: | 0.08 |

| Devices: | ['/gpu:0']...(varied) |

| NUMA bind: | False |

| Data format: | NCHW |

| Optimizer: | momentum |

| Variables: | replicated |

| AllReduce | nccl |

More Deep Learning Benchmarks...

TITAN RTX Benchmarks for Deep Learning in TensorFlow 2019: XLA, FP16, FP32, & NVLink

NVIDIA Titan RTX Benchmarks

For this blog article, we conducted deep learning performance benchmarks for TensorFlow using NVIDIA TITAN RTX GPUs. Tests were conducted using an Exxact TITAN Workstation outfitted with 2x TITAN RTXs with an NVLink bridge. We ran the standard "tf_cnn_benchmarks.py" benchmark script found here in the official TensorFlow github.

We ran tests on the following networks: ResNet50, ResNet152, Inception v3, Inception v4, VGG-16, AlexNet, and Nasnet. Furthermore, we compared FP16 to FP32 performance, and compared numbers using XLA. The same tests were conducted using 1 and 2 GPU configurations, and batch size used was the largest that could fit in memory (powers of two).

Key Points and Observations

- The TITAN RTX an excellent choice if you will need large batch size for training while keeping costs within decent price point.

- Performance (img/sec) is comparable to Quadro RTX 6000 Benchmark Performance in most instances.

- Observing this dual GPU configuration, the workstation ran silently, and very cool during training workloads (Note: The chassis offers a lot of airflow).

- Significant gains were made using XLA in most cases, especially when in FP16.

TITAN RTX Benchmark Snapshot, All Models, XLA on/off, FP32, FP16

TITAN RTX Deep Learning Benchmarks: FP16 (XLA on)

[supsystic-tables id=31]

Run these benchmarks

Configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=256 --num_batches=100 --model=inception4 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --use_fp16=True --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

TITAN RTX Deep Learning Benchmarks: FP16 (XLA off)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| InceptionV4 | 323.93 | 475.52 | 256 |

| ResNet152 | 421.69 | 836.44 | 256 |

| VGG16 | 561.49 | 1113.41 | 512 |

| NASNET | 400.37 | 797.99 | 256 |

| InceptionV3 | 578.49 | 1058.66 | 256 |

| ResNet50 | 1096.44 | 2186.79 | 512 |

Run these benchmarks

Apply the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=512 --model=resnet50 --variable_update=parameter_server --use_fp16=TrueTITAN RTX Deep Learning Benchmarks: FP32 (XLA on)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| InceptionV4 | 207.98 | 399.16 | 256 |

| ResNet152 | 284.87 | 530.86 | 256 |

| NASNET | 191.09 | 369.07 | 256 |

| VGG16 | 287.1 | 544.71 | 512 |

| InceptionV3 | 397.09 | 784.24 | 256 |

| ResNet50 | 646.13 | 1287.01 | 512 |

Run these benchmarks

Change the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=256 --num_batches=100 --model=resnet50 --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

TITAN RTX Deep Learning Benchmarks: FP32 (XLA off)

| 1 GPU img/sec | 2 GPU img/sec | Batch Size | |

| VGG16 | 219.09 | 418.78 | 256 |

| ResNet152 | 151.76 | 298.1 | 256 |

| InceptionV4 | 116.04 | 218.2 | 128 |

| InceptionV3 | 241.22 | 477.17 | 128 |

| ResNet50 | 382.63 | 755.26 | 256 |

| NASNET | 402.65 | 802.59 | 256 |

Run these benchmarks

Set the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=256 --model=resnet50 --variable_update=parameter_server

TITAN RTX Deep Learning Benchmarks: Alexnet (FP32, FP16, XLA FP16, XLA FP32)

Run these benchmarks

Configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --num_gpus=1 --batch_size=4096--model=alexnet --variable_update=parameter_server --use_fp16=True

Run these benchmarks with XLA

To run with XLA, configure the num_gpus to the number of GPUs desired to test. Change model to desired architecture.

python tf_cnn_benchmarks.py --data_format=NCHW --batch_size=8192 --num_batches=100 --model=alexnet --optimizer=momentum --variable_update=replicated --all_reduce_spec=nccl --use_fp16=True --nodistortions --gradient_repacking=2 --datasets_use_codefetch=True --per_gpu_thread_count=2 --loss_type_to_report=base_loss --compute_lr_on_cpu=True --single_l2_loss_op=True --xla_compile=True --local_parameter_device=gpu --num_gpus=1 --display_every=10

System Specifications:

| System | Exxact TITAN Workstation |

| GPU | 2 x NVIDIA TITAN RTX |

| CPU | Intel CORE I7-7820X 3.6GHZ |

| RAM | 32GB DDR4 |

| SSD | 480 GB SSD |

| HDD (data) | 10 TB HDD |

| OS | Ubuntu 18.04 |

| NVIDIA DRIVER | 418.43 |

| CUDA Version | 10.1 |

| Python | 2.7, 3.7 |

| TensorFlow | 1.14 |

| Docker Image | tensorflow/tensorflow:nightly-gpu |

Training Parameters (non XLA)

| Dataset: | Imagenet (synthetic) |

| Mode: | training |

| SingleSess: | False |

| Batch Size: | Varied |

| Num Batches: | 100 |

| Num Epochs: | 0.08 |

| Devices: | ['/gpu:0']...(varied) |

| NUMA bind: | False |

| Data format: | NCHW |

| Optimizer: | sgd |

| Variables: | parameter_server |

Training Parameters (XLA)

| Dataset: | Imagenet (synthetic) |

| Mode: | training |

| SingleSess: | False |

| Batch Size: | Varied |

| Num Batches: | 100 |

| Num Epochs: | 0.08 |

| Devices: | ['/gpu:0']...(varied) |

| NUMA bind: | False |

| Data format: | NCHW |

| Optimizer: | momentum |

| Variables: | replicated |

| AllReduce | nccl |