Using Datasets in Natural Language Processing (NLP)

NLP is an exciting domain right now, especially with tools like AutoNLP from Hugging Face, but it is painfully difficult to master. The main obstacles to getting started are the dearth of proper guidance and the sheer breadth of the domain. It’s easy to get lost in papers and code while trying to take everything in.

The thing to realize is that you cannot learn everything in a field as vast as NLP, but you can make incremental progress. As you persevere, you might find that you know more than everyone else in the room. As with everything else, the key is taking those incremental steps.

One of the first steps is to train NLP models on existing datasets. Creating your own dataset is a lot of work, and it is unnecessary when you are just starting out.

New open-source datasets are released all the time, focusing on words, text, speech, sentences, slang, and just about anything else you can think of. Keep in mind, though, that open-source datasets aren't without their problems: you have to deal with bias, incomplete data, and a slew of other concerns when you grab just any old dataset to test on.

Fortunately, a couple of sites do a great job of curating datasets, making it easier to find what you're looking for:

- Papers With Code - Nearly 5,000 machine learning datasets are categorized and easy to find.

- Hugging Face - A great place to find datasets for text, speech, audio, and other NLP-focused tasks.

That said, the following list contains what we recommend as some of the best open-source datasets for learning NLP, along with articles on models you can try and steps you can follow.


1. Quora Question Insincerity Dataset

This dataset is pretty fun. In this Kaggle NLP challenge, you get a classification dataset and have to predict whether a question is insincere (for example, toxic) based on its content. What makes this dataset invaluable is the many high-quality kernels (now called notebooks) shared by Kaggle users.

There have also been a number of posts on this dataset that can help a lot if you are starting out with NLP:

- The article Text Preprocessing Methods for Deep Learning covers preprocessing techniques for deep learning models, with a focus on increasing embedding coverage.

- The second article, Conventional Methods for Text Classification, walks you through conventional text classification approaches such as TF-IDF, CountVectorizer, and hashing features, and assesses their performance to create a baseline.

- The article Attention, CNN, and what not for Text Classification goes deeper into deep learning models, focusing on different architectures for solving the text classification problem.

- And here’s one about transfer learning using BERT and ULMFiT.
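To make the conventional baselines from the second article concrete, here is a minimal standard-library sketch of three common feature extractors: bag-of-words counts, TF-IDF weighting, and the hashing trick. It is not scikit-learn's CountVectorizer, TfidfVectorizer, or HashingVectorizer. The function names and the tokenizing regex are our own assumptions, although the smoothed IDF formula follows scikit-learn's default. The sample questions are made up and are not taken from the Quora data.

```python
import math
import re
import zlib


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits, and apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


def count_vectors(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Bag-of-words counts over a vocabulary built from the documents."""
    tokenized = [tokenize(doc) for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    index = {tok: i for i, tok in enumerate(vocab)}
    rows = []
    for toks in tokenized:
        row = [0] * len(vocab)
        for tok in toks:
            row[index[tok]] += 1
        rows.append(row)
    return vocab, rows


def tfidf(rows: list[list[int]]) -> list[list[float]]:
    """Reweight counts by smoothed IDF, then L2-normalize each row."""
    n_docs = len(rows)
    n_terms = len(rows[0]) if rows else 0
    doc_freq = [sum(1 for row in rows if row[j] > 0) for j in range(n_terms)]
    # Smoothed IDF: ln((1 + n) / (1 + df)) + 1, so terms in every document keep weight 1.
    idf = [math.log((1 + n_docs) / (1 + df)) + 1 for df in doc_freq]
    weighted_rows = []
    for row in rows:
        weighted = [count * w for count, w in zip(row, idf)]
        norm = math.sqrt(sum(x * x for x in weighted)) or 1.0
        weighted_rows.append([x / norm for x in weighted])
    return weighted_rows


def hashed_vector(text: str, n_features: int = 16) -> list[int]:
    """Hashing trick: bucket tokens by hash, with no stored vocabulary."""
    vec = [0] * n_features
    for tok in tokenize(text):
        # crc32 is stable across runs, unlike Python's per-process salted hash() for str.
        vec[zlib.crc32(tok.encode("utf-8")) % n_features] += 1
    return vec


questions = [
    "Why do people ask such silly questions?",
    "How do I learn NLP quickly?",
    "How do I train a text classifier?",
]
vocab, counts = count_vectors(questions)
weights = tfidf(counts)
print(len(vocab))  # 16 distinct tokens
print(hashed_vector(questions[1], n_features=8))
```

Any of these vectors can feed a linear classifier, such as logistic regression, to produce a quick baseline before you move on to deep learning models.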

2. Stanford Question Answering Dataset (SQuAD)

The Stanford Question Answering Dataset (SQuAD) is a collection of question-answer pairs derived from Wikipedia articles.

Put simply, each example gives you a question and a passage of text that contains the answer. The task is to find the span of the passage where the answer lies. This task is commonly referred to as question answering (QA), or more precisely extractive QA.
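The "span" idea is easiest to see in the data itself. The sketch below parses one example in the SQuAD v1.1 JSON layout, where each answer stores its text and a character offset (`answer_start`) into the context. It recovers the span and then maps it to whitespace-token indices. The context and question were written for illustration and are not taken from SQuAD. The helper names `answer_spans` and `char_to_token_span` are hypothetical.

```python
import json

# One made-up example in the SQuAD v1.1 JSON layout:
# data -> paragraphs (context + qas) -> answers (text + character offset).
raw = """
{"data": [{"title": "Example", "paragraphs": [{
  "context": "The Stanford Question Answering Dataset was released in 2016.",
  "qas": [{"id": "q1", "question": "When was the dataset released?",
           "answers": [{"text": "2016", "answer_start": 56}]}]}]}]}
"""


def answer_spans(squad):
    """Yield (question, context, start, end, answer) with an exclusive end offset."""
    for article in squad["data"]:
        for paragraph in article["paragraphs"]:
            context = paragraph["context"]
            for qa in paragraph["qas"]:
                for answer in qa["answers"]:
                    start = answer["answer_start"]
                    end = start + len(answer["text"])
                    # The character span must reproduce the answer text exactly.
                    if context[start:end] != answer["text"]:
                        raise ValueError(f"misaligned answer in {qa['id']}")
                    yield qa["question"], context, start, end, answer["text"]


def char_to_token_span(context, start, end):
    """Map a character span to (first, last) indices of whitespace-separated tokens."""
    first = last = None
    pos = 0
    for i, token in enumerate(context.split()):
        token_start = context.index(token, pos)
        token_end = token_start + len(token)
        pos = token_end
        if first is None and token_end > start:
            first = i
        if token_start < end:
            last = i
    return first, last


squad = json.loads(raw)
for question, context, start, end, text in answer_spans(squad):
    print(question, "->", text, char_to_token_span(context, start, end))
# When was the dataset released? -> 2016 (8, 8)
```

Token 8 here is "2016." with its trailing period. This is one reason BERT-style models use subword tokenizers that track character offsets; Hugging Face fast tokenizers can return them with `return_offsets_mapping=True`.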

To delve deeper, see the Understanding BERT with Hugging Face article, which shows how to predict answers to questions using this dataset, a BERT model, and the Hugging Face library.

3. UCI ML Drug Review Dataset

Predicting disease conditions from drug reviews with NLP. Photo by Michał Parzuchowski on Unsplash

Can you predict a patient's condition from their drug review? The UCI ML Drug Review dataset provides patient reviews of specific drugs, along with the related conditions and a 10-star rating reflecting overall patient satisfaction.

This dataset can be used for multiclass classification, as shown in Using Deep Learning for End-to-End Multiclass Text Classification. You can also try combining the numerical features with the text to tackle the multiclass problem.

This is a good small dataset to play with if you want to get your hands dirty with NLP.
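As a rough starting point for the multiclass setup described above, here is a multinomial Naive Bayes classifier written with the standard library that predicts a condition from review text. It is a swapped-in baseline, not the deep learning approach from the linked article. The class name `NaiveBayesText`, the six reviews, and their condition labels are invented for illustration and are not rows from the UCI dataset.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase and keep alphabetic runs (apostrophes allowed).
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesText:
    """Multinomial Naive Bayes with Laplace (add-alpha) smoothing for multiclass labels."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> token counts
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {tok for counts in self.word_counts.values() for tok in counts}
        self.total_words = {lbl: sum(c.values()) for lbl, c in self.word_counts.items()}
        self.n_docs = len(labels)
        return self

    def log_scores(self, text):
        """Unnormalized log posterior for each label."""
        scores = {}
        v = len(self.vocab)
        for label, n in self.class_counts.items():
            score = math.log(n / self.n_docs)  # log prior
            denom = self.total_words[label] + self.alpha * v
            for tok in tokenize(text):
                if tok in self.vocab:  # skip tokens never seen in training
                    score += math.log((self.word_counts[label][tok] + self.alpha) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


reviews = [
    "My blood sugar finally stays in range and my A1C dropped",
    "Insulin doses are lower now and sugar levels are stable",
    "Panic attacks are less frequent and I feel calmer",
    "Anxiety is much better, I can sleep without racing thoughts",
    "Headache gone within an hour, migraine aura faded",
    "Stopped my migraine quickly but left me drowsy",
]
conditions = ["Diabetes", "Diabetes", "Anxiety", "Anxiety", "Migraine", "Migraine"]
model = NaiveBayesText().fit(reviews, conditions)
print(model.predict("my sugar levels are finally stable"))  # Diabetes
```

Numerical columns such as the rating are not used here. One option is to train a separate model on them and combine the predictions. Another is to switch to a classifier that accepts mixed feature types. Naive Bayes over word counts is simply a quick baseline to beat.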

4. Yelp Reviews Dataset

Do you love food and want to build a better review website?

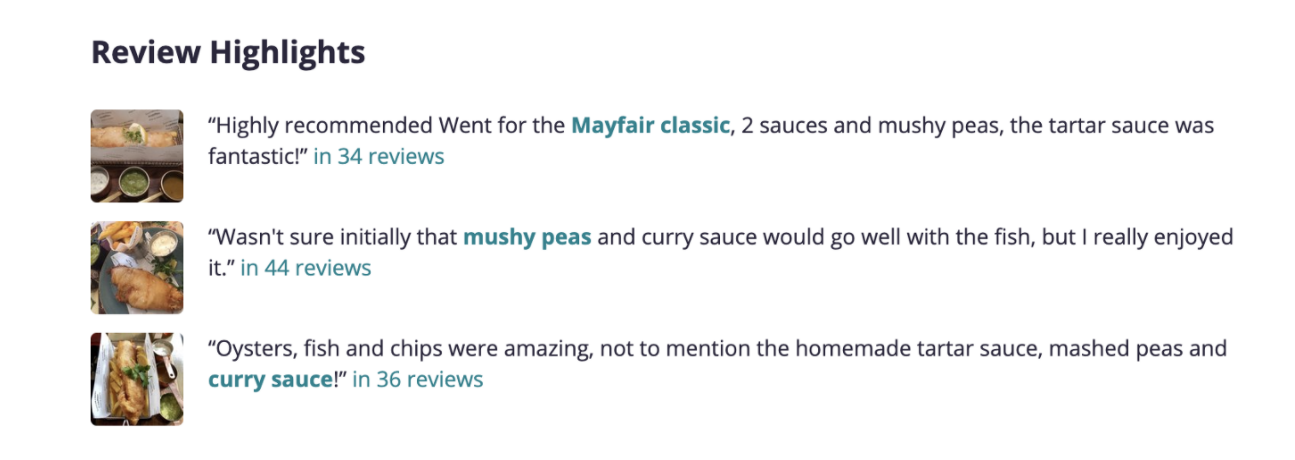

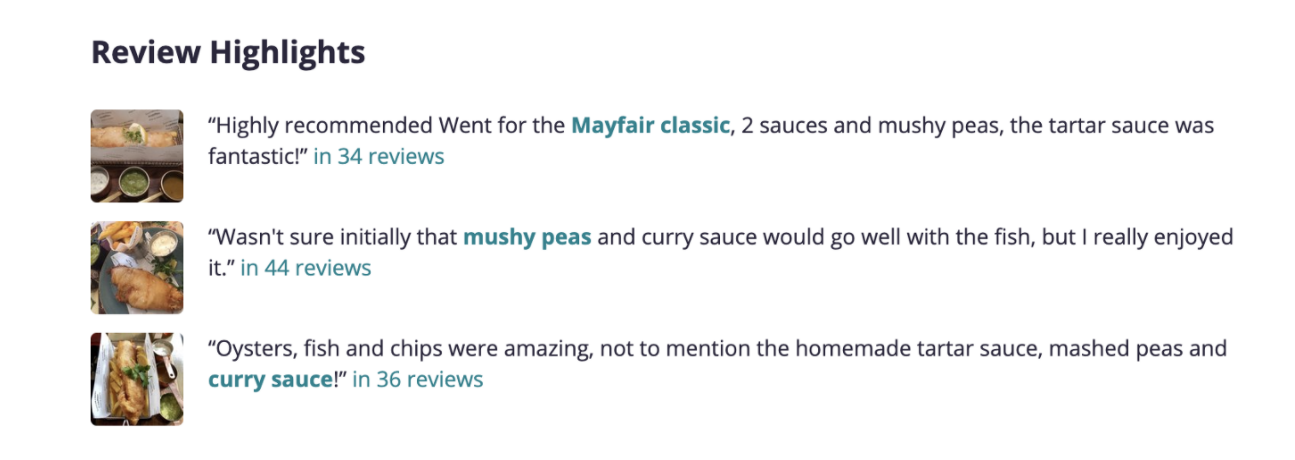

The Yelp dataset contains Yelp restaurant reviews along with other information, such as categories and opening and closing times, in JSON format. One problem you can try to solve is building a system that sorts dishes into categories. You could also use it for named entity recognition (NER) to pick out the dishes mentioned in reviews. Or can you work out how Yelp selects review highlights for restaurants, and build a similar system?

This is also a good dataset for understanding Yelp's business and search. The sky's the limit on how you would like to use this dataset.

This open source dataset contains 8,635,403 reviews of restaurants from 8 metropolitan areas; Source: Yelp
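A natural first step with this data is joining reviews to businesses and looking for dish mentions. The sketch below reads newline-delimited JSON (one object per line) and keeps reviews of businesses whose categories include "Restaurants". It then counts dish mentions using a small hand-written dish list, a gazetteer lookup rather than a trained NER model. The records, the dish list, and the field names (`business_id`, `categories`, `text`, `stars`) are assumptions made for illustration; check them against the dataset version you download.

```python
import json
import re
from collections import Counter

# Made-up records in newline-delimited JSON, one object per line, mimicking the
# Yelp business and review files. Field names here are assumptions.
business_lines = [
    '{"business_id": "b1", "name": "Taco Spot", "categories": "Mexican, Restaurants"}',
    '{"business_id": "b2", "name": "Quick Lube", "categories": "Automotive, Oil Change Stations"}',
]
review_lines = [
    '{"review_id": "r1", "business_id": "b1", "stars": 5, "text": "The carnitas tacos and elote were amazing."}',
    '{"review_id": "r2", "business_id": "b1", "stars": 3, "text": "Churros were cold, but the tacos were fine."}',
    '{"review_id": "r3", "business_id": "b2", "stars": 4, "text": "Fast oil change."}',
]

# Hypothetical dish gazetteer; a trained NER model would replace this lookup.
DISHES = {"carnitas", "churros", "elote", "tacos"}


def restaurant_ids(lines):
    """Return IDs of businesses whose comma-separated categories include Restaurants."""
    ids = set()
    for line in lines:
        business = json.loads(line)
        categories = {c.strip() for c in (business.get("categories") or "").split(",")}
        if "Restaurants" in categories:
            ids.add(business["business_id"])
    return ids


def dish_mentions(lines, allowed_ids):
    """Count single-word dish mentions in reviews of the allowed businesses."""
    counts = Counter()
    for line in lines:
        review = json.loads(line)
        if review["business_id"] not in allowed_ids:
            continue
        for token in re.findall(r"[a-z]+", review["text"].lower()):
            if token in DISHES:
                counts[token] += 1
    return counts


restaurants = restaurant_ids(business_lines)
print(dish_mentions(review_lines, restaurants).most_common(1))  # [('tacos', 2)]
```

Both functions accept any iterable of lines, so you can pass an open file handle and stream the large files instead of loading them into memory. A single-word lookup misses multi-word dishes such as "pad thai", which is where a real NER model earns its keep.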

5. IMDB Movie Dataset

NLP open source datasets for IMDB movie information, Photo by Marques Kaspbrak on Unsplash

Looking for the next movie to watch? This dataset contains the movie description, average rating, number of votes, genre, and cast information for 50k movies from IMDB.

Again, this dataset can be used in a variety of ways, not only from an NLP perspective. Common uses include building recommendation engines, classifying genres, and finding similar movies.
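One simple way to find similar movies from their descriptions is bag-of-words cosine similarity, sketched below with the standard library. The movie titles and descriptions are made up, and the tiny stopword list is a hypothetical stand-in. In practice, TF-IDF weights or sentence embeddings would typically replace the raw counts.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list.
STOPWORDS = {"a", "an", "and", "from", "in", "of", "on", "the", "their", "to"}


def bow(text):
    """Bag-of-words counts with stopwords removed."""
    return Counter(t for t in re.findall(r"[a-z']+", text.lower()) if t not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def most_similar(title, descriptions, k=1):
    """Rank the other movies by description similarity to `title`."""
    query = bow(descriptions[title])
    scores = [
        (other, cosine(query, bow(desc)))
        for other, desc in descriptions.items()
        if other != title
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]


movies = {
    "Space Drift": "An astronaut stranded on a distant planet fights to survive and return home.",
    "Orbit Lost": "A crew stranded in space must survive after their ship fails far from home.",
    "Kitchen Wars": "Two rival chefs compete in a cooking contest that decides the fate of a restaurant.",
}
print(most_similar("Space Drift", movies))  # "Orbit Lost" ranks first
```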

6. 20 Newsgroups

The 20 Newsgroups dataset comprises around 18,000 newsgroup posts on twenty topics. The topics are diverse, ranging from sports, cryptography, religion, and politics to electronics and more.

This is a multiclass classification dataset, but you can also use it to learn topic modeling, as demonstrated in Topic Modeling using Gensim-LDA in Python.
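To show what a topic model estimates, here is a plain-Python sketch of Latent Dirichlet Allocation (LDA). It learns per-document topic mixtures (`theta`) and per-topic word distributions (`phi`). Gensim's `LdaModel` fits LDA with online variational Bayes, whereas this sketch uses collapsed Gibbs sampling, a different but standard inference method. The function name, hyperparameter values, and four sample posts are our own. For the real data, you could load the posts with scikit-learn's `fetch_20newsgroups` and tokenize them first.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA with collapsed Gibbs sampling.

    docs: list of token lists. Returns (vocab, theta, phi), where theta[d][k] is
    document d's topic mixture and phi[k][v] is topic k's probability of vocab[v].
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    topics = range(K)

    ndk = [[0] * K for _ in docs]      # tokens in doc d assigned to topic k
    nkw = [[0] * V for _ in topics]    # times word v is assigned to topic k
    nk = [0] * K                       # total tokens assigned to topic k
    z = []                             # current topic of every token

    # Initialize each token with a random topic.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(K)
            z_d.append(k)
            ndk[d][k] += 1
            nkw[k][word_id[w]] += 1
            nk[k] += 1
        z.append(z_d)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Remove the token's current assignment from the counts.
                ndk[d][k] -= 1
                nkw[k][v] -= 1
                nk[k] -= 1
                # p(z = t | rest) is proportional to (n_dt + alpha)(n_tv + beta) / (n_t + V beta).
                weights = [
                    (ndk[d][t] + alpha) * (nkw[t][v] + beta) / (nk[t] + V * beta)
                    for t in topics
                ]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][v] += 1
                nk[k] += 1

    theta = [
        [(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in topics]
        for d, doc in enumerate(docs)
    ]
    phi = [[(nkw[k][v] + beta) / (nk[k] + V * beta) for v in range(V)] for k in topics]
    return vocab, theta, phi


posts = [
    "team won hockey game overtime",
    "goalie stopped every shot game",
    "public key encryption protects message",
    "cipher key secret encryption",
]
docs = [p.split() for p in posts]
vocab, theta, phi = lda_gibbs(docs, num_topics=2, iterations=300, seed=42)
for k, topic in enumerate(phi):
    top = sorted(range(len(vocab)), key=lambda v: topic[v], reverse=True)[:3]
    print(f"topic {k}:", [vocab[v] for v in top])
```

Sampling is random, so the exact topics depend on the seed. With tiny inputs like these, the split between sports and cryptography words is not guaranteed.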

7. IWSLT (International Workshop on Spoken Language Translation) Dataset

This machine translation dataset is a de facto standard benchmark for translation tasks. It contains translations of TED and TEDx talks on diverse topics in German, English, Italian, Dutch, and Romanian, so you can train a translator between any pair of these languages.

It can also be accessed in PyTorch through torchtext.datasets.
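Whichever loader you use, training a translator requires turning sentence pairs into padded sequences of token ids. The sketch below shows that step with the standard library. It builds separate source and target vocabularies, wraps each sentence in beginning and end markers, maps unseen words to an unknown token, and right-pads each batch. The special-token names, whitespace tokenization, and German-English pairs are illustrative assumptions, not IWSLT data or a torchtext API. Real pipelines typically use subword tokenizers such as BPE or SentencePiece.

```python
from collections import Counter

# Special tokens; the names are a common convention, not a library requirement.
PAD, BOS, EOS, UNK = "<pad>", "<bos>", "<eos>", "<unk>"


def build_vocab(sentences, min_freq=1):
    """Map tokens to ids, reserving ids 0-3 for the special tokens."""
    counts = Counter(tok for s in sentences for tok in s.lower().split())
    itos = [PAD, BOS, EOS, UNK] + sorted(t for t, c in counts.items() if c >= min_freq)
    return {tok: i for i, tok in enumerate(itos)}


def encode(sentence, vocab):
    """Wrap a sentence in <bos>/<eos> and map unknown words to <unk>."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in sentence.lower().split()]
    return [vocab[BOS]] + ids + [vocab[EOS]]


def pad_batch(seqs, pad_id):
    """Right-pad sequences to the same length so they can be stacked into a tensor."""
    width = max(len(s) for s in seqs)
    return [s + [pad_id] * (width - len(s)) for s in seqs]


# Made-up German-English pairs standing in for aligned TED talk sentences.
pairs = [
    ("Ich liebe Bücher", "I love books"),
    ("Wir lernen heute", "We learn today"),
    ("Danke", "Thank you"),
]
src_vocab = build_vocab(de for de, _ in pairs)
tgt_vocab = build_vocab(en for _, en in pairs)
src_batch = pad_batch([encode(de, src_vocab) for de, _ in pairs], src_vocab[PAD])
tgt_batch = pad_batch([encode(en, tgt_vocab) for _, en in pairs], tgt_vocab[PAD])
print(src_batch)  # [[1, 7, 9, 4, 2], [1, 10, 8, 6, 2], [1, 5, 2, 0, 0]]
```

Swapping the order of each pair trains the reverse direction, and the same steps apply to any language pair in the dataset. The padded id lists can then be converted to tensors (for example, with `torch.tensor`) for an encoder-decoder Transformer.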

To dig deeper into using this dataset to build your own Transformer, see our walkthrough of BERT Transformers and how they work, and learn how to use BERT to create a translator from scratch. Together, these datasets let you learn more about NLP by solving a range of tasks, and the linked articles offer starting points for tackling them.

Good luck!


7 Top Open Source Datasets to Train Natural Language Processing (NLP) & Text Models
