Deep Learning in the Cosmos: Ranking 3 Machine Learning (ML) Applications

Deep learning has helped advance the state of the art in multiple fields over the last decade, and scientific research is no exception. We've previously discussed DeepMind's impressive debut in protein folding prediction, as well as a project by Stanford students studying protein complex binding. Both are examples of using deep learning to study very small things.

Deep learning has likewise found applications in scientific research at the opposite end of the scale. In this post, we'll discuss some recent applications of deep learning to cosmology, aka the study of the universe. As you might imagine, this topic encompasses a wide variety of subcategories. We'll rate each project on hype and on impact from both a machine learning and a basic science perspective, judging interestingness ourselves rather than relying on citation metrics. We'll also include a link to each project's public repository when possible so you can check them out for yourself.

What's All the Hype About? AI Solves the 3-Body Problem in Cosmology

(Breen et al. 2019)

At a Glance:

TL;DR: A multi-layer perceptron was trained to predict future positions in simulations of a simplified 3-body problem.

Hype: 4/3 triple espressos. Coverage of this project ranged from "AI could solve the baffling three-body problem that stumped Isaac Newton" to "A neural net solves the three-body problem 100 million times faster." The hype in the press follows from the framing of the research paper itself, which seems precision-engineered to wow readers from outside the field. Since co-expertise in deep learning and n-body orbital mechanics is going to be rare-squared, it's easy to get bogged down in unfamiliar details and miss the point.

Impact (ML): 3/10 dense layers. It is interesting to see a deep multi-layer perceptron in an age when big conv-nets are ubiquitous.

Impact (Physics): 3/n massive bodies.

Model: A 10-layer fully connected neural network with ReLU activations.

Input data: Starting position of one of the three bodies and target time t.

Output: Positions of two of the three particles at time t. The third particle's position is implied by the choice of coordinate reference frame.

Code: https://github.com/pgbreen/NVM (inference with pre-trained model weights only)

Unpacking the Details of How AI Solves the 3-Body Problem in Cosmology

In classical orbital mechanics, predicting the future positions of an isolated system of 2 gravitational bodies is comparatively easy. Add a single additional body and we have the infamous 3-body problem, a classic example of how chaos can emerge from the interactions of a seemingly simple system. A hallmark of chaotic systems is extreme sensitivity to initial conditions, which can make their behavior seem random. Such systems are difficult to predict, and the longer a chaotic system evolves, the harder prediction becomes as small errors compound. It's one reason why it's much easier for an agent to learn to swing up a solid pole than a jointed one:

A chaotic system like this double pendulum is difficult to predict and control
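To make "extreme sensitivity to initial conditions" concrete, here is a minimal sketch that integrates two copies of a planar, equal-mass 3-body system whose starting positions differ by one part in a billion, then records how far apart the copies drift. Everything here is our own assumption for illustration rather than the paper's setup: unit masses with G = 1, bodies starting at rest with the center of mass at the origin, a small softening length to keep close encounters finite, and a fixed-step velocity Verlet integrator.

```python
import numpy as np

G = 1.0  # gravitational constant in N-body units (assumed)


def accelerations(pos, softening=1e-3):
    """Softened Newtonian gravity between equal unit masses; pos has shape (3, 2)."""
    diff = pos[np.newaxis, :, :] - pos[:, np.newaxis, :]  # diff[i, j] = pos[j] - pos[i]
    dist2 = np.sum(diff ** 2, axis=-1) + softening ** 2
    inv_r3 = dist2 ** -1.5
    np.fill_diagonal(inv_r3, 0.0)  # a body exerts no force on itself
    return G * np.sum(diff * inv_r3[:, :, np.newaxis], axis=1)


def verlet_step(pos, vel, acc, dt):
    """One velocity Verlet (kick-drift-kick) step."""
    vel = vel + 0.5 * dt * acc
    pos = pos + dt * vel
    acc = accelerations(pos)
    vel = vel + 0.5 * dt * acc
    return pos, vel, acc


def initial_state(x2, y2):
    """Hypothetical setup: bodies start at rest, body 1 sits at (1, 0), and body 3
    is placed so that the center of mass is at the origin."""
    p1 = np.array([1.0, 0.0])
    p2 = np.array([x2, y2])
    return np.stack([p1, p2, -(p1 + p2)]), np.zeros((3, 2))


def separation_history(perturbation=1e-9, dt=1e-3, t_end=10.0, every=1000):
    """Evolve two almost identical systems side by side and record, every
    `every` steps, the largest coordinate difference between them."""
    pos_a, vel_a = initial_state(-0.2, 0.4)
    pos_b, vel_b = initial_state(-0.2, 0.4 + perturbation)
    acc_a, acc_b = accelerations(pos_a), accelerations(pos_b)
    history = []
    for step in range(1, int(round(t_end / dt)) + 1):
        pos_a, vel_a, acc_a = verlet_step(pos_a, vel_a, acc_a, dt)
        pos_b, vel_b, acc_b = verlet_step(pos_b, vel_b, acc_b, dt)
        if step % every == 0:
            history.append((step * dt, float(np.max(np.abs(pos_a - pos_b)))))
    return history


for t, gap in separation_history():
    print(f"t = {t:4.1f}   largest position difference = {gap:.3e}")
```

The time step is far too coarse to resolve close encounters accurately, so neither trajectory should be trusted on its own. The point is only to watch how the gap between the two runs evolves from its one-in-a-billion start.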

Like a reinforcement learning agent struggling to control a double pendulum, scientists find it difficult to predict the future states of chaotic systems like the 3-body problem. There's no general closed-form solution, so computational physicists fall back on their old friend: brute-force numerical computation. This works, but it's not always clear how much numerical precision is needed, and it can eat up a lot of resources.
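Here is a toy version of the brute-force approach. It also shows one way to answer "how much precision is enough?": rerun the integration with a smaller time step until two successive answers agree within a tolerance. This is a minimal sketch under our own assumptions (unit masses, G = 1, softened gravity, velocity Verlet, the step-halving rule and the tolerance). As we understand it, Brutus, the integrator the authors used to generate training data, applies a more rigorous version of the same convergence idea, combining a Bulirsch-Stoer integrator with arbitrary-precision arithmetic.

```python
import numpy as np


def accelerations(pos, softening=1e-3):
    """Softened Newtonian gravity (G = 1) between equal unit masses; pos has shape (n, 2)."""
    diff = pos[np.newaxis, :, :] - pos[:, np.newaxis, :]  # diff[i, j] = pos[j] - pos[i]
    dist2 = np.sum(diff ** 2, axis=-1) + softening ** 2
    inv_r3 = dist2 ** -1.5
    np.fill_diagonal(inv_r3, 0.0)  # no self-interaction
    return np.sum(diff * inv_r3[:, :, np.newaxis], axis=1)


def integrate(pos, vel, dt, t_end):
    """Fixed-step velocity Verlet integration; returns the positions at t_end."""
    acc = accelerations(pos)
    for _ in range(int(round(t_end / dt))):
        vel = vel + 0.5 * dt * acc
        pos = pos + dt * vel
        acc = accelerations(pos)
        vel = vel + 0.5 * dt * acc
    return pos


def converged_positions(pos0, vel0, t_end, dt=1e-2, tol=1e-6, max_halvings=10):
    """Halve the time step until two successive runs agree to within `tol`."""
    previous = integrate(pos0, vel0, dt, t_end)
    for _ in range(max_halvings):
        dt /= 2
        current = integrate(pos0, vel0, dt, t_end)
        if np.max(np.abs(current - previous)) < tol:
            return current, dt
        previous = current
    raise RuntimeError("not converged: a smaller step or higher precision is needed")


p1, p2 = np.array([1.0, 0.0]), np.array([-0.2, 0.4])
pos0 = np.stack([p1, p2, -(p1 + p2)])  # hypothetical start: center of mass at the origin
vel0 = np.zeros((3, 2))                 # all bodies start at rest
positions, dt_used = converged_positions(pos0, vel0, t_end=0.5)
print(f"converged with dt = {dt_used:.2e}")
```

The integration stops at t = 0.5, before the first close encounter in this hypothetical setup. Pushing `t_end` past close encounters makes the required step shrink, and the runtime grow, quickly, which is exactly the resource problem described above.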

The authors of the paper used a 10-layer multi-layer perceptron to predict future states of 3-body orbital problems. The training data were generated by a brute-force numerical simulator called Brutus. I enjoyed seeing an 'old-fashioned' multi-layer perceptron, and it would have been interesting to dig into the code and play around with different training hyperparameters and architectures. Unfortunately, the public code doesn't include any training utilities.
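Since the public repository ships inference code only, here is our own minimal numpy sketch of a network with the shape described above. It is meant only to make the architecture concrete. The layer width of 128, He initialization, the linear output layer, reading "10 layers" as ten hidden layers, and the input/output layout ((t, x2, y2) in, (x1, y1, x2, y2) out) are all assumptions. The weights are random, so the predictions mean nothing until the network is trained, for example with a regression loss on Brutus-generated trajectories.

```python
import numpy as np


def init_mlp(layer_sizes, seed=0):
    """Random weights and zero biases for a fully connected network (He initialization)."""
    rng = np.random.default_rng(seed)
    params = []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        params.append((weights, np.zeros(fan_out)))
    return params


def mlp_forward(params, x):
    """ReLU hidden layers followed by a linear output layer (a regression head)."""
    for weights, bias in params[:-1]:
        x = np.maximum(x @ weights + bias, 0.0)
    weights, bias = params[-1]
    return x @ weights + bias


# Hypothetical layout: inputs (t, x2, y2) -> ten hidden layers of 128 units -> outputs (x1, y1, x2, y2)
sizes = [3] + [128] * 10 + [4]
params = init_mlp(sizes)

batch = np.array([[0.5, -0.2, 0.4],   # (t, x2, y2) for two hypothetical queries
                  [2.0, -0.3, 0.1]])
pred = mlp_forward(params, batch)
x1, y1, x2, y2 = pred.T
# Assuming equal masses with the center of mass fixed at the origin, body 3 follows from the other two
x3, y3 = -(x1 + x2), -(y1 + y2)
print(pred.shape)
```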

I agree with recent skepticism that the paper's claims rest on a very narrow, simplified use case that is unlikely to generalize easily to more complex situations. I'd add that the results are less impressive than billed. Performance degrades substantially when the network is trained to predict further into the future, with the mean absolute error growing from about 0.01 to about 0.2. Those errors are large given that the unitless coordinates in question almost always lie between -1 and +1. Training the network to predict further into the future also leads to much greater overfitting on the training set, an issue that isn't addressed in the paper's discussion.
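The arithmetic behind "those errors are large" is simple. If coordinates span roughly -1 to +1, the mean absolute error can be compared with that span of 2. The helper below is a generic MAE for illustration, not the paper's evaluation code.

```python
import numpy as np


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between true and predicted coordinates."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


COORDINATE_RANGE = 2.0  # coordinates mostly fall between -1 and +1

for mae in (0.01, 0.2):  # rough errors for short vs. long prediction horizons
    share = mae / COORDINATE_RANGE
    print(f"MAE {mae:.2f} = {share:.1%} of the full coordinate range")
```

An MAE of 0.2 is a tenth of the entire range of possible coordinate values, averaged over every predicted coordinate.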

What's the Most Valuable Area of Using Machine Learning (ML) in Cosmology? Discovering More Exoplanets with Deep Learning

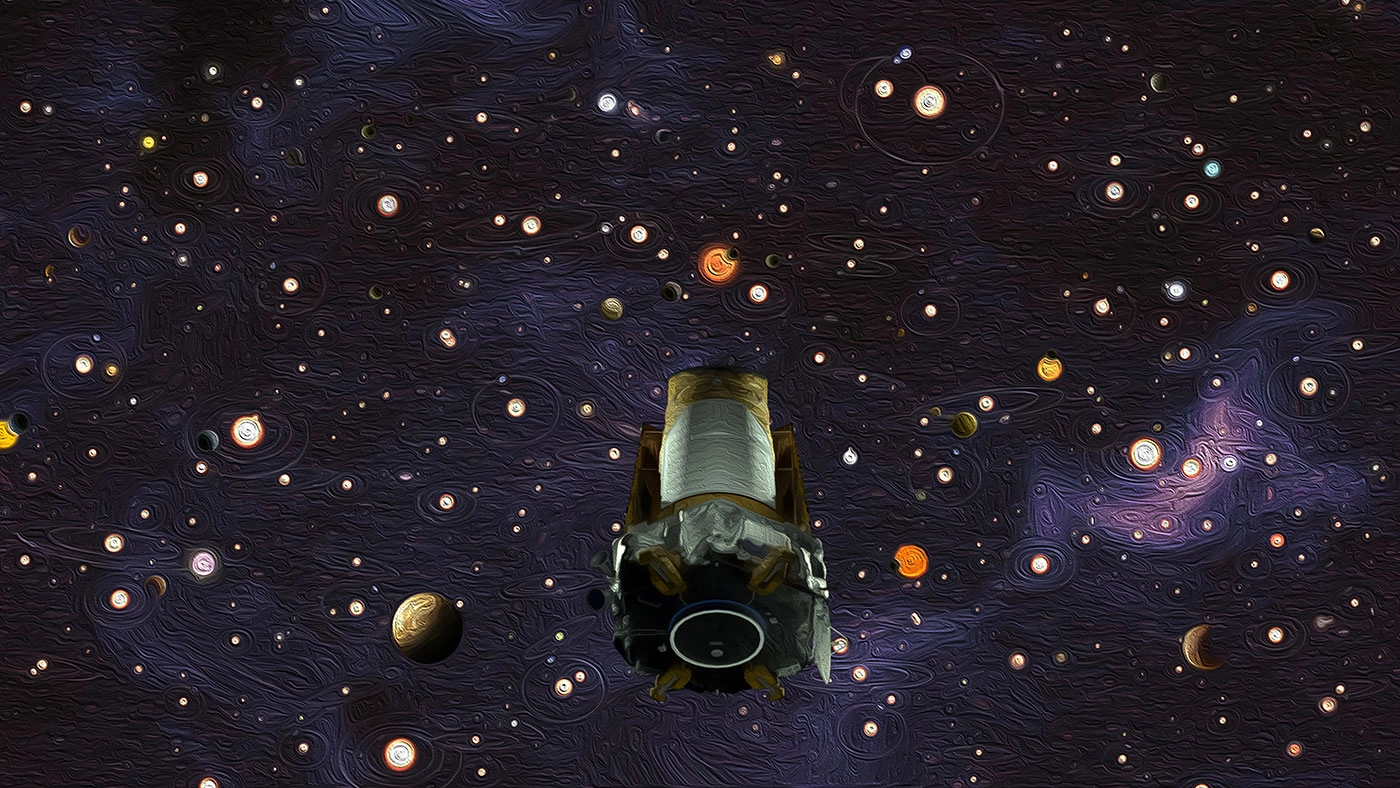

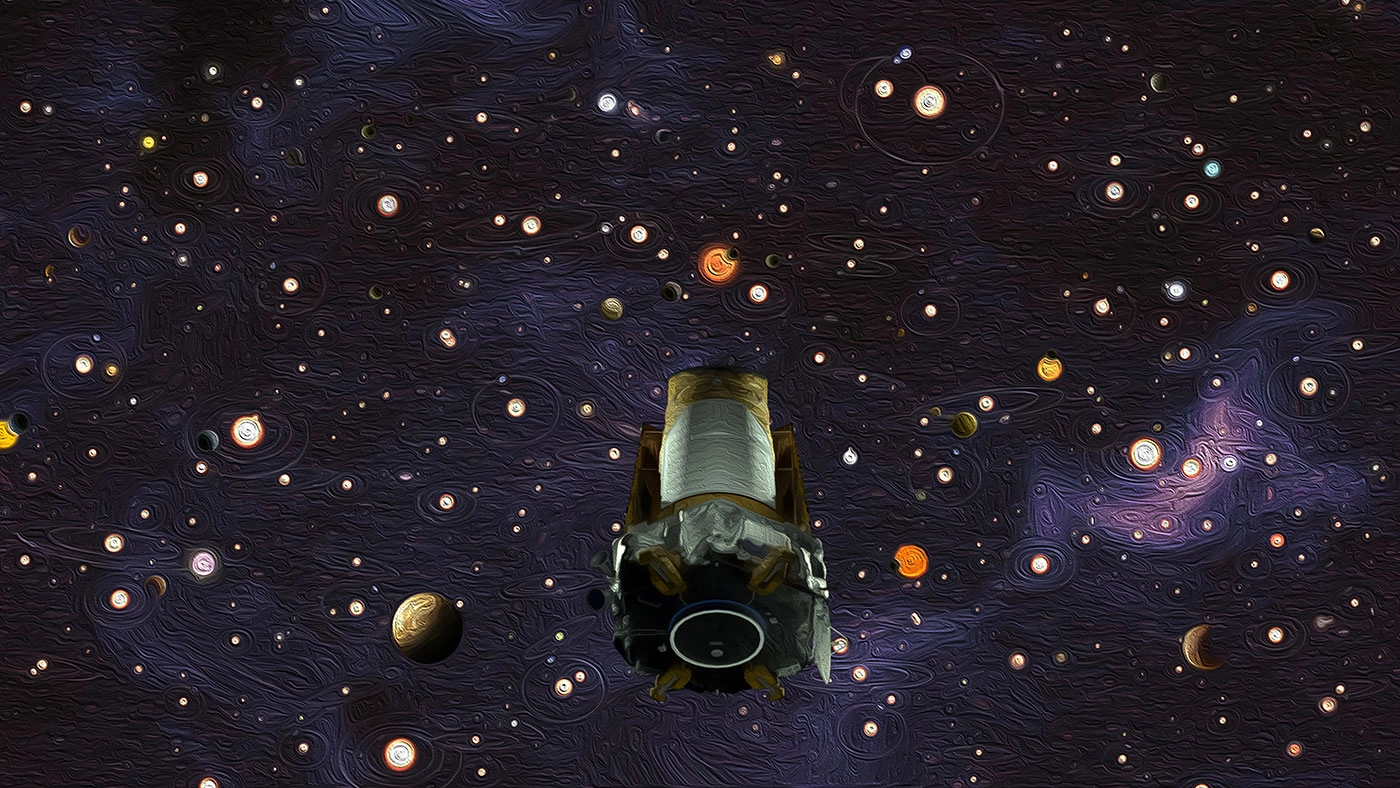

Artist's impression of K2-18b by Martin Kornmesser. Image credit NASA

(Dattilo et al. 2019)

At a Glance on How Machine Learning Helps Discover New Planets

TL;DR: The Kepler space telescope suffered a hardware failure in 2013, which led to noisier data (and lots of it). Researchers trained AstroNet-K2, a modified version of an earlier model called AstroNet, to work with the new noisy data and discovered two new exoplanets, which were verified with follow-up observations.

Hype: 1/3 triple espressos. Coverage such as the write-up in MIT Technology Review was reasonably measured, avoiding exaggerated or unrealistic claims about the project, though it sometimes neglected to mention that AstroNet-K2 was based on a previous project, AstroNet, published a year earlier.

Impact (ML): 4/8 convolutional filters. The difference between AstroNet and AstroNet-K2 seems to come down to a hyperparameter search and a different dataset.

Impact (Astronomy): 2 new exoplanets discovered by AstroNet-K2 and validated with additional observations. I would consider this a pretty rewarding outcome for what started as an undergraduate research project.

Model: Two separate convolutional arms extract features from the input data. The outputs of these convolutional arms are joined and fed into 4 fully connected layers that output the final prediction.

Input data: 1D light curves from the K2 run of the Kepler telescope.

Output: Probability that a given signal is caused by a transiting exoplanet.

Code: https://github.com/aedattilo/models_K2
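To illustrate the two-arm layout described in the Model line, here is a small, untrained numpy forward pass. As we understand AstroNet, its two arms see a "global" and a "local" view of the phase-folded light curve. The view lengths (2001 and 201 bins), kernel size, filter counts, hidden widths and the synthetic box-shaped transit are all hypothetical choices for this sketch, not AstroNet-K2's published configuration.

```python
import numpy as np

rng = np.random.default_rng(0)  # random, untrained weights: this only illustrates the data flow


def conv1d_relu(x, out_channels, k=5):
    """'Same'-padded 1D convolution followed by ReLU; x has shape (length, in_channels)."""
    length, in_channels = x.shape
    kernels = rng.normal(0.0, np.sqrt(2.0 / (k * in_channels)), size=(k, in_channels, out_channels))
    padded = np.pad(x, ((k // 2, k // 2), (0, 0)))
    windows = np.stack([padded[i:i + length] for i in range(k)])  # (k, length, in_channels)
    return np.maximum(np.einsum("kli,kio->lo", windows, kernels), 0.0)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling along the length axis (drops any remainder)."""
    n = x.shape[0] // size
    return x[: n * size].reshape(n, size, x.shape[1]).max(axis=1)


def conv_arm(view, channels):
    """One convolutional arm: a conv + pool block per entry in `channels`, then flatten."""
    x = view[:, np.newaxis]
    for out_channels in channels:
        x = max_pool1d(conv1d_relu(x, out_channels))
    return x.ravel()


def dense(x, out_dim, relu=True):
    """Fully connected layer with random weights and no bias."""
    w = rng.normal(0.0, np.sqrt(2.0 / x.shape[0]), size=(x.shape[0], out_dim))
    y = x @ w
    return np.maximum(y, 0.0) if relu else y


def planet_probability(global_view, local_view):
    """Join the two arms' features, then apply four fully connected layers."""
    h = np.concatenate([
        conv_arm(global_view, channels=[16, 32, 64]),
        conv_arm(local_view, channels=[16, 32]),
    ])
    for _ in range(3):                   # three hidden fully connected layers...
        h = dense(h, 64)
    logit = dense(h, 1, relu=False)[0]   # ...and a fourth that outputs a single logit
    return float(np.exp(-np.logaddexp(0.0, -logit)))  # numerically stable sigmoid


# Synthetic example: flat, normalized flux with a box-shaped dip in the middle
global_view = np.zeros(2001)
global_view[995:1006] = -1.0
local_view = np.zeros(201)
local_view[80:121] = -1.0
print(f"P(planet) = {planet_probability(global_view, local_view):.3f}")
```

Because the weights are random, the printed probability is meaningless; only the shapes and the order of operations are meant to mirror the description.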

Unpacking the Details on How Machine Learning Discovers New Planets

The Kepler instrument is a space-based telescope designed to discover and study planets outside our solar system, aka exoplanets. The first exoplanet orbiting a star like our own was described by Didier Queloz and Michel Mayor in 1995, earning the pair a share of the 2019 Nobel Prize in Physics. By the time Kepler launched in 2009, more than a decade later, fewer than 400 exoplanets were known. The now-dormant telescope went on to discover more than 1,000 new exoplanets before a reaction wheel, a component used for precision pointing, failed in 2013, bringing the primary phase of the mission to an end.

Some clever engineering changes allowed the telescope to begin a second period of data acquisition, termed K2. The K2 data were noisier, with continuous observation windows of 80 days or less. These limitations made it harder to identify promising planetary candidates among the thousands of putative planetary signals, a task that had previously been handled well by a convolutional neural network (AstroNet) working with data from Kepler's primary mission. Researchers at the University of Texas at Austin decided to try the same approach, deriving AstroNet-K2 from the AstroNet architecture to sort K2 planetary signals.

After training, AstroNet-K2 achieved 98% accuracy at identifying confirmed exoplanets in its test set, with few false positives. The authors deemed this performance sufficient for use as an analysis tool that still requires human follow-up, but not for full automation. From the paper:

"While the performance of our network is not quite at the level required to generate fully automatic and uniform planet candidate catalogs, it serves as a proof of concept." - (Dattilo et al. 2019)
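A headline accuracy figure can hide a lot when genuine planets are rare among candidate signals. The confusion-matrix counts below are invented for illustration and are not taken from Dattilo et al. They give 98% accuracy even though one in five flagged signals is still a false positive and one in five real planets is missed. That gap is one reason a human in the loop still makes sense.

```python
def metrics_from_counts(tp, fp, tn, fn):
    """Accuracy, precision and recall from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of flagged signals that are real planets
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real planets that get flagged
    return accuracy, precision, recall


# Hypothetical test set of 1,000 signals, 50 of them genuine planets
accuracy, precision, recall = metrics_from_counts(tp=40, fp=10, tn=940, fn=10)
print(f"accuracy={accuracy:.0%}  precision={precision:.0%}  recall={recall:.0%}")
```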

AstroNet-K2 receives this blog post's coveted "Best Value" award for achieving a significant scientific bang-for-your-buck. Unlike the other two projects on this list, which are more conceptual demonstrations, this project resulted in actual scientific progress, adding two new confirmed entries to the catalog of known exoplanets: EPIC 246151543 b and EPIC 246078672 b.

In addition to the intrinsic challenges of the K2 data, the signals for the planets were further confounded by Mars transiting the observation window and by 5 days of missing data associated with a safe mode event. This is a pretty good example of effective machine learning in action: the authors took an existing conv-net with a proven track record and modified it to perform well on the given data, adding a couple of new discoveries from a difficult observation run without re-inventing the wheel.

Worth noting is that the study's lead author, Anne Dattilo, was an undergraduate at the time the work was completed, which makes this a pretty good outcome for an undergraduate research project. Building on open-source software and previously developed architectures underlines the fact that deep learning has reached an advanced stage of readiness. The technology is not yet mature to the point of ubiquity, but the tools are all there on the shelf, ready to be applied.

CosmoGAN, a Generative Adversarial Network Approach to Gravitational Lensing

(Mustafa et al. 2019)

At a Glance

Gravitational lensing of the Cheshire Cat galaxy cluster. Image credit NASA

TL;DR: There's a bunch of missing mass in the Universe and we call it dark matter. Gravity from this missing mass bends light, and cosmologists can guess where dark matter is based on how light is distorted. Deep Convolutional Generative Adversarial Networks are good at making realistic images, and these researchers trained one to make images that look like data associated with dark matter distributions.

Hype: 1/3 triple espressos. Essentially all of the press coverage I could find for this paper is cribbed from the press release from Lawrence Berkeley National Laboratory, where the work was conducted. As such, the coverage is not overly exaggerated or outlandish, although the description of what CosmoGAN actually does is vague (to be fair, the paper is not exactly clear in this regard either). My favorite headline was "Cosmoboffins use neural networks to build dark matter maps the easy way."

Impact (ML): 6/64 latent space random variables. This is a bog-standard DCGAN trained to mimic gravitational weak lensing data.

Impact (Cosmology): 1 unobservable mass out of 10.

Model: CosmoGAN is a DCGAN. Each network has 4 layers, but the Generator has roughly 3x as many parameters (12.3 million) as the Discriminator (4.4 million) because the Discriminator uses fewer convolution filters. The parameter disparity is meant to stabilize training by keeping the discriminator from running away from the generator.

Input data: A 64-unit latent vector (Generator); 2D weak lensing convergence maps, either generated by CosmoGAN or produced by a numerical physics simulator, which can correspond to dark matter distributions (Discriminator).

Output: A plausible convergence map (Generator), or the probability that a given image is a real (simulated) convergence map (Discriminator).

Code: https://github.com/MustafaMustafa/cosmoGAN
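Why do fewer filters mean fewer parameters? A convolution layer with a k×k kernel mapping c_in channels to c_out channels has k·k·c_in·c_out weights plus c_out biases, so halving the filter count in every layer cuts the parameter count roughly fourfold. The two stacks below are hypothetical and do not reflect CosmoGAN's actual filter counts; batch normalization and dense layers are ignored.

```python
def conv_params(kernel_size, in_channels, out_channels, bias=True):
    """Trainable parameters in a 2D convolution (or transposed convolution) layer."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def stack_params(channels, kernel_size=5):
    """Total parameters of a plain stack of conv layers with the given channel counts."""
    return sum(conv_params(kernel_size, c_in, c_out)
               for c_in, c_out in zip(channels[:-1], channels[1:]))


# Two hypothetical 4-layer discriminators for single-channel maps
wide = stack_params([1, 64, 128, 256, 512])
narrow = stack_params([1, 32, 64, 128, 256])
print(f"wide: {wide:,}  narrow: {narrow:,}  ratio: {wide / narrow:.2f}")
```

Both stacks have the same depth. The filter counts drive the difference, because each layer's weight count scales with the product of its input and output channels.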

Unpacking the Details on How a Generative Adversarial Network Leads to a Better Approach to Gravitational Lensing

Dark matter is a comparatively mysterious form of matter that (we think) accounts for a substantial proportion, about 85%, of the matter in the universe. The "dark" moniker refers to the fact that this form of matter is invisible to ordinary observations and can only be inferred from gravitational effects, like the discrepancy in galactic rotation speeds observed by Vera Rubin in the 1970s. An essential observable for studying dark matter is gravitational lensing, where massive objects distort the light originating from more distant objects. When the lensing is caused by invisible dark matter, working backward from the distorted light to the mass that caused it is a difficult inverse problem requiring intensive simulations.
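For reference, the convergence maps that CosmoGAN learns to generate describe a standard lensing quantity. The convergence κ is the lens's projected surface mass density Σ expressed in units of a critical density that depends only on the geometry of the observer, lens and source. Here D_l, D_s and D_ls are the angular diameter distances to the lens, to the source, and from the lens to the source, and ψ is the lensing potential. Weak lensing is the regime where κ is much smaller than 1.

$$
\kappa(\boldsymbol{\theta}) = \frac{\Sigma(D_l\,\boldsymbol{\theta})}{\Sigma_{\mathrm{cr}}},
\qquad
\Sigma_{\mathrm{cr}} = \frac{c^2}{4\pi G}\,\frac{D_s}{D_l\,D_{ls}},
\qquad
\kappa = \tfrac{1}{2}\,\nabla_{\boldsymbol{\theta}}^{2}\,\psi
$$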

"If you wish to make an apple pie from scratch, you must first invent the universe" (Carl Sagan, Cosmos). The same goes for studying the presence of dark matter: the gold-standard method for building up a map of dark matter based on observations of gravitational lensing is to create a (virtual) universe. As we all know, universe creation is computationally expensive and spinning up a high-fidelity universe to check if it agrees with observations places substantial limits on the amount of science that can be done.

When data are plentiful but the computation needed to analyze them is scarce, scientists look for surrogate models that can explain the data without building a brand new universe each time. CosmoGAN is one such approach, using a modern deep generative network to produce convergence maps for gravitational lensing data.

Generative Adversarial Networks have come a long way since Goodfellow et al. described them in 2014. The GAN framework is a (now quite diverse) family of generative models that pit a generative network against a discriminative, or forgery-detecting, network. The two networks work against each other: the generator produces increasingly realistic synthetic data while the discriminator gets increasingly good at detecting the fakes. This interaction provides the only training signal in a pure GAN training loop (although variants like conditional GANs may make use of additional data). As a result, balancing the two networks is something of an art, and GANs are notoriously prone to instability when the balance tips too far either way.
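A minimal numpy sketch of the standard GAN objectives shows why that balance matters. It follows the Goodfellow et al. (2014) formulation with the commonly used "non-saturating" generator loss; the function names and logit values are illustrative and not taken from CosmoGAN's code. When the discriminator confidently rejects fakes (very negative logits), the gradient of the original minimax generator loss nearly vanishes, while the non-saturating version still gives the generator a useful signal.

```python
import numpy as np


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x)) = -log(1 + exp(-x))."""
    return -np.logaddexp(0.0, -x)


def sigmoid(x):
    return np.exp(log_sigmoid(x))


def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy with real samples labeled 1 and generated samples labeled 0."""
    # log(1 - sigmoid(x)) == log(sigmoid(-x))
    return -np.mean(log_sigmoid(real_logits)) - np.mean(log_sigmoid(-fake_logits))


def generator_loss(fake_logits):
    """Non-saturating loss: maximize log D(G(z)) instead of minimizing log(1 - D(G(z)))."""
    return -np.mean(log_sigmoid(fake_logits))


# A discriminator that has "run away": it confidently scores every fake as fake
fake_logits = np.array([-8.0, -6.0, -4.0])
minimax_grad = -sigmoid(fake_logits)             # d/dx of log(1 - sigmoid(x))
nonsaturating_grad = sigmoid(fake_logits) - 1.0  # d/dx of -log(sigmoid(x))
print("minimax gradient:       ", minimax_grad)
print("non-saturating gradient:", nonsaturating_grad)
print("generator loss:", generator_loss(fake_logits))
```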

Given their reputation as difficult to train and interpret, it may come as a bit of a surprise that GANs have found a number of practitioners among computational cosmologists. CosmoGAN is pretty limited in scope, however. The Generator learns to produce statistically realistic convergence maps, but these aren't tied to anything except the random inputs in the latent space. A conditional GAN, like the pix2pix scheme underlying the venerable edges2cats demo, would make more sense in this context: it would be more useful to generate a convergence map that plausibly explains the lensing in given astronomical images, which could then be verified by additional observations.

Also, the convergence maps produced by the generator are 2D, but dark matter is actually distributed in 3 dimensions. The authors imply that some of these limitations will be addressed in future work. For example, their reference to "controllable GANs" sounds similar to the conditional GANs mentioned above, and they do intend to make a 3D version for volumetric dark matter distributions. If that's the case, 85% of the work in this project remains unobservable for now.

These 3 Machine Learning (ML) Applications are Allowing for Incredible Discoveries and Breakthroughs in Cosmology

We are just beginning to see the potential of deep learning in cosmology. The projects in this post show that making new discoveries in space research doesn't require boldly going where no scientist has gone before. Rather, a proven technique, cleverly adapted for and applied to a new dataset, can yield discovery, as we saw in the exoplanet project. The other two projects on this list, while possessing loftier aspirations and shinier descriptions, are conceptual explorations that use simulated data only.

We can also note that the hype in lay press coverage of these examples tracks the level of hyperbole in the research papers' own descriptions. This suggests that researchers can't blame exaggerated press coverage, a problem that plagues most areas of research and not just AI/ML, solely on science journalists. Cultivating excessive hype can create unproductive feedback loops, leading to economic and technological bubbles and inefficient allocation of research resources. That's why we prefer to take a closer look at the stellar claims of new deep learning applications, whether they study the universe or something more mundane.
