Introduction

When it comes to deep learning and AI, GPUs are the driving force behind training speed, model capacity, and overall productivity. The number of GPUs you choose directly impacts how quickly experiments run, how large a dataset or model you can handle, and how efficiently your team can scale. The key question isn’t just “How many GPUs do I need?” but rather:

“What workload am I running, and what’s the right balance between performance, cost, and scalability?”

Let’s discuss whether GPUs are a good choice for a deep learning workstation, how many GPUs are needed for deep learning, and which GPUs are the best picks for your deep learning computing solution.

Why GPUs Lead Deep Learning Innovation

GPUs have become the standard for AI workloads thanks to their parallel processing design, which outpaces the sequential nature of CPUs. While CPUs once held an advantage with larger RAM capacity, they couldn’t match the speed GPUs brought to training neural networks, which depends on running huge numbers of similar computations at once.

The shift began with NVIDIA’s CUDA, which expanded GPUs beyond graphics into general-purpose computing. Breakthroughs like AlexNet in 2012 showcased GPUs’ superiority for deep learning, cementing their role in AI research and industry.

Choosing GPUs for Deep Learning

Selecting the right GPU depends on your workload and environment. Key considerations include Memory, Interconnect, Form Factor, and Use Case. With those in mind, here’s how GPU options break down:

- Consumer-Grade: Affordable, powerful single-card setups (24–32GB VRAM), but limited in multi-GPU scaling.

- Professional: Workstation GPUs with stable professional workload drivers, larger memory (up to 96GB), and better multi-GPU support.

- Enterprise: Data center GPUs optimized for AI/HPC. Passively cooled, no video outputs, maximum compute performance, and best multi-GPU scaling. Examples: NVIDIA DGX B200, NVIDIA HGX H200, NVIDIA H200 NVL.

For most beginners, a high-end consumer-grade GPU like the NVIDIA RTX 5090 with 32GB VRAM offers the best balance of cost and capability. For power users, a 4x NVIDIA RTX PRO 6000 Blackwell Max-Q workstation is an excellent option. For enterprise, contact Exxact today to help you configure a dedicated GPU-accelerated computing infrastructure.

Different GPU Setups for Deep Learning

Single GPU Setup

A single GPU is often the starting point for many researchers and enthusiasts. It provides a cost-effective way to learn, experiment, and build smaller models. Although a single-GPU system may look like only a first step, it satisfies roughly 80% of users’ needs, especially in the enthusiast space, where it powers local LLMs and lightweight machine learning models.

- Best suited for prototyping, coursework, and personal projects

- Lower power and cooling needs compared to multi-GPU systems

- Limited by memory size and training time for larger models

If you are just beginning or primarily working with smaller datasets, a single high-memory GPU like an RTX 5090 with 32GB of VRAM is recommended. If you plan to upgrade this system, opt for a workstation GPU like the RTX PRO 6000 Blackwell Max-Q for future scalability.

Multi-GPU Workstations/Servers (2–4 GPUs)

Once you need more performance than a single GPU can provide, moving to 2–4 GPUs is the natural step. Compared with a single GPU, multi-GPU platforms:

- Speed up training through parallelism

- Allow larger datasets and models to fit into memory

- Require a more complex setup with software support for scaling

A multi-GPU setup balances power with accessibility, but you must choose between a workstation and a server:

- Workload: If you primarily do model development and testing, a workstation works. For production training or deployment pipelines, servers are better suited.

- Networking: Servers provide better connectivity to storage, clusters, and high-speed networks. Workstations are often limited to local networking.

- Environment: Workstations belong under a desk or in a lab. Servers are meant for racks with proper cooling and 24/7 operation, accessible remotely through the network by a team.

This setup is ideal for research labs, startups, and engineers who need faster turnaround on training without stepping into full data center infrastructure.
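To get a feel for how much faster a 2–4 GPU system might be, the sketch below uses a deliberately simplified model: the compute part of each training step divides evenly across GPUs, while a fixed communication cost is added whenever more than one GPU is used. The function names and the timing values are hypothetical and only illustrate the trade-off; real scaling depends on the model, framework, and interconnect.

```python
# Simplified multi-GPU scaling model (an assumption, not a benchmark):
#   step_time(N) = compute_s / N + comm_s   for N > 1
#   step_time(1) = compute_s


def speedup(num_gpus: int, compute_s: float, comm_s: float) -> float:
    """Estimated speedup over one GPU for a single training step."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if num_gpus == 1:
        return 1.0
    return compute_s / (compute_s / num_gpus + comm_s)


def efficiency(num_gpus: int, compute_s: float, comm_s: float) -> float:
    """Fraction of ideal (linear) scaling actually achieved."""
    return speedup(num_gpus, compute_s, comm_s) / num_gpus


if __name__ == "__main__":
    # Hypothetical step: 1.0 s of compute, 0.05 s of gradient exchange.
    for n in (1, 2, 4):
        s = speedup(n, compute_s=1.0, comm_s=0.05)
        e = efficiency(n, compute_s=1.0, comm_s=0.05)
        print(f"{n} GPU(s): {s:.2f}x speedup, {e:.0%} efficiency")
```

In this model the communication term is what keeps four GPUs from being four times faster, which is why interconnect and software support matter as the GPU count grows.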

Multi-GPU Compute Servers (8–10 GPUs)

Servers with 8 or more GPUs represent enterprise-class performance. These systems are designed for production AI, large-scale research, and high-performance training. Organizations that invest in an 8-GPU system often deploy multiple units for maximum throughput.

- High-bandwidth interconnects like NVLink or NVSwitch improve scaling efficiency

- Handle workloads such as large language models, diffusion networks, and complex computer vision

- Come with higher costs, increased power requirements, and advanced cooling needs

For these systems, NVIDIA offers PCIe and SXM GPU form factors to consider:

- Interconnect Bandwidth: SXM provides higher bandwidth and supports NVSwitch for better scaling across all GPUs. PCIe is more affordable but limited to PCIe lanes for communication.

- Thermal and Power Design: SXM GPUs require liquid or advanced air cooling in dense rack environments. PCIe GPUs are easier to integrate in standard servers.

- Upgrade Flexibility: PCIe GPUs are easier to replace or mix across generations. SXM modules are tied to a dedicated baseboard and are less flexible for incremental upgrades.

If your workloads demand maximum inter-GPU communication and you have the infrastructure for advanced cooling, SXM is the best choice. For more flexible and cost-conscious deployments, PCIe GPUs remain a strong option. For more information, see our comparison of SXM vs PCIe.
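A rough way to see why interconnect bandwidth matters is to estimate how long it takes to synchronize gradients across GPUs. The sketch below assumes a ring all-reduce, in which each GPU moves about 2 × (N − 1) / N times the payload size, and it ignores latency and protocol overhead. The bandwidth values and the 14 GB payload are illustrative assumptions; substitute figures measured on your own hardware.

```python
# Back-of-the-envelope gradient synchronization time for a ring all-reduce.
# Assumptions: bandwidth-bound transfer, no latency or protocol overhead.


def ring_allreduce_seconds(payload_gb: float, num_gpus: int,
                           link_gb_s: float) -> float:
    """Approximate time for one all-reduce of `payload_gb` across GPUs."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gb_s


if __name__ == "__main__":
    payload_gb = 14.0  # hypothetical: 7B parameters of 16-bit gradients
    # Illustrative per-GPU bandwidths in GB/s (assumed, not measured).
    links = {"PCIe-class link": 128.0, "NVLink-class link": 900.0}
    for name, bandwidth in links.items():
        t = ring_allreduce_seconds(payload_gb, num_gpus=8, link_gb_s=bandwidth)
        print(f"{name}: {t * 1000:.0f} ms per synchronization")
```

If this synchronization happens on every training step, the gap between the two links adds up quickly, which is the practical argument for SXM in communication-heavy workloads.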

Which GPU Is Best For Deep Learning?

Any GPU can be used for deep learning, but most of the best options come from NVIDIA. All of our recommendations below are NVIDIA GPUs for that reason, although AMD is quickly gaining ground in both graphics-intensive workloads and the data center.

Whether you want to dip your toe in the deep learning waters with a consumer-grade GPU, jump in with a top-tier data center GPU, or make the leap to a managed workstation or server, we have you covered with these top three picks.

The number of GPUs in a deep learning workstation may change based on which card you choose. In general, more GPUs means more memory and compute available to your model, so if you expect to outgrow a single card, choose a platform that can scale to four GPUs.

NVIDIA GeForce RTX 5090

The RTX 5090 is NVIDIA’s most powerful consumer-class GPU and a strong starting point for deep learning. While marketed as a gaming GPU, its performance is close to that of professional cards, and it comes with 32GB of VRAM, enough for most mainstream AI models, including quantized LLMs and large vision models.

- Best for individuals, hobbyists, and small-scale AI developers

- Cost-effective for prototyping and training mid-sized models

- Limited for multi-GPU scaling due to consumer card design

If you want to build a high-end workstation without breaking into enterprise budgets, the RTX 5090 is the best option available.
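To judge whether a model fits in 32GB, a quick estimate of weight memory helps. The sketch below assumes weight memory is simply parameter count times bytes per parameter; it ignores activations, KV cache, and framework overhead, so real usage will be higher. The function names and the example model sizes are hypothetical.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: memory = parameters x bytes per parameter; activations,
# KV cache, and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weight_memory_gb(params_billions, precision) <= vram_gb


if __name__ == "__main__":
    for size in (7, 13, 34, 70):  # hypothetical model sizes, in billions
        for precision in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(size, precision)
            verdict = "fits" if fits(size, precision, 32) else "too large"
            print(f"{size}B @ {precision}: {gb:.1f} GB -> {verdict} in 32 GB")
```

Leave headroom beyond the weight estimate: a model whose weights come close to 32GB will likely not run comfortably once activations and cache are counted.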

NVIDIA RTX PRO 6000 Blackwell

The RTX PRO 6000 Blackwell is NVIDIA’s new flagship for professional AI and HPC workloads. It features 96GB of GDDR7 VRAM with 1.8TB/s memory bandwidth, delivering twice the memory and bandwidth of the previous generation. This makes it ideal for deep learning models that exceed consumer GPU limits, including advanced vision models and larger parameter LLMs.

- Perfect for research labs, engineering teams, and content creators working on high-end AI

- Large VRAM allows running larger models at full precision, without quantization

- High bandwidth ensures faster data throughput for training and inference

The RTX PRO 6000 Blackwell comes in three editions: Workstation, Max-Q, and Server Edition, allowing flexibility for desktops or rack servers.

NVIDIA H200 NVL

For enterprise AI, the NVIDIA H200 NVL sets the standard. It offers 141GB of HBM3e memory and an incredible 4.8TB/s memory bandwidth, making it one of the fastest and most memory-rich GPUs available. Built for data centers and large-scale AI, the H200 NVL is designed for maximum throughput and multi-GPU performance.

- Supports NVLink bridges in 2- or 4-way configurations with up to 900GB/s of GPU-to-GPU bandwidth per GPU, bypassing PCIe bottlenecks

- Ideal for training very large models, high-parameter LLMs, and HPC simulations

- Requires rack-mounted server environments with advanced cooling and power

If you need the ultimate performance for enterprise AI or HPC, the H200 NVL is the most capable PCIe-based GPU currently available.
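Memory bandwidth figures like the 1.8TB/s and 4.8TB/s quoted above translate fairly directly into inference speed. A common rule of thumb, used here as an assumption, is that single-stream token generation is memory-bound: every generated token requires reading all model weights once, so bandwidth divided by weight size gives an upper bound on tokens per second. The 70 GB model in the example is hypothetical, and real throughput will be lower.

```python
# Upper bound on single-stream decode speed for a memory-bound model.
# Assumption: each generated token reads every weight exactly once, and
# nothing else (KV cache reads, kernel overhead) limits throughput.


def max_decode_tokens_per_s(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Bandwidth-limited ceiling on tokens generated per second."""
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    weights_gb = 70.0  # hypothetical: a 70B-parameter model at 8 bits
    # Memory bandwidths as quoted in this article, in GB/s.
    gpus = {"RTX PRO 6000 Blackwell": 1800.0, "H200 NVL": 4800.0}
    for name, bandwidth in gpus.items():
        ceiling = max_decode_tokens_per_s(bandwidth, weights_gb)
        print(f"{name}: at most ~{ceiling:.1f} tokens/s")
```

Batching several requests together raises total throughput beyond this per-stream ceiling, since one pass over the weights then serves multiple tokens.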

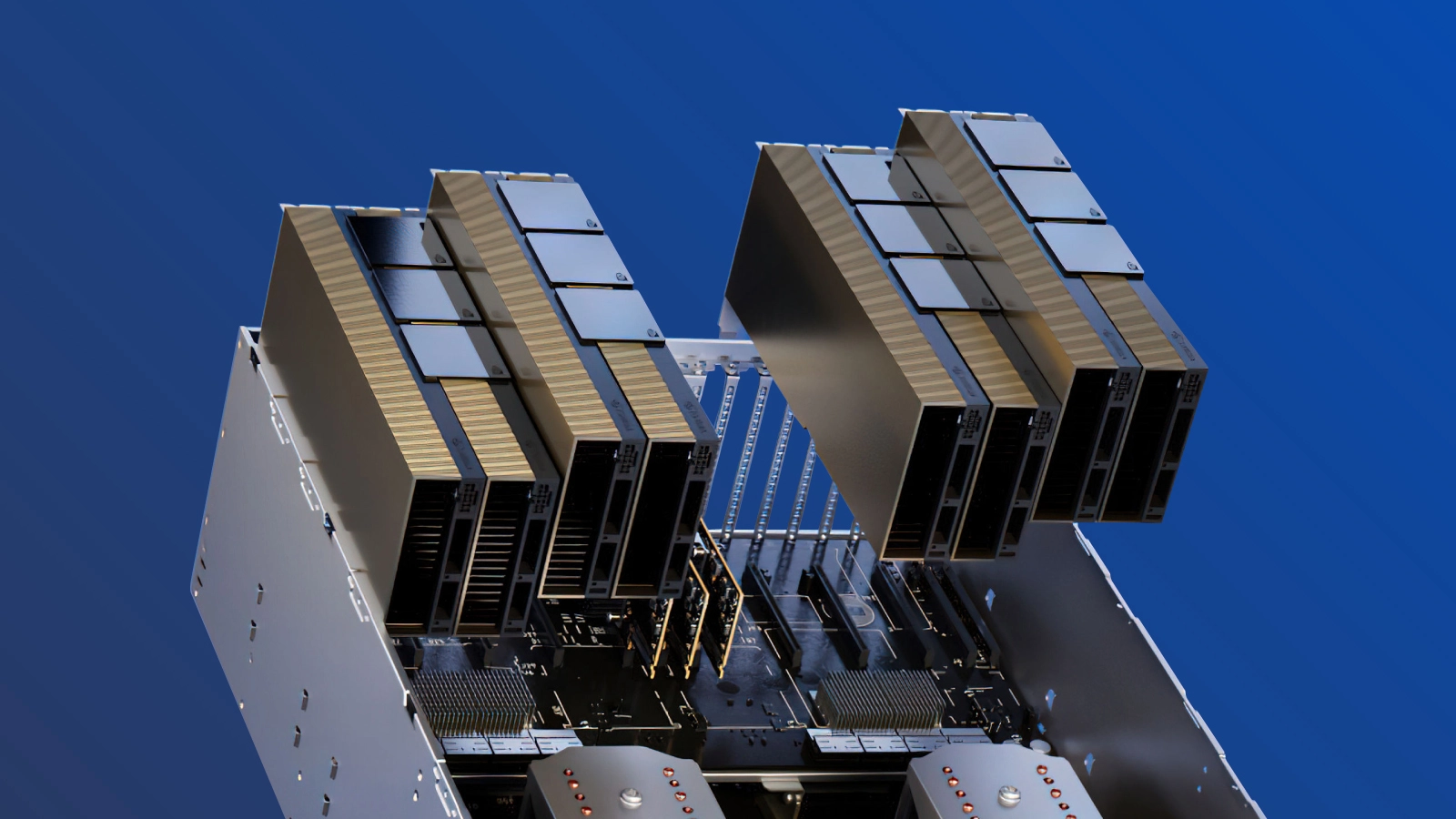

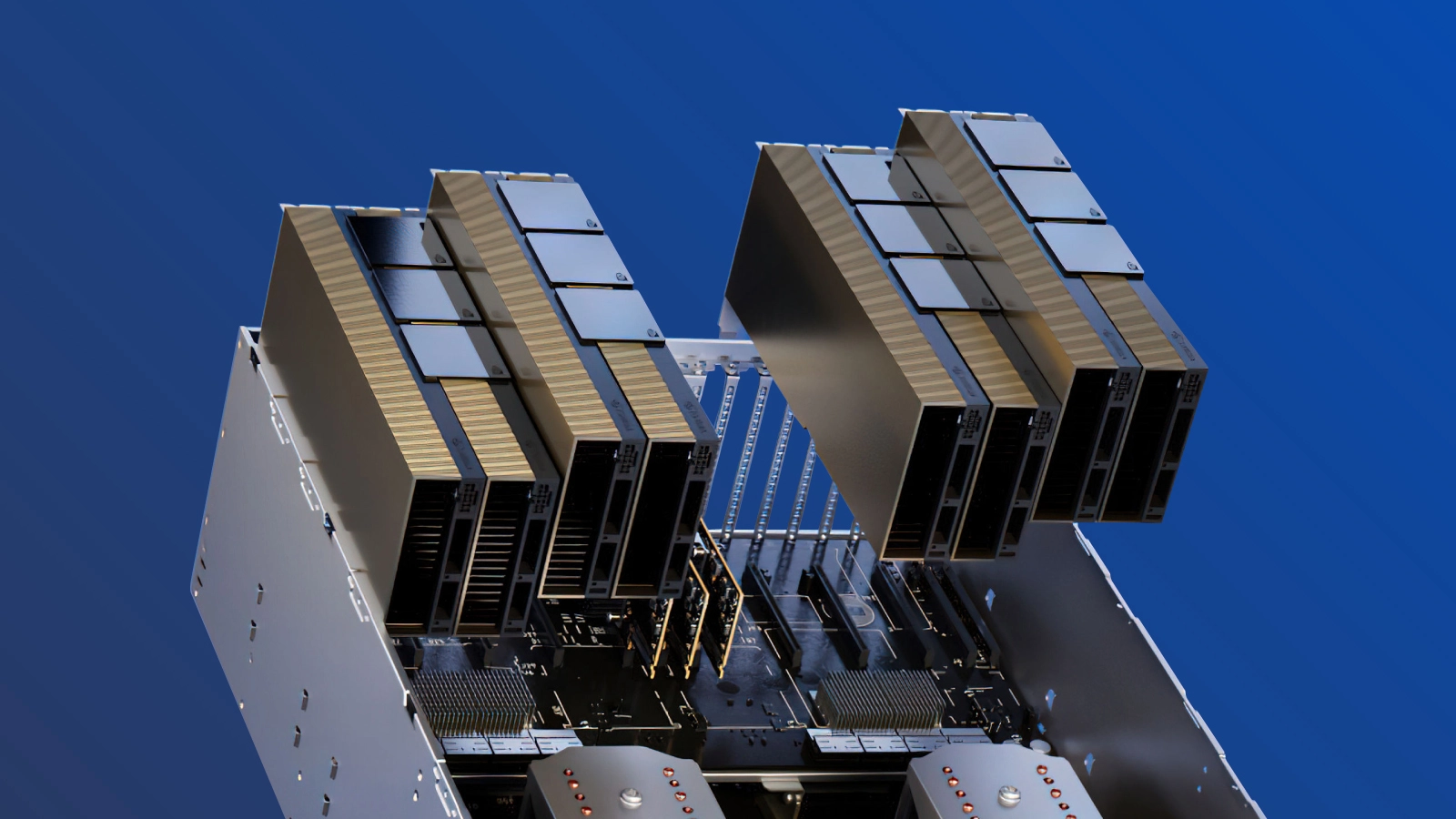

Bonus: NVIDIA HGX B200

The NVIDIA HGX B200 is the next generation of SXM-based GPU platforms, built for the highest-performance AI and HPC workloads. Each B200 GPU delivers massive compute with 192GB of HBM3e memory, optimized for large-scale training and inference. Unlike PCIe GPUs, the B200 is part of an SXM platform with full NVLink and NVSwitch interconnect, which lets all GPUs in a system communicate at extremely high bandwidth for near-linear scaling.

- Designed for 8-GPU servers and multi-node clusters

- Higher interconnect bandwidth than PCIe solutions for superior scaling

- Requires advanced cooling (often liquid) and a data center environment

The HGX B200 platform is the foundation of systems like NVIDIA DGX and OEM-built AI servers, making it the top choice for organizations training and deploying enterprise LLMs and multimodal AI. If your goal is maximum performance and scalability across GPUs, the SXM-based B200 is unmatched.

FAQ: How Many GPUs for Deep Learning and AI

Do I really need multiple GPUs for deep learning?

Not always. A single high-memory GPU is enough for learning, prototyping, and running smaller models. Multi-GPU setups become necessary when training large models or working on enterprise-scale workloads.

How many GPUs should I start with?

For most beginners and researchers, starting with one high-end GPU (like an RTX 4090 or RTX 5090) is sufficient. As your models and datasets grow, scaling to 2–4 GPUs is common for serious research or production work.

What is the difference between PCIe and SXM GPUs?

PCIe GPUs are easier to integrate, upgrade, and fit into standard servers. SXM GPUs provide higher bandwidth, better interconnect with NVLink/NVSwitch, and superior scaling for multi-GPU systems, but require advanced cooling and are fixed to the system.

When should I move from a workstation to a server?

Choose a workstation for development, experimentation, and local training. Move to a server when you need 24/7 uptime, remote access, better networking, or plan to scale into a rack or cluster environment.

How do I know if I need a GPU cluster?

If your workloads involve training large language models, multimodal AI, or any job that needs more VRAM than one multi-GPU server provides or would take weeks of compute on it, a single server is not enough. At that point, multi-node clusters with fast interconnects become essential.
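A quick sizing estimate can show when a job outgrows a single server. The sketch below assumes a widely used rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer states) and ignores activations, so treat the results as a lower bound. The function names, model sizes, and the 8-GPU server size are illustrative assumptions.

```python
import math

# Assumed rule of thumb: ~16 bytes per parameter for mixed-precision
# training with Adam (weights + gradients + optimizer states).
# Activation memory is ignored, so these counts are lower bounds.
BYTES_PER_PARAM_TRAINING = 16


def training_memory_gb(params_billions: float) -> float:
    """Approximate training-state memory in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM_TRAINING


def gpus_needed(params_billions: float, vram_gb: float) -> int:
    """Minimum GPU count whose combined VRAM holds the training state."""
    return math.ceil(training_memory_gb(params_billions) / vram_gb)


def servers_needed(params_billions: float, vram_gb: float,
                   gpus_per_server: int = 8) -> int:
    """Minimum number of servers, assuming `gpus_per_server` GPUs each."""
    return math.ceil(gpus_needed(params_billions, vram_gb) / gpus_per_server)


if __name__ == "__main__":
    for size in (7, 70, 400):  # hypothetical model sizes, in billions
        for vram in (32, 141, 192):  # per-GPU VRAM figures from this article
            n = gpus_needed(size, vram)
            s = servers_needed(size, vram)
            print(f"{size}B on {vram} GB GPUs: {n} GPU(s), {s} server(s)")
```

When `servers_needed` returns more than one, the job spans multiple nodes and the network between servers becomes part of the training loop.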

Conclusion

There is no one-size-fits-all answer to how many GPUs you need; it depends on your stage in the AI journey, whether you are experimenting, training production models, or deploying large-scale systems. A single GPU may be enough for early research, but as models grow and projects move into production, multi-GPU servers and eventually multi-system clusters become essential.

Investing in GPU compute is about planning for growth. Start with what meets your current workload, but consider future needs for faster training, distributed workloads, and reliable deployment. The right infrastructure at each stage ensures your AI development remains efficient, scalable, and ready for what’s next. Talk to an Exxact engineer to get your computing infrastructure configured to address your unique needs.


How Many GPUs Should Your Deep Learning Workstation Have?
