Graphcore Bow IPU

Graphcore has announced the Bow IPU, a new AI processor with an unusual 3D-stacked design. Graphcore is a UK-based semiconductor company that builds processors specifically for AI computation, which it calls Intelligence Processing Units (IPUs). The Bow IPU is fabricated on a 7nm process in conjunction with TSMC using custom wafer-on-wafer (WoW) 3D stacking technology. Other 3D-stacked designs use chip-on-wafer stacking, where the cache is stacked on top of the processor.

Graphcore and TSMC’s 3D wafer-on-wafer approach is more sophisticated: a power delivery die is stacked directly on the AI logic die, yielding gains in computing performance while also being more power-efficient. Power is delivered through backside through-silicon vias (BTSVs) and high-density deep trench capacitors, which smooth power delivery and enable faster speeds between the capacitor wafer and the logic wafer at higher voltages.

Power is a big deal in HPC and AI computing. HPC processors are power-hungry, so manufacturers take advantage of any possible power savings. The Bow IPU is not only up to 16% more power-efficient, it also delivers up to 40% more performance with the same static RAM and processor core count as the preceding IPU. A single Bow IPU processor delivers 350 teraFLOPS of mixed-precision AI compute.
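As a rough sanity check on these figures, the short Python sketch below works backwards from the quoted numbers. It assumes that the 40% and 16% gains both apply to the same peak workload, which the announcement does not state, so the results are implied values rather than published specifications. The function names are illustrative only.

```python
# Figures quoted in the article for a single Bow IPU.
BOW_TFLOPS = 350.0       # mixed-precision AI compute per Bow IPU
PERF_GAIN = 0.40         # quoted performance gain over the previous IPU
EFFICIENCY_GAIN = 0.16   # quoted gain in performance per watt


def implied_previous_tflops(current_tflops: float, perf_gain: float) -> float:
    """Throughput the previous generation would need for the gain to hold."""
    return current_tflops / (1.0 + perf_gain)


def implied_relative_power(perf_gain: float, efficiency_gain: float) -> float:
    """Power draw relative to the previous generation (1.0 = unchanged).

    Assumption: performance = efficiency * power, so relative power is the
    performance ratio divided by the efficiency ratio.
    """
    return (1.0 + perf_gain) / (1.0 + efficiency_gain)


if __name__ == "__main__":
    prev = implied_previous_tflops(BOW_TFLOPS, PERF_GAIN)
    power = implied_relative_power(PERF_GAIN, EFFICIENCY_GAIN)
    print(f"Implied previous-generation throughput: {prev:.0f} TFLOPS")
    print(f"Implied relative power draw: {power:.2f}x")
```

Under that assumption, the extra performance comes partly from better efficiency and partly from a higher power budget. Real workloads will land somewhere below these peak figures.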

The Bow IPU is designed for scale-up and scale-out machine intelligence infrastructure. Bow IPUs are packaged in Graphcore’s Bow-2000 IPU machines. Each Bow-2000 has 4 Bow IPU processors with a total of 5,888 IPU cores and is capable of 1.4 petaFLOPS of AI compute. The Bow-2000 is the building block of Graphcore’s scalable Pod systems, available as Bow Pod16, Pod32, Pod64, and Pod256 (the number indicates how many Bow IPUs each system has).

Graphcore's flagship superscale system, the Bow Pod1024, has 256 Bow-2000s, or 1,024 of Graphcore's new-generation IPU processors, delivering more than 350 petaFLOPS of AI compute and giving room to train ever-larger machine learning models.
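The Pod figures follow directly from the per-IPU numbers, and a minimal Python sketch makes the arithmetic explicit. It assumes compute scales linearly with IPU count, which holds for peak figures but not necessarily for real workloads. The `PodSpec` class is a hypothetical helper for illustration, not part of any Graphcore software.

```python
from dataclasses import dataclass

# Per-IPU figures taken from the article. The cores-per-IPU value is derived
# from the quoted 5,888 cores in a four-IPU Bow-2000.
TFLOPS_PER_IPU = 350
IPUS_PER_BOW_2000 = 4
CORES_PER_IPU = 5888 // IPUS_PER_BOW_2000


@dataclass(frozen=True)
class PodSpec:
    """Aggregate peak figures for a system with a given number of Bow IPUs."""
    ipus: int

    @property
    def machines(self) -> int:
        # Number of Bow-2000 machines needed to house the IPUs.
        return self.ipus // IPUS_PER_BOW_2000

    @property
    def cores(self) -> int:
        return self.ipus * CORES_PER_IPU

    @property
    def petaflops(self) -> float:
        # 1 petaFLOPS = 1,000 teraFLOPS.
        return self.ipus * TFLOPS_PER_IPU / 1000


if __name__ == "__main__":
    for size in (4, 16, 32, 64, 256, 1024):
        pod = PodSpec(size)
        print(f"{size:>5} IPUs: {pod.machines:>3} x Bow-2000, "
              f"{pod.cores:>9,} cores, {pod.petaflops:7.1f} petaFLOPS")
```

For 1,024 IPUs the peak total comes out at 358.4 petaFLOPS, slightly above the rounded 350 petaFLOPS figure.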

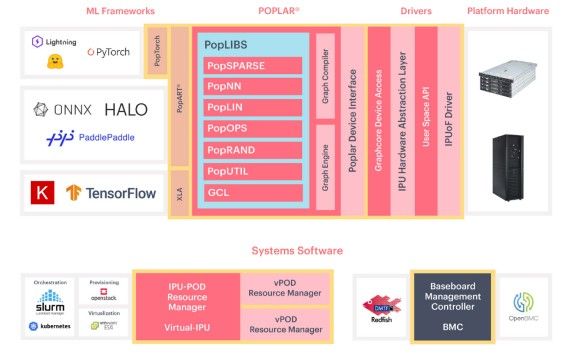

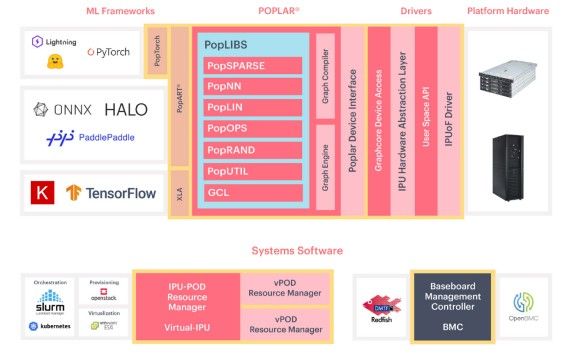

To complement the IPU systems, Graphcore also offers the Poplar SDK, a complete toolchain designed for machine intelligence software. Like NVIDIA’s CUDA and AMD’s ROCm, Poplar integrates with common machine learning tools such as TensorFlow, PyTorch, and ONNX.

The Graphcore IPU platform includes PopLibs, an open-source library of code and functions; the Graph Compiler, which handles data management and work partitioning; the Graph Engine, which provides highly optimized IPU data transfer; and IPU-Link, for high-bandwidth IPU-to-IPU communication, among other tools for AI and deep learning workloads. The Poplar SDK also includes a complete set of open-source libraries that give developers the functionality they need for their neural network workloads. Graphcore wants to make its IPU systems as user-friendly as possible, and this software stack noticeably improves the experience of working with them.

The WoW 3D stacking design doesn't stop at stacking the power delivery die and the processor. Graphcore is developing what it calls the Good computer, named after Jack Good, who wrote about the concept of ultra-intelligence. The new project takes advantage of wafer-on-wafer technology with next-generation stacking, possibly of logic dies instead. Logic-on-logic, or LoL, would be a great name for such a marvel of technology! Stacking two logic processors on top of each other would cut the power efficiency of the Bow IPU but, in return, offer theoretically fast chip-to-chip bandwidth and extremely dense processing power. Graphcore plans to develop this supercomputer, with a capacity exceeding that of the human brain, to deliver 10 exaFLOPS of AI compute by 2024.

