NVIDIA A100 GPU with Multi-Instance GPU - Getting More Bang for Your Buck!

Imagine being in the grocery store during the early days of the pandemic, restlessly waiting at the back of a very long line with a cart full of groceries and only one cashier. You stand silently while some grumble and others gab about trivial matters, wishing the store had more cashiers working. Now imagine multiple cashiers and self-checkout counters running simultaneously. Enough said, right?

That's the gist of Multi-Instance GPU (MIG), a feature of the NVIDIA Ampere architecture.

What is MIG?

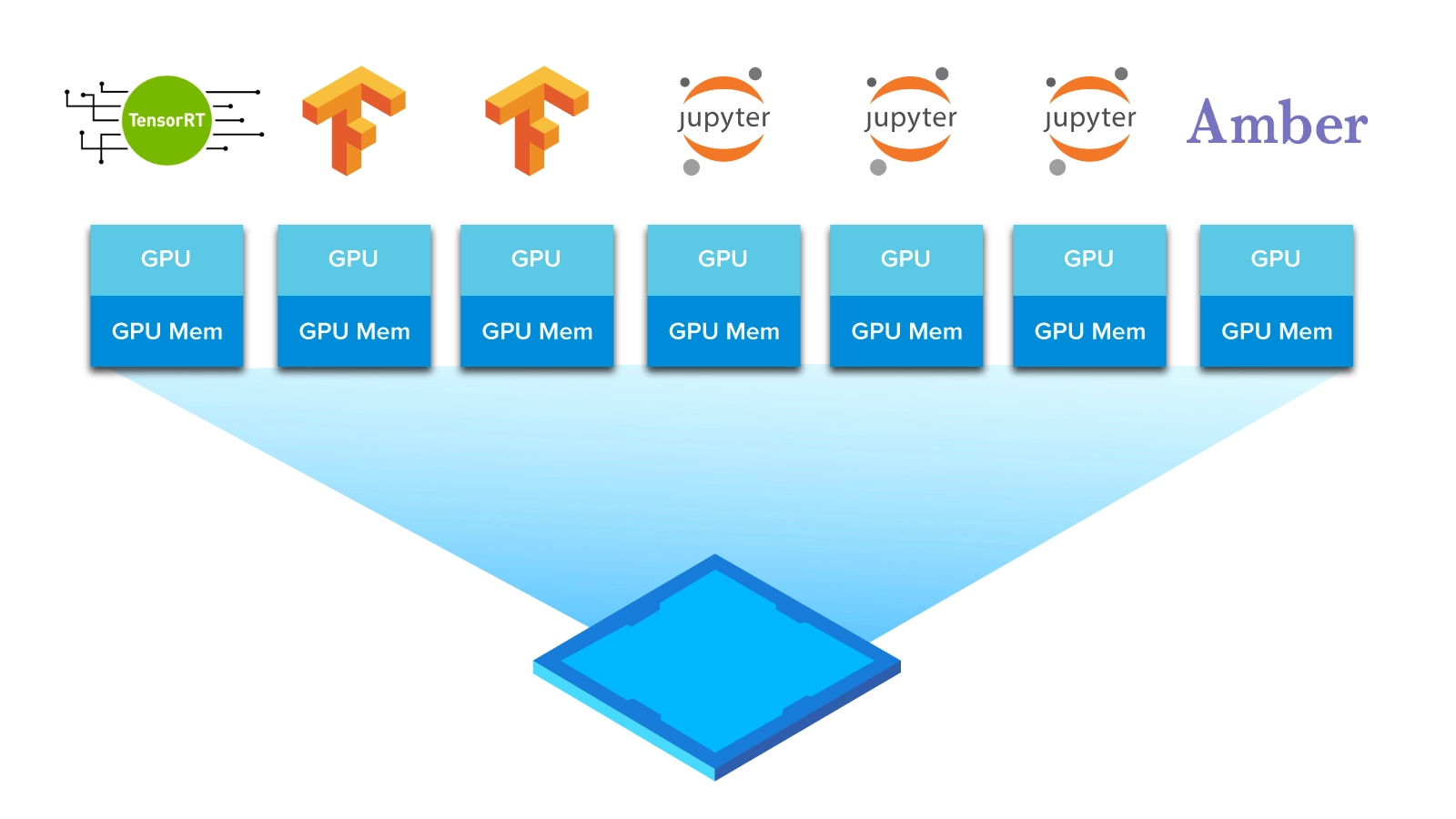

Multi-Instance GPU (MIG) expands the performance and value of each NVIDIA A100 Tensor Core GPU. MIG can partition a single NVIDIA A100 GPU into as many as seven GPU instances, each fully isolated with its own high-bandwidth memory, cache, and streaming multiprocessors. That lets the A100 deliver guaranteed quality of service (QoS) for every job, optimizing utilization and extending the reach of accelerated computing resources to every user, at up to 7x higher utilization compared to prior GPUs.

The A100 in MIG mode offers a choice of instance sizes. It can run any mix of up to seven HPC or AI workloads of different sizes, provisioning a right-sized GPU instance for each one. This is particularly beneficial for workloads that do not fully saturate the GPU’s compute capacity, because running several of them in parallel maximizes utilization.

For example, on a 40GB A100, users can create three MIG instances with 10 gigabytes (GB) of memory each, two instances with 20GB each, or seven with 5GB each. With MIG, users create the mix that’s right for their workloads.

GPU Instances vs Compute Instances

Think of GPU instances as slices of one big GPU, each acting as a smaller GPU with hardware-level separation and dedicated compute and memory resources. Each instance is isolated and protected from faults in the others. Alternatively, imagine the whole GPU as one GPU instance that MIG can divide into up to seven smaller GPU instances.

A compute instance, on the other hand, sets the level of compute power available within a GPU instance. A GPU instance can be subdivided into several smaller compute instances to further partition its compute resources.
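The two-level hierarchy can be pictured with a small toy model. This is only a sketch for intuition: the class names `GpuInstance` and `ComputeInstance` and the method names are hypothetical, not part of any NVIDIA API, and the model ignores the fact that real compute instances also come in fixed profile sizes. It assumes that compute instances divide the parent GPU instance's compute slices while sharing its memory.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeInstance:
    """A share of the parent GPU instance's compute slices (toy model)."""
    compute_slices: int


@dataclass
class GpuInstance:
    """A hardware-isolated partition with its own memory and compute slices."""
    name: str
    compute_slices: int
    memory_gb: int
    compute_instances: list = field(default_factory=list)

    def free_compute_slices(self) -> int:
        # Compute slices not yet handed out to a compute instance.
        used = sum(ci.compute_slices for ci in self.compute_instances)
        return self.compute_slices - used

    def add_compute_instance(self, compute_slices: int) -> ComputeInstance:
        # A compute instance can only take slices the GPU instance still has.
        if compute_slices < 1:
            raise ValueError("a compute instance needs at least one slice")
        if compute_slices > self.free_compute_slices():
            raise ValueError("not enough free compute slices in this GPU instance")
        ci = ComputeInstance(compute_slices)
        self.compute_instances.append(ci)
        return ci


# One GPU instance with 3 compute slices and 20GB of memory,
# subdivided into a 1-slice and a 2-slice compute instance.
gi = GpuInstance(name="3g.20gb", compute_slices=3, memory_gb=20)
gi.add_compute_instance(1)
gi.add_compute_instance(2)
print(gi.free_compute_slices())
```

In this model the 20GB of memory belongs to the GPU instance as a whole, so both compute instances see the same memory while each holds its own share of the compute slices.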

MIG partitioning and GPU instance profiles

MIG does not allow GPU instances to be created with an arbitrary number of GPU slices. Instead, it provides several GPU instance profiles to choose from. On a given GPU, you can create multiple GPU instances by mixing and matching these profiles, as long as enough GPU slices remain available.
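To make the slice budget concrete, here is a minimal sketch that checks whether a requested mix of profiles fits on one GPU. It assumes the 40GB A100, with 7 compute slices, 8 memory slices, and the profile names `1g.5gb`, `2g.10gb`, `3g.20gb`, `4g.20gb`, and `7g.40gb`. The function name `fits_slice_budget` is hypothetical. The check is necessary but not sufficient: the real driver also enforces placement rules that this count-only model does not capture.

```python
# Profile name -> (compute slices, memory slices), assuming a 40GB A100.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_slice_budget(requested):
    """Return True if the requested profiles fit within the slice totals.

    Count-only check: it ignores the driver's placement constraints.
    """
    compute = 0
    memory = 0
    for name in requested:
        if name not in A100_40GB_PROFILES:
            raise ValueError(f"unknown profile: {name}")
        c, m = A100_40GB_PROFILES[name]
        compute += c
        memory += m
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits_slice_budget(["2g.10gb"] * 3))                    # three 10GB instances
print(fits_slice_budget(["3g.20gb"] * 2))                    # two 20GB instances
print(fits_slice_budget(["1g.5gb"] * 7))                     # seven 5GB instances
print(fits_slice_budget(["3g.20gb", "3g.20gb", "1g.5gb"]))   # exceeds memory slices
```

The first three calls correspond to the mixes of three 10GB, two 20GB, and seven 5GB instances, and all fit. The last mix uses only seven compute slices but needs nine memory slices, so it is rejected.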

Built for the Developer

Developers won’t have to change the CUDA programming model to get the benefits of MIG. For AI and HPC, MIG works with existing Linux operating systems as well as Kubernetes and containers. MIG enables a new range of AI-accelerated workloads that run on Red Hat platforms from the cloud to the edge.
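One way to see that application code stays unchanged: on Linux, an existing CUDA program can typically be pointed at a single MIG instance just by setting the `CUDA_VISIBLE_DEVICES` environment variable to that instance's UUID before launch. The sketch below assumes this approach. The helper names and the UUID are placeholders (real UUIDs are listed by `nvidia-smi -L`, and their exact format depends on the driver version).

```python
import os
import subprocess

# Placeholder only; substitute a MIG device UUID reported by `nvidia-smi -L`.
EXAMPLE_MIG_UUID = "MIG-00000000-0000-0000-0000-000000000000"


def env_for_mig_device(mig_uuid, base_env=None):
    """Copy the environment and restrict CUDA to one MIG device."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = mig_uuid
    return env


def run_on_mig_device(mig_uuid, command):
    """Run an unmodified command so that it sees only the given MIG device."""
    return subprocess.run(command, env=env_for_mig_device(mig_uuid), check=True)


# Example (hypothetical script name):
# run_on_mig_device(EXAMPLE_MIG_UUID, ["python", "train.py"])
```

The launched process then enumerates the MIG instance as its only CUDA device, so no code inside the program needs to know that MIG is in use.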

MIG is enabled with software provided for NVIDIA A100s. That includes GPU drivers, NVIDIA’s CUDA 11 software, an updated NVIDIA container runtime and a new resource type in Kubernetes via the NVIDIA Device Plugin. With NVIDIA A100 and its software in place, developers will be able to see and schedule jobs on all GPU instances as if they were physical GPUs.
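As an illustration of the Kubernetes resource type, the sketch below builds a pod manifest that requests one MIG instance. It assumes the NVIDIA Device Plugin is deployed with its "mixed" MIG strategy, under which instances are advertised with names such as `nvidia.com/mig-1g.5gb`; other configurations expose different resource names. The function name, pod name, and container image are hypothetical placeholders.

```python
import json


def mig_pod_manifest(pod_name, image, mig_profile="1g.5gb", count=1):
    """Build a pod manifest that requests `count` MIG instances of one profile."""
    # Resource name assumes the device plugin's "mixed" MIG strategy.
    resource = f"nvidia.com/mig-{mig_profile}"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": pod_name,
                    "image": image,
                    "resources": {"limits": {resource: count}},
                }
            ],
        },
    }


# Placeholder image name; replace with a real CUDA application image.
manifest = mig_pod_manifest("mig-demo", "example.com/my-cuda-app:latest")
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML. The scheduler then treats the MIG instance like any other countable device resource.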

Have any questions about MIG or NVIDIA A100?

Contact Exxact Today

NVIDIA A100 GPU with Multi-Instance GPU (MIG)

NVIDIA A100 GPU with Multi-Instance GPU - Getting the multiple bang for your buck!

Imagine being in the grocery store during the early days of the pandemic, restlessly waiting at the back a very long line with a cart full of groceries and only one cashier. You stand silently while some grumble and others gab about trivial matters, wishing that they had more cashiers in place for processing. Now imagine multiple cashiers and self-checkout counters running simultaneously...enough said, right?

That's the pith of the MIG (Multi-Instance GPU) enabled in the NVIDIA Ampere architecture.

What is MIG?

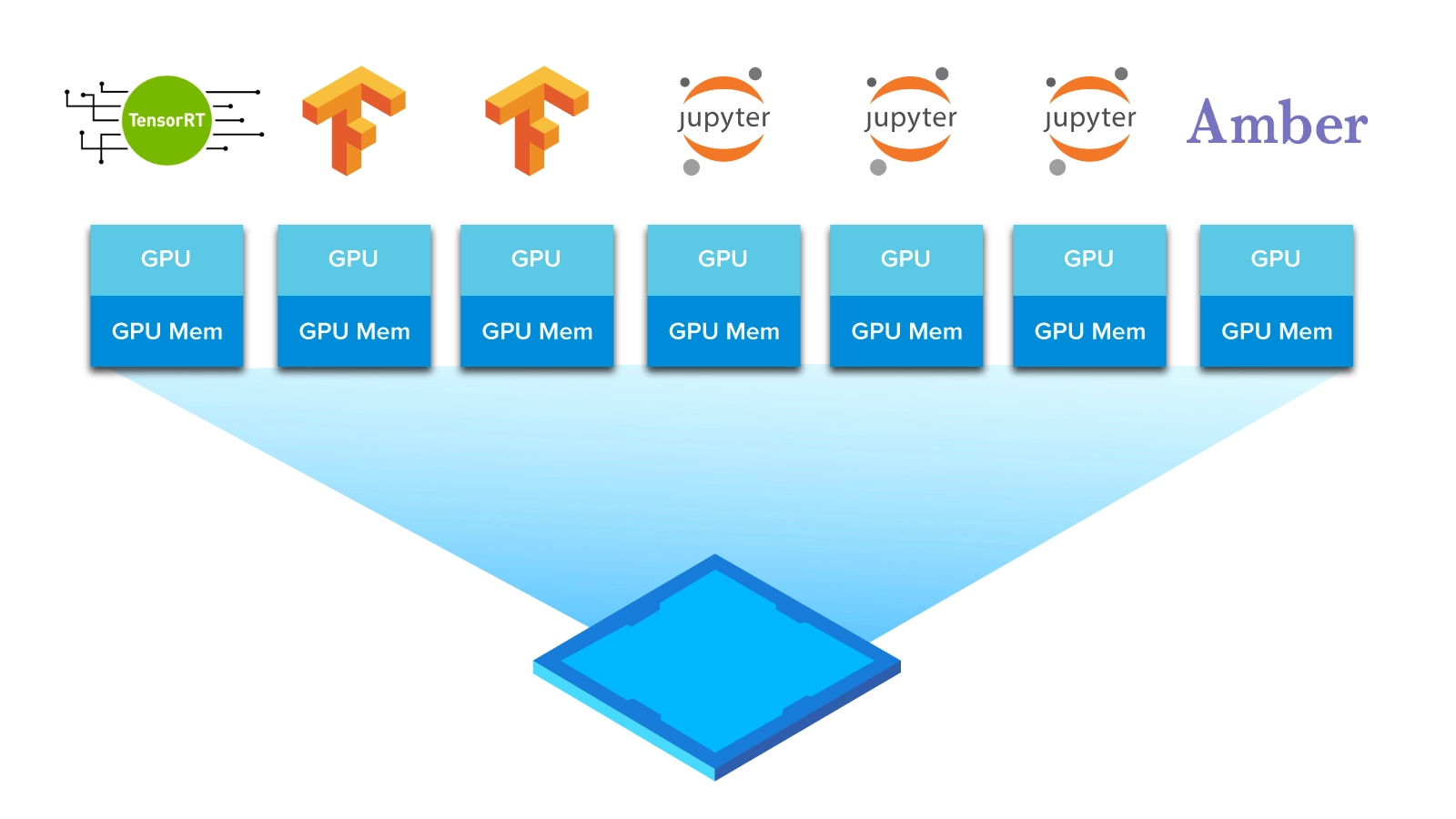

Multi-Instance GPU (MIG) expands the performance and value of each NVIDIA A100 Tensor Core GPU. MIG can partition a single NVIDIA A100 GPU into as many as seven GPU instances, each fully isolated with their own high-bandwidth memory, cache, and streaming multiprocessors. That enables the A100 GPU to deliver guaranteed quality-of-service (QoS) for every job, optimizing utilization and extending the reach of accelerated computing resources to every user at up to 7x higher utilization compared to prior GPUs.

The A100 in MIG mode provides the flexibility to choose many different instance sizes. It can run any mix of up to seven HPC or AI workloads of different sizes, provisioning of right-sized GPU instance for each workload. This is particularly beneficial for workloads that do not fully saturate the GPU’s compute capacity, so you may want to run different workloads in parallel to maximize utilization.

For example: users can create three MIG instances with 10 gigabytes (GB) of memory each, two instances with 20GB or seven with 5GB. With MIG users create the mix that’s right for their workloads.

GPU Instances vs Compute Instances

When you think of GPU instances, think of them as slicing one big GPU into various smaller GPUs, with each GPU instance having HW-level separation and dedicated compute and memory resources. All isolated and protected from faults in the other instances. Or, imagine a whole GPU as one GPU instance, that can be divided into up to seven GPU instances with MIG.

A compute instance on the other hand can configure different levels of compute power within a GPU instance. A GPU instance can be subdivided into many smaller compute instances to further separate its compute resources.

MIG partitioning and GPU instance profiles

MIG does not allow GPU instances to be created with an arbitrary number of GPU slices. Instead, it provides several GPU instance profiles for you to choose from. On a given GPU, you can create multiple GPU instances from a mix and match of these profiles within the availability of GPU slices.

Built for the Developer

Developers won’t have to change the CUDA programming model to get the benefits of MIG. For AI and HPC, MIG works with existing Linux operating systems as well as Kubernetes and containers. MIG enables a new range of AI-accelerated workloads that run on Red Hat platforms from the cloud to the edge.

MIG is enabled with software provided for NVIDIA A100s. That includes GPU drivers, NVIDIA’s CUDA 11 software, an updated NVIDIA container runtime and a new resource type in Kubernetes via the NVIDIA Device Plugin. With NVIDIA A100 and its software in place, developers will be able to see and schedule jobs on all GPU instances as if they were physical GPUs.

Have any questions about MIG or NVIDIA A100?

Contact Exxact Today

.jpg?format=webp)