What Are NVIDIA NGC Containers & How to Get Started Using Them

What is NVIDIA NGC?

Modern science- and enterprise-driven artificial intelligence (AI) and machine learning (ML) workflows are not simple to execute, because a typical task draws on many packages and frameworks at once. The mutual dependencies and interrelationships between these open-source frameworks can make a data scientist's life quite miserable.

One universally powerful, yet deceptively simple, way to solve this problem is to use containers. A container is a portable unit of software that packages an application together with all its dependencies, so it behaves the same way regardless of how the host system is configured (Linux containers still share the host's kernel). This removes the need to build complex environments by hand and simplifies the path from development to deployment.

Docker (for building and running containers) and Kubernetes (for orchestrating containers at scale) are the two open-source technologies that come to mind immediately in this context.

However, building GPU-optimized containers tuned for the most demanding deep learning applications is not a trivial task. To solve this problem, NVIDIA, a pioneer of GPU technology and the deep learning revolution, has built an excellent catalog of specialized containers, grouped together with related models and resources into what it calls NGC Collections.

In this article, we explore their basic usage and some variations.

Interested in a deep learning workstation?

Learn more about Exxact AI workstations starting at $3,700

Basic Features of NVIDIA NGC Containers

These containers can be applied in many use cases and scenarios, and data scientists and hardcore ML engineers alike can use them for various purposes. Some of the key features of the NGC catalog are:

- They represent a truly diverse set of containers spanning a multitude of use cases

- They bundle the popular libraries and dependencies used in typical data science and ML work, making it easy to compile custom applications

- They are extremely portable, allowing a data scientist to develop her applications in the cloud, on-premises, or at the edge

- They reduce time-to-solution by scaling from single-node to multi-node systems in an intuitive manner

Professional data scientists and even hobbyists can use these features to their full advantage. But NGC containers offer more than that: they are also built with large enterprises in mind.

- They are enterprise-ready and scanned for common vulnerabilities and exposures (CVEs)

- They are backed by optional enterprise support for troubleshooting issues with NVIDIA-built software

Use Cases & Varieties

As mentioned above, the NGC containers and catalog cover a wide variety of use cases and application scenarios. For example, they feature both popular deep learning frameworks and high-performance computing (HPC) libraries and toolkits that leverage the accelerated computing of GPU clusters. The following figure illustrates this universe:

Fig 1: Universe of application frameworks and libraries featured in NGC catalog.

Almost all data scientists are familiar with deep learning frameworks like TensorFlow and PyTorch, but containerized HPC is a specialized domain that deserves special mention and some elaboration. Here are some brief facts about these containers.

NAMD

NAMD is a parallel molecular dynamics code designed for high-performance simulation of large biomolecular systems. It uses the popular molecular graphics program VMD for simulation setup and trajectory analysis, but is also file-compatible with AMBER, CHARMM, and X-PLOR. It works well with Pascal (sm60), Volta (sm70), or Ampere (sm80) NVIDIA GPUs.
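The sm60/sm70/sm80 labels are CUDA compute capabilities 6.0, 7.0, and 8.0 (the same targets are quoted for RELION below). As a quick pre-flight check, the sketch below compares each local GPU against those targets using CUDA's compatibility rule for compiled binaries (cubins): a cubin runs on GPUs with the same major version and an equal or higher minor version. It is a sketch under stated assumptions: it ignores PTX, which a container may also ship so the driver can JIT-compile for newer GPUs, and the `nvidia-smi` `compute_cap` query field needs a reasonably recent driver.

```python
import subprocess

# Compute capabilities named in the text: Pascal sm60, Volta sm70, Ampere sm80.
BUILD_TARGETS = [(6, 0), (7, 0), (8, 0)]


def parse_compute_cap(text: str) -> tuple[int, int]:
    """Turn a string such as '8.6' into (8, 6)."""
    major, minor = text.strip().split(".")
    return int(major), int(minor)


def cubin_compatible(gpu_cc: tuple[int, int], targets=BUILD_TARGETS) -> bool:
    """True if some build target has the GPU's major version and a minor version <= the GPU's.

    This is CUDA's rule for compiled cubins; PTX JIT compilation is deliberately ignored.
    """
    return any(major == gpu_cc[0] and minor <= gpu_cc[1] for major, minor in targets)


def local_compute_caps() -> list[tuple[int, int]]:
    """Query every visible GPU (requires a driver that knows the 'compute_cap' field)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=compute_cap", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_compute_cap(line) for line in out.splitlines() if line.strip()]
```

On a GPU host, passing each value from `local_compute_caps()` to `cubin_compatible()` flags any GPU worth double-checking against the container's documentation before pulling a large image.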

GROMACS

GROMACS is a molecular dynamics application designed to simulate Newtonian equations of motion for systems with hundreds to millions of particles. It is designed primarily for biochemical molecules such as proteins, lipids, and nucleic acids, which have many complicated bonded interactions.
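To make "simulating Newton's equations of motion" concrete: each particle i obeys m_i · d²r_i/dt² = −∂U/∂r_i, where U is the potential energy, and an MD engine advances positions and velocities in small time steps. GROMACS's default `md` integrator is a leap-frog scheme, in which velocities live at half steps and positions at whole steps. The toy sketch below applies that same update to a single 1D harmonic bond (U = k·x²/2); it illustrates the idea only and is not GROMACS code.

```python
def leapfrog_harmonic(x0: float, v0: float, k: float, m: float, dt: float, n_steps: int):
    """Integrate m * x'' = -k * x with leap-frog; return (x, v) at t = n_steps * dt."""

    def accel(x: float) -> float:
        return -k * x / m  # a = F / m with F = -dU/dx

    # Leap-frog stores velocity at half steps: start from v(-dt/2) ~ v(0) - a(0) * dt / 2.
    v_half = v0 - 0.5 * dt * accel(x0)
    x = x0
    for _ in range(n_steps):
        v_half += dt * accel(x)  # v(t + dt/2) = v(t - dt/2) + a(t) * dt
        x += dt * v_half         # x(t + dt)   = x(t) + v(t + dt/2) * dt
    # Synchronize the velocity to the final whole step for reporting.
    v = v_half + 0.5 * dt * accel(x)
    return x, v
```

With k = m = 1 the period is 2π, so roughly 628 steps of dt = 0.01 should bring the particle back close to its starting point while the total energy m·v²/2 + k·x²/2 stays nearly constant, which is the property that makes leap-frog attractive for long MD runs.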

GROMACS performs well on NVIDIA Ampere A100, Volta V100, and Pascal P100 GPUs. On the CPU side, a high clock rate is more important than the absolute number of cores, although having more than one thread per rank is desirable. GROMACS supports multiple GPUs in one system but needs several CPU cores for each GPU.

It is best to start with one GPU using all the CPU cores and then scale up to find what performs best for the specific application case.
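The "start with one GPU, then scale up" advice can be scripted as a small benchmark sweep. The sketch below only builds commands; it assumes one thread-MPI rank per GPU with the CPU cores split evenly across ranks, and doubles the GPU count each round (our choice, not a GROMACS rule). `-s`, `-deffnm`, `-ntmpi`, `-ntomp`, and `-nb` are standard `gmx mdrun` options, but check them against your GROMACS version; the input `topol.tpr` and the `bench_*` output names are hypothetical.

```python
def scaling_plan(total_cores: int, max_gpus: int, tpr: str = "topol.tpr") -> list[tuple[dict, list[str]]]:
    """Return (env, argv) pairs for a 1 -> 2 -> 4 ... GPU sweep of `gmx mdrun`."""
    plan = []
    n_gpus = 1
    while n_gpus <= max_gpus:
        threads_per_rank = max(1, total_cores // n_gpus)
        # Expose only the first n_gpus devices to this run.
        env = {"CUDA_VISIBLE_DEVICES": ",".join(str(i) for i in range(n_gpus))}
        argv = [
            "gmx", "mdrun",
            "-s", tpr,                          # run input file (hypothetical name)
            "-deffnm", f"bench_{n_gpus}gpu",    # keep each run's outputs separate
            "-ntmpi", str(n_gpus),              # thread-MPI ranks: one per GPU
            "-ntomp", str(threads_per_rank),    # OpenMP threads per rank
            "-nb", "gpu",                       # offload non-bonded work to the GPU
        ]
        plan.append((env, argv))
        n_gpus *= 2
    return plan
```

Each pair can be run with `subprocess.run(argv, env={**os.environ, **env}, check=True)`, after which the ns/day figures reported in the `bench_*.log` files show where adding GPUs stops paying off.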

RELION

REgularized LIkelihood OptimizatioN (RELION) implements an empirical Bayesian approach to the analysis of electron cryo-microscopy (cryo-EM) data. Specifically, RELION provides methods for refining single or multiple 3D reconstructions as well as 2D class averages.

It consists of multiple steps that cover the entire single-particle analysis workflow: beam-induced motion correction, CTF estimation, automated particle picking, particle extraction, 2D class averaging, 3D classification, and high-resolution 3D refinement. RELION can also process movies generated by direct-electron detectors, apply final map sharpening, and perform local-resolution estimation. It is an important tool for studying the mechanisms of living cells.

Like the other HPC containers above, RELION works well with Pascal (sm60), Volta (sm70), or Ampere (sm80) NVIDIA GPUs, and the NGC container is built to take advantage of these GPU systems. It needs large local scratch disk space, ideally on an SSD or a RAM-backed file system (RamFS). A high CPU clock rate is more important than the number of cores.
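RELION's `relion_refine` can copy particle images to fast local storage through its `--scratch_dir` option, so it helps to pick a scratch location with enough free space before launching a job. The sketch below is a hypothetical helper: the candidate paths and the size threshold are assumptions you would adapt, and inside a container the chosen directory must also be mounted with `-v`. Note that a tmpfs such as `/dev/shm` inside Docker is capped by `--shm-size` (64 MB by default).

```python
import shutil
from pathlib import Path
from typing import Iterable, Optional


def pick_scratch_dir(candidates: Iterable[str], min_free_gb: float) -> Optional[Path]:
    """Return the first existing candidate directory with at least `min_free_gb` GiB free."""
    for path in map(Path, candidates):
        # Skip paths that do not exist on this machine (or inside this container).
        if path.is_dir() and shutil.disk_usage(path).free >= min_free_gb * 1024**3:
            return path
    return None  # caller decides whether to run without a scratch directory
```

For example, `pick_scratch_dir(["/mnt/nvme/scratch", "/tmp"], min_free_gb=500)` (both paths hypothetical) returns the first location that can hold the particle stack, which would then be passed as `--scratch_dir`.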

How to Get Started with NVIDIA NGC Containers

Before a data scientist can run an NGC deep learning framework container, they have to make sure that their local Docker environment supports NVIDIA GPUs.

A detailed guide, "Running a container," is available on the NVIDIA website.

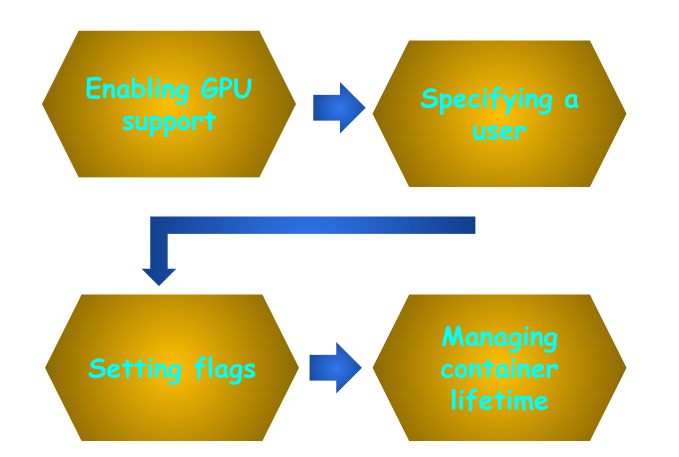

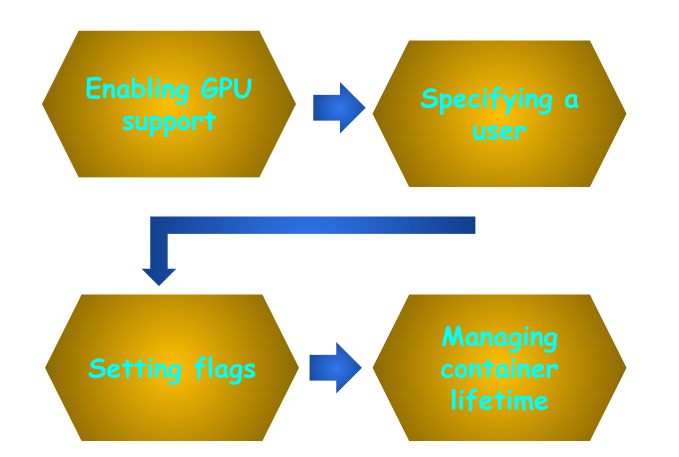
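Which command enables GPU support depends on the installed Docker version: Docker 19.03 and later expose GPUs with the `--gpus` flag (backed by the NVIDIA Container Toolkit on the host), while older installations used the `nvidia-docker` wrapper from nvidia-docker2. The sketch below picks the matching command prefix from `docker --version`; it assumes the toolkit or nvidia-docker2 is already installed, which it does not check.

```python
import re
import subprocess


def docker_version(text: str) -> tuple[int, int]:
    """Extract (major, minor) from output like 'Docker version 24.0.7, build abc1234'."""
    match = re.search(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"cannot parse Docker version from {text!r}")
    return int(match.group(1)), int(match.group(2))


def gpu_run_prefix(version: tuple[int, int]) -> list[str]:
    """Docker >= 19.03 has native --gpus; older setups relied on nvidia-docker2."""
    if version >= (19, 3):
        return ["docker", "run", "--gpus", "all"]
    return ["nvidia-docker", "run"]


def installed_docker_version() -> tuple[int, int]:
    """Ask the local Docker CLI for its version (requires Docker on PATH)."""
    out = subprocess.run(["docker", "--version"], capture_output=True, text=True, check=True).stdout
    return docker_version(out)
```

A quick smoke test is to append any CUDA-based image and the command `nvidia-smi` to `gpu_run_prefix(installed_docker_version())`; if the GPUs are listed from inside the container, Docker's GPU support is working.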

Essentially, the core components of the workflow are:

- Enabling GPU support in Docker

- Specifying a user (-u)

- Setting flags:

  - Remove flag (--rm)

  - Interactive flag (-it)

  - Volumes flag (-v)

  - Port-mapping flag (-p)

  - Shared memory flag (--shm-size)

  - Flag restricting which GPUs are exposed (--gpus, or NVIDIA_VISIBLE_DEVICES with nvidia-docker)

- Managing container lifetime

The flow is visualized below.

Fig 2: NGC container running and managing workflow.
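The workflow above maps onto a handful of standard Docker CLI flags. The sketch below assembles them into argument lists for `subprocess.run`, plus the NGC login and pull steps (NGC's documented login uses the literal username `$oauthtoken` with an NGC API key as the password). The helper names, the `/workspace/data` mount point, and the `YY.MM` image tag are placeholders of ours; look up real tags in the NGC catalog.

```python
from typing import Optional, Sequence, Union

NGC_REGISTRY = "nvcr.io"


def ngc_login_argv() -> list[str]:
    """Username is the literal string $oauthtoken; pipe the NGC API key to stdin."""
    return ["docker", "login", NGC_REGISTRY, "--username", "$oauthtoken", "--password-stdin"]


def pull_argv(image: str) -> list[str]:
    return ["docker", "pull", image]


def ngc_run_argv(
    image: str,
    *,
    gpus: Union[str, Sequence[int]] = "all",
    user: Optional[str] = None,
    host_dir: Optional[str] = None,
    container_dir: str = "/workspace/data",  # hypothetical mount point
    ports: Optional[dict[int, int]] = None,
    shm_size: str = "1g",
    name: Optional[str] = None,
    keep: bool = False,
    command: Optional[Sequence[str]] = None,
) -> list[str]:
    """Assemble a `docker run` argument list from the workflow's flags."""
    argv = ["docker", "run", "-it"]          # interactive: keep stdin open and allocate a TTY
    if not keep:
        argv.append("--rm")                  # remove: delete the container when it exits
    if name:
        argv += ["--name", name]             # a name makes later start/stop/rm easier
    if user:
        argv += ["-u", user]                 # uid:gid, so files written to volumes are not root-owned
    if isinstance(gpus, str):
        argv += ["--gpus", gpus]             # e.g. "all"
    else:
        # Restrict exposed GPUs; Docker expects the device list wrapped in double quotes.
        argv += ["--gpus", '"device=' + ",".join(str(g) for g in gpus) + '"']
    if host_dir:
        argv += ["-v", f"{host_dir}:{container_dir}"]     # volumes
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]   # port mapping
    argv += ["--shm-size", shm_size]         # larger shared memory, e.g. for data-loader workers
    argv.append(image)
    argv += list(command or [])
    return argv
```

For example, `subprocess.run(ngc_run_argv("nvcr.io/nvidia/pytorch:YY.MM-py3", user=f"{os.getuid()}:{os.getgid()}", host_dir=os.getcwd()), check=True)` opens an interactive shell with the current directory mounted. For container lifetime, `keep=True` together with a `name` leaves the container in place after exit, so it can be resumed with `docker start -ai <name>` and cleaned up later with `docker rm <name>`.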

Examples of Popular Containers

The NGC catalog is an ever-expanding universe of specialized containers that data scientists demand and build upon. Some of the popular ones (as listed on the NVIDIA site) are as follows.

AWS AI with NVIDIA

From Amazon SageMaker to EC2 P3 instances, whether you work with a managed platform (PaaS) or with raw infrastructure, this collection is the place to start for combining the power of NGC with Amazon's cloud AI tools.

Here is the link to this collection: https://ngc.nvidia.com/catalog/collections/nvidia:amazonwebservices

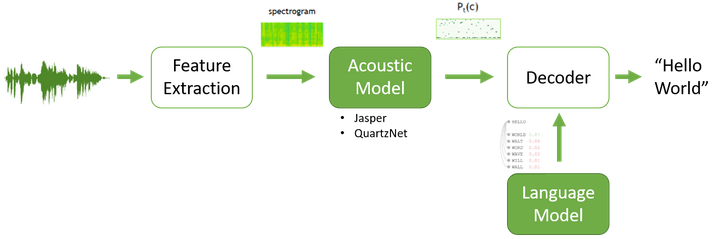

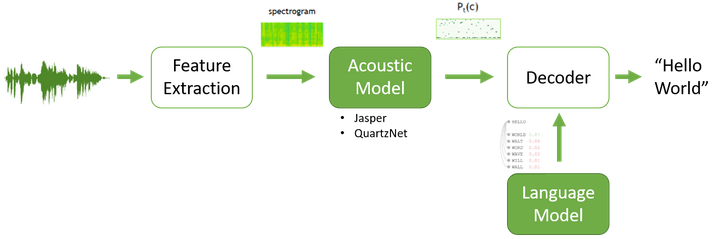

Automatic Speech Recognition

This is a collection of easy-to-use, highly optimized deep learning models for automatic speech recognition. Optimized and carefully selected deep learning examples provide data scientists and software engineers with recipes to train, fine-tune, and deploy state-of-the-art models in this domain and across a wide variety of real-life application areas.

Fig 3: Automatic Speech Recognition collection on NVIDIA NGC.

Here is the link to this collection: https://ngc.nvidia.com/catalog/collections/nvidia:automaticspeechrecognition

Clara Discovery

Clara Discovery is a collection of frameworks, applications, and AI models enabling GPU-accelerated computational drug discovery. Drug development is a cross-disciplinary endeavor.

Clara Discovery can be applied across the drug discovery process and combines accelerated computing, AI, and machine learning in genomics, proteomics, microscopy, virtual screening, computational chemistry, visualization, clinical imaging, and natural language processing (NLP).

DeepStream Container

Analyzing high-volume, high-velocity streaming sensor data from industrial or consumer applications is becoming ever more demanding and ubiquitous in this era of massive digital transformation. NVIDIA’s DeepStream SDK delivers a complete streaming analytics toolkit for AI-based multi-sensor processing and video and image understanding. DeepStream is an integral part of NVIDIA Metropolis, the platform for building end-to-end services and solutions that transform pixels and sensor data into actionable insights.

The SDK features hardware-accelerated building blocks, called plugins, that bring deep neural networks (DNNs) and other complex pre-processing and transformation tasks into a stream processing pipeline. This lets the data scientist focus on building core DNNs and high-value IP rather than designing end-to-end solutions from scratch.
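DeepStream is built on GStreamer, so a pipeline is a chain of plugins joined with `!`. The sketch below assembles a gst-launch-style description for a single H.264 file: decode on the GPU, batch frames with `nvstreammux`, run a DNN with `nvinfer`, then draw its detections with `nvdsosd`. It is an assumption-laden example modeled on DeepStream's sample pipelines, with a `fakesink` so it needs no display; the file names are hypothetical, and the `nvinfer` config file (model, labels, thresholds) must come from your own setup or the DeepStream samples.

```python
def deepstream_pipeline(h264_file: str, infer_config: str, width: int = 1920, height: int = 1080) -> str:
    """Build a gst-launch-1.0 style description of a one-stream DeepStream pipeline."""
    elements = [
        f"filesrc location={h264_file}",      # read an elementary H.264 stream
        "h264parse",
        "nvv4l2decoder",                      # hardware-accelerated decode
        # Link the decoder to request pad sink_0 of the muxer named "m",
        # which batches frames (here a batch of one) for inference.
        f"m.sink_0 nvstreammux name=m batch-size=1 width={width} height={height}",
        f"nvinfer config-file-path={infer_config}",  # DNN inference
        "nvvideoconvert",                     # convert frames for the on-screen display
        "nvdsosd",                            # draw boxes and labels from inference metadata
        "fakesink",                           # discard output; swap in a real sink to view it
    ]
    return " ! ".join(elements)
```

The resulting string can be passed to `gst-launch-1.0` on the command line or to `Gst.parse_launch()` from PyGObject inside a DeepStream container.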

The SDK can use AI models to perceive pixels and analyze metadata, and it integrates from the edge to the cloud. It can be used to build applications across various use cases, including:

- retail analytics,

- patient monitoring in healthcare facilities,

- parking management,

- optical inspection,

- managing logistics and industrial floor operations.

More details about this collection can be found here: https://ngc.nvidia.com/catalog/collections/nvidia:deepstreamcomputervision

Conclusion

NVIDIA NGC containers and their comprehensive catalog are an amazing suite of prebuilt software stacks (built on Docker) that simplify the use of complex deep learning and HPC libraries that need GPU-accelerated computing infrastructure. NVIDIA provides a well-defined, step-by-step guide on how to pull and start using the containers, and following it can make life easy for a wide variety of data scientists, ML engineers, and scientists running HPC simulations.

The application domains covered by the existing containers are truly diverse and ever-expanding. The philosophy of the NGC catalog is "Built by developers for developers," so it should feel close to home for highly technical professionals.

We covered the basics of these containers, provided some useful resource links, and introduced readers to their potential uses. We hope that users can take advantage of these software stacks by pairing them with the appropriate hardware resources and support services as needed.

Have any questions?

Contact Exxact Today
