AMD gave a wild presentation at its CES 2023 keynote, with AMD CEO Lisa Su showcasing and announcing a plethora of fascinating technology: mobile CPUs and GPUs, desktop CPUs, and FPGAs/adaptive SoCs for various applications. AMD saved the best for last by introducing its next generation of data center processors, the AMD Instinct MI300 APU.

AMD is at the forefront of high-performance computing thanks to its accomplishments with the Frontier supercomputer, one of the fastest supercomputers in the world, powered by AMD EPYC CPUs and AMD Instinct MI250X GPUs. Unsurprisingly, Lisa Su and her team are pushing the envelope even further.

A Chiplet-Based Architecture

With constant development and innovation, AMD has been moving its platforms from monolithic chip manufacturing to chiplet-based designs. Monolithic refers to using a single silicon die to house IO, memory, and compute circuitry in one chip (hence mono, meaning one). A chiplet design instead divides those functions, such as compute cores and IO and memory control, across several smaller dies connected through what AMD calls Infinity Fabric.

Chiplet-based designs allow for modularity and flexible manufacturing: because a single defect ruins less silicon on a small die, smaller dies lead to higher yields per wafer. Data center AMD EPYC, prosumer AMD Ryzen Threadripper, and most consumer desktop Ryzen processors are chiplet-based CPUs. AMD uses separate dies, often on different process nodes, for the cores and for IO and memory, which reduces manufacturing costs while still delivering top performance.
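To see why smaller dies help, here is a minimal sketch using the textbook Poisson yield model, Y = exp(-A·D0), where A is die area and D0 is defect density, together with a common first-order dies-per-wafer estimate. The 600 mm² monolithic die, the eight 75 mm² chiplets per product, and the defect density of 0.1 per cm² are hypothetical round numbers chosen for illustration, not AMD or foundry figures.

```python
import math

# Hypothetical illustration only: die areas and defect density are made-up
# round numbers, not AMD or foundry data.


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects: Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """First-order estimate of candidate dies, subtracting partial dies lost at the wafer edge."""
    radius = wafer_diameter_mm / 2.0
    wafer_area = math.pi * radius ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def good_dies_per_wafer(die_area_mm2: float, defects_per_cm2: float) -> int:
    """Gross dies multiplied by the Poisson yield, rounded down."""
    return int(gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2))


if __name__ == "__main__":
    D0 = 0.1  # assumed defects per cm^2
    mono = good_dies_per_wafer(600.0, D0)     # one hypothetical 600 mm^2 die per product
    chiplets = good_dies_per_wafer(75.0, D0)  # eight hypothetical 75 mm^2 chiplets per product
    print(f"monolithic: yield {poisson_yield(600.0, D0):.1%}, {mono} products per wafer")
    print(f"chiplet:    yield {poisson_yield(75.0, D0):.1%}, {chiplets // 8} products per wafer")
```

Under these assumptions the small die yields about 93% versus about 55% for the large one, so a wafer produces roughly twice as many complete products from chiplets. The sketch deliberately ignores costs that push the other way, such as die-to-die interconnect area, packaging, and bonding yield for 3D stacking.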

AMD also recently released its newest gaming GPUs, the Radeon RX 7900 XTX and RX 7900 XT, built on its chiplet-based RDNA 3 GPU architecture, while its current data center accelerator, the Instinct MI250X, is built on the multi-die CDNA 2 architecture. RDNA 3 separates the Graphics Compute Die from the Memory Cache Dies, similar to how AMD CPUs separate the compute cores from IO. Now you may ask, what gives?

What is the AMD Instinct MI300 APU?

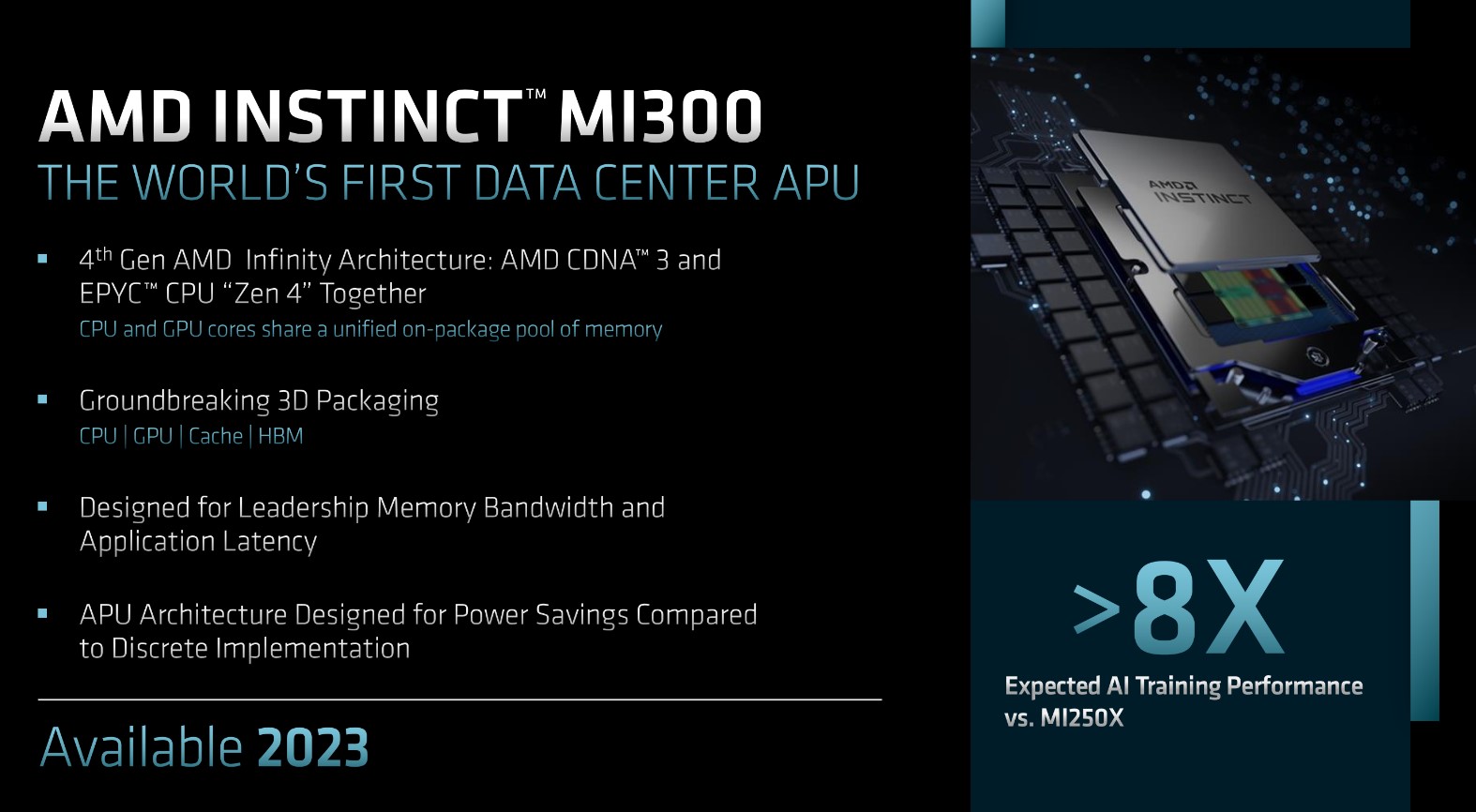

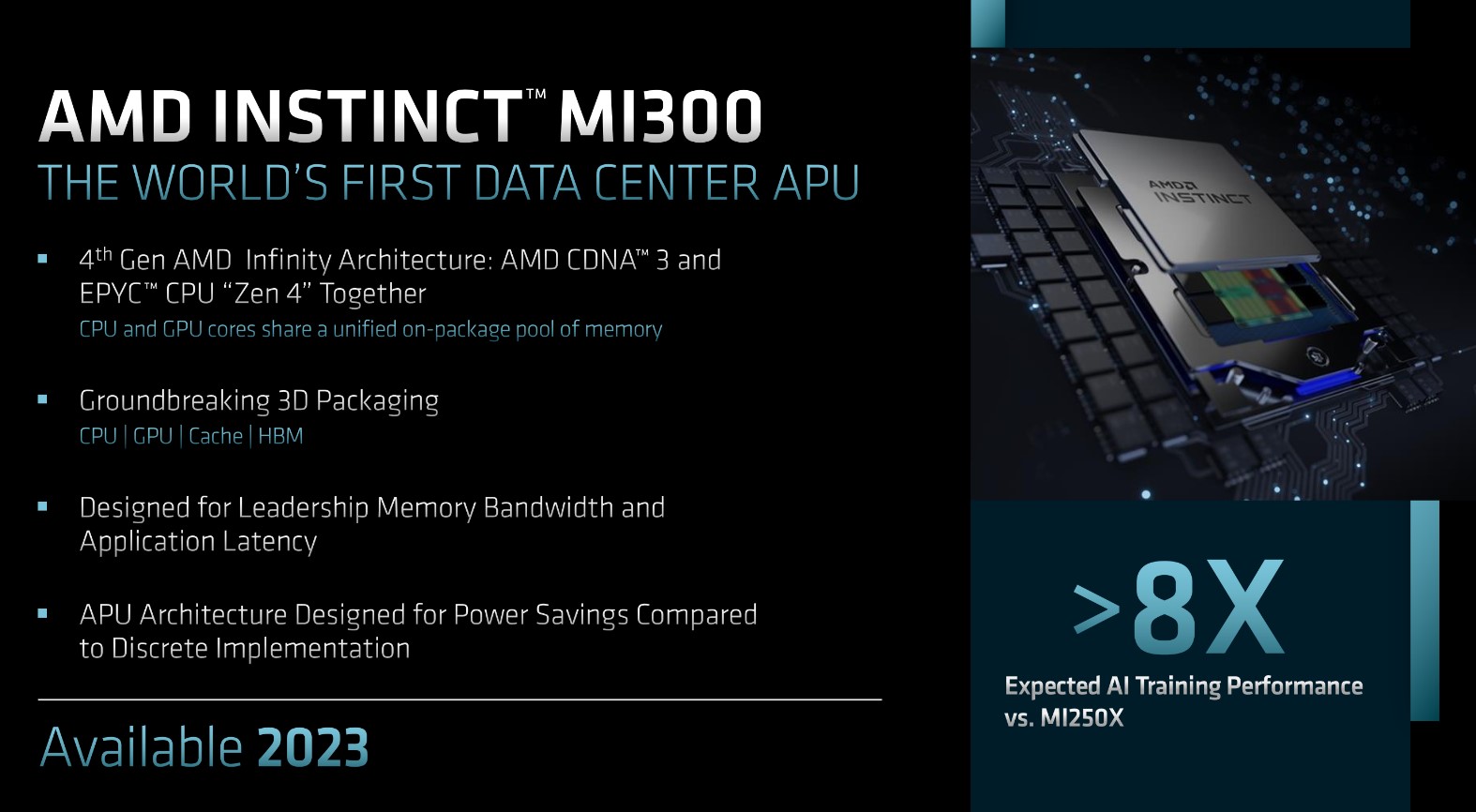

The AMD Instinct MI300 is a true data center and HPC APU (accelerated processing unit) that combines the best of AMD’s CPU and GPU chiplet technologies in one device. Thanks to 3D die stacking and a multi-chiplet disaggregated design, this APU isn’t just a casual CPU with integrated graphics. The AMD Instinct MI300 is an engineering marvel that brings two complex processors into a single package, enabling extremely high interconnect and chip-to-chip bandwidth.

3D Die Stacked CPU & GPU

AMD’s 3D die stacking lets the company push past the limits of silicon by building vertically. AMD bills the MI300 as the world’s first integrated data center CPU and GPU: it pairs AMD’s next-generation CDNA 3 GPU architecture, optimized for AI and ML, with 24 of its newest Zen 4 CPU cores and 128GB of shared HBM3 memory. Nine 5nm compute chiplets are stacked on top of four 6nm IO and cache chiplets, with the HBM surrounding them to complete the all-in-one package.

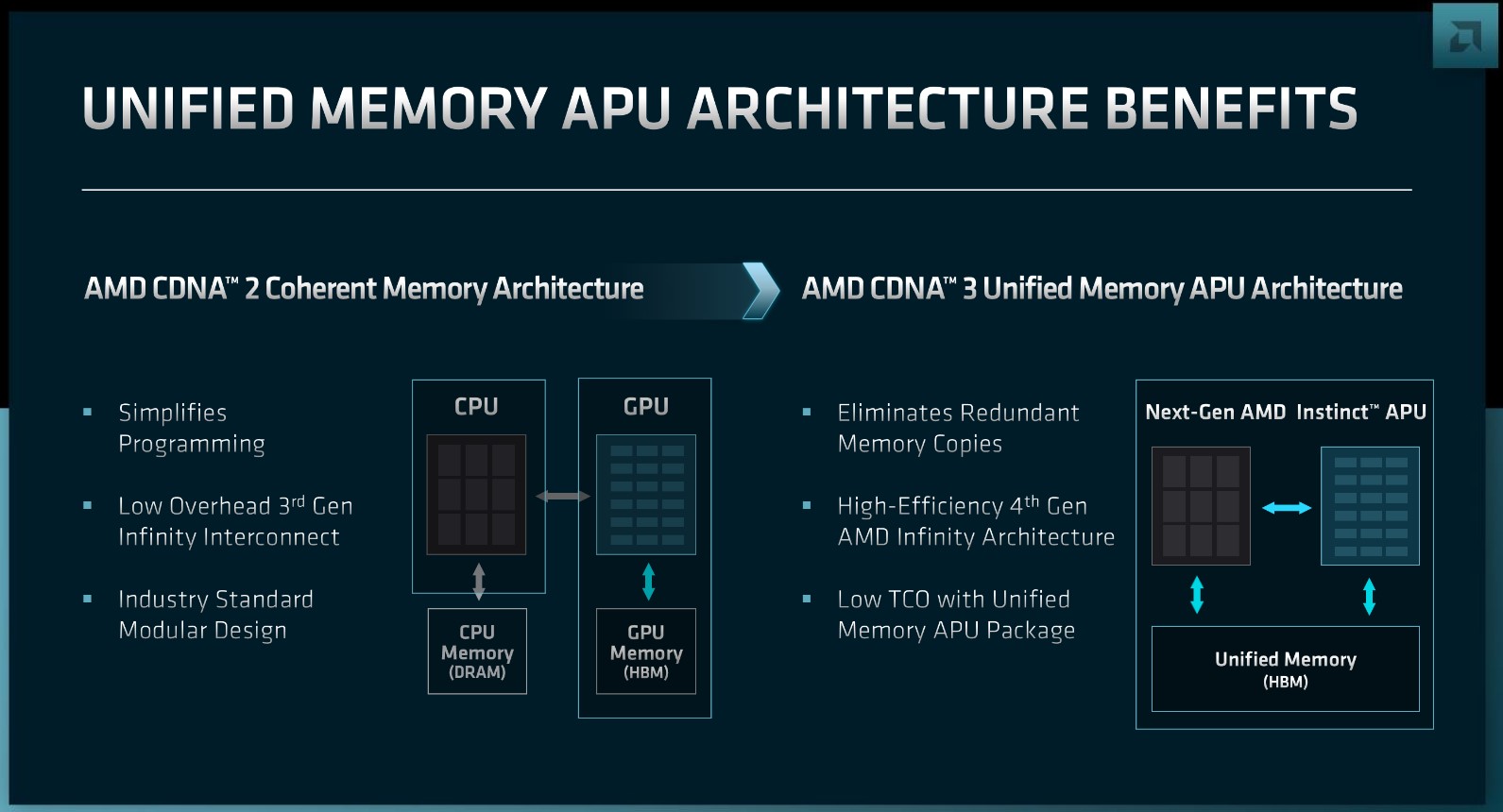

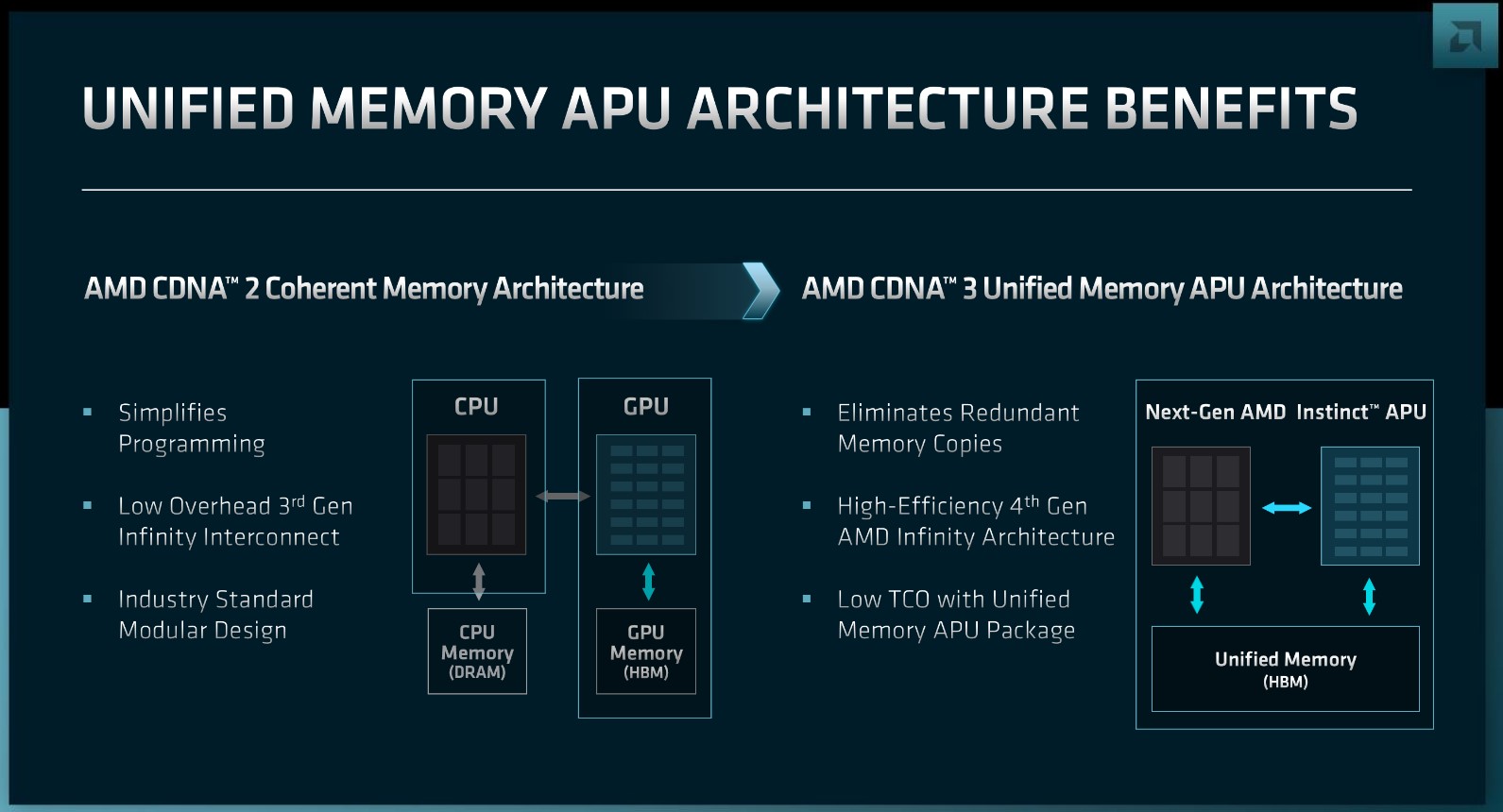

Unified Memory Architecture

AMD’s new design integrates CPU and GPU cores in the same package, allowing them to access a shared, high-speed memory space. This enables fast and efficient communication between the CPU and GPU, allowing each to perform its respective tasks optimally. Additionally, this shared memory simplifies programming for HPC applications: both the CPU and GPU directly access the same physical memory pool, rather than a unified virtual address space that still copies data between separate CPU and GPU memories behind the scenes. Because both processors work out of the same memory, the MI300 avoids redundant data movement, improving computational efficiency and reducing energy use.
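A rough back-of-the-envelope sketch of the staging cost that a shared pool avoids. It assumes a discrete CPU and GPU that copy a working buffer to the accelerator and back once per iteration over a host link; the 64 GB/s link (roughly one direction of PCIe 5.0 x16), the 16 GB buffer, and the 100 iterations are hypothetical values, not MI300 measurements.

```python
# Hypothetical first-order model; link bandwidth, buffer size, and iteration
# count are illustrative assumptions, not MI300 measurements.


def copy_overhead_seconds(buffer_gb: float, link_gb_per_s: float,
                          iterations: int, shared_memory: bool) -> float:
    """Time spent purely on moving a buffer between CPU and GPU memory."""
    if shared_memory:
        # CPU and GPU address the same physical memory, so nothing is staged.
        return 0.0
    # Discrete design: one host-to-device and one device-to-host copy per iteration.
    return 2.0 * iterations * buffer_gb / link_gb_per_s


if __name__ == "__main__":
    discrete = copy_overhead_seconds(16.0, 64.0, 100, shared_memory=False)
    shared = copy_overhead_seconds(16.0, 64.0, 100, shared_memory=True)
    print(f"discrete CPU + GPU: {discrete:.1f} s spent only on copies")
    print(f"shared memory pool: {shared:.1f} s")
```

Treat this as an illustration of the trend rather than a prediction: real unified-virtual-memory systems migrate pages on demand and can overlap copies with compute, so the actual overhead is often smaller than this simple model suggests.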

Leadership in Artificial Intelligence and Machine Learning Efficiency

AMD makes some mind-boggling claims: 8x the AI performance and 5x the AI performance per watt of its current Instinct MI250X. Lisa Su mentioned our favorite recent AI innovation, ChatGPT, a large language model that likely took months to train on thousands of GPUs and consumed millions of dollars’ worth of energy. The performance and efficiency of an APU like the AMD Instinct MI300 could reduce the training time of a large language model (or any complex model, for that matter) from months to weeks and drastically cut energy costs. The consolidated compute the MI300 offers could also support even larger models that have yet to be conceived.
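The two headline multipliers compose in a way that is easy to misread, so here is a minimal sketch of the arithmetic. For the same amount of work, an 8x speedup divides wall-clock time by 8, and because performance per watt equals work divided by energy, a 5x gain divides energy by 5; average power draw therefore rises by 8 / 5 = 1.6x. The 120-day, 1,000 MWh baseline run is entirely hypothetical (ChatGPT’s actual training cost is not public), and the sketch assumes AMD’s multipliers apply uniformly to a whole training job, which real workloads rarely achieve.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical baseline figures; speedup and perf-per-watt defaults are
# AMD's headline claims, assumed here to apply uniformly to the whole job.


@dataclass
class TrainingRun:
    days: float
    energy_mwh: float


def project_run(baseline: TrainingRun, speedup: float = 8.0,
                perf_per_watt_gain: float = 5.0) -> Tuple[TrainingRun, float]:
    """Scale a baseline run by a throughput speedup and a perf-per-watt gain.

    Same work: time scales by 1/speedup, and since perf/watt = work / energy,
    energy scales by 1/perf_per_watt_gain. Average power scales by their ratio.
    """
    projected = TrainingRun(days=baseline.days / speedup,
                            energy_mwh=baseline.energy_mwh / perf_per_watt_gain)
    relative_power = speedup / perf_per_watt_gain
    return projected, relative_power


if __name__ == "__main__":
    run, power = project_run(TrainingRun(days=120.0, energy_mwh=1000.0))
    print(f"{run.days:.0f} days, {run.energy_mwh:.0f} MWh, {power:.1f}x average power")
```

Under these assumptions a four-month job shrinks to about two weeks, matching the "months to weeks" framing, while total energy falls to a fifth even though the faster system draws more power while it runs.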

If being an early adopter of new technology isn’t quite your fashion, Exxact Corporation offers mainstream customizable options for workstations and servers for your AI and Machine Learning needs.

When to Expect Release

AMD has not released full official specifications for the Instinct MI300, but it did give a tentative release window in the second half of 2023. That likely means we will see these APUs in data centers late this year or early next year. We are excited about the next generation of data center silicon and will continue to update this information as the AMD Instinct MI300 approaches release.

NVIDIA is tackling the data center APU market with its own innovation. The NVIDIA Grace Hopper Superchip places the CPU and GPU side by side on the same module, connected by NVLink-C2C, which is not nearly as integrated as the MI300’s approach of combining both compute dies in a single package. Either way, we are excited to see what both Team Red and Team Green bring to the table with new advancements in the HPC and enterprise computing industry.

Have any Questions?

Contact Us Today!

AMD Instinct MI300: The First Integrated Data Center CPU + GPU on a Single Package
