Fine Tuning BERT Large on a GPU Workstation

For this post, we measured fine-tuning performance (training and inference) for the TensorFlow implementation of BERT on NVIDIA RTX A5000 GPUs. For testing, we used an Exxact Valence Workstation fitted with 4x RTX A5000 GPUs, each with 24GB of GPU memory.

Benchmark scripts we used for evaluation came from the NVIDIA NGC Repository BERT for TensorFlow: finetune_train_benchmark.sh and finetune_inference_benchmark.sh

We made slight modifications to the training benchmark script to get the larger batch size numbers.

The scripts run multiple tests on the SQuAD v1.1 dataset using batch sizes 1, 2, 4, 8, 16, and 32 for training, and 1, 2, 4, and 8 for inference. We ran the training tests on BERT Large in 1, 2, and 4 GPU configurations, and used 1 GPU for the inference benchmark. All benchmarks were run with TensorFlow's XLA enabled.
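To make the test matrix concrete, here is a minimal Python sketch that enumerates the nominal configurations described above. The batch sizes and GPU counts come from the text; the precision, sequence-length, and model values are read off the result tables. The function names and dictionary keys are illustrative assumptions, not part of the NGC scripts.

```python
from itertools import product

# Values from the post; names and layout are illustrative only.
TRAIN_BATCH_SIZES = [1, 2, 4, 8, 16, 32]
INFER_BATCH_SIZES = [1, 2, 4, 8]
GPU_COUNTS = [1, 2, 4]
PRECISIONS = ["fp16", "fp32"]
SEQ_LENGTHS = [128, 384]
INFER_MODELS = ["base", "large"]


def training_grid():
    """Nominal training sweep on BERT Large (XLA enabled for every run)."""
    return [
        {"gpus": g, "seq_len": s, "batch_size": b, "precision": p, "use_xla": True}
        for g, s, b, p in product(GPU_COUNTS, SEQ_LENGTHS, TRAIN_BATCH_SIZES, PRECISIONS)
    ]


def inference_grid():
    """Inference sweep on a single GPU (XLA enabled for every run)."""
    return [
        {"model": m, "gpus": 1, "seq_len": s, "batch_size": b, "precision": p, "use_xla": True}
        for m, s, b, p in product(INFER_MODELS, SEQ_LENGTHS, INFER_BATCH_SIZES, PRECISIONS)
    ]


if __name__ == "__main__":
    print(len(training_grid()), "nominal training configurations")
    print(len(inference_grid()), "inference configurations")
```

Not every nominal training configuration appears in the results: the sequence-length 384 runs stop at smaller batch sizes, and the 1x run at batch size 32 is reported as "Did not Run".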

Key Points and Observations

- Performance-wise, the RTX A5000 performed well, and significantly better than the RTX 6000.

- In terms of training throughput, the 4x configuration pulled ahead as the batch size increased, reaching about 437 sent/sec at batch size 32 and sequence length 128, compared with about 147 sent/sec on a single GPU.

- For those interested in training BERT Large, a 2x RTX A5000 system may be a great starting point, leaving room to add cards as budget and scaling needs grow.

- NOTE: In order to run these benchmarks, or be able to fine-tune BERT Large with 4x GPUs, you'll need a system with at least 64GB RAM.

| Component | Specification |
| --- | --- |
| Make / Model | Supermicro AS -4124GS-TN |
| Nodes | 1 |
| Processor / Count | 2x AMD EPYC 7552 |
| Total Logical Cores | 48 |
| Memory | DDR4 512 GB |
| Storage | NVMe 3.84 TB |
| OS | CentOS 7 |
| CUDA Version | 11.2 |
| BERT Dataset | SQuAD v1.1 |

GPU Benchmark Overview

FP = Floating Point Precision, Seq = Sequence Length, BS = Batch Size

Our results were obtained by running the scripts/finetune_inference_benchmark.sh script in the TensorFlow 21.06-tf1-py3 NGC container on 1x RTX A5000 GPU (24GB). Performance numbers (throughput in sentences per second and latency in milliseconds) were averaged over 1024 iterations. Latency is computed as the time taken to process each batch, with batches fed into the model one after another (i.e., no pipelining).
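As an illustration of how per-batch timings can turn into the throughput and latency columns reported below, here is a small Python sketch. It is not the NGC script's own logic: the function names, the nearest-rank percentile method, and the synthetic timings are all assumptions made for the example.

```python
import math


def percentile(sorted_vals, pct):
    """Nearest-rank percentile of an already sorted list (0 < pct <= 100)."""
    rank = max(1, math.ceil(pct / 100.0 * len(sorted_vals)))
    return sorted_vals[rank - 1]


def summarize(batch_times_s, batch_size):
    """Summarize per-batch wall-clock times (seconds) for one configuration."""
    lat_ms = sorted(t * 1000.0 for t in batch_times_s)
    total_s = sum(batch_times_s)
    return {
        # Sentences processed divided by total time, batches run back to back.
        "throughput_sent_per_sec": batch_size * len(batch_times_s) / total_s,
        "latency_avg_ms": sum(lat_ms) / len(lat_ms),
        "latency_p50_ms": percentile(lat_ms, 50),
        "latency_p90_ms": percentile(lat_ms, 90),
        "latency_p95_ms": percentile(lat_ms, 95),
        "latency_p99_ms": percentile(lat_ms, 99),
        "latency_p100_ms": lat_ms[-1],
    }


# Synthetic timings: 99 fast batches plus one slow outlier (hypothetical data).
times = [0.006] * 99 + [0.5]
stats = summarize(times, batch_size=1)

if __name__ == "__main__":
    for name, value in stats.items():
        print(f"{name}: {value:.2f}")
```

With these synthetic timings, the single slow batch pulls the average latency above the 99th percentile. The inference table shows the same pattern: Latency-Average exceeds Latency-99% while Latency-100% is several seconds.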

1x RTX A5000 BERT LARGE Inference Benchmark

| Model | Sequence-Length | Batch-size | Precision | Total-Inference-Time | Throughput-Average(sent/sec) | Latency-Average(ms) | Latency-50%(ms) | Latency-90%(ms) | Latency-95%(ms) | Latency-99%(ms) | Latency-100%(ms) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| base | 128 | 1 | fp16 | 23.01 | 169.35 | 9.11 | 6.09 | 6.61 | 6.8 | 7.35 | 5711.58 |
| base | 128 | 1 | fp32 | 21.15 | 179.34 | 8.69 | 5.78 | 6.26 | 6.39 | 6.73 | 5567.95 |
| base | 128 | 2 | fp16 | 20.87 | 351.11 | 9.13 | 5.84 | 6.4 | 6.56 | 6.98 | 5805.16 |
| base | 128 | 2 | fp32 | 21.02 | 349.67 | 9.14 | 5.86 | 6.39 | 6.54 | 7.01 | 5785.09 |
| base | 128 | 4 | fp16 | 26.31 | 680.97 | 11.61 | 5.9 | 6.32 | 6.48 | 7.13 | 5749.98 |
| base | 128 | 4 | fp32 | 26.61 | 672.74 | 11.74 | 6.02 | 6.5 | 6.69 | 7.58 | 5745.76 |
| base | 128 | 8 | fp16 | 32.91 | 1039.56 | 13.01 | 7.7 | 8.09 | 8.27 | 8.88 | 5855.3 |
| base | 128 | 8 | fp32 | 32.9 | 1037.42 | 13.01 | 7.7 | 8.12 | 8.32 | 8.91 | 5855.67 |
| base | 384 | 1 | fp16 | 17.5 | 175.96 | 11.7 | 5.77 | 6.28 | 6.44 | 7.72 | 6263.5 |
| base | 384 | 1 | fp32 | 17.17 | 176.4 | 11.52 | 5.71 | 6.35 | 6.55 | 7.16 | 6087.21 |
| base | 384 | 2 | fp16 | 25.29 | 258.87 | 18.88 | 7.76 | 8.06 | 8.26 | 9.36 | 6676.43 |
| base | 384 | 2 | fp32 | 25.02 | 256.01 | 18.82 | 7.79 | 8.22 | 8.37 | 9.72 | 6473.25 |
| base | 384 | 4 | fp16 | 29.94 | 338.67 | 23.03 | 11.78 | 12.16 | 12.38 | 13.82 | 6623.03 |
| base | 384 | 4 | fp32 | 30.43 | 337.72 | 23.14 | 11.84 | 12.27 | 12.42 | 13.57 | 6802.34 |
| base | 384 | 8 | fp16 | 41.07 | 402.89 | 31.8 | 19.83 | 20.29 | 20.54 | 21.64 | 6988.95 |
| base | 384 | 8 | fp32 | 41.33 | 401.45 | 31.99 | 19.9 | 20.35 | 20.63 | 21.98 | 7063.42 |
| large | 128 | 1 | fp16 | 40.89 | 104.15 | 15.79 | 10.17 | 10.92 | 11.07 | 11.44 | 11112.47 |
| large | 128 | 1 | fp32 | 37.26 | 103.92 | 15.83 | 10.25 | 11.09 | 11.26 | 11.79 | 11137.36 |
| large | 128 | 2 | fp16 | 37.81 | 193.01 | 16.95 | 10.58 | 11.04 | 11.2 | 12.06 | 11149.47 |
| large | 128 | 2 | fp32 | 37.5 | 194.01 | 16.91 | 10.49 | 11 | 11.18 | 12.26 | 11147.67 |
| large | 128 | 4 | fp16 | 52.43 | 318.74 | 24.24 | 12.71 | 13.16 | 13.31 | 13.8 | 11354.08 |
| large | 128 | 4 | fp32 | 52.81 | 318.7 | 24.25 | 12.74 | 13.19 | 13.38 | 14.04 | 11304.21 |
| large | 128 | 8 | fp16 | 68.8 | 432.67 | 29.34 | 18.61 | 19.13 | 19.32 | 19.88 | 11602.32 |
| large | 128 | 8 | fp32 | 68.41 | 435.77 | 29.16 | 18.46 | 19.01 | 19.16 | 19.89 | 11517.24 |
| large | 384 | 1 | fp16 | 33.8 | 81.64 | 24.05 | 12.45 | 12.81 | 12.96 | 13.95 | 12308.35 |
| large | 384 | 1 | fp32 | 33.75 | 81.83 | 24.07 | 12.42 | 12.86 | 13.03 | 13.55 | 12362.84 |
| large | 384 | 2 | fp16 | 51.77 | 108.18 | 41.33 | 18.6 | 19.17 | 19.37 | 20.66 | 13082.53 |
| large | 384 | 2 | fp32 | 51.5 | 108.37 | 41.29 | 18.62 | 19.13 | 19.27 | 20.16 | 13072.42 |
| large | 384 | 4 | fp16 | 66.02 | 127.29 | 54.69 | 31.5 | 32.08 | 32.28 | 33.48 | 13438.17 |
| large | 384 | 4 | fp32 | 66.1 | 126.8 | 54.82 | 31.58 | 32.13 | 32.34 | 33.75 | 13485.86 |
| large | 384 | 8 | fp16 | 93.68 | 148.62 | 78.67 | 53.86 | 54.45 | 54.66 | 56 | 14180.87 |
| large | 384 | 8 | fp32 | 93.9 | 148.74 | 78.75 | 53.77 | 54.52 | 54.66 | 55.87 | 14261.35 |

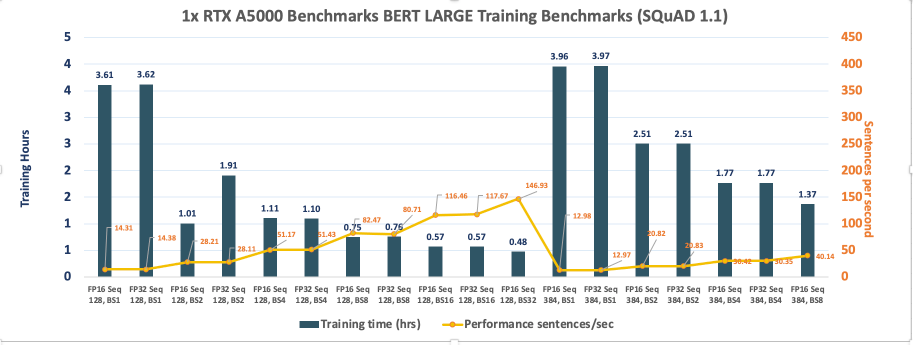

1x RTX A5000 BERT LARGE Training Benchmark

| GPUs | Precision | Sequence-Length | Total-Training-Time | Batch-size | Performance(sent/sec) |
| --- | --- | --- | --- | --- | --- |
| 1 | fp16 | 128 | 12991.12 | 1 | 14.31 |
| 1 | fp32 | 128 | 12932.53 | 1 | 14.38 |
| 1 | fp16 | 128 | 128 | 2 | 28.21 |
| 1 | fp32 | 128 | 6875.02 | 2 | 28.11 |
| 1 | fp16 | 128 | 3992.3 | 4 | 51.17 |
| 1 | fp32 | 128 | 3969.82 | 4 | 51.43 |
| 1 | fp16 | 128 | 2689.2 | 8 | 82.47 |
| 1 | fp32 | 128 | 2718.08 | 8 | 80.71 |
| 1 | fp16 | 128 | 2052.13 | 16 | 116.46 |
| 1 | fp32 | 128 | 2034.82 | 16 | 117.67 |
| 1 | fp16 | 128 | 1732.66 | 32 | 146.93 |
| 1 | fp32 | 128 | 1722.01 | 32 | 147.85 |
| 1 | fp16 | 384 | 14256.77 | 1 | 12.98 |
| 1 | fp32 | 384 | 14279.8 | 1 | 12.97 |
| 1 | fp16 | 384 | 9047.55 | 2 | 20.82 |
| 1 | fp32 | 384 | 9044.82 | 2 | 20.83 |
| 1 | fp16 | 384 | 6365.77 | 4 | 30.42 |
| 1 | fp32 | 384 | 6380.79 | 4 | 30.35 |
| 1 | fp16 | 384 | 4939.75 | 8 | 40.14 |
| 1 | fp32 | 384 | 4900.55 | 8 | 40.44 |
| 1 | fp16 | 384 | 4310.77 | 16 | 47.08 |
| 1 | fp32 | 384 | 4299.06 | 16 | 47.19 |
| 1 | fp16 | 384 | Did not Run | 32 | Did not Run |
| 1 | fp32 | 384 | Did not Run | 32 | Did not Run |

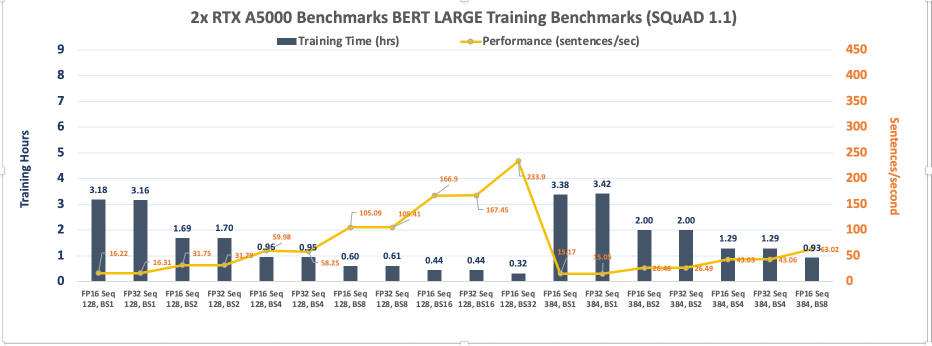

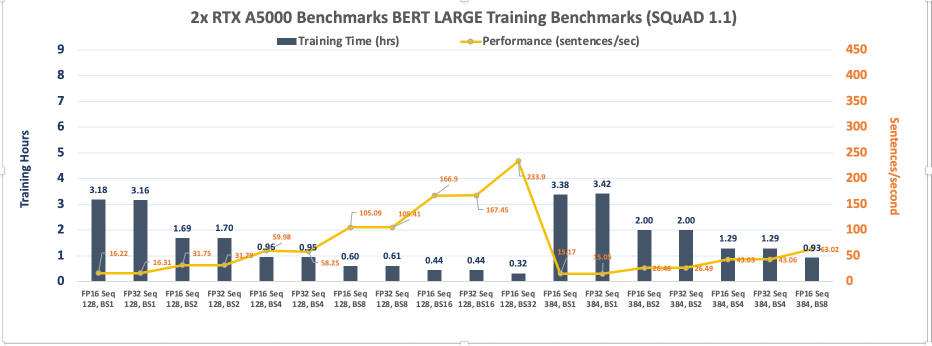

2x RTX A5000 BERT LARGE Training Benchmark

| GPUs | Precision | Sequence-Length | Total-Training-Time | Batch-size | Performance(sent/sec) |
| --- | --- | --- | --- | --- | --- |
| 2 | fp16 | 128 | 11437.17 | 1 | 16.22 |
| 2 | fp32 | 128 | 11376.72 | 1 | 16.31 |
| 2 | fp16 | 128 | 6073.78 | 2 | 31.75 |
| 2 | fp32 | 128 | 6090.61 | 2 | 31.79 |
| 2 | fp16 | 128 | 3453.41 | 4 | 59.98 |
| 2 | fp32 | 128 | 3424.23 | 4 | 58.25 |
| 2 | fp16 | 128 | 2193.27 | 8 | 105.09 |
| 2 | fp32 | 128 | 2187.78 | 8 | 105.41 |
| 2 | fp16 | 128 | 1580.9 | 16 | 166.9 |
| 2 | fp32 | 128 | 1580.07 | 16 | 167.45 |
| 2 | fp16 | 128 | 1160.76 | 32 | 233.9 |
| 2 | fp32 | 128 | 1158.72 | 32 | 233.28 |
| 2 | fp16 | 384 | 12180.88 | 1 | 15.17 |
| 2 | fp32 | 384 | 12294.78 | 1 | 15.05 |
| 2 | fp16 | 384 | 7199.95 | 2 | 26.46 |
| 2 | fp32 | 384 | 7211.68 | 2 | 26.49 |
| 2 | fp16 | 384 | 4630.27 | 4 | 43.03 |
| 2 | fp32 | 384 | 4626.98 | 4 | 43.06 |
| 2 | fp16 | 384 | 3340.13 | 8 | 63.02 |
| 2 | fp32 | 384 | 3336.61 | 8 | 63.02 |

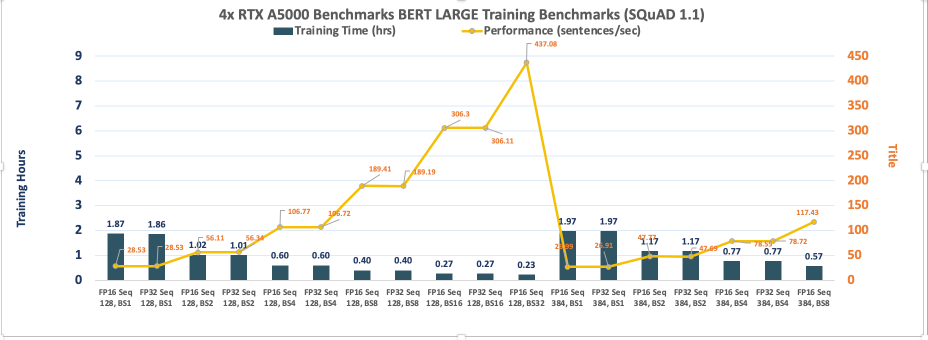

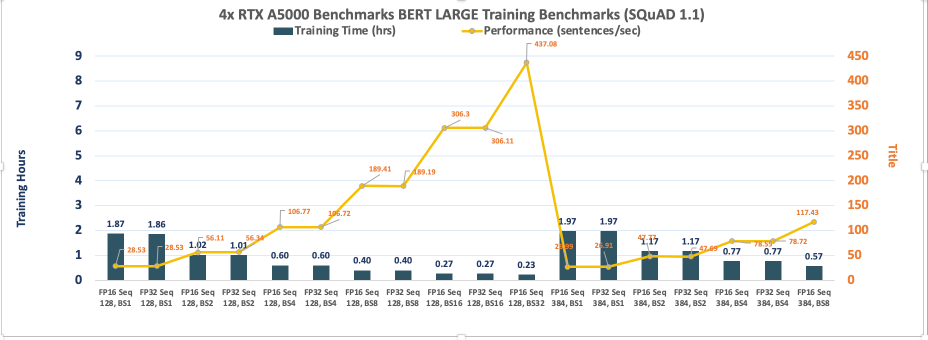

4x RTX A5000 BERT LARGE Training Benchmark

| GPUs | Precision | Sequence-Length | Total-Training-Time | Batch-size | Performance(sent/sec) |
| --- | --- | --- | --- | --- | --- |
| 4 | fp16 | 128 | 6721.29 | 1 | 28.53 |
| 4 | fp32 | 128 | 6720.39 | 1 | 28.53 |
| 4 | fp16 | 128 | 3657.87 | 2 | 56.11 |
| 4 | fp32 | 128 | 3643.79 | 2 | 56.34 |
| 4 | fp16 | 128 | 2161.74 | 4 | 106.77 |
| 4 | fp32 | 128 | 2163.32 | 4 | 106.72 |
| 4 | fp16 | 128 | 1448.3 | 8 | 189.41 |
| 4 | fp32 | 128 | 1447.99 | 8 | 189.19 |
| 4 | fp16 | 128 | 983.24 | 16 | 306.3 |
| 4 | fp32 | 128 | 980.91 | 16 | 306.11 |
| 4 | fp16 | 128 | 815.48 | 32 | 437.08 |
| 4 | fp32 | 128 | 820.45 | 32 | 436.6 |
| 4 | fp16 | 384 | 7088 | 1 | 26.99 |
| 4 | fp32 | 384 | 7109.11 | 1 | 26.91 |
| 4 | fp16 | 384 | 4220.48 | 2 | 47.77 |
| 4 | fp32 | 384 | 4227.16 | 2 | 47.69 |
| 4 | fp16 | 384 | 2777.77 | 4 | 78.55 |
| 4 | fp32 | 384 | 2775.14 | 4 | 78.72 |
| 4 | fp16 | 384 | 2047.37 | 8 | 117.43 |
| 4 | fp32 | 384 | 2039.43 | 8 | 118.1 |

RTX A5000 BERT LARGE Training Benchmark Comparison
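
One way to read the three training tables side by side is to compute speedup and scaling efficiency relative to the single-GPU run. The sketch below does that for the fp16, sequence-length 128, batch-size 32 rows. The throughput values are copied from the tables above; the function name and the definition of efficiency (speedup divided by GPU count) are assumptions chosen for illustration.

```python
# Throughput (sent/sec) from the fp16, sequence-length 128, batch-size 32 rows.
THROUGHPUT = {1: 146.93, 2: 233.9, 4: 437.08}


def scaling(throughput_by_gpus):
    """Return {gpu_count: (speedup, efficiency)} relative to the 1-GPU entry."""
    base = throughput_by_gpus[1]
    return {
        n: (tp / base, tp / base / n)
        for n, tp in sorted(throughput_by_gpus.items())
    }


result = scaling(THROUGHPUT)

if __name__ == "__main__":
    for n, (speedup, efficiency) in result.items():
        print(f"{n} GPU(s): {speedup:.2f}x speedup, {efficiency:.0%} scaling efficiency")
```

For this configuration the 2x system reaches about 1.59x the single-GPU throughput and the 4x system about 2.97x, or roughly 80% and 74% scaling efficiency respectively. Substituting other rows from the tables gives the same comparison at different batch sizes and sequence lengths.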
