In this post, we present deep learning benchmarks for TensorFlow on an Exxact TensorEX server. For these benchmarks, the server was outfitted with 4 NVIDIA V100S GPUs.

We ran the standard “tf_cnn_benchmarks.py” benchmark script from TensorFlow’s GitHub repository on the following networks: ResNet-50, ResNet-152, Inception V3, Inception V4, and GoogLeNet. We compared FP16 to FP32 performance using a batch size of 128, and ran the same tests on 2-GPU and 4-GPU configurations. All benchmarks used ‘vanilla’ TensorFlow settings for FP16 and FP32.

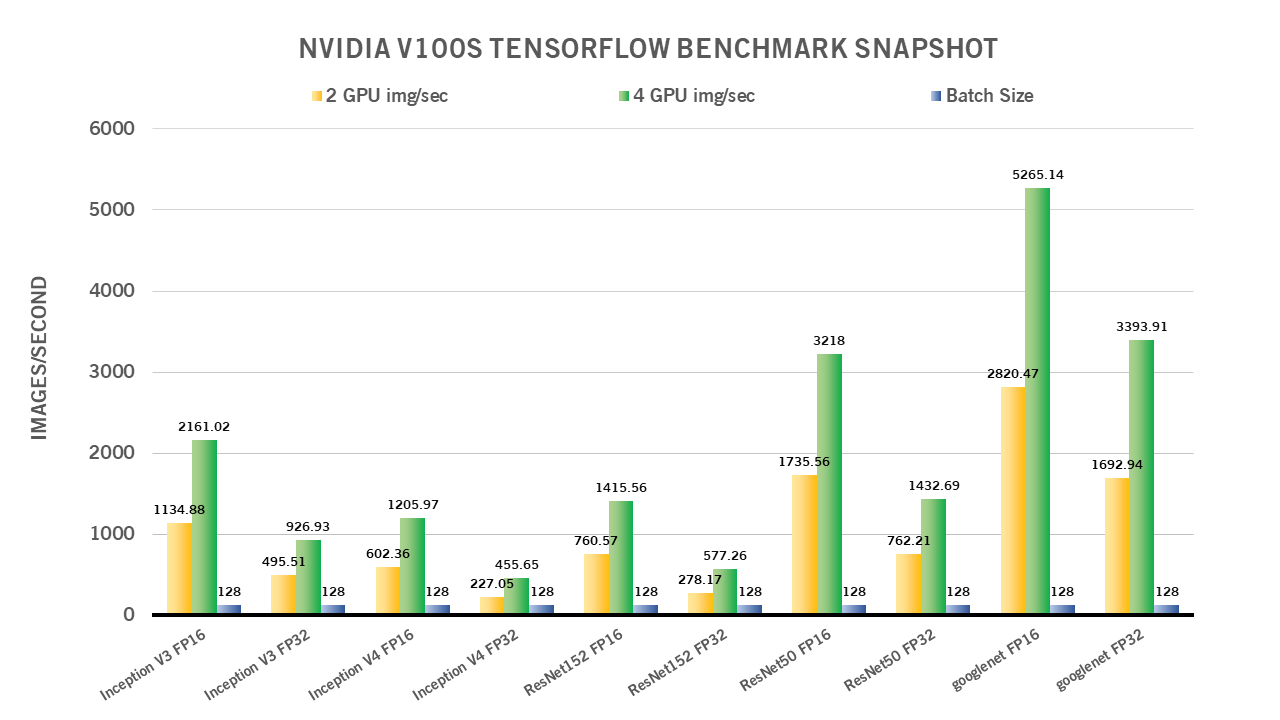

NVIDIA V100S Deep Learning Benchmark Snapshot

As the results show, running FP16 gives a substantial boost in the overall images/sec metric: roughly 1.5x to 2.7x over FP32 in these tests, depending on the network and GPU count. If you’re able to train using FP16 rather than FP32, we recommend doing so.

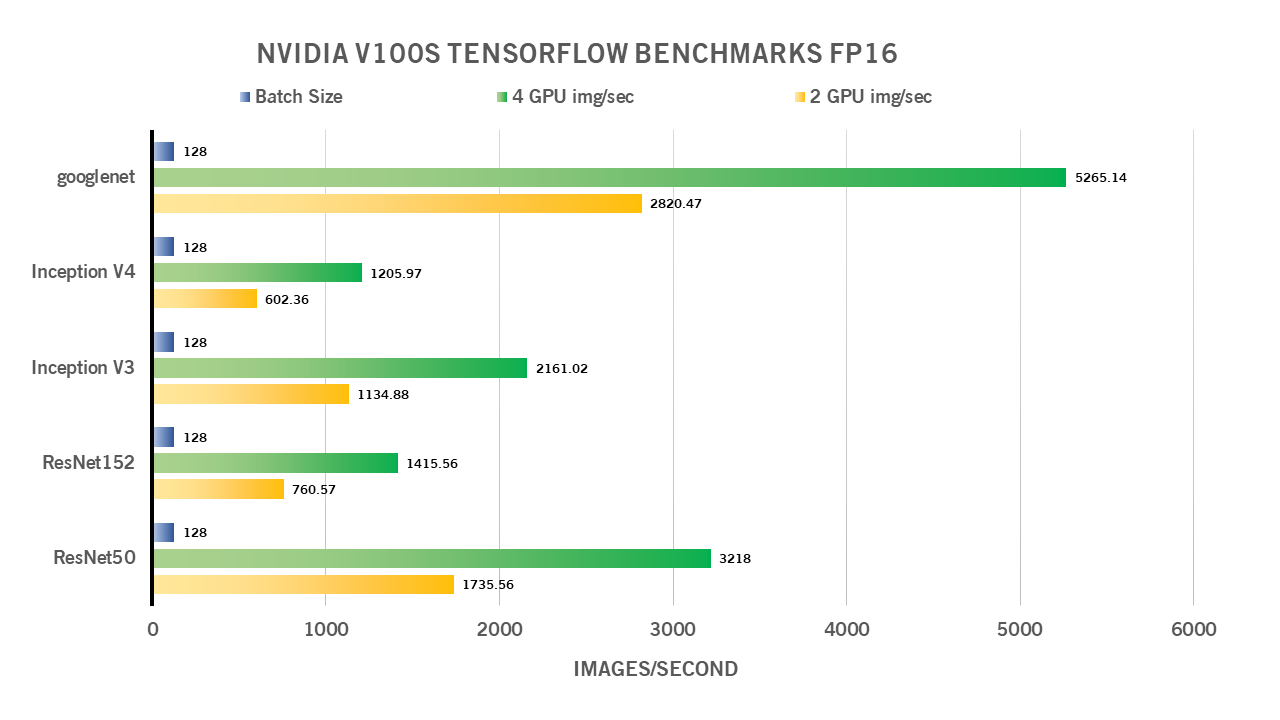

NVIDIA V100S Deep Learning Benchmarks FP16

| Model | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- |
| ResNet50 | 1735.56 | 3218 | 128 |
| ResNet152 | 760.57 | 1415.56 | 128 |
| Inception V3 | 1134.88 | 2161.02 | 128 |
| Inception V4 | 602.36 | 1205.97 | 128 |
| GoogLeNet | 2820.47 | 5265.14 | 128 |
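The 2 GPU and 4 GPU columns can be compared directly to see how well throughput scales when the GPU count doubles. The following is a minimal sketch of that arithmetic using the FP16 figures from the table above. The name `scaling_efficiency` and the definition used (4 GPU throughput divided by twice the 2 GPU throughput) are our own illustrative choices, not something the benchmark script reports.

```python
# FP16 throughput in images/sec, copied from the table above: (2 GPU, 4 GPU).
fp16_img_per_sec = {
    "ResNet50": (1735.56, 3218.0),
    "ResNet152": (760.57, 1415.56),
    "Inception V3": (1134.88, 2161.02),
    "Inception V4": (602.36, 1205.97),
    "GoogLeNet": (2820.47, 5265.14),
}


def scaling_efficiency(two_gpu, four_gpu):
    """Fraction of the ideal 2x gain achieved when going from 2 to 4 GPUs.

    A value of 1.0 means throughput doubled exactly (hypothetical helper).
    """
    return four_gpu / (2.0 * two_gpu)


efficiency = {
    model: scaling_efficiency(two_gpu, four_gpu)
    for model, (two_gpu, four_gpu) in fp16_img_per_sec.items()
}

for model, value in efficiency.items():
    print(f"{model:<13} {value:.1%}")
```

Values close to 100% indicate near-linear scaling from 2 to 4 GPUs. Because these are single short runs of 100 batches, small differences between networks should be read with some caution.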

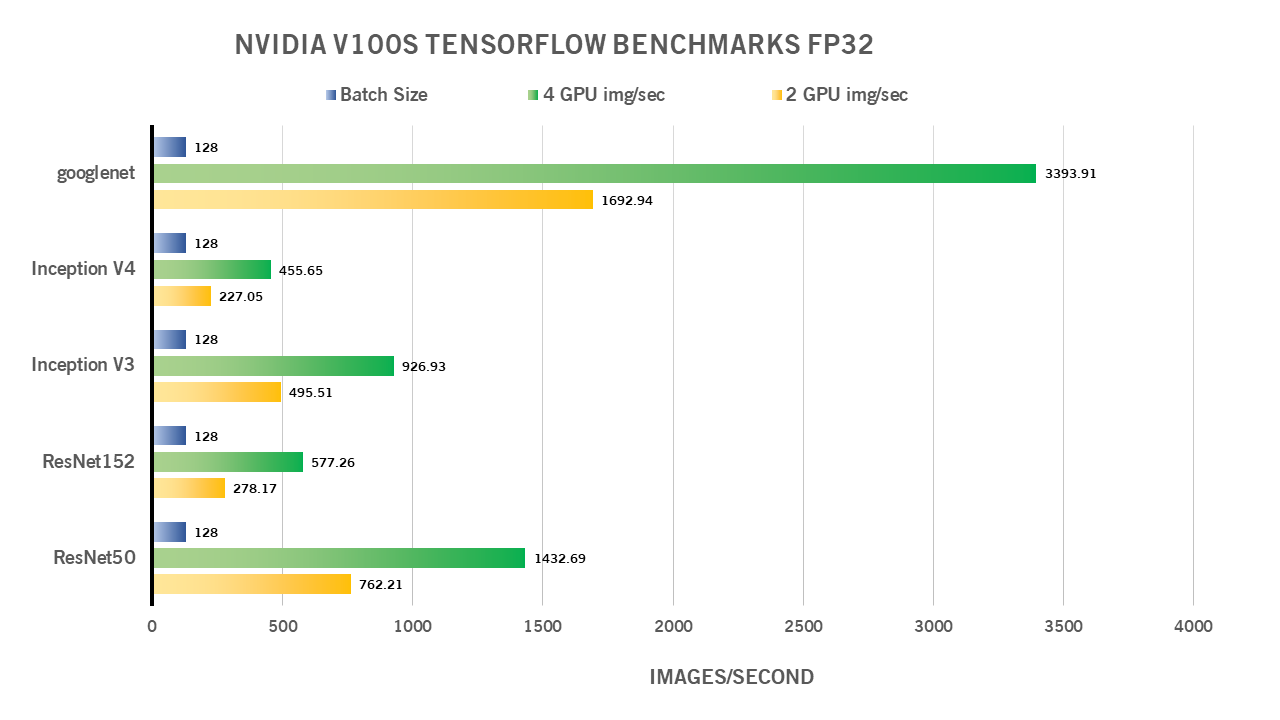

NVIDIA V100S Deep Learning Benchmarks FP32

| Model | 2 GPU img/sec | 4 GPU img/sec | Batch Size |
| --- | --- | --- | --- |
| ResNet50 | 762.21 | 1432.69 | 128 |
| ResNet152 | 278.17 | 577.26 | 128 |
| Inception V3 | 495.51 | 926.93 | 128 |
| Inception V4 | 227.05 | 455.65 | 128 |
| GoogLeNet | 1692.94 | 3393.91 | 128 |
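To put a number on the FP16 advantage, the two result tables can be divided row by row. This is a small sketch of that calculation. The figures are copied from the FP16 and FP32 tables in this post, and the helper name `fp16_speedup` is hypothetical.

```python
# Throughput in images/sec from the tables in this post: (2 GPU, 4 GPU).
fp16 = {
    "ResNet50": (1735.56, 3218.0),
    "ResNet152": (760.57, 1415.56),
    "Inception V3": (1134.88, 2161.02),
    "Inception V4": (602.36, 1205.97),
    "GoogLeNet": (2820.47, 5265.14),
}
fp32 = {
    "ResNet50": (762.21, 1432.69),
    "ResNet152": (278.17, 577.26),
    "Inception V3": (495.51, 926.93),
    "Inception V4": (227.05, 455.65),
    "GoogLeNet": (1692.94, 3393.91),
}


def fp16_speedup(model, column):
    """FP16 throughput divided by FP32 throughput.

    column 0 is the 2 GPU result, column 1 is the 4 GPU result.
    """
    return fp16[model][column] / fp32[model][column]


speedups = {model: (fp16_speedup(model, 0), fp16_speedup(model, 1)) for model in fp16}

print(f"{'Model':<13} {'2 GPU':>7} {'4 GPU':>7}")
for model, (two_gpu, four_gpu) in speedups.items():
    print(f"{model:<13} {two_gpu:>6.2f}x {four_gpu:>6.2f}x")
```

In this data the smallest gain is on GoogLeNet and the largest on the deeper ResNet-152 and Inception V4 networks.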

System Specifications:

| Component | Specification |
| --- | --- |
| Model | Exxact TensorEX Deep Learning Server |
| GPU | NVIDIA Tesla V100S 32 GB PCIe |
| CPU | Intel Xeon Silver 4116 |
| RAM | 128 GB DDR4 |
| SSD (OS) | 120 GB |
| SSD (Data) | 1024.2 GB |
| OS | CentOS Linux 7 |
| NVIDIA Driver | 440.82 |
| CUDA Version | 10.2 |
| Python | 3.6.9 |
| TensorFlow | 20.02-tf1-py3 |
| Docker Image | nvcr.io/nvidia/tensorflow:20.02-tf1-py3 |

Training Parameters

| Parameter | Value |
| --- | --- |
| Dataset | ImageNet |
| Mode | training |
| SingleSess | False |
| Batch Size | 128 |
| Num Batches | 100 |
| Num Epochs | 0.16 |
| Devices | ['/gpu:0']…(varied) |
| NUMA bind | False |
| Data format | NCHW |
| Optimizer | momentum |
| Variables | parameter_server |
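For readers who want to run a similar test matrix, here is a rough sketch of how the parameters above could map onto `tf_cnn_benchmarks.py` command lines. The exact invocation used for this post is not recorded here, so treat this as an assumption: the flag names and model identifiers are those of the public script as we understand it, and `build_command` and `MODEL_FLAGS` are hypothetical names introduced for illustration. The script only builds and prints the commands; it does not run them.

```python
# Assumed mapping from the network names in this post to the script's --model values.
MODEL_FLAGS = {
    "ResNet-50": "resnet50",
    "ResNet-152": "resnet152",
    "Inception V3": "inception3",
    "Inception V4": "inception4",
    "GoogLeNet": "googlenet",
}


def build_command(model, num_gpus, use_fp16):
    """Return an argument list for one benchmark run (hypothetical helper)."""
    return [
        "python",
        "tf_cnn_benchmarks.py",
        f"--model={MODEL_FLAGS[model]}",
        f"--num_gpus={num_gpus}",
        "--batch_size=128",  # assumed to be a per-device value in the script
        "--num_batches=100",
        "--optimizer=momentum",
        "--variable_update=parameter_server",
        "--data_format=NCHW",
        f"--use_fp16={use_fp16}",
    ]


# Every combination tested in this post: 5 networks x 2 GPU counts x 2 precisions.
commands = [
    build_command(model, num_gpus, use_fp16)
    for model in MODEL_FLAGS
    for num_gpus in (2, 4)
    for use_fp16 in (True, False)
]

for command in commands:
    print(" ".join(command))
```

Inside the NGC container listed under System Specifications, each printed line could be run from the directory containing the script. A data directory flag is deliberately left out because the dataset location used for this post is not given here.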

