Hugging Face 🤗: The Best Natural Language Processing Ecosystem You’re Not Using?

If you’ve been even vaguely aware of developments in machine learning and AI since 2018, you have almost certainly heard of the massive progress being made in Natural Language Processing (NLP), due in large part to the development of ever-larger transformer models.

Of course, we don’t mean the extraterrestrial shape-shifting robots of the 1980s franchise of the same name, but rather attention-based language models. These models gained widespread attention in the ML community in 2017 with Vaswani et al.’s seminal paper “Attention Is All You Need,” and the subsequent mass adoption of the Transformer blueprint for NLP in 2018 has been likened by some to the ImageNet moment for language models.

A few years later, Hugging Face has established itself as a one-stop shop for all things NLP, including datasets, pre-trained models, community, and even a course.

Hugging Face is a startup built on top of open source tools and data. Unlike a typical ML business that might offer an ML-enabled service or product directly, Hugging Face focuses on building a community around consolidating best practices and state-of-the-art tools.

While they do offer tiered pricing options for access to premium AutoNLP capabilities and an accelerated inference application programming interface (API), basic access to the inference API is included in the free tier and their core NLP libraries (transformers, tokenizers, datasets, and accelerate) are developed in the open and freely available under an Apache 2.0 License.

Hugging Face is Built on the Concept of Transformers

Obligatory “not those ‘Transformers.’” Photo by Nadeem, under the Pixabay license.

Visit the Hugging Face website and you’ll read that Hugging Face is the “AI community building the future.” Specifically, they are focused on Natural Language Processing (NLP), and within that specialization, Hugging Face is particularly focused on transformer models and their closely related progeny.

Transformer models have been the predominant deep learning models used in NLP for the past several years, with well-known exemplars in GPT-3 from OpenAI and its predecessors, the Bidirectional Encoder Representations from Transformers model (BERT) developed by Google, XLNet from Carnegie Mellon and Google, and many other models and variants besides. Following Vaswani et al.’s seminal paper “Attention Is All You Need” from 2017, the unofficial milestone marking the start of the “age of transformers,” transformer models have gotten bigger, better, and much closer to generating text that can pass for human writing, as well as improving substantially on statistical loss metrics and standard benchmarks.

Model sizes and training datasets have likewise expanded in size and scope. For example, the original Transformer was followed by the much larger Transformer-XL, BERT grew from 110 million parameters in BERT-Base to 340 million in BERT-Large, and GPT-2 (1.5 billion parameters) was succeeded by GPT-3 (175 billion parameters).
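
To put those jumps in perspective, here is a quick back-of-the-envelope calculation using only the parameter counts quoted above. The variable names are just for illustration.

```python
# Parameter counts as quoted in the text above.
params = {
    "BERT-Base": 110e6,
    "BERT-Large": 340e6,
    "GPT-2": 1.5e9,
    "GPT-3": 175e9,
}

# Growth factor from each model to its larger successor.
bert_growth = params["BERT-Large"] / params["BERT-Base"]
gpt_growth = params["GPT-3"] / params["GPT-2"]

print(f"BERT-Base -> BERT-Large: {bert_growth:.1f}x")
print(f"GPT-2 -> GPT-3: {gpt_growth:.0f}x")
```

In other words, the step from GPT-2 to GPT-3 was roughly two orders of magnitude, far larger than the step between the two BERT sizes.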

The current occupant of the throne for largest transformer model (excepting those that use tricks that recruit only a subset of all parameters, like the trillion-plus-parameter Switch Transformers from Google or the equally massive Wu Dao transformers from the Beijing Academy of Artificial Intelligence) is the Megatron-Turing Natural Language Generation model (MT-NLG) from Microsoft and NVIDIA, at 530 billion parameters. And the transformer type of model isn’t just for natural language processing, either.

Transformers have been adapted for protein and DNA sequences (e.g., ProteinBERT), image and video processing with vision transformers, reinforcement learning problems, and many other applications.

Training a large, state-of-the-art transformer model for NLP comes with an estimated price tag ranging up into the tens of millions of dollars, with considerable energy and environmental costs accompanying development.

As a result, training up a large transformer from scratch for every NLP project or business is just not feasible, but that’s no reason that the benefits of these models can’t be shared and applied across a multitude of application areas and segments of society. The answer is pre-trained models, transfer learning, and fine-tuning for specific tasks, and these values form the cornerstone of the Hugging Face ecosystem and community.

Hugging Face follows in the footsteps of Howard and Ruder’s ULMFiT, or Universal Language Model Fine-Tuning approach, perhaps the inaugural foray into transfer learning for natural language processing that made fine-tuning pre-trained models a standard practice.

The Hugging Face Ecosystem

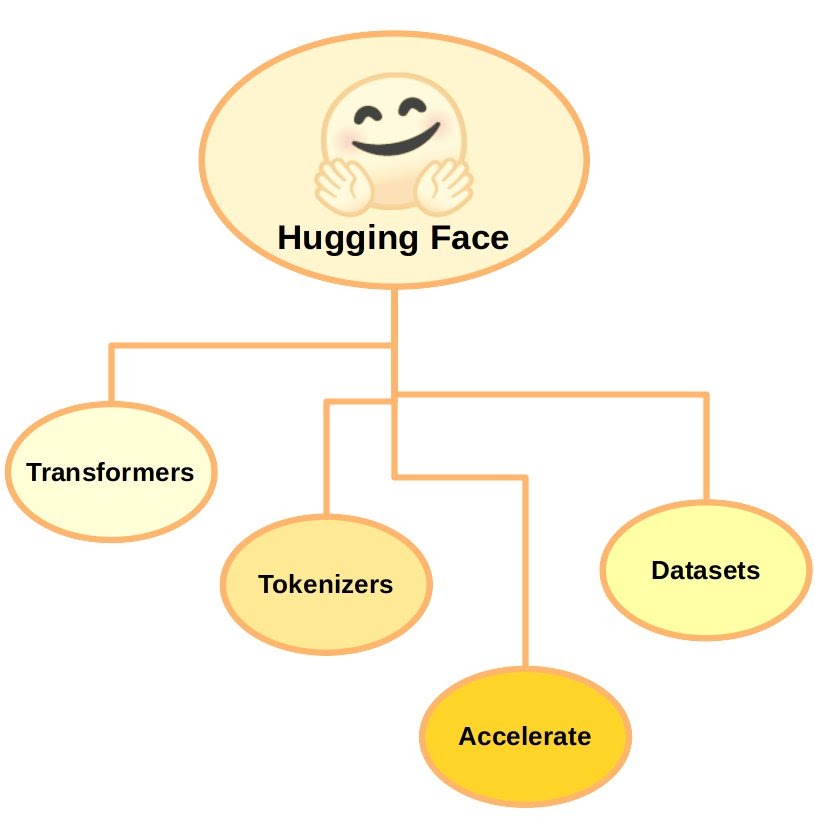

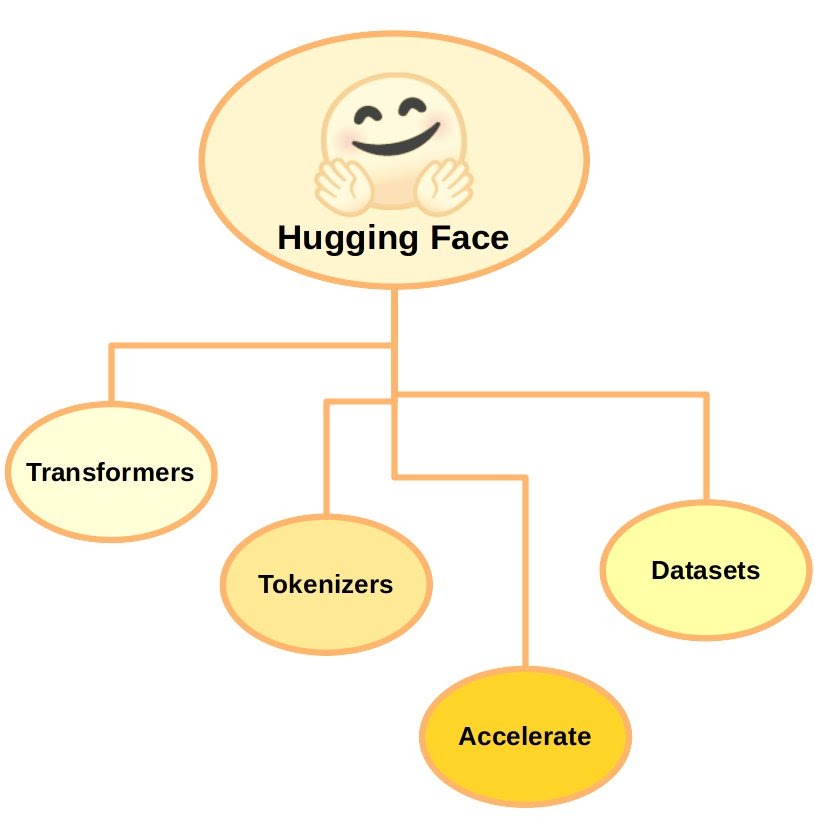

Hugging Face is built around the concept of attention-based transformer models, so it’s no surprise that the core of the 🤗 ecosystem is their transformers library. The transformers library is supported by the accompanying datasets and tokenizers libraries.

Remember that transformers don’t understand text, or any sequence for that matter, in its native form as a string of characters. Rather, sequences of letters or other data must first be converted to the numerical language of vectors, matrices, and tensors. Hence, a tokenizer is an essential component of any transformer pipeline.
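
To make the idea concrete, here is a minimal sketch of a toy word-level tokenizer in plain Python. It is not the Hugging Face tokenizers API: the function names (build_vocab, encode, decode) and the "<unk>" placeholder are made up for this illustration, and real tokenizers for models like GPT-2 work on subword units rather than whole words. The principle is the same, though: text goes in, integer ids come out.

```python
# Toy word-level tokenizer: a hypothetical illustration, NOT the Hugging Face API.

def build_vocab(corpus):
    """Assign an integer id to every distinct whitespace-separated word."""
    vocab = {"<unk>": 0}  # id 0 is reserved for words not seen in the corpus
    for sentence in corpus:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert a string into a list of integer token ids."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]


def decode(ids, vocab):
    """Convert a list of token ids back into a string."""
    id_to_word = {idx: word for word, idx in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)


corpus = ["I spent several hours in the library", "the library was quiet"]
vocab = build_vocab(corpus)

ids = encode("the library was loud", vocab)
print(ids)                 # the unseen word "loud" maps to the <unk> id
print(decode(ids, vocab))
```

A model never sees the string itself, only the list of ids (and, later, the embedding vectors looked up from them).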

Hugging Face also provides the accelerate library, which integrates readily with existing Hugging Face training flows, and indeed with generic PyTorch training scripts, to simplify distributed training on hardware accelerators such as GPUs and TPUs. 🤗 accelerate handles device placement, so the same training script can be used for a dedicated training run with multiple GPUs, on a specialized cloud accelerator like a TPU, or on a laptop CPU for development on the go.

In addition to the transformers, tokenizers, datasets, and accelerate libraries, Hugging Face features a number of community resources. The Hugging Face Hub provides an organized way to share your own models with others, and is supported by the huggingface_hub library. The Hub adds value to your projects with tools for versioning and an API for hosted inference.

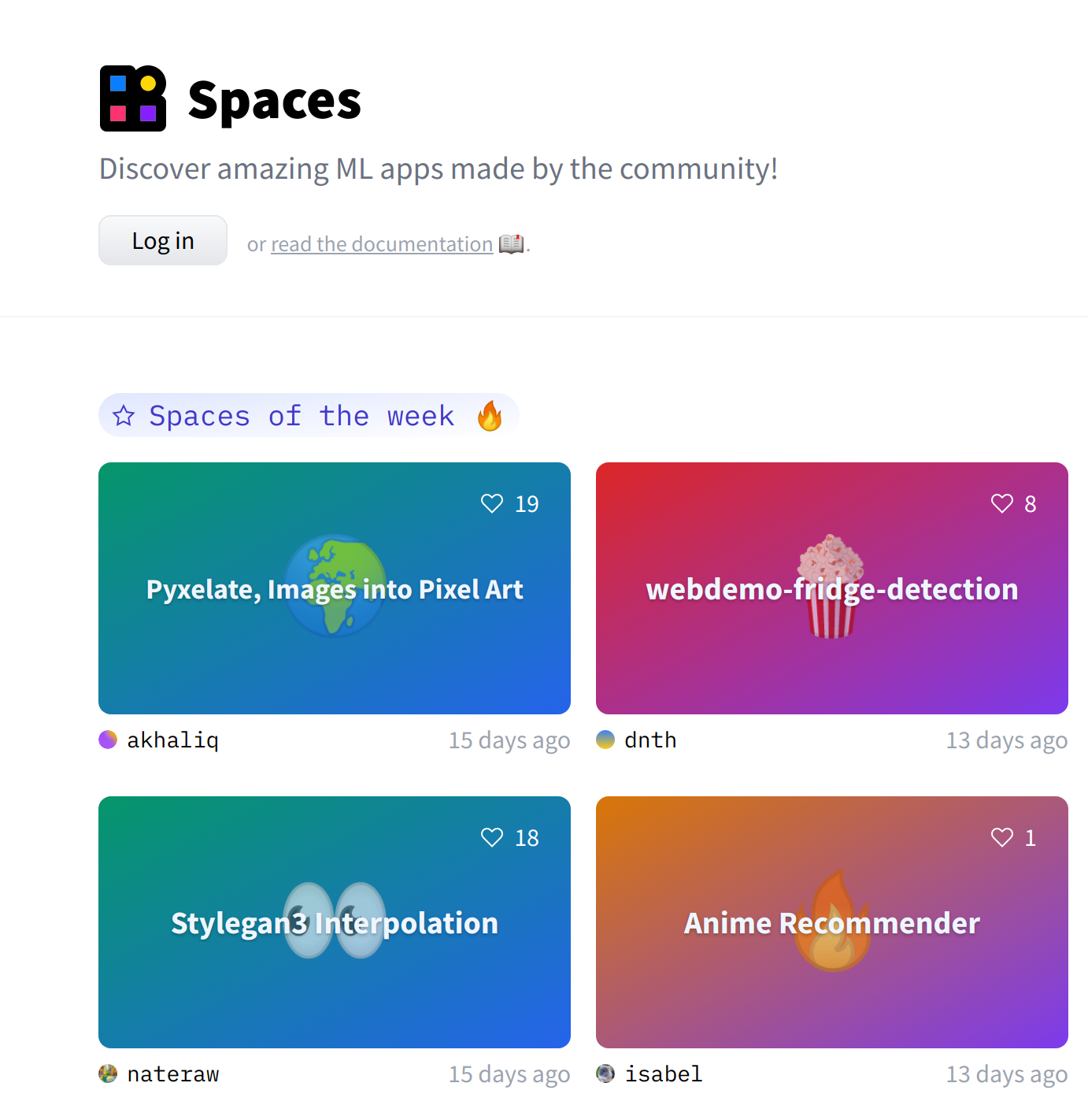

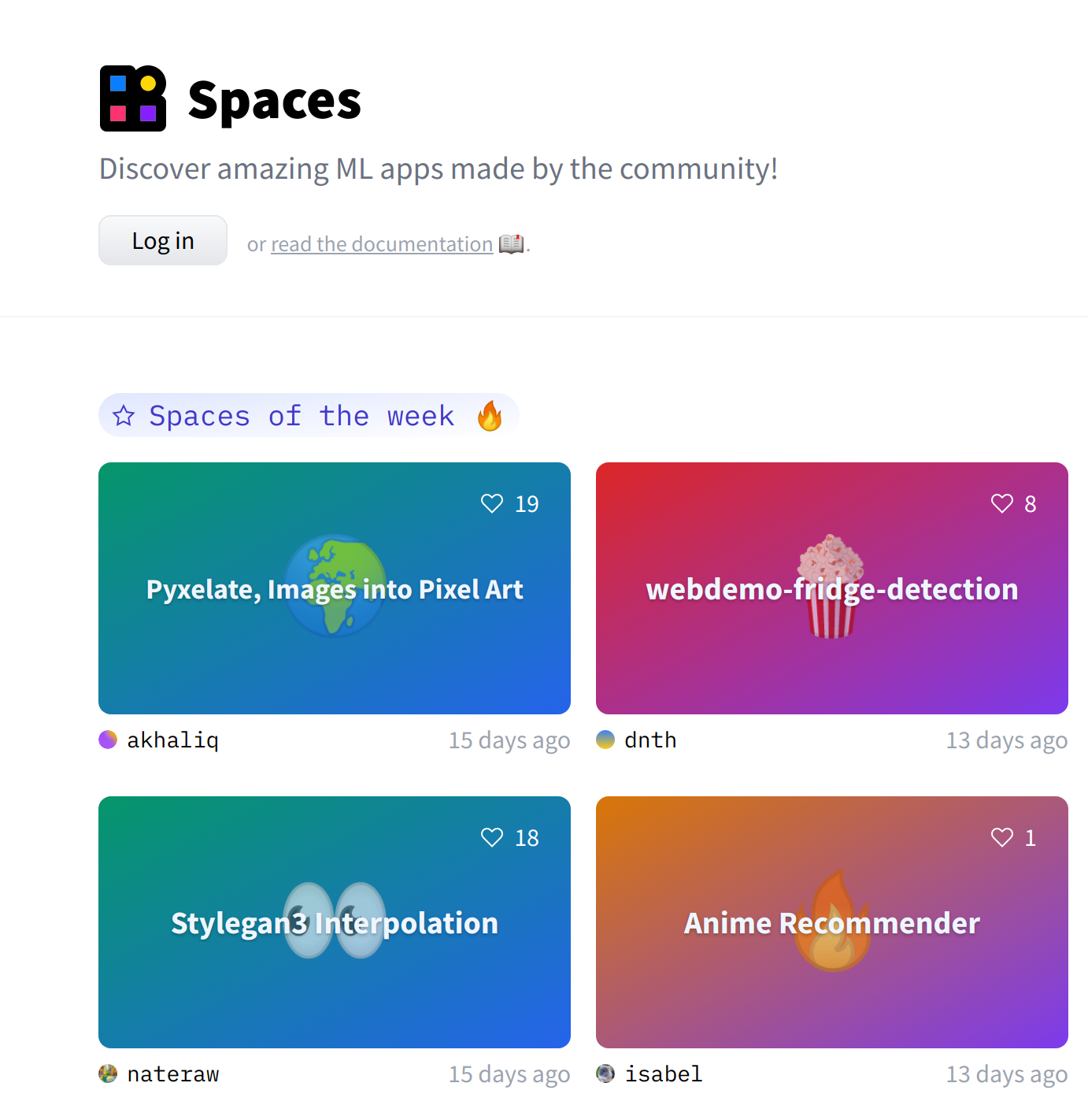

Screenshot of several of the top apps on Hugging Face Spaces.

Additionally, Hugging Face Spaces is a venue for showcasing your own 🤗-powered apps and browsing those created by others. One prominent example of an app that’s popular in Spaces right now is the CodeParrot demo. CodeParrot is a tool that highlights low-probability sequences in code. This can be useful for quickly identifying bugs or style departures like using the wrong naming convention.

Text Generation Demo

Now that we’ve gotten a feel for the libraries and goals of the Hugging Face ecosystem, let’s try a quick demo of generating some text with GPT-2.

Of course, GPT-2 has since been superseded in its lineage by the much more massive GPT-3 and variants like Codex, which is trained to write code and powers GitHub Copilot. Still, the famous "Unicorns" article generated substantial interest at the time, and GPT-2 remains a powerful model well suited to many applications, including this demo.

First, let’s set up a virtual environment and install PyTorch and the transformers library (the tokenizers library is pulled in as a dependency of transformers). I am using virtualenv as my virtual environment manager for Python, but you should use whatever environment manager you normally work with.

# command line

virtualenv huggingface_demo --python=python3

source huggingface_demo/bin/activate

pip install torch

pip install git+https://github.com/huggingface/transformers.git

The Hugging Face tokenizers and transformers libraries make text generation a snap. The demonstration here is based on examples from Hugging Face on the TensorFlow Blog, the Hugging Face Blog, and the Hugging Face Models documentation.

# python

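# PyTorch must be installed because we request "pt" tensors below.
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the pre-trained GPT-2 tokenizer and language model.
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

# GPT-2 has no padding token, so reuse the end-of-sequence token for padding.
model = GPT2LMHeadModel.from_pretrained("gpt2", pad_token_id=tokenizer.eos_token_id)

input_string = "Yesterday I spent several hours in the library, studying"

# Encode the prompt as a PyTorch tensor of token ids.
input_tokens = tokenizer.encode(input_string, return_tensors="pt")

# Greedy decoding: always pick the single most probable next token.
output_greedy = model.generate(input_tokens, max_length=256)

# Convert the generated token ids back into text.
output_string = tokenizer.decode(output_greedy[0], skip_special_tokens=True)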

print(f"Input sequence: {input_string}")

print(f"Output sequence: {output_string}")

# Output

Input sequence: Yesterday I spent several hours in the library, studying

Output sequence: Yesterday I spent several hours in the library, studying the books, and I was amazed at how much I had learned. I was amazed at how much I had learned. I was amazed at how much I had learned.

At the start, the text above seems plausible, but what about that loop that looks like it might go on forever? It does indeed keep repeating until generation stops at the max_length limit of 256 tokens. Feel free to try a few other input strings to check whether they enter a nonsensical loop like the first example.
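
Why does picking the most probable token at every step get stuck like this? The following toy sketch shows the mechanism in plain Python. The next-token table is invented for illustration, and it conditions only on the previous token, whereas GPT-2 conditions on the whole preceding context. Even so, the failure mode is the same: once the most probable continuations form a cycle, greedy decoding never leaves it.

```python
# Hypothetical next-token probabilities, keyed by the previous token.
# These numbers are made up for illustration; they do not come from GPT-2.
NEXT_TOKEN_PROBS = {
    "studying": {"the": 0.6, "and": 0.4},
    "the": {"books": 0.7, "papers": 0.3},
    "books": {"and": 0.8, ".": 0.2},
    "and": {"the": 0.9, "papers": 0.1},
    "papers": {".": 1.0},
}


def greedy_decode(start, max_length):
    """Repeatedly append the single most probable next token."""
    tokens = [start]
    while len(tokens) < max_length and tokens[-1] in NEXT_TOKEN_PROBS:
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        tokens.append(max(candidates, key=candidates.get))
    return tokens


print(" ".join(greedy_decode("studying", max_length=10)))
```

In this toy table the end-of-sentence token "." is never the most probable choice, so the output cycles through "the books and" until the length limit cuts it off.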

We can combine beam search with a constraint on repetition to make the output more sensible and, hopefully, get rid of the inane repetition problem. Instead of committing to the single most probable token at each step, beam search keeps several candidate sequences in play (the number is set with the num_beams argument) and returns the most probable sequence overall. We also set no_repeat_ngram_size, which prevents any n-gram of the given size from appearing more than once in the output.
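
To see what these two options do, here is a simplified sketch of beam search with n-gram blocking on a toy model in plain Python. It is not the transformers implementation: the probability table and the helper names (repeats_ngram, beam_search) are invented for this example, the toy model looks only at the previous token, and scores are raw summed log-probabilities with no length normalization.

```python
import math

END = "."  # token that marks a finished sequence in this toy model

# Hypothetical next-token probabilities, keyed by the previous token.
NEXT_TOKEN_PROBS = {
    "studying": {"the": 0.6, "and": 0.4},
    "the": {"books": 0.7, "papers": 0.3},
    "books": {"and": 0.8, ".": 0.2},
    "and": {"the": 0.9, "papers": 0.1},
    "papers": {".": 1.0},
}


def repeats_ngram(tokens, candidate, n):
    """Return True if appending candidate would repeat an n-gram already in tokens."""
    start = len(tokens) - (n - 1)
    if start < 0:
        return False  # sequence is too short to form an n-gram yet
    new_ngram = tuple(tokens[start:]) + (candidate,)
    seen = {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
    return new_ngram in seen


def beam_search(start, num_beams, max_length, no_repeat_ngram_size=0):
    """Keep the num_beams most probable partial sequences at every step."""
    beams = [([start], 0.0)]  # (tokens, cumulative log-probability)
    for _ in range(max_length - 1):
        candidates = []
        for tokens, score in beams:
            if tokens[-1] == END:
                candidates.append((tokens, score))  # finished: carry over unchanged
                continue
            for token, prob in NEXT_TOKEN_PROBS.get(tokens[-1], {}).items():
                if no_repeat_ngram_size and repeats_ngram(tokens, token, no_repeat_ngram_size):
                    continue  # skip continuations that would repeat an n-gram
                candidates.append((tokens + [token], score + math.log(prob)))
        if not candidates:
            break
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = candidates[:num_beams]
        if all(tokens[-1] == END for tokens, _ in beams):
            break  # every surviving beam has finished
    return beams[0][0]


# One beam with no blocking behaves like greedy decoding and loops.
print(" ".join(beam_search("studying", num_beams=1, max_length=10)))
# Blocking repeated 2-grams forces the single beam out of the loop.
print(" ".join(beam_search("studying", num_beams=1, max_length=10, no_repeat_ngram_size=2)))
# Several beams plus blocking return the most probable finished sequence.
print(" ".join(beam_search("studying", num_beams=3, max_length=10, no_repeat_ngram_size=2)))
```

Because this sketch scores sequences by raw log-probability, it tends to favor short outputs. A production implementation offers additional controls for that, so treat the toy as an aid to intuition rather than a reference.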

Replace the model.generate line above with the following, and pass output_beam[0] instead of output_greedy[0] to tokenizer.decode:

output_beam = model.generate(input_tokens, max_length=64, num_beams=32, no_repeat_ngram_size=2, early_stopping=True)

The output becomes:

Output sequence: Yesterday I spent several hours in the library, studying the books, reading the papers, and listening to the music. It was a wonderful experience.

I have to say that I am very happy with the book. I think it is a very good book and I would recommend it to anyone who is interested in learning more about the world of science and technology. If you are looking for a book that will help you to understand what it means to be a scientist, then this book is for you.

That’s a lot better! It is conceivable that a human might write similar text, though it is still a bit bland and, although we get the impression that the “author” clearly enjoyed their trip to the library, we have no idea what book they are recommending.

For additional options and tricks to make the text more compelling, check out the Hugging Face docs.

Conclusions

With over 55 thousand stars on their transformers repository on GitHub, it’s clear that the Hugging Face ecosystem has gained substantial traction in the NLP applications community over the last few years.

With a recent $40 million USD Series B funding round and acquisition of nascent ML startup Gradio, Hugging Face has substantial momentum. Hopefully, the minimal demonstration of text generation in this article is a compelling demo of how easy it is to get started.

The considerable success Hugging Face has had in widespread adoption so far is also notable as an example of a business model that pairs an open source, community-focused ecosystem with premium services, and it will be interesting to see how this plays out in the future.

So if you’ve got a skull full of NLP startup ideas but you’ve been intimidated by the estimated $10 million USD and up cost of training your own state-of-the-art models from scratch, give the Hugging Face transformers library a try. It’s only a pip install command away.


