Using Artificial Intelligence for Chip Design & Manufacturing

How AI Can Help Build New AI Chips

CPU architecture has always been a hot topic. This is especially true given the recent rise of Systems on a Chip (SoCs), Apple's ARM-based M1 chips, and the worldwide chip shortage.

In the last few years, there has been a strong push to incorporate AI into chip design and manufacturing. AI has already proven useful in many areas, such as healthcare, finance, and recommendation systems.

Given the importance of efficient, advanced chip design and manufacturing, it makes sense to apply the latest technologies to improve them.


Current Chip Design Challenges

Before discussing how AI can be used in chip design, we first have to understand the challenges involved in designing chips (and then how AI can help address them).

Transistors are the main building blocks of chips. Designing an efficient chip involves laying out those transistors as efficiently as possible in terms of space and arrangement. Although there are rules to guide this optimization, it is still regarded as a skill gained mainly through experience.

This means that companies ultimately have to rely on highly experienced (and expensive) chip designers. When it comes to “simulating” experience, AI is the go-to technology: computers can crunch through a huge number of previous chip designs and, given a suitable neural network, "learn" the differences and variations between them.

When arranging transistors, designers have to optimize how they are connected in order to reduce the overall size of the chip (important for compact devices such as laptops), reduce power consumption, and, of course, increase overall performance.

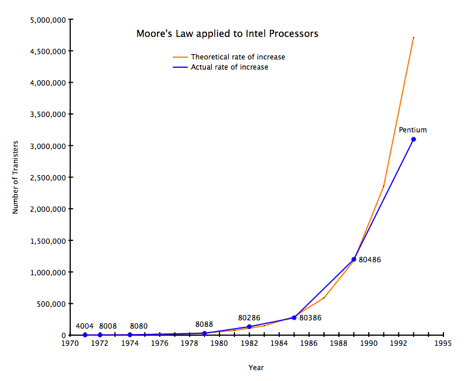

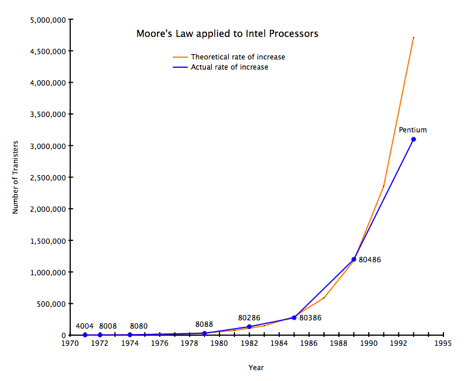

This is becoming more and more difficult because of Moore's Law, the well-known observation that the number of transistors on a chip doubles roughly every two years. Since that growth is exponential, even if the layout problem is manageable today, it is likely to become too costly at some point.

Source: Wikiversity
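To make the exponential growth concrete, here is a minimal Python sketch of the doubling rule, N(t) = N0 · 2^(t / 2), where t is in years. The starting count is hypothetical, and since Moore's Law is an empirical trend rather than a physical law, the projection is only a rough illustration.

```python
def transistor_count(initial_count: float, years: float, doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming it doubles every `doubling_period_years`."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1,000,000 transistors.
for years in (0, 10, 20):
    print(years, f"{transistor_count(1_000_000, years):,.0f}")
```

Every ten years multiplies the count by 2^5 = 32, so a layout problem that is tractable today can become much harder within a few product generations.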

AI was certainly not the first technology applied to this problem. Several companies have tried to solve it using conventional programming.

However, to understand the true difficulty of the problem, we have to look at the size of the problem space.

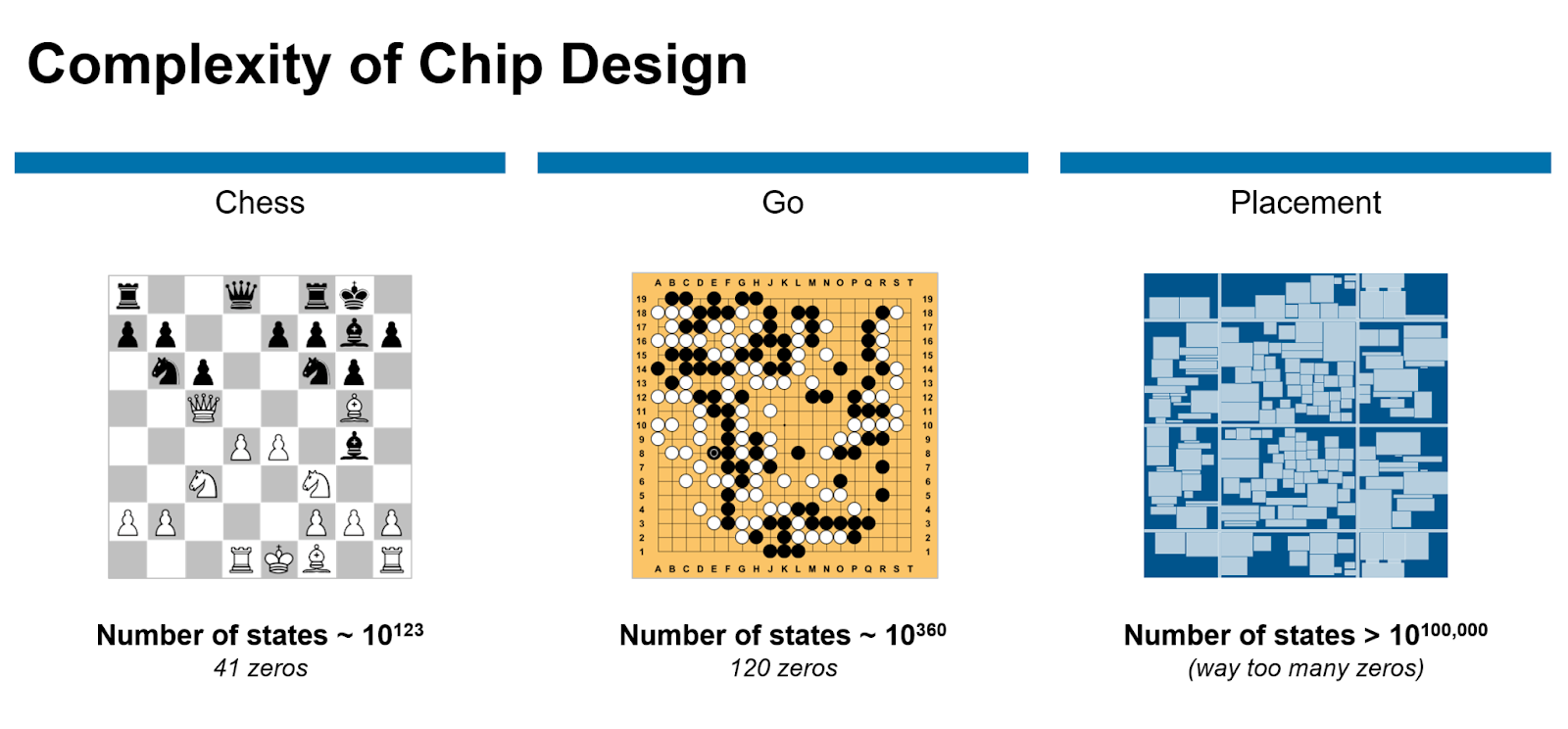

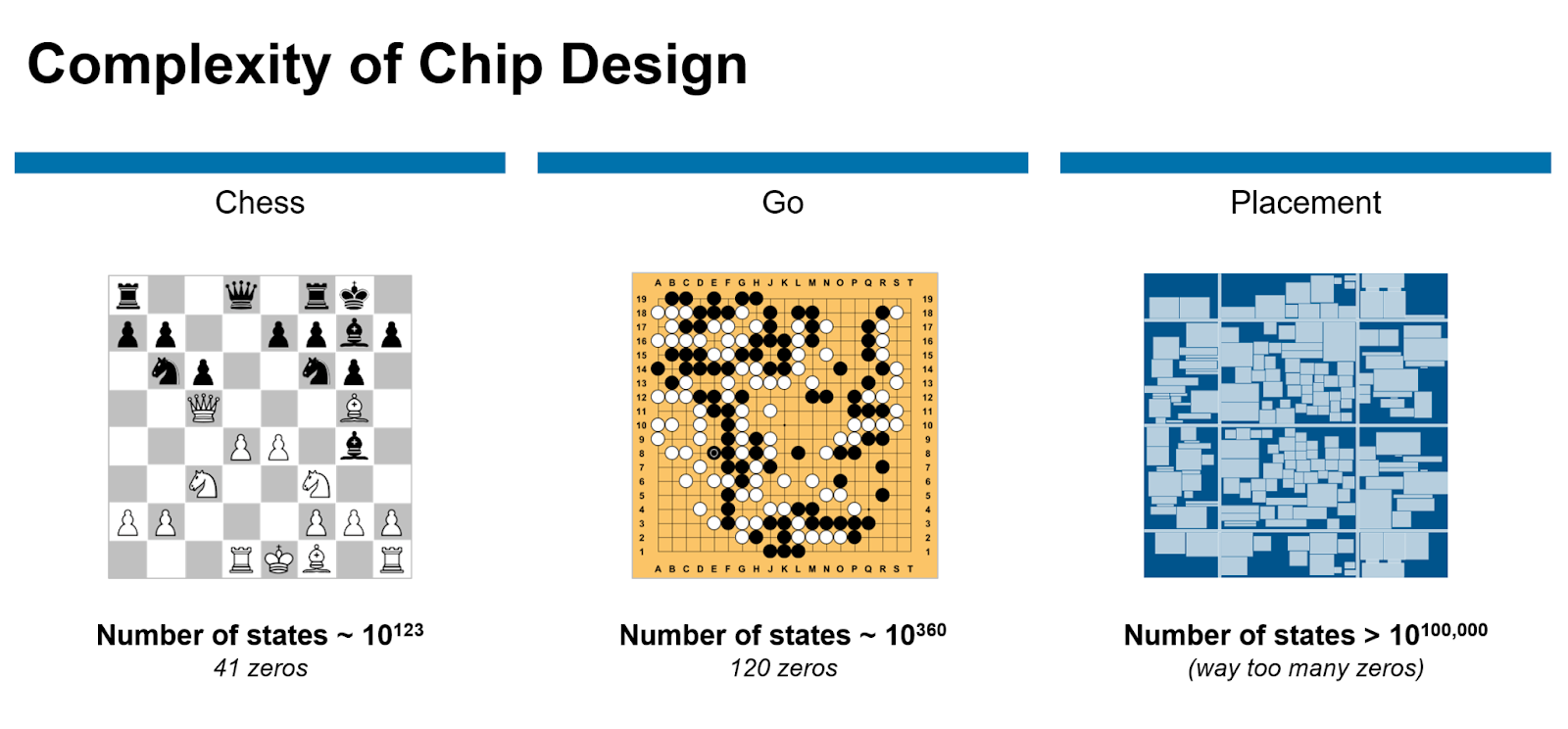

Given the typical size of a chip and its number of transistors, there are something like 10^90000 [1] possible arrangements of those transistors, which is absolutely staggering.
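The exact figure depends on how the problem is modeled. As a hedged illustration (a toy model, not the method behind the 10^90000 estimate), counting the ways to put n distinct blocks on m grid cells, at most one block per cell, gives m! / (m - n)!. The Python sketch below works in log space, because the numbers get too large to handle directly very quickly. The grid sizes are hypothetical.

```python
import math


def log10_placements(cells: int, blocks: int) -> float:
    """Return log10 of cells! / (cells - blocks)!.

    That is the number of ways to put `blocks` distinct blocks on `cells`
    distinct grid cells with at most one block per cell. Working in log
    space avoids building astronomically large integers.
    """
    if not 0 <= blocks <= cells:
        raise ValueError("need 0 <= blocks <= cells")
    # math.lgamma(n + 1) equals ln(n!), so the difference is ln(cells! / (cells - blocks)!)
    return (math.lgamma(cells + 1) - math.lgamma(cells - blocks + 1)) / math.log(10)


# Hypothetical grid sizes, chosen only to show how fast the count grows.
for cells, blocks in [(10, 5), (100, 50), (1000, 500)]:
    exponent = log10_placements(cells, blocks)
    print(f"{blocks} blocks on {cells} cells: about 10^{exponent:.0f} placements")
```

Even this simplified model outgrows any exhaustive search after a few hundred blocks. A real chip has far more components, which explains why brute force is not an option.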

Using Machine Learning to Speed Up Chip Design

The problem at hand is known as "floor planning" [2]: deciding where each component goes on the chip and how the components are wired together, much like laying out and connecting wires across an actual, huge floor.
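A floor plan is usually scored with a cost function. One common proxy is the half-perimeter wirelength (HPWL), which is assumed here for illustration; the cited work may use a different or richer objective. For each net (a group of blocks that must be wired together), take half the perimeter of the smallest rectangle enclosing its blocks, then sum over all nets. The block names and coordinates below are hypothetical.

```python
from typing import Dict, List, Tuple

Position = Tuple[float, float]


def hpwl(placement: Dict[str, Position], nets: List[List[str]]) -> float:
    """Sum, over all nets, of the half-perimeter of each net's bounding box."""
    total = 0.0
    for net in nets:
        xs = [placement[block][0] for block in net]
        ys = [placement[block][1] for block in net]
        # Width plus height of the bounding box is half its perimeter.
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


# Hypothetical blocks and nets.
placement = {"cpu": (0, 0), "cache": (1, 0), "io": (4, 3)}
nets = [["cpu", "cache"], ["cpu", "io"]]
print(hpwl(placement, nets))  # prints 8.0
```

Moving `io` from (4, 3) to (1, 1) shrinks the second net's bounding box and lowers the total from 8 to 3. A floor planner searches for exactly this kind of improvement.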

In a sense, this problem can be seen as a game. Google’s engineers mention that it can take months of intense human effort [1], but a machine can do it in a matter of days.

From this perspective, the problem resembles another very famous AI problem: playing the game of Go. The problem space of Go is around 10^360, which isn't as huge as that of chip design but is still enormous.

And since DeepMind, which is owned by Google's parent company Alphabet, has spent the last few years developing state-of-the-art neural networks for playing Go, Google decided to adopt a similar strategy for chip design.

Source: Semiengineering

The subfield of machine learning being used to tackle this problem is known as reinforcement learning.

Reinforcement learning (RL) involves specifying an agent (which you could think of as a player), designing an environment for that agent where there are objects it can interact with, and finally, designing a “reward function” that dictates when the agent will get rewarded and when it will get penalized.
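To make the agent/environment/reward vocabulary concrete, here is a minimal, hypothetical Python sketch using tabular Q-learning. Q-learning is one of the simplest RL algorithms, and it is not the method used for Go or for chip design. The environment is a five-cell corridor. The agent can step left or right. The reward function gives +1 for reaching the last cell and a small penalty for every other step.

```python
import random
from typing import Dict, Tuple

# Toy environment (hypothetical): corridor cells 0..4, start at 0, goal at 4.
N_CELLS = 5
ACTIONS = (-1, +1)  # move left or move right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Environment: apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    if next_state == N_CELLS - 1:
        return next_state, 1.0, True  # reward for reaching the goal
    return next_state, -0.01, False  # small penalty for every other step


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> Dict[Tuple[int, int], float]:
    """Agent: tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_CELLS - 1)]
print(policy)
```

After training, the greedy policy should move right (+1) from every cell, because that is the shortest path to the reward. The agent was never told this directly; it inferred it from the reward function alone.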

For instance, if we want to design an RL algorithm to play Go, the environment would be the game board with both players' stones on it, and the agent would play against a classic algorithm, another agent, or maybe even a human!

The reward function would reward moves that increase the probability of winning and penalize moves that decrease the probability of winning. After running this algorithm for millions and maybe even trillions of iterations, it eventually gets quite competent at the game of Go.

If this sounds interesting, we suggest checking out AlphaGo, the algorithm developed by DeepMind that was able to beat the best Go player in the world!

Similarly, we can phrase the floor planning problem as rewarding the agent when it places and wires the transistors in a way that reduces power consumption and maximizes performance. Of course, this isn't straightforward: designing the environment and the reward function is quite difficult in RL.
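Framing floor planning this way can be sketched as a toy environment in Python. Everything here is a hypothetical simplification. Blocks stand in for transistors or larger components, and each action places the next block on a free grid cell. The only reward arrives at the end of an episode as the negative half-perimeter wirelength (HPWL), a common wirelength proxy assumed for illustration, so shorter total wiring earns a higher reward. Power and timing are ignored. A random-search loop stands in for a trained RL agent.

```python
import random
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


class ToyFloorplanEnv:
    """Hypothetical toy environment: place one block per step on a grid."""

    def __init__(self, blocks: List[str], nets: List[List[str]], width: int, height: int):
        self.blocks, self.nets = blocks, nets
        self.cells = [(x, y) for x in range(width) for y in range(height)]
        self.reset()

    def reset(self) -> None:
        self.placement: Dict[str, Cell] = {}

    def legal_actions(self) -> List[Cell]:
        used = set(self.placement.values())
        return [c for c in self.cells if c not in used]

    def step(self, cell: Cell) -> Tuple[float, bool]:
        """Place the next block (fixed order) and return (reward, done)."""
        self.placement[self.blocks[len(self.placement)]] = cell
        done = len(self.placement) == len(self.blocks)
        # Sparse reward: zero until every block is placed, then -HPWL.
        return (-self.wirelength() if done else 0.0), done

    def wirelength(self) -> float:
        total = 0.0
        for net in self.nets:
            xs = [self.placement[b][0] for b in net]
            ys = [self.placement[b][1] for b in net]
            total += (max(xs) - min(xs)) + (max(ys) - min(ys))
        return total


def random_search(env: ToyFloorplanEnv, episodes: int, seed: int = 0) -> Tuple[float, Dict[str, Cell]]:
    """Stand-in 'agent': try random placements and keep the best-rewarded one."""
    rng = random.Random(seed)
    best_reward, best_placement = float("-inf"), {}
    for _ in range(episodes):
        env.reset()
        reward, done = 0.0, False
        while not done:
            reward, done = env.step(rng.choice(env.legal_actions()))
        if reward > best_reward:
            best_reward, best_placement = reward, dict(env.placement)
    return best_reward, best_placement


env = ToyFloorplanEnv(["cpu", "cache", "io", "dram_ctrl"],
                      [["cpu", "cache"], ["cpu", "io", "dram_ctrl"]], width=4, height=4)
reward, placement = random_search(env, episodes=200)
print(reward, placement)
```

An RL agent would replace `random_search` with a policy that learns, across many episodes, which cells tend to lead to high final rewards. The hard part mentioned above is choosing a reward that captures power and performance rather than wirelength alone.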

It's also worth noting that running these algorithms can be expensive because of their heavy computational demands. Also, it is difficult to build an AI that designs chips entirely without human intervention, because these algorithms still depend on human supervision in the first place.

Algorithm supervision in AI means that a human has to tag data points with labels that specify the correct answer. Reinforcement learning itself learns from rewards rather than labels, but some RL systems also rely on supervised training on human-labeled data (the original AlphaGo, for example, was first trained on records of human expert games). For now, the realistic goal is an "AI chip assistant" that helps humans design the best chips as quickly as possible.

The Future of Using AI in Chip Design

There is a certain irony in the fact that AI needs faster and better chips while also being used to build them! Although AI most likely won't fully replace humans at such complicated tasks any time soon, there is a lot of room for optimization.

One of the most interesting aspects of developing these neural networks and AI algorithms is that it forces people to think about how they themselves perform the tasks they are building the networks to perform.

For instance, to build a neural network that performs well at designing chips, you don't necessarily have to be a chip design expert, but you do need to understand how the task is accomplished. That understanding lets programmers extract the most meaningful features and point the neural network in the right direction. It is a direction we now appear to be moving in, at full speed.

Have any questions?

Contact Exxact Today

References:

1. "Synopsys, Cadence, Google, And NVIDIA All Agree: Use AI To Help Design Chips," Forbes.
2. "Google is using AI to design its next generation of AI chips more quickly than humans can," The Verge.
