Introducing the AMD Instinct MI210

The AMD Instinct™ MI210 accelerator was announced last year and is finally here to accelerate high-performance computing (HPC), artificial intelligence (AI), and machine learning (ML) workflows in data centers around the world.

AMD aims to grow its share of the HPC, AI, and ML market by offering a strong contender in its new accelerator.

Built on the AMD CDNA™ 2 architecture with 3rd generation AMD Infinity Architecture, the AMD Instinct MI210 joins the MI200 (Aldebaran) lineup, the successor to the MI100 series, and is designed and optimized to accelerate intense HPC, AI, and ML tasks. The AMD CDNA 2 architecture includes 2nd generation Matrix Cores with increased and optimized FP64 matrix capabilities, along with faster memory.

Interested in the AMD Instinct MI210?

Learn more about Exxact AMD Radeon Instinct solutions

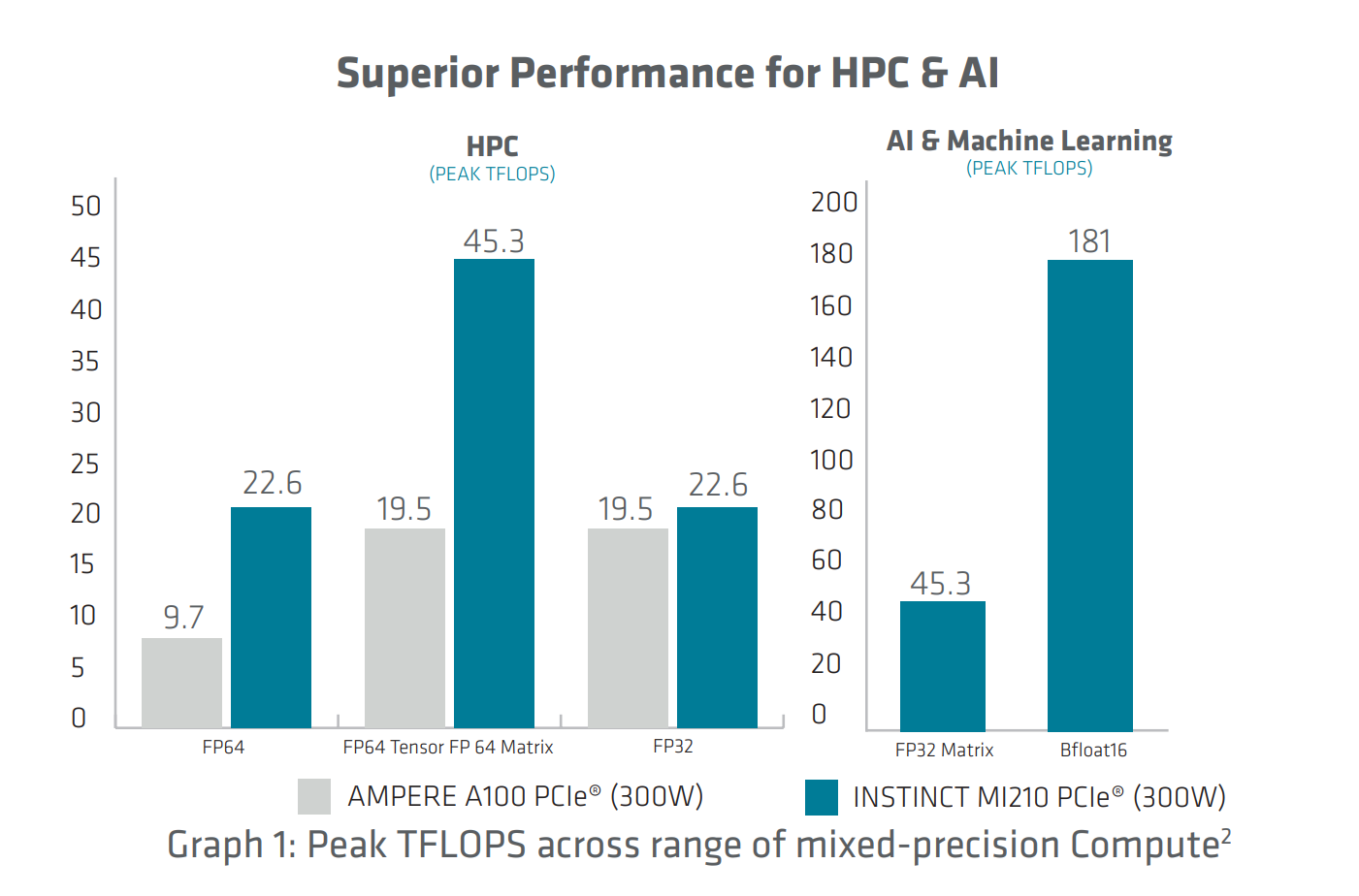

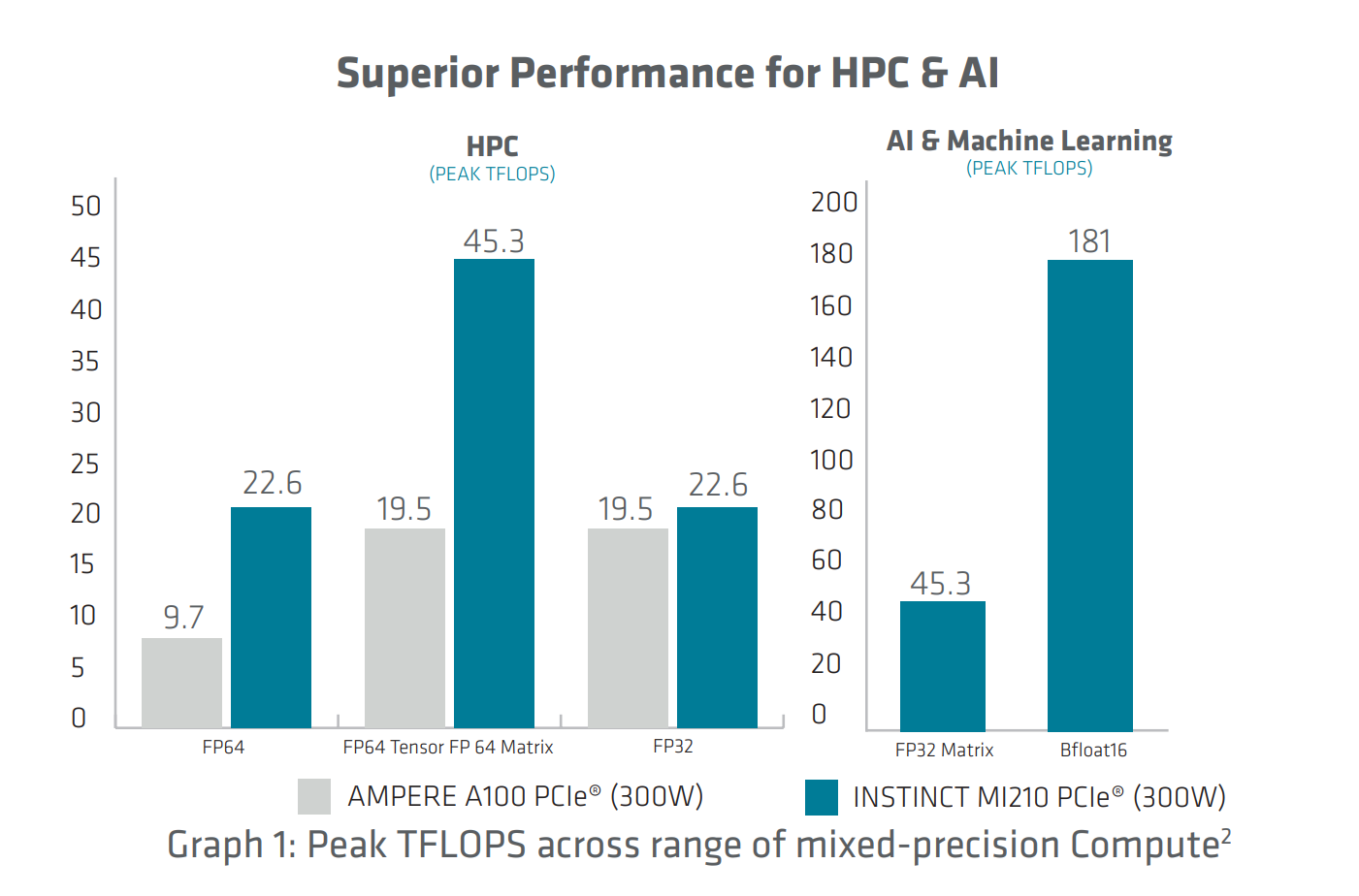

Adding to the AMD Instinct family, the AMD Instinct MI210 delivers blistering double-precision (FP64) compute performance as a PCIe card. With up to 45.3 TFLOPS of peak FP64 and FP32 matrix performance and a respectable 181 TFLOPS of FP16 and BF16 for AI and machine learning, this accelerator increases performance by 40% over the last-generation AMD Instinct MI100. It also has 64GB of HBM2e memory and 1.6TB/s of memory bandwidth. When linked with other AMD Instinct MI210s, AMD's Infinity Fabric enables 300GB/s (dual-GPU) and 600GB/s (quad-GPU) of total aggregate GPU-to-GPU bandwidth.
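To put those peak figures in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the function names are made up for illustration, and the results are theoretical ceilings derived from vendor peak specs, not measured performance.

```python
# Peak figures quoted in this article for the AMD Instinct MI210.
PEAK_FP64_MATRIX_TFLOPS = 45.3
PEAK_FP16_TFLOPS = 181.0
MEMORY_GB = 64
MEMORY_BANDWIDTH_TBPS = 1.6


def ridge_point(peak_tflops, bandwidth_tbps):
    """FLOPs a kernel must perform per byte moved before peak compute,
    rather than memory bandwidth, becomes the theoretical limit."""
    return peak_tflops / bandwidth_tbps


def full_memory_sweep_ms(memory_gb, bandwidth_tbps):
    """Milliseconds needed to read all of device memory once at peak bandwidth."""
    bandwidth_gbps = bandwidth_tbps * 1000  # TB/s -> GB/s
    return memory_gb / bandwidth_gbps * 1000  # seconds -> milliseconds


if __name__ == "__main__":
    fp64 = ridge_point(PEAK_FP64_MATRIX_TFLOPS, MEMORY_BANDWIDTH_TBPS)
    fp16 = ridge_point(PEAK_FP16_TFLOPS, MEMORY_BANDWIDTH_TBPS)
    sweep = full_memory_sweep_ms(MEMORY_GB, MEMORY_BANDWIDTH_TBPS)
    print(f"FP64 matrix ridge point: {fp64:.1f} FLOPs/byte")
    print(f"FP16 ridge point: {fp16:.1f} FLOPs/byte")
    print(f"Full 64GB memory sweep: {sweep:.1f} ms")
```

On these numbers, an FP64 matrix kernel needs roughly 28 FLOPs per byte of memory traffic to be compute-bound rather than bandwidth-bound, and a single pass over the full 64GB takes about 40 ms at best. Real workloads will fall short of both ceilings.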

The AMD Instinct MI210 is the PCIe option in the lineup, released as a more accessible choice for enterprise-level servers. The 3-year-old OAM (Open Compute Project Accelerator Module) form factor is powerful but still relatively new; the PCIe form factor of the Instinct MI210 is currently more common in servers, so upgrading can be as simple as swapping a card.

Few servers can accommodate the power draw and cost of an OAM accelerator, so the Instinct MI210 lets AMD meet customers' HPC needs at a lower wattage and total cost of ownership (TCO).

Along with its new AMD Instinct MI200 line of accelerator cards, AMD released the fifth iteration of its Radeon™ Open Ecosystem software development platform, ROCm 5.0. ROCm is a collection of drivers, development tools, APIs, and GPU monitoring tools that lets users program their AMD GPUs for high-performance computing and machine learning. It gives users of AMD Instinct systems access to open frameworks, libraries, and programming tools such as HIP, OpenMP, and OpenCL.

Because ROCm is open source and non-proprietary, it is being integrated into popular frameworks such as TensorFlow, PyTorch, Kokkos, and RAJA. AMD also maintains the Infinity Hub, which hosts HPC and AI applications that are ready to deploy and optimized for AMD GPUs, making it easy for users to find software for such a powerful machine. The AMD Instinct MI210 is a powerful PCIe GPU accelerator for systems taking on heavy workloads and HPC needs.
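As a small illustration of that framework integration, the sketch below checks whether a PyTorch installation can see a GPU and whether it is a ROCm build. It assumes a ROCm build of PyTorch is installed on the host, which this article does not cover; the helper name `describe_accelerator` is made up for this example, and the function falls back to a plain message when PyTorch or a GPU is missing.

```python
def describe_accelerator():
    """Return a one-line summary of the GPU that PyTorch can see, if any."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if not torch.cuda.is_available():
        return "PyTorch found no GPU"

    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda namespace.
    name = torch.cuda.get_device_name(0)
    props = torch.cuda.get_device_properties(0)
    memory_gb = props.total_memory / 1e9
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm build"
    return f"{name}: {memory_gb:.0f} GB visible via {backend}"


if __name__ == "__main__":
    print(describe_accelerator())
```

Because ROCm builds reuse the `torch.cuda` namespace, existing PyTorch code that targets `"cuda"` devices generally runs on AMD Instinct hardware without renaming the device.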

Have any questions?

Contact Exxact Today
