.jpg?format=webp)

.jpg)

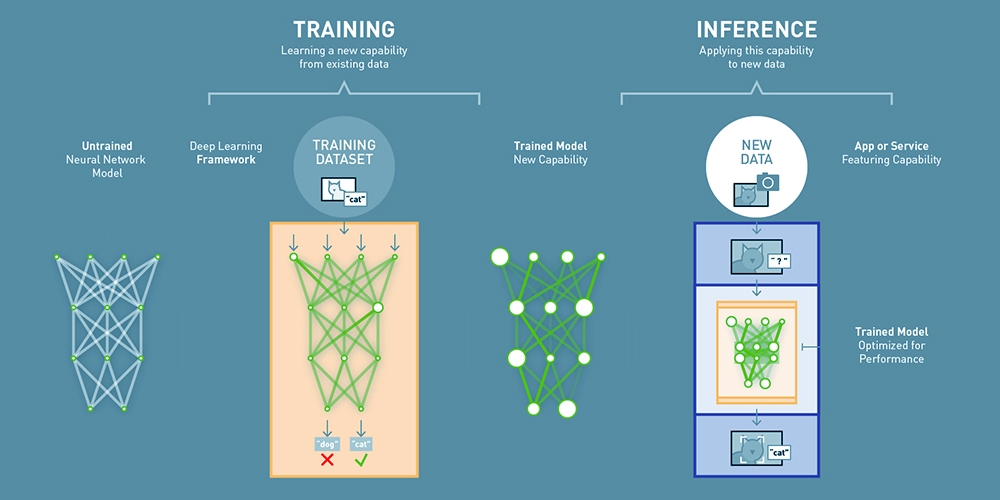

To first understand the difference between deep learning training and inference, let's take a look at the deep learning field itself. While deep learning can be defined in many ways, a very simple definition would be that it's a branch of machine learning in which the models (typically neural networks) are graphed like "deep" structures with multiple layers. Deep learning is used to learn features & patterns that best represent data. It works in a hierarchical way: the top layers learn high-level generic features such as edges, and the low-level layers learn more data specific features. The process of deep learning can be applied to various applications including image classification, text classification, speech recognition, and predicting time series data.

Deep Learning Training Explained

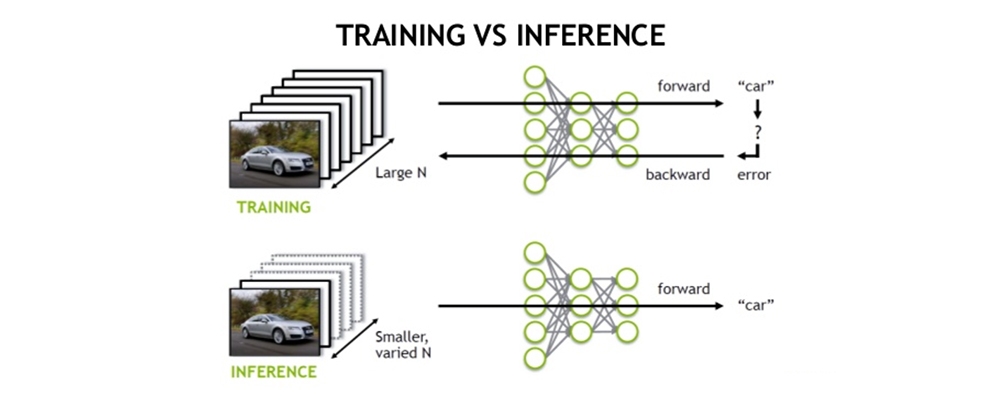

Training is the phase in which your network tries to learn from the data. In training, each layer of data is assigned some random weights and your classifier runs a forward pass through the data, predicting the class labels and scores using those weights. The class scores are then compared against the actual labels and an error is computed via a loss function. This error is then backpropagated through the network and weights are updated accordingly via some weight update algorithm such as Gradient Descent. One complete pass through all of the training samples is called an epoch. This is computationally very expensive as we only perform a single weight update after going through every sample. So, in practice, we divide the data into batches and update weights after each batch. This method takes less time to converge, and hence, we need fewer epochs to run.

Training can be sped up by using a GPU (or multiple GPUs in parallel) as they're much faster compared to CPUs for vector and matrix manipulations. For example, we had a customer using an NVIDIA GeForce GTX 1080 Ti and an Intel Core i7-5930K processor on our Spectrum TXN003-0128N Deep Learning Development Workstation, and they noticed a huge difference in performance. They noted the time taken per epoch can be reduced from 3-4 minutes (on CPU) to just 3-4 seconds when switching to the GPU for a relatively less complex (20 layers) network training on a small dataset (15 classes and roughly 100 samples per class).

Deep Learning Inference Explained

The inference is the stage in which a trained model is used to infer/predict the testing samples and comprises of a similar forward pass as training to predict the values. Unlike training, it doesn't include a backward pass to compute the error and update weights. It's usually a production phase where you deploy your model to predict real-world data. You can use a GPU to speed up your predictions, with the most common and ideal choice for deep learning being the NVIDIA Tesla series. Batch prediction is way more efficient than predicting a single image so you may like to stack up multiple samples before and then predicting in a single go. This is the ideal solution for enterprises if you have a lot of users and get hundreds of hits per second. Latency can be an issue if you don't have a lot of users and stacking up images(for a fixed batch size) might take a lot of time itself.

Lack of Data / Transfer Learning

Deep learning requires a lot of data for training, and if you're working on a niche problem, collecting data can be a big challenge. One solution to this problem is transfer learning where you use pre-trained models on other datasets and instead of initializing layer weights randomly (as you do before training a model from scratch), you use the learned weights (from the pre-trained model) for each layer and then further train the model on your data. Your model has a better chance to converge even if you have a small data set. Transfer learning can take the following forms:

- You can fine-tune the pre-trained network on your data set by continuing the backpropagation. You can fine-tune all the layers of the network, i.e. continue updating the weights via backpropagation, or you can fix some layers and fine-tune only the selected layers. You can start with the higher-level layers and gradually move towards fine-tuning earlier layers in the network and continuously assess performance to get an idea of where to stop.

- You can use pre-trained CNN as a fixed feature extractor for your data and train a linear classifier like SVM using those features. This technique is ideal if your data set is very small and fine-tuning your model may result in over-fitting.

Related Posts

- What is Deep Learning and Why It’s Different From Machine Learning

- Neural Networks for Music

- Deep Learning Image Recognition

.jpg?format=webp)

Discover the Difference Between Deep Learning Training and Inference

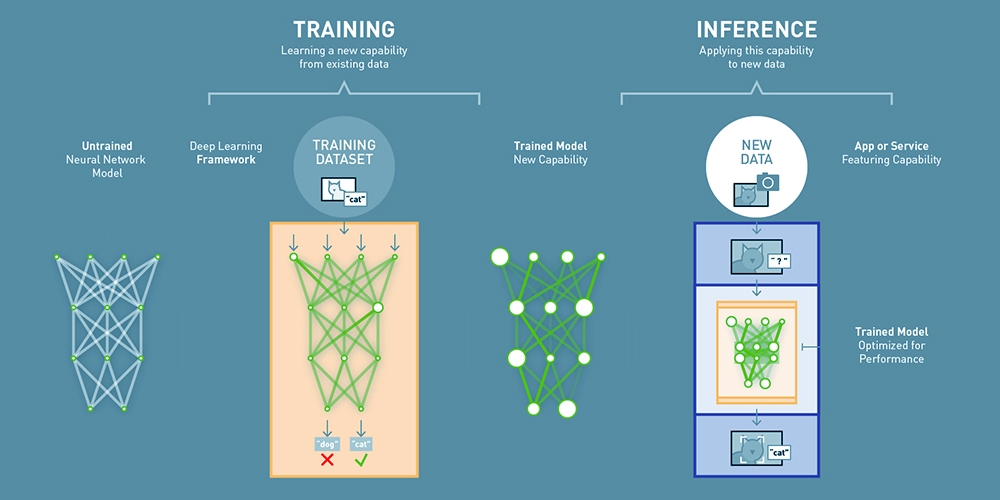

To understand the difference between deep learning training and inference, let's first look at the deep learning field itself. Deep learning can be defined in many ways, but a simple definition is that it's a branch of machine learning in which the models (typically neural networks) are built as "deep" structures with many stacked layers. Deep learning is used to learn the features and patterns that best represent the data. It works hierarchically: the early layers learn low-level, generic features such as edges, and the deeper layers combine them into more abstract, data-specific features. Deep learning can be applied to many tasks, including image classification, text classification, speech recognition, and time series forecasting.

Deep Learning Training Explained

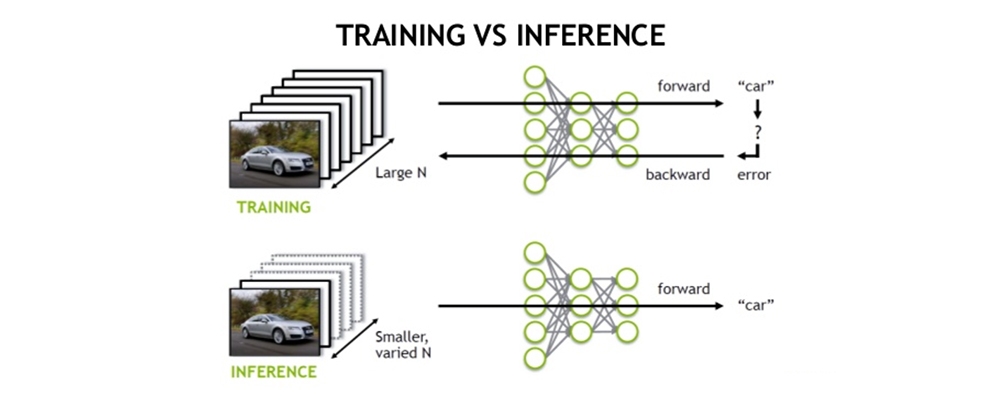

Training is the phase in which your network learns from the data. At the start of training, each layer of the network is assigned random weights. The network then runs a forward pass through the data, predicting class labels and scores with those weights. The predicted scores are compared against the actual labels, and a loss function computes the error. This error is backpropagated through the network, and the weights are updated by an optimization algorithm such as gradient descent. One complete pass through all of the training samples is called an epoch. Updating the weights only once per epoch, after computing the error over every sample, is computationally expensive and slow to make progress. In practice, therefore, the data is divided into mini-batches and the weights are updated after each batch. Because the weights are updated many times per epoch, this approach typically converges faster and needs fewer epochs.
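The training loop described above can be sketched in plain Python. This is a minimal illustration, not a production recipe. A single-layer logistic-regression classifier stands in for a deep network so the backward pass fits in a few lines; a real framework repeats the same chain-rule step layer by layer. The toy dataset, learning rate, batch size, and epoch count are all assumptions chosen for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    # Forward pass: weighted sum of the inputs, squashed into a class score in (0, 1)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def bce_loss(p, y, eps=1e-12):
    # Binary cross-entropy loss between predicted probability p and label y (0 or 1)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))

def mean_loss(w, b, data):
    return sum(bce_loss(forward(w, b, x), y) for x, y in data) / len(data)

def train(data, n_features, lr=0.5, batch_size=4, epochs=200, seed=0):
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not reorder the caller's list
    # Random initial weights, as when training from scratch
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_features)]
    b = 0.0
    updates = 0
    for _ in range(epochs):  # one epoch = one full pass over the training samples
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w = [0.0] * n_features
            grad_b = 0.0
            for x, y in batch:
                err = forward(w, b, x) - y  # dLoss/dz for sigmoid + cross-entropy
                for i in range(n_features):
                    grad_w[i] += err * x[i]
                grad_b += err
            # Gradient-descent update using the batch-averaged gradient
            n = len(batch)
            w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
            b -= lr * grad_b / n
            updates += 1
    return w, b, updates

# Toy, linearly separable data (assumed): label is 1 when x0 + x1 is large
samples = [([0.0, 0.0], 0), ([0.2, 0.3], 0), ([0.4, 0.1], 0), ([0.1, 0.5], 0),
           ([1.0, 1.0], 1), ([0.9, 0.8], 1), ([0.7, 1.2], 1), ([1.3, 0.6], 1)]
w, b, updates = train(samples, n_features=2)
print(updates)                   # 200 epochs x 2 mini-batches = 400
print(mean_loss(w, b, samples))  # lower than the ~0.693 loss of an all-zero model
```

With 8 samples and `batch_size=4`, the loop makes 2 weight updates per epoch. Setting `batch_size=len(samples)` gives plain full-batch gradient descent, with a single update per epoch.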

Training can be sped up by using a GPU (or multiple GPUs in parallel), because GPUs perform the vector and matrix operations at the heart of neural networks much faster than CPUs. For example, one of our customers used an NVIDIA GeForce GTX 1080 Ti and an Intel Core i7-5930K processor in our Spectrum TXN003-0128N Deep Learning Development Workstation and saw a large difference in performance. They were training a relatively simple 20-layer network on a small dataset (15 classes with roughly 100 samples per class). The time per epoch dropped from 3-4 minutes on the CPU to just 3-4 seconds on the GPU.
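As a quick sanity check, the per-epoch speedup implied by those numbers can be worked out directly. This sketch simply treats "3-4 minutes" and "3-4 seconds" as ranges. The 100-epoch run at the end is a hypothetical figure used only for illustration.

```python
def speedup_range(cpu_seconds, gpu_seconds):
    """Return the (lowest, highest) speedup implied by per-epoch time ranges (low, high)."""
    cpu_lo, cpu_hi = cpu_seconds
    gpu_lo, gpu_hi = gpu_seconds
    # Lowest: fastest CPU epoch vs slowest GPU epoch; highest: the reverse
    return cpu_lo / gpu_hi, cpu_hi / gpu_lo

cpu = (3 * 60, 4 * 60)  # 3-4 minutes per epoch on the CPU, in seconds
gpu = (3, 4)            # 3-4 seconds per epoch on the GPU
low, high = speedup_range(cpu, gpu)
print(low, high)        # 45.0 80.0

# Midpoints: 3.5 minutes vs 3.5 seconds per epoch
mid_cpu, mid_gpu = sum(cpu) / 2, sum(gpu) / 2
print(mid_cpu / mid_gpu)  # 60.0

# Hypothetical 100-epoch run: total hours on the CPU vs total minutes on the GPU
epochs = 100
print(epochs * mid_cpu / 3600, epochs * mid_gpu / 60)  # ~5.83 (hours) and ~5.83 (minutes)
```

At the midpoints, the GPU epochs were roughly 60 times faster, and the quoted ranges allow anything from about 45x to 80x.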

Deep Learning Inference Explained

Inference is the stage in which a trained model is used to make predictions on new samples, such as a test set. It consists of the same kind of forward pass used in training. Unlike training, however, it does not include a backward pass to compute the error and update the weights. Inference is usually the production phase, where you deploy your model to make predictions on real-world data. You can use a GPU to speed up predictions; NVIDIA's Tesla series is a common choice for deep learning.

Predicting a batch of samples is much more efficient than predicting one image at a time, so you may want to collect multiple samples and predict them in a single pass. This works well for enterprises that have many users and receive hundreds of requests per second. If you don't have many users, latency can become an issue, because waiting to accumulate enough images to fill a fixed batch size can itself take a long time.
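The batching trade-off can be illustrated with a small simulation. This sketch rests on a few assumptions. Requests arrive at known times, and the server dispatches a batch once it holds `max_batch_size` requests. `max_wait` is a hypothetical cap on how long the oldest request may wait, a common mitigation rather than something prescribed here. Setting `max_wait` to infinity reproduces the pure fixed-batch-size behavior described above.

```python
import math

def form_batches(arrivals, max_batch_size, max_wait):
    """Group sorted request arrival times (in seconds) into inference batches.

    A batch is dispatched as soon as it holds max_batch_size requests, or once
    its oldest request has waited max_wait seconds, whichever comes first.
    Returns a list of (dispatch_time, arrival_times) tuples.
    """
    batches, current = [], []
    for t in arrivals:
        # The open batch timed out before this request arrived: dispatch it first
        if current and t > current[0] + max_wait:
            batches.append((current[0] + max_wait, current))
            current = []
        current.append(t)
        if len(current) == max_batch_size:
            batches.append((t, current))  # batch is full: dispatch immediately
            current = []
    if current:  # a leftover partial batch is dispatched when it times out
        batches.append((current[0] + max_wait, current))
    return batches

def mean_queue_delay(batches):
    """Average time each request spends waiting for its batch to be dispatched."""
    delays = [dispatch - t for dispatch, ts in batches for t in ts]
    return sum(delays) / len(delays)

# Busy service (assumed): 100 requests per second
busy = [i * 0.01 for i in range(64)]
busy_batches = form_batches(busy, max_batch_size=32, max_wait=0.5)
print([len(ts) for _, ts in busy_batches], mean_queue_delay(busy_batches))  # [32, 32], roughly 0.155

# Quiet service (assumed): 1 request per second
quiet = [float(i) for i in range(32)]
fixed = form_batches(quiet, max_batch_size=32, max_wait=math.inf)  # wait for a full batch
timed = form_batches(quiet, max_batch_size=32, max_wait=0.5)       # dispatch after 0.5 s
print(mean_queue_delay(fixed), mean_queue_delay(timed))  # 15.5 0.5
```

At 100 requests per second, full batches form in about 0.3 s. A quiet service that waits for a full fixed-size batch leaves requests queued for 15.5 s on average. With the timeout, each quiet request waits only 0.5 s, but it is processed in a batch of one, so the efficiency gain from batching disappears.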

Lack of Data / Transfer Learning

Deep learning requires a lot of training data, and if you're working on a niche problem, collecting enough data can be a big challenge. One solution is transfer learning. You start from a model pre-trained on another dataset, and instead of initializing the layer weights randomly (as you would when training from scratch), you use that model's learned weights for each layer. You then continue training the model on your own data. This gives your model a better chance of converging even if you have a small dataset. Transfer learning can take the following forms:
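The weight-initialization step of transfer learning can be sketched as follows. This is a framework-agnostic illustration, not any library's API. `init_from_pretrained`, the layer names, and the layer sizes are hypothetical, and weights are flattened into plain Python lists. Layers whose name and size match the pre-trained model reuse its learned weights. Any other layer falls back to random initialization; this is typically a new output layer sized for your own classes.

```python
import random

def init_from_pretrained(layer_sizes, pretrained, seed=0):
    """Initialize a model's weights, reusing pre-trained weights where possible.

    layer_sizes: dict of layer name -> number of weights in the new model.
    pretrained:  dict of layer name -> list of weights learned on another dataset.
    Returns (weights, names_of_transferred_layers).
    """
    rng = random.Random(seed)
    weights, transferred = {}, []
    for name, size in layer_sizes.items():
        learned = pretrained.get(name)
        if learned is not None and len(learned) == size:
            weights[name] = list(learned)  # copy the learned weights (transfer)
            transferred.append(name)
        else:
            # No compatible pre-trained layer: random init, as when training from scratch
            weights[name] = [rng.gauss(0.0, 0.01) for _ in range(size)]
    return weights, transferred

# Hypothetical pre-trained model with a 10-class output layer (4 features x 10 classes)
pretrained = {"conv1": [0.5] * 8, "conv2": [0.25] * 16, "classifier": [0.1] * 40}
# New task with only 3 classes, so the classifier needs 4 x 3 = 12 weights
new_model = {"conv1": 8, "conv2": 16, "classifier": 12}
weights, transferred = init_from_pretrained(new_model, pretrained)
print(transferred)  # ['conv1', 'conv2']
```

Training then continues on your own data from these weights instead of from a purely random starting point.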

- You can fine-tune the pre-trained network on your dataset by continuing backpropagation. You can fine-tune all layers of the network (i.e., keep updating all of the weights via backpropagation), or you can freeze some layers and fine-tune only selected ones. A common strategy is to start with the higher-level layers and gradually move toward fine-tuning earlier ones, evaluating performance along the way to decide where to stop.

- You can use a pre-trained CNN as a fixed feature extractor for your data and train a linear classifier, such as a linear SVM, on the extracted features. This technique works well when your dataset is very small and fine-tuning the model could lead to overfitting.
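Both options come down to choosing which layers receive weight updates. The sketch below assumes a hypothetical model whose layers are listed from the input to the output. `trainable_layers` implements the gradual schedule from the first option, unfreezing from the last layer back toward earlier ones. `sgd_step` applies a plain gradient-descent update while leaving frozen layers untouched. The layer names, learning rate, and one-layer-per-stage schedule are illustrative assumptions. The gradients are made up for the example rather than computed by backpropagation.

```python
def trainable_layers(layer_names, stage):
    """Layers to fine-tune at a given stage of gradual unfreezing.

    layer_names is ordered from the earliest (input side) to the last layer.
    Stage 0 trains only the last layer; each later stage unfreezes one more
    layer, moving from the higher-level layers toward earlier ones.
    """
    if stage < 0:
        raise ValueError("stage must be non-negative")
    k = min(stage + 1, len(layer_names))
    return set(layer_names[-k:])

def sgd_step(weights, grads, trainable, lr=0.01):
    """Gradient-descent update applied only to trainable layers; frozen layers are kept as-is."""
    return {
        name: [w - lr * g for w, g in zip(ws, grads[name])] if name in trainable else ws
        for name, ws in weights.items()
    }

layers = ["conv1", "conv2", "conv3", "classifier"]
for stage in range(len(layers)):
    print(stage, sorted(trainable_layers(layers, stage)))

# One update at stage 0: only the classifier changes; conv1 stays frozen
weights = {"conv1": [1.0, 1.0], "classifier": [1.0, 1.0]}
grads = {"conv1": [0.5, 0.5], "classifier": [0.5, 0.5]}  # made-up gradients
print(sgd_step(weights, grads, trainable_layers(["conv1", "classifier"], 0), lr=0.5))
```

Stage 0 freezes every pre-trained layer and trains only the final layer, which is close in spirit to the fixed-feature-extractor option. That option, however, trains a separate linear classifier such as an SVM on the extracted features, which this sketch does not show.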
