What is SoftIron?

SoftIron® is the world leader in task-specific data center solutions. SoftIron simplifies and improves the data storage experience by enabling the easy adoption of enterprise-class, open source software-defined storage (SDS) solutions at scale.

Its HyperDrive® enterprise storage solution is purpose-built to optimize the performance of Ceph, the leading open source SDS platform, renowned for its durability, resilience, and virtually unlimited scalability.

Interested in a SoftIron Storage Solution?

Learn more about Ceph-based Storage Appliances on Exxact

CephFS Testing for High Performance Computing (HPC)

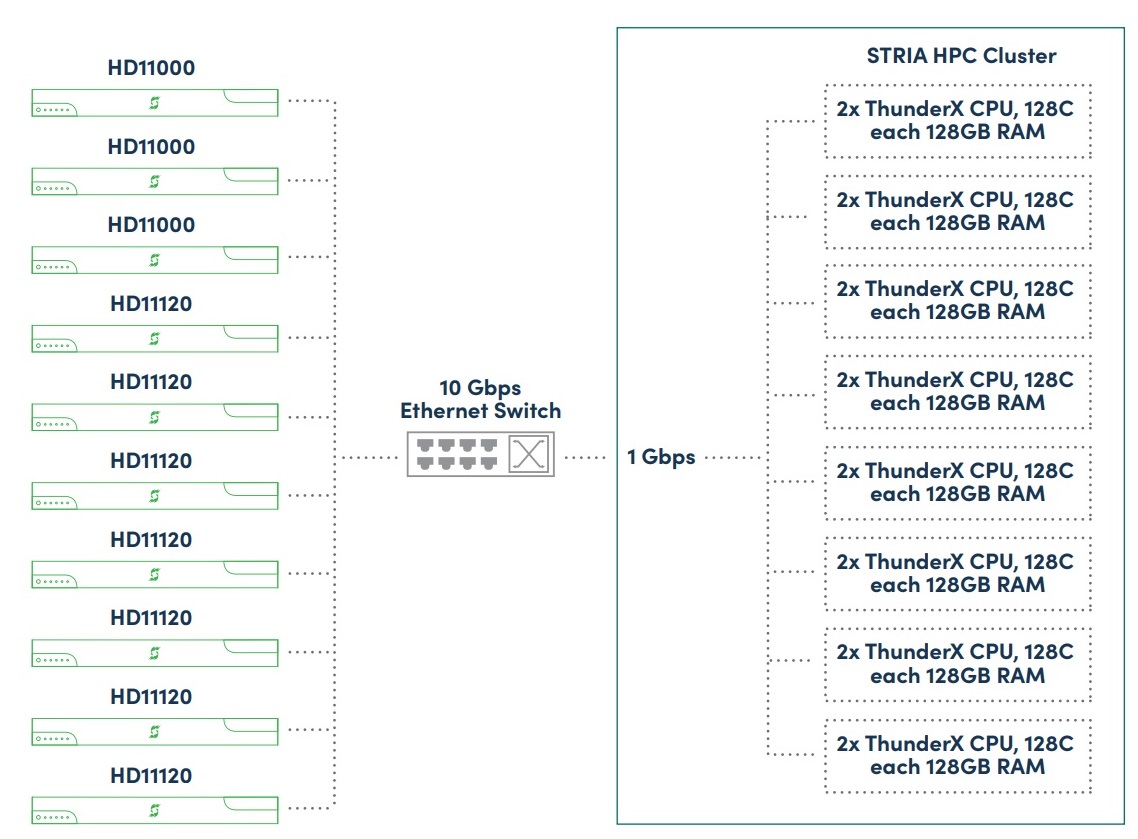

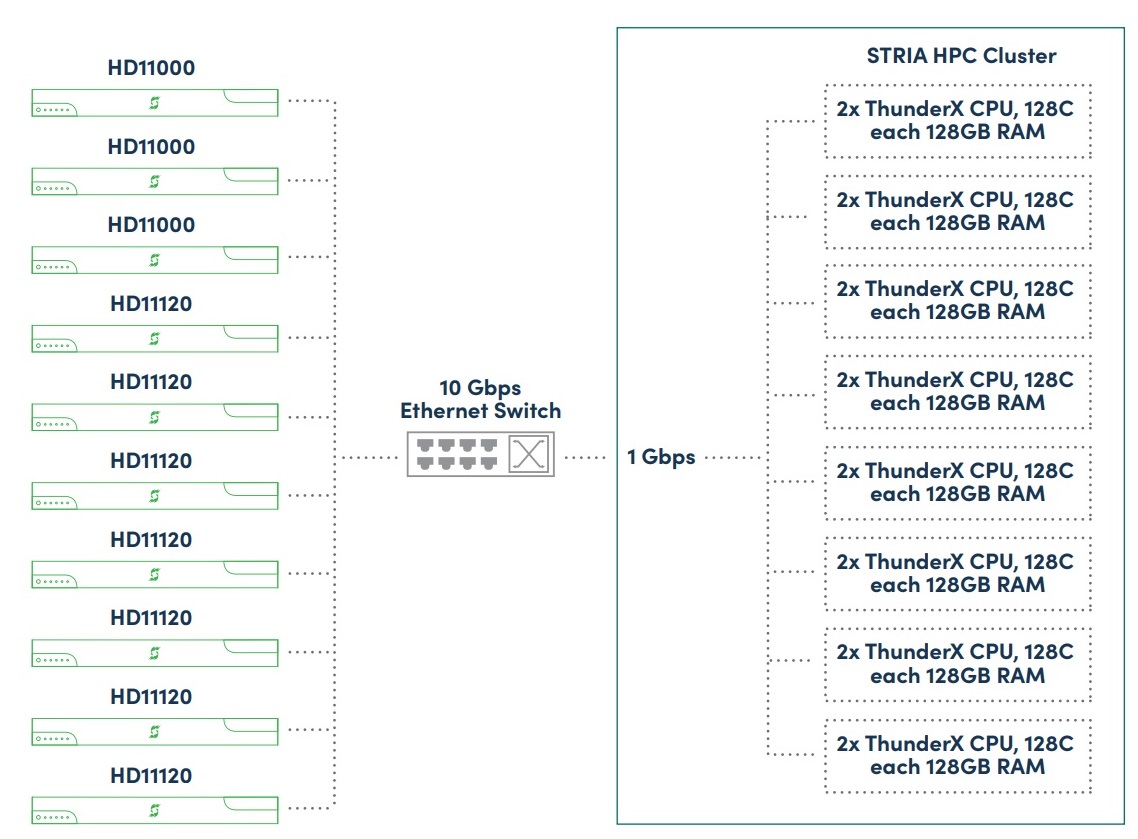

Sandia National Laboratories conducted several sets of experiments on a SoftIron HyperDrive CephFS cluster attached to Stria, a Cavium ThunderX2 64-bit ARM-based HPC system.

Test Environment

- 2048 Placement Groups

- 84 OSDs

- 3 Monitors/Metadata Servers

- Triple-Replication

- 1 Pool for Metadata, 1 Pool for Data

- Single 10GbE Network Connection

- Shared Data/Replication Network

- 1GbE Network Connection per Worker

- Number of Clients Varies per Test
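As a rough sanity check on the placement-group count, the arithmetic below computes how many PG copies each OSD hosts under triple replication and compares it with the commonly cited target of about 100 PG copies per OSD (the default of Ceph's `mon_target_pg_per_osd` setting in recent releases). This is a minimal sketch, assuming the 2048 PGs are the cluster-wide total across both pools; the test description does not give the per-pool split.

```python
def pg_replicas_per_osd(total_pgs: int, replicas: int, osds: int) -> float:
    """Average number of placement-group copies hosted by each OSD."""
    return total_pgs * replicas / osds


def heuristic_total_pgs(osds: int, replicas: int, target_per_osd: int = 100) -> float:
    """Unrounded (OSDs x target) / replicas heuristic for a cluster-wide PG count."""
    return osds * target_per_osd / replicas


if __name__ == "__main__":
    # Values from the test environment; 2048 is assumed to be the total across both pools.
    print(f"PG copies per OSD: {pg_replicas_per_osd(2048, 3, 84):.1f}")  # 73.1
    print(f"Heuristic total PGs: {heuristic_total_pgs(84, 3):.0f}")      # 2800
```

Roughly 73 PG copies per OSD is in the same range as the ~100 target; this only checks the arithmetic and says nothing about how the original cluster was actually sized.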

Test 1

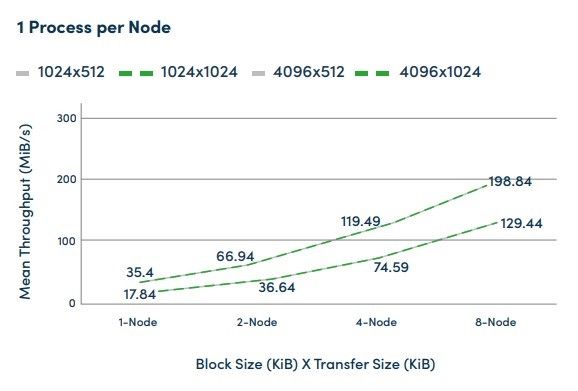

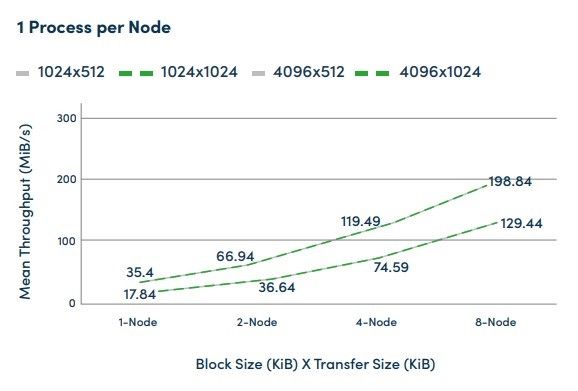

The first set of experiments uses the well-known IOR and MDTest benchmarks. These experiments provide both proof of functionality and a baseline of performance.

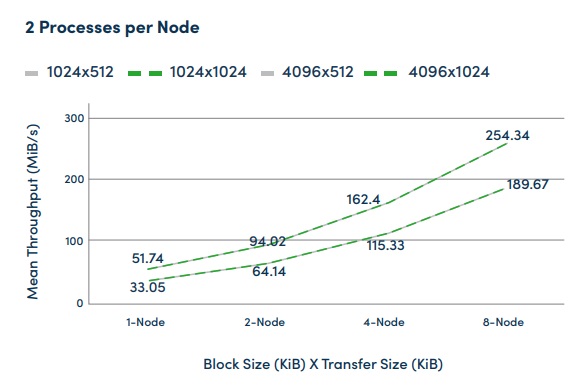

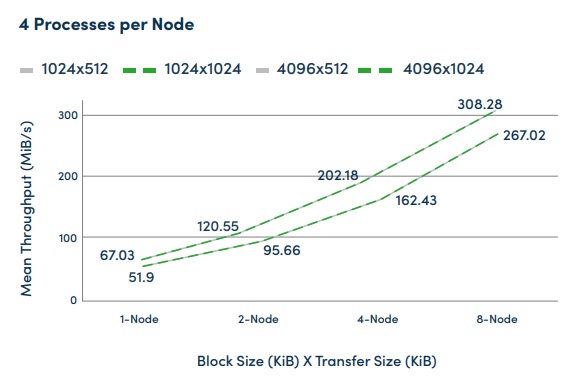

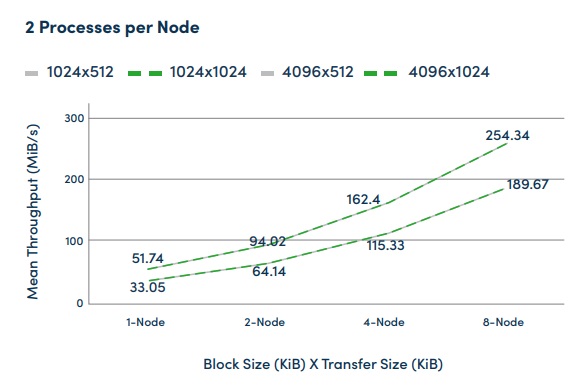

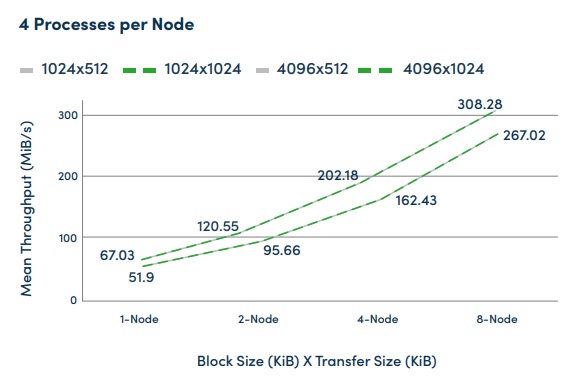

Test 1a) IOR: Rapid ingest of results data from parallelized simulation trials

IOR is a parallel I/O benchmark for testing the performance of parallel storage systems using various interfaces and access patterns. The IOR repository also includes the mdtest benchmark, which specifically tests the peak metadata rates of storage systems under different directory structures. Both benchmarks use a common parallel I/O abstraction backend and rely on MPI for synchronization.

IOR writes with increasing parallel workers

- 10,000 Iterations, Varying Block Sizes & Transfer Sizes

- Write-Caching Mitigated to Remove Effects of RAM Caching/Flushing

- 2, 4, 8 Write Clients

- 1, 2, 4 Processes per Node
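The IOR parameters above can be expressed as a parameter sweep. The sketch below is a hypothetical reconstruction, not Sandia's actual invocation. It assumes Open MPI's `mpirun` (with a hostfile listing the client nodes), a file-per-process layout (`-F`), IOR's `-e` flag (fsync on close) as the write-caching mitigation, that the "10,000 iterations" map to IOR's `-i` repetition count, and a hypothetical CephFS mount at `/mnt/cephfs`. Only the two block sizes named in the summary (1MiB and 4MiB) are included.

```python
import itertools
import shlex

# Parameter values from the test description (block sizes: the two named endpoints only).
CLIENTS = [2, 4, 8]
PROCS_PER_NODE = [1, 2, 4]
BLOCK_SIZES = ["1m", "4m"]
TRANSFER_SIZES = ["512k", "1024k"]


def ior_command(clients, ppn, block, transfer, iterations, test_file):
    """Build one hypothetical IOR write run launched with Open MPI's mpirun."""
    return [
        "mpirun", "-np", str(clients * ppn),
        "--map-by", f"ppr:{ppn}:node",  # Open MPI syntax: ppn ranks on each node
        "ior",
        "-a", "POSIX",          # POSIX I/O interface
        "-w",                   # write phase only
        "-e",                   # fsync on close so dirty pages reach CephFS
        "-F",                   # one file per process (assumed layout)
        "-b", block,            # contiguous bytes written per process
        "-t", transfer,         # size of each individual write
        "-i", str(iterations),  # repetitions (assumed meaning of "iterations")
        "-o", test_file,
    ]


def sweep(iterations=10_000, test_file="/mnt/cephfs/ior/testfile"):
    """Yield one command per combination of the listed parameters."""
    for clients, ppn, block, transfer in itertools.product(
        CLIENTS, PROCS_PER_NODE, BLOCK_SIZES, TRANSFER_SIZES
    ):
        yield ior_command(clients, ppn, block, transfer, iterations, test_file)


if __name__ == "__main__":
    for cmd in sweep():
        print(shlex.join(cmd))
```

Both transfer sizes divide both block sizes evenly, which IOR requires.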

Test 1a Summary

- CephFS write throughput improves with increased parallelization across and within nodes.

- CephFS write throughput improves as block size increases from 1MiB to 4MiB.

- Negligible change in CephFS write throughput between 512KiB and 1024KiB transfer sizes.

- Write-caching mitigation lowered the measured throughput but produced a significantly smaller standard deviation, making it a truer test of storage system performance.
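One way to read the scaling across nodes is that each worker has only a 1GbE link, so aggregate client bandwidth grows with the number of write clients until the single 10GbE connection becomes the limit. The back-of-envelope sketch below computes that line-rate ceiling. It assumes 1 Gb/s per client and 10 Gb/s into the cluster, and it ignores protocol overhead and the replica traffic sharing the same network, so it bounds the results rather than predicting them.

```python
CLIENT_LINK_GBPS = 1.0    # 1GbE per worker (from the test environment)
CLUSTER_LINK_GBPS = 10.0  # single 10GbE connection (from the test environment)


def line_rate_ceiling_mb_s(clients: int) -> float:
    """Upper bound on aggregate write bandwidth in MB/s (10^6 bytes per second).

    Ignores TCP and Ceph protocol overhead and replication traffic, so real
    throughput will be lower.
    """
    aggregate_gbps = min(clients * CLIENT_LINK_GBPS, CLUSTER_LINK_GBPS)
    return aggregate_gbps * 1e9 / 8 / 1e6


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(f"{n} clients: <= {line_rate_ceiling_mb_s(n):.0f} MB/s")
```

For the 2, 4, and 8 client runs this gives ceilings of 250, 500, and 1000 MB/s; all three stay below the 10GbE limit of 1250 MB/s.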

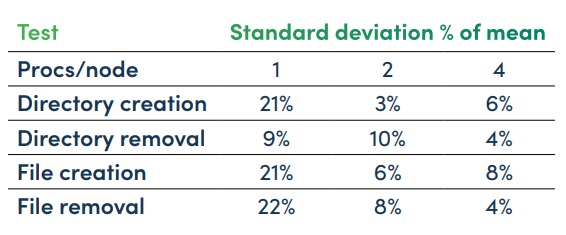

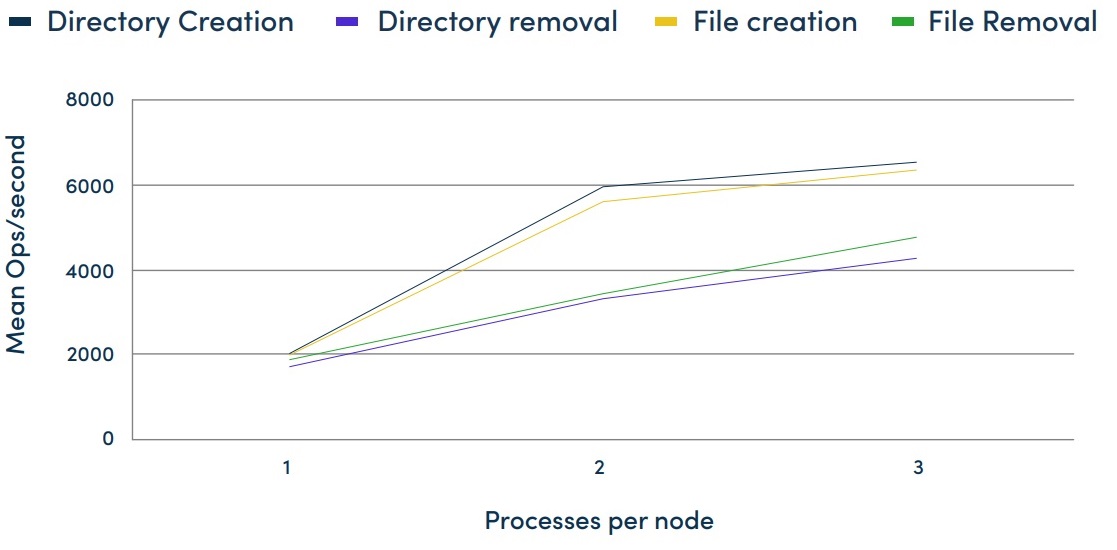

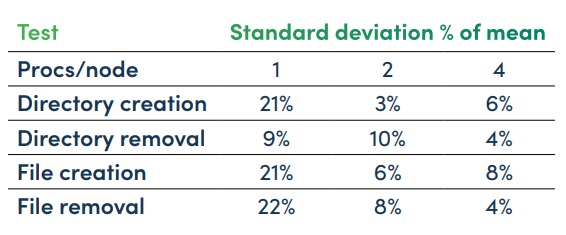

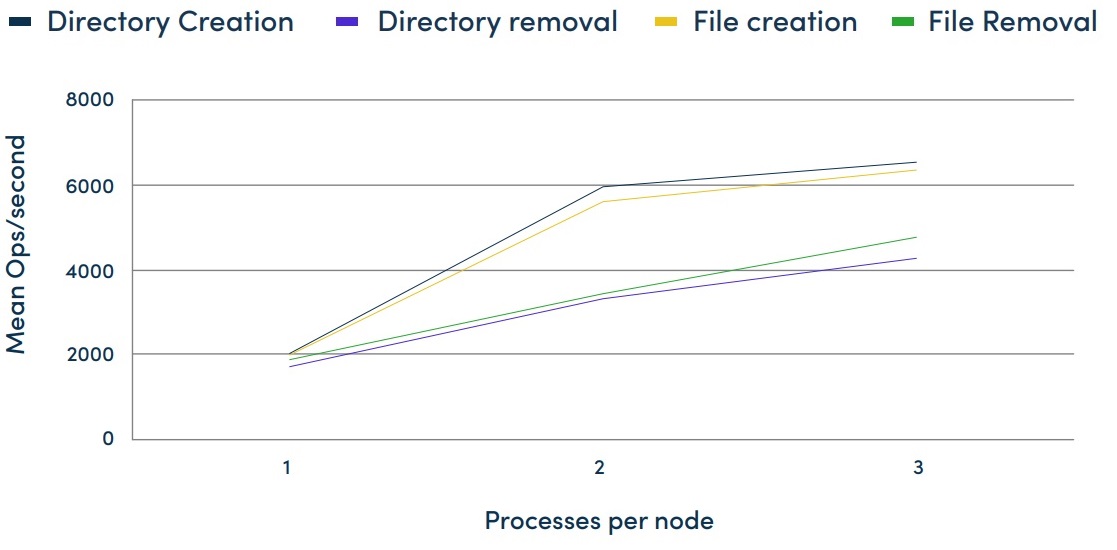

Test 1b) MDTest: Testing metadata-related performance

MDTest is an MPI-based application for evaluating the metadata performance of a file system, designed specifically to test parallel file systems.

MDTest: File & Directory Creation and Removal

- Treedepth = 3, Treewidth = 3, Filecount = 5

- 8 client nodes

- 1, 2, & 4 processes per client

- 300 iterations
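To get a feel for how much metadata work these settings generate, the sketch below counts the directories in a full tree of depth 3 and width 3 and multiplies by the file count. It assumes that Treewidth is the branching factor, that Filecount means items per tree directory (as with mdtest's `-I` option), and that each rank works in its own tree; how mdtest places items can vary with version and flags, so treat the totals as approximate.

```python
def tree_directories(depth: int, width: int) -> int:
    """Directories in a full tree of the given depth and branching factor, including the root."""
    return sum(width ** level for level in range(depth + 1))


def items_per_rank(depth: int, width: int, items_per_dir: int) -> int:
    """Items one rank creates if every tree directory holds items_per_dir items."""
    return tree_directories(depth, width) * items_per_dir


if __name__ == "__main__":
    depth, width, files = 3, 3, 5  # Treedepth, Treewidth, Filecount from the test setup
    nodes = 8
    for ppn in (1, 2, 4):
        ranks = nodes * ppn
        total = ranks * items_per_rank(depth, width, files)
        print(f"{ranks} ranks -> {total} items per iteration")
```

Under these assumptions each tree has 1 + 3 + 9 + 27 = 40 directories and 200 items, so the 32-rank runs touch about 6,400 items per iteration.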

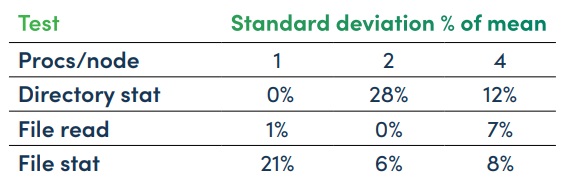

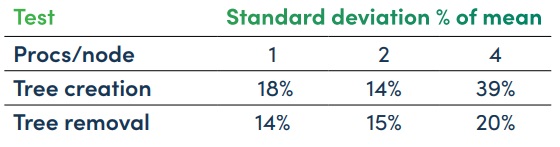

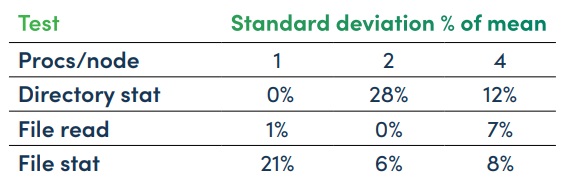

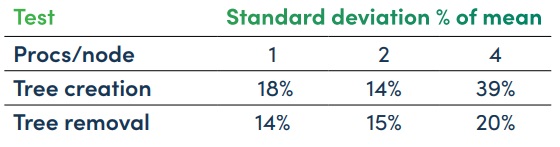

MDTest: Directory Stat, File Read & File Stat

- Treedepth = 3, Treewidth = 3, Filecount = 5

- 8 client nodes

- 1, 2, & 4 processes per client

- 300 iterations

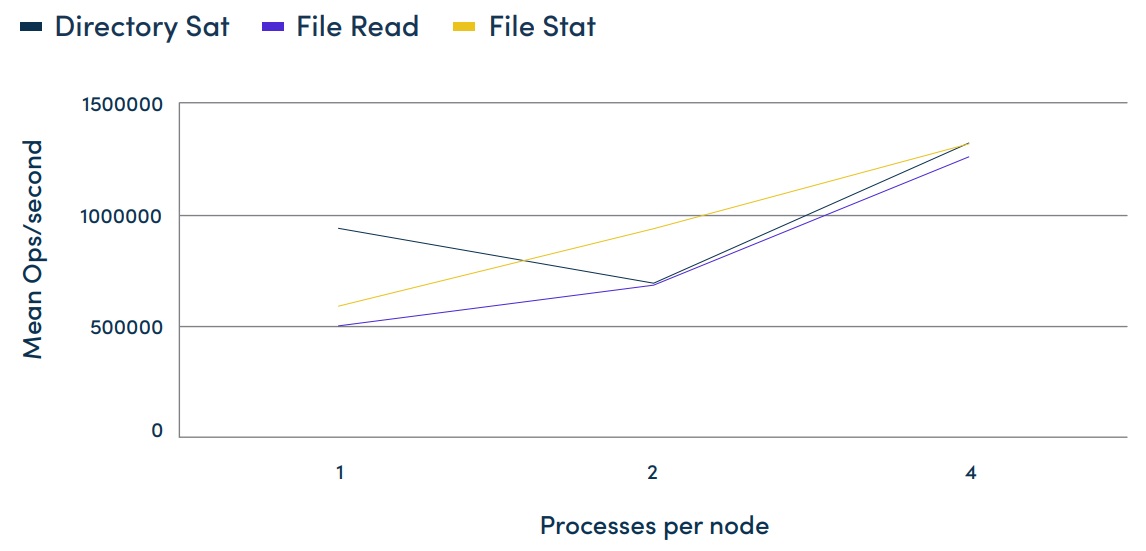

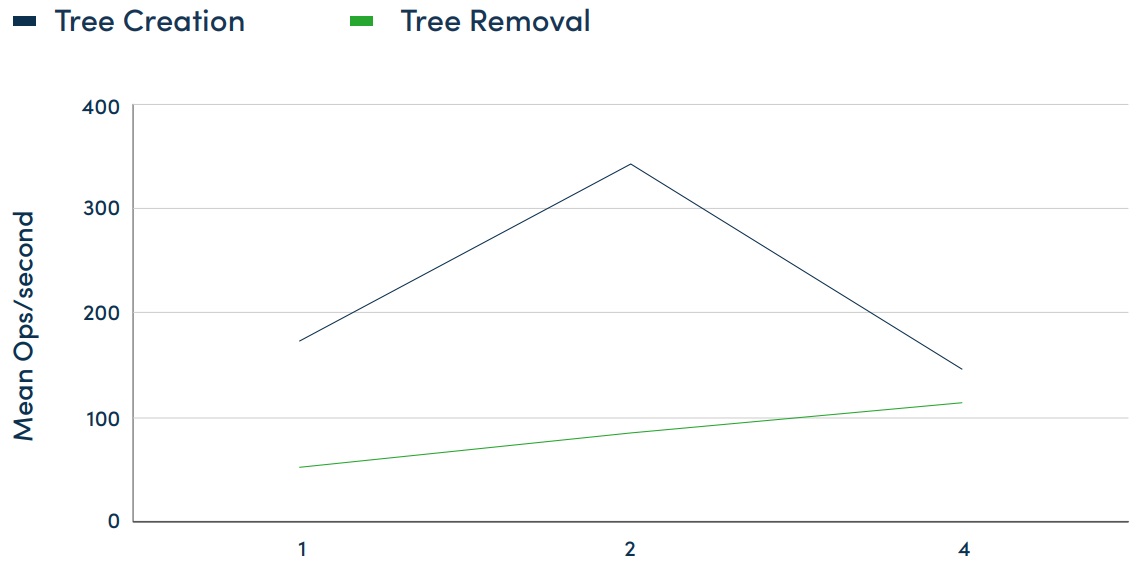

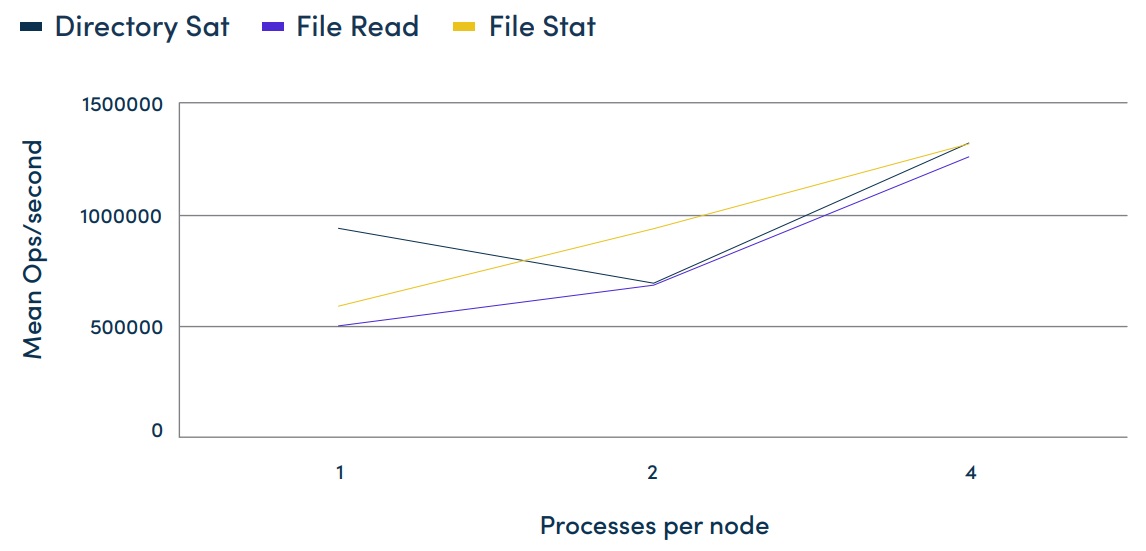

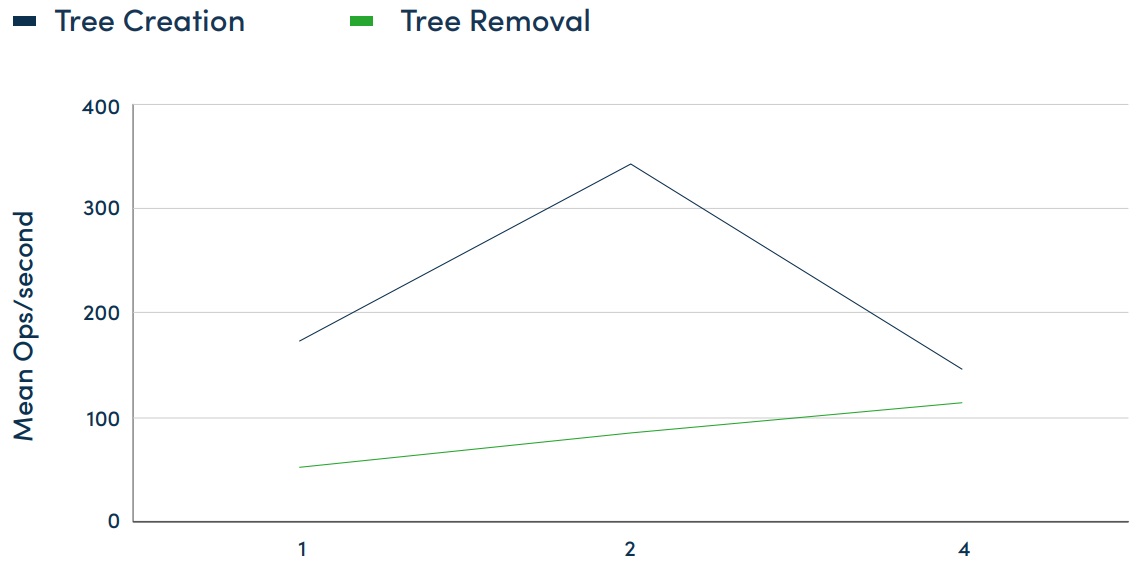

MDTest: Tree Creation & Removal

- Treedepth = 3, Treewidth = 3, Filecount = 5

- 8 client nodes

- 1, 2, & 4 processes per client

- 300 iterations
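The three MDTest charts share one configuration, and a default mdtest run reports per-operation rates (directory, file, and tree creation, stat, read, and removal) in its summary. The sketch below is therefore a hypothetical single invocation per processes-per-client setting, not the command Sandia used. It assumes Open MPI's `mpirun`, maps Treedepth, Treewidth, and Filecount onto mdtest's `-z`, `-b`, and `-I` options, and uses a hypothetical CephFS path.

```python
import shlex

NODES = 8
PROCS_PER_CLIENT = [1, 2, 4]


def mdtest_command(ppn, depth=3, width=3, items=5, iterations=300,
                   test_dir="/mnt/cephfs/mdtest"):
    """Hypothetical mdtest invocation for one processes-per-client setting."""
    return [
        "mpirun", "-np", str(NODES * ppn),
        "--map-by", f"ppr:{ppn}:node",  # Open MPI syntax (assumed launcher)
        "mdtest",
        "-z", str(depth),       # Treedepth
        "-b", str(width),       # Treewidth (branching factor)
        "-I", str(items),       # Filecount, read as items per directory
        "-i", str(iterations),  # 300 iterations
        "-u",                   # give each task its own working directory
        "-d", test_dir,         # directory on the CephFS mount (hypothetical path)
    ]


if __name__ == "__main__":
    for ppn in PROCS_PER_CLIENT:
        print(shlex.join(mdtest_command(ppn)))
```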

Test 1b Summary

- Ceph metadata operations can scale with increasing levels of parallelization.

- As expected, performance differs significantly depending on the type of metadata operation.

Test 2

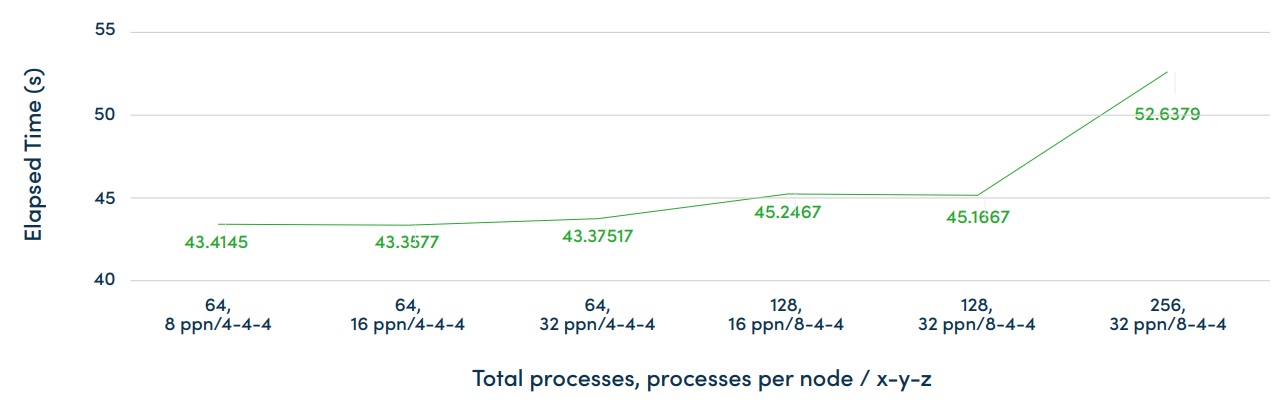

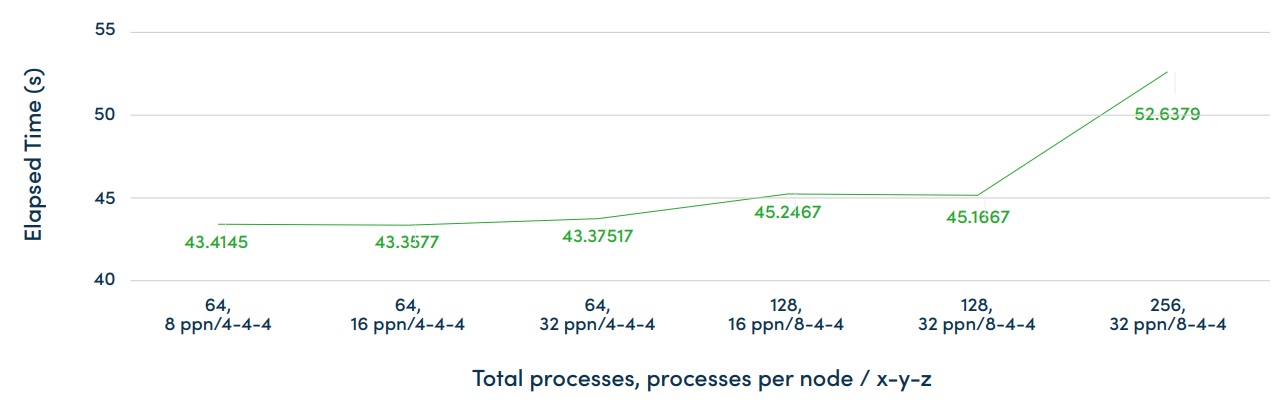

The second set of experiments draws on IOSS, part of Sandia's Trilinos computational science library. Using the ioshell utility to copy data to and from CephFS allowed the experimenters to mimic the I/O behavior of an important class of Sandia applications.

IOSS: Reading and writing computational meshes in the Exodus II and CGNS disk formats

- Using ioshell utility

- Average of 7 iterations

- 17MB mesh

- Data copies from CephFS to CephFS
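The "average of 7 iterations" measurement can be reproduced with a small timing harness that runs a copy repeatedly and reports the mean wall-clock time and the effective rate for the 17MB mesh. This is a minimal sketch: the executable name (`io_shell`), its input/output argument order, and the paths are assumptions to adjust for the local SEACAS build and CephFS mount.

```python
import statistics
import subprocess
import time
from typing import Callable


def mean_wall_time(run: Callable[[], object], iterations: int = 7) -> float:
    """Call run() `iterations` times and return the mean wall-clock seconds per call."""
    durations = []
    for _ in range(iterations):
        start = time.perf_counter()
        run()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


def effective_mb_s(size_mb: float, seconds: float) -> float:
    """Mesh size divided by the mean copy time."""
    return size_mb / seconds


if __name__ == "__main__":
    # Hypothetical executable name and paths; both ends of the copy are on CephFS.
    cmd = ["io_shell", "/mnt/cephfs/mesh/input.exo", "/mnt/cephfs/mesh/copy.exo"]
    secs = mean_wall_time(lambda: subprocess.run(cmd, check=True))
    print(f"mean copy time {secs:.2f} s (~{effective_mb_s(17, secs):.1f} MB/s)")
```

Passing a callable rather than a command keeps the timing logic independent of how the copy is launched.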

17MB Mesh File Copy from CephFS to CephFS

Test 2 Summary

- The ioshell tests provide perspective on the read and write performance of the IOSS stack.

Test 3

The third set of experiments used the LAMMPS molecular dynamics simulator, originally developed at Sandia, as an example of a periodic checkpointing code that uses POSIX I/O instead of IOSS. LAMMPS is well known in the computational science community for its portability and its scalability to large problem sizes, which makes it another useful measure of CephFS performance under a real application workload.

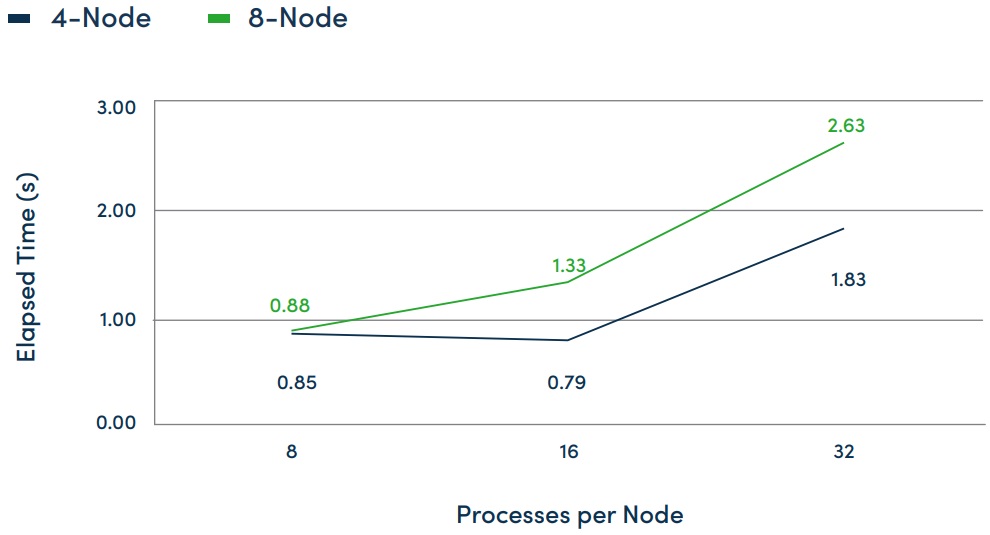

LAMMPS: Molecular dynamics simulation

- 1000 timestep run

- Dumps program state every 100 steps (10 dumps total)

- POSIX I/O
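To illustrate the I/O pattern this test exercises, here is a toy periodic-checkpointing loop that writes state with POSIX calls (`os.open`, `os.write`, `os.fsync`). It is a hypothetical stand-in, not LAMMPS or its dump code. The state size and file naming are assumptions, and the fsync after each dump is a design choice so that the timings reflect the storage system rather than the page cache. With 1000 steps and a dump every 100, it writes the 10 dumps described above.

```python
import os
import tempfile
import time


def run_with_checkpoints(out_dir, steps=1000, every=100, state_bytes=1 << 20):
    """Toy periodic-checkpointing loop (not LAMMPS itself).

    Every `every` steps, writes `state_bytes` of placeholder state with POSIX
    calls, fsyncs it, and records the wall time of each dump in seconds.
    """
    state = bytes(state_bytes)  # placeholder "program state" (all zero bytes)
    dump_times = []
    for step in range(1, steps + 1):
        # ... the simulation's compute for this timestep would go here ...
        if step % every == 0:
            path = os.path.join(out_dir, f"dump.{step}")
            start = time.perf_counter()
            fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
            try:
                view = memoryview(state)
                while view:  # os.write may write fewer bytes than requested
                    written = os.write(fd, view)
                    view = view[written:]
                os.fsync(fd)  # force data to the file system, not just the page cache
            finally:
                os.close(fd)
            dump_times.append(time.perf_counter() - start)
    return dump_times


if __name__ == "__main__":
    # Point out_dir at a CephFS directory to exercise the file system; a temp dir is used here.
    with tempfile.TemporaryDirectory() as out_dir:
        times = run_with_checkpoints(out_dir)
        print(f"{len(times)} dumps, mean {sum(times) / len(times) * 1e3:.1f} ms each")
```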

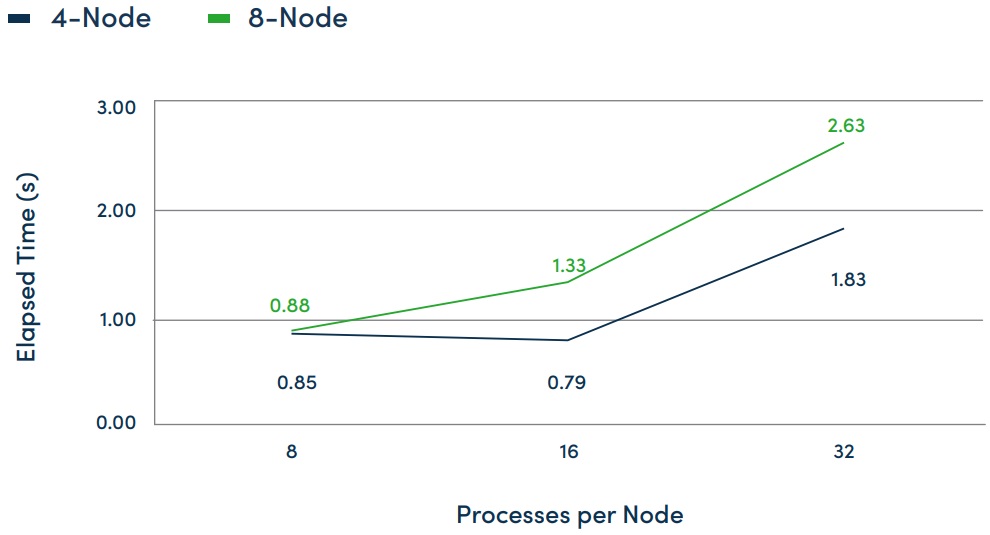

LAMMPS Checkpoint Dump Test

Test 3 Summary

The LAMMPS tests demonstrate that this Ceph configuration provides consistent performance at various levels of parallelization for the given workload. As with the IOSS tests, this provides perspective on application-level I/O performance, here for LAMMPS's POSIX I/O path.

Conclusion

The results demonstrate that, across read, write, and metadata operations, CephFS performance scales with increasing parallelization when HyperDrive, built with Ceph, is the storage back-end.

When analyzing storage performance for real simulation workloads, measuring overall system performance (completion time rather than IOPS, throughput, or transactions per second) provides more value than storage system stress tests.

Often, the storage system is not the critical bottleneck in a high performance computing workflow. In these cases, a high-performing, cost-effective, lower-power ARM-based solution can provide a more efficient approach to solving the problem.
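The argument for measuring completion time can be made concrete with an Amdahl's-law style calculation: if only the I/O share of a job gets faster, the overall gain is bounded by that share. The job timings below are hypothetical, chosen only to illustrate the arithmetic, not taken from the Sandia tests.

```python
def completion_time(compute_s: float, io_s: float, storage_speedup: float = 1.0) -> float:
    """Time to solution when only the I/O portion benefits from faster storage."""
    return compute_s + io_s / storage_speedup


def relative_gain(compute_s: float, io_s: float, storage_speedup: float) -> float:
    """Fractional reduction in completion time from a storage speedup."""
    before = completion_time(compute_s, io_s)
    after = completion_time(compute_s, io_s, storage_speedup)
    return 1 - after / before


if __name__ == "__main__":
    # Hypothetical job: 90 s of compute and 10 s of I/O.
    print(f"{relative_gain(90, 10, 2.0):.1%}")  # 5.0%: doubling storage speed saves ~5%
    # Hypothetical I/O-bound job: 10 s of compute and 90 s of I/O.
    print(f"{relative_gain(10, 90, 2.0):.1%}")  # 45.0%
```

When I/O is a small fraction of runtime, even a large storage speedup barely moves completion time, which is why a cheaper, lower-power storage tier can be the more efficient choice.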

Have any questions about the benefits of Ceph storage for HPC?

Contact Exxact Today
