The Easiest Way to Deploy IPU Accelerated Computing with Graphcore

What's an IPU and How Can I Use It?

The IPU (Intelligence Processing Unit) is a completely new kind of massively parallel processor that holds the complete machine learning model inside the processor. Graphcore co-designed it from the ground up alongside the Poplar® SDK. Built to accelerate machine intelligence, the GC200 IPU is the world's most complex processor, but Poplar software makes it easy to use, so innovators can make AI breakthroughs rapidly.

TL;DR

- Exxact now offers a series of Graphcore-based solutions, from single servers to large HPC clusters.

- Graphcore IPUs are compatible with popular frameworks such as PyTorch and TensorFlow through the Poplar SDK.

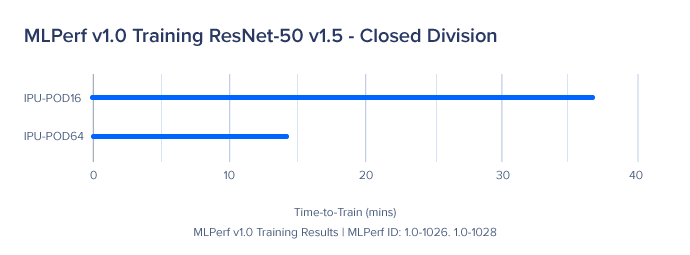

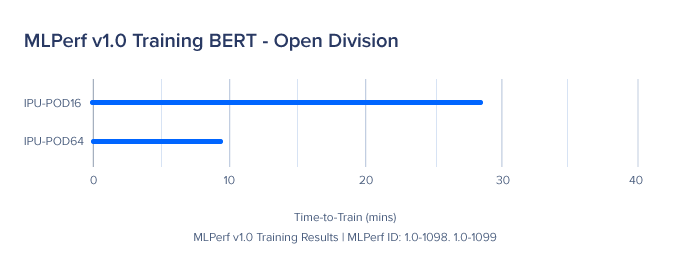

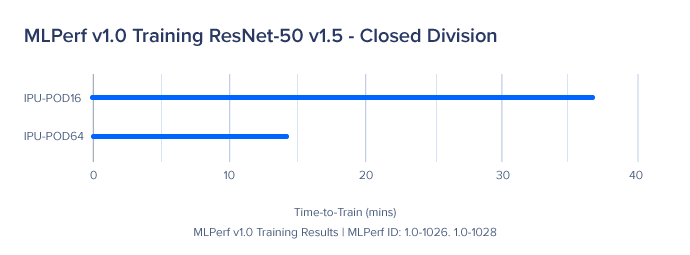

- Performance metrics on the MLPerf AI/ML benchmark show exceptional results.

Next Generation Compute

With 59.4 billion transistors, built on TSMC's latest 7nm process, the Colossus MK2 GC200 IPU is the world's most sophisticated processor. Each MK2 IPU has 1,472 powerful processor cores running nearly 9,000 independent parallel program threads.
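The "nearly 9,000" thread figure is simply cores multiplied by threads per core. The sketch below assumes each core (Graphcore calls them "tiles") runs 6 hardware worker threads; that per-core figure comes from Graphcore's published architecture descriptions, not from this article, so treat it as an assumption.

```python
# Back-of-the-envelope check of the GC200 thread count quoted above.
# Assumption: each of the 1,472 cores runs 6 hardware worker threads
# (not stated in this article).
CORES_PER_IPU = 1472
THREADS_PER_CORE = 6  # assumed, see lead-in


def total_threads(cores: int, threads_per_core: int) -> int:
    """Number of independent program threads an IPU can run concurrently."""
    return cores * threads_per_core


if __name__ == "__main__":
    # Prints "8,832 threads per IPU", i.e. "nearly 9,000".
    print(f"{total_threads(CORES_PER_IPU, THREADS_PER_CORE):,} threads per IPU")
```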

Each IPU holds an unprecedented 900MB of In-Processor-Memory™ and delivers 250 teraFLOPS of AI compute at FP16.16 and FP16.SR (FP16 with stochastic rounding). The GC200 also supports much more FP32 compute than any other processor.
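Stochastic rounding (the "SR" in FP16.SR) rounds a value up or down at random, with the probability of rounding up equal to how far the value sits past the lower representable point. This makes rounding unbiased on average, which matters when many small updates are added to a low-precision number. The sketch below illustrates the idea on a hypothetical, deliberately coarse uniform grid; real FP16 has spacing that varies with magnitude, and this is not Graphcore's hardware implementation. All function names are illustrative.

```python
import math
import random
from typing import Callable


def round_nearest(x: float, step: float) -> float:
    """Round-to-nearest onto a uniform grid of spacing `step` (ties to even)."""
    return round(x / step) * step


def round_stochastic(x: float, step: float, rng: random.Random) -> float:
    """Round down or up to a neighbouring grid point; round up with
    probability equal to the fractional distance past the lower point."""
    lower = math.floor(x / step) * step
    frac = (x - lower) / step  # in [0, 1)
    return lower + step if rng.random() < frac else lower


def accumulate(increment: float, n: int, rounder: Callable[[float], float]) -> float:
    """Add `increment` n times, rounding the running total after every add,
    as a low-precision accumulator would."""
    total = 0.0
    for _ in range(n):
        total = rounder(total + increment)
    return total


if __name__ == "__main__":
    STEP = 1.0       # hypothetical coarse grid so the effect is easy to see
    INCREMENT = 0.3  # each update is smaller than half a grid step
    N = 1000
    rng = random.Random(42)
    nearest = accumulate(INCREMENT, N, lambda v: round_nearest(v, STEP))
    stochastic = accumulate(INCREMENT, N, lambda v: round_stochastic(v, STEP, rng))
    print("exact:     ", INCREMENT * N)
    print("nearest:   ", nearest)     # stays at 0.0: every update is rounded away
    print("stochastic:", stochastic)  # close to the exact total on average
```

With round-to-nearest, every 0.3 update is lost; with stochastic rounding, the running total tracks the exact sum in expectation.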

Amazing Performance for AI Training

Curious to see how Graphcore IPUs perform? See the results for yourself below.

OK, But Where Can I Get an IPU-Powered System?

Luckily for you, Exxact is an Elite Graphcore Partner and offers a series of Graphcore IPU-powered systems for your AI/HPC needs. All Graphcore-powered systems come with a standard 1-year warranty and support, with an optional extension to 3 years.

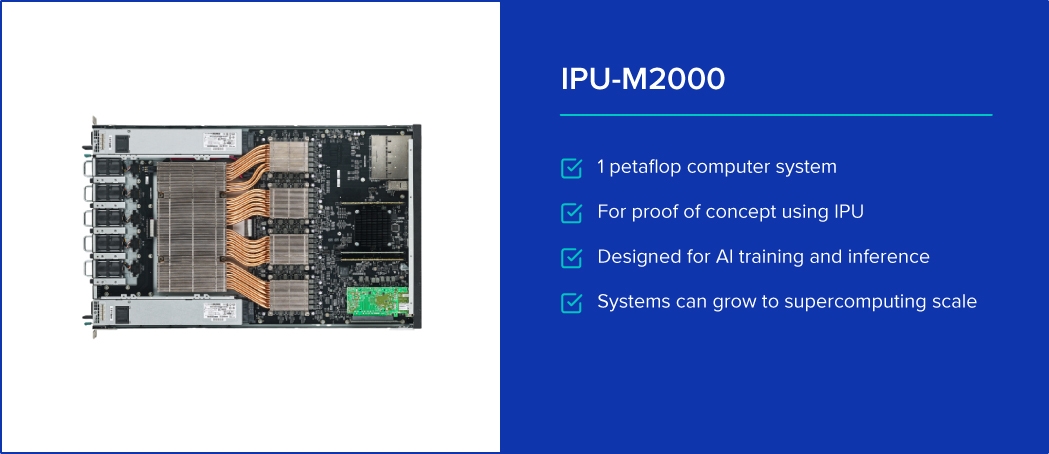

IPU-M2000 System for Prototyping

The high-performance building block for AI infrastructure at scale, the IPU-M2000 sets a new standard for AI compute. Designed as one system for all AI workloads, supporting both training and inference, the IPU-M2000 includes four second-generation IPU processors, packing 1 petaFLOP of AI compute into a slim 1U blade.

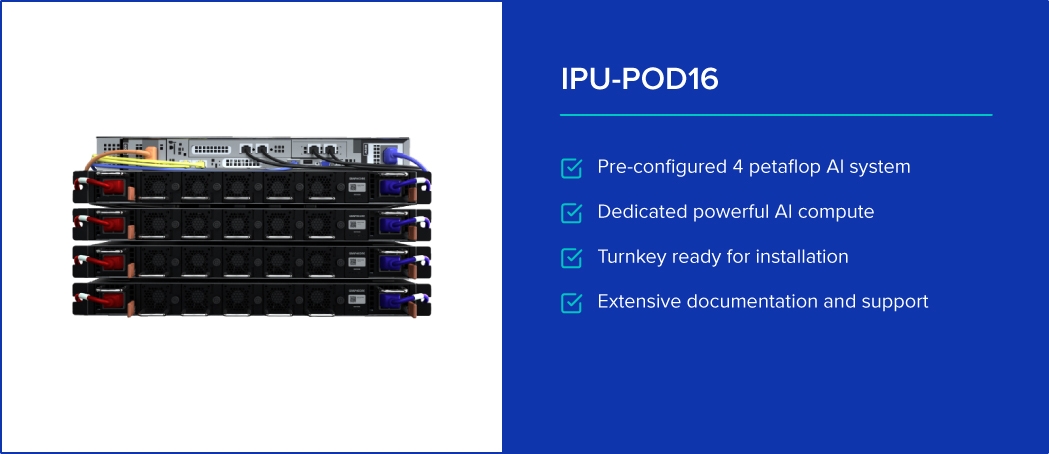

IPU-POD16 System for Larger Scalable Projects

Ideal for evaluation and exploration, IPU-POD16 is a powerful 4-petaFLOP platform for your team to develop new concepts and innovative pilot projects that are ready to step up to production. Built from four IPU-M2000 building blocks directly connected to a host server in a pre-configured package, IPU-POD16 is your stepping stone to AI infrastructure at scale.

IPU-POD64 Cluster System for Data Center, HPC, and Beyond

IPU-POD64 is a completely new approach to deploying deep learning systems at a truly massive scale. Offering 16 petaFLOPS of AI compute and the flexibility to deliver the best AI compute density for your data center, IPU-POD64 provides ultimate performance with built-in networking ready for scale-out.
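The petaFLOPS figures for the three systems follow directly from the per-IPU number quoted earlier (250 teraFLOPS per GC200). The sketch below reproduces them and also sums the 900MB of In-Processor-Memory per IPU. It assumes IPU-POD64 contains 64 IPUs (sixteen IPU-M2000 blades), which the name and the 16-petaFLOPS figure imply but the text does not state outright; the memory totals are simple sums in decimal units, not measured capacity.

```python
from dataclasses import dataclass

# Per-IPU figures quoted in this article for the GC200.
TFLOPS_PER_IPU = 250     # FP16.16 AI compute
MEMORY_MB_PER_IPU = 900  # In-Processor-Memory


@dataclass(frozen=True)
class System:
    name: str
    ipus: int

    @property
    def petaflops(self) -> float:
        """Aggregate AI compute: IPUs x 250 teraFLOPS, in petaFLOPS."""
        return self.ipus * TFLOPS_PER_IPU / 1000

    @property
    def memory_gb(self) -> float:
        """Aggregate In-Processor-Memory in (decimal) gigabytes."""
        return self.ipus * MEMORY_MB_PER_IPU / 1000


SYSTEMS = [
    System("IPU-M2000", 4),
    System("IPU-POD16", 4 * 4),   # four IPU-M2000 blades
    System("IPU-POD64", 16 * 4),  # assumed: sixteen IPU-M2000 blades
]

if __name__ == "__main__":
    for s in SYSTEMS:
        print(f"{s.name}: {s.ipus} IPUs, {s.petaflops:g} petaFLOPS, "
              f"{s.memory_gb:g} GB In-Processor-Memory")
```

Running it reports 1, 4, and 16 petaFLOPS, matching the figures above.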

Interested in using Graphcore technologies?

Shop Graphcore Solutions

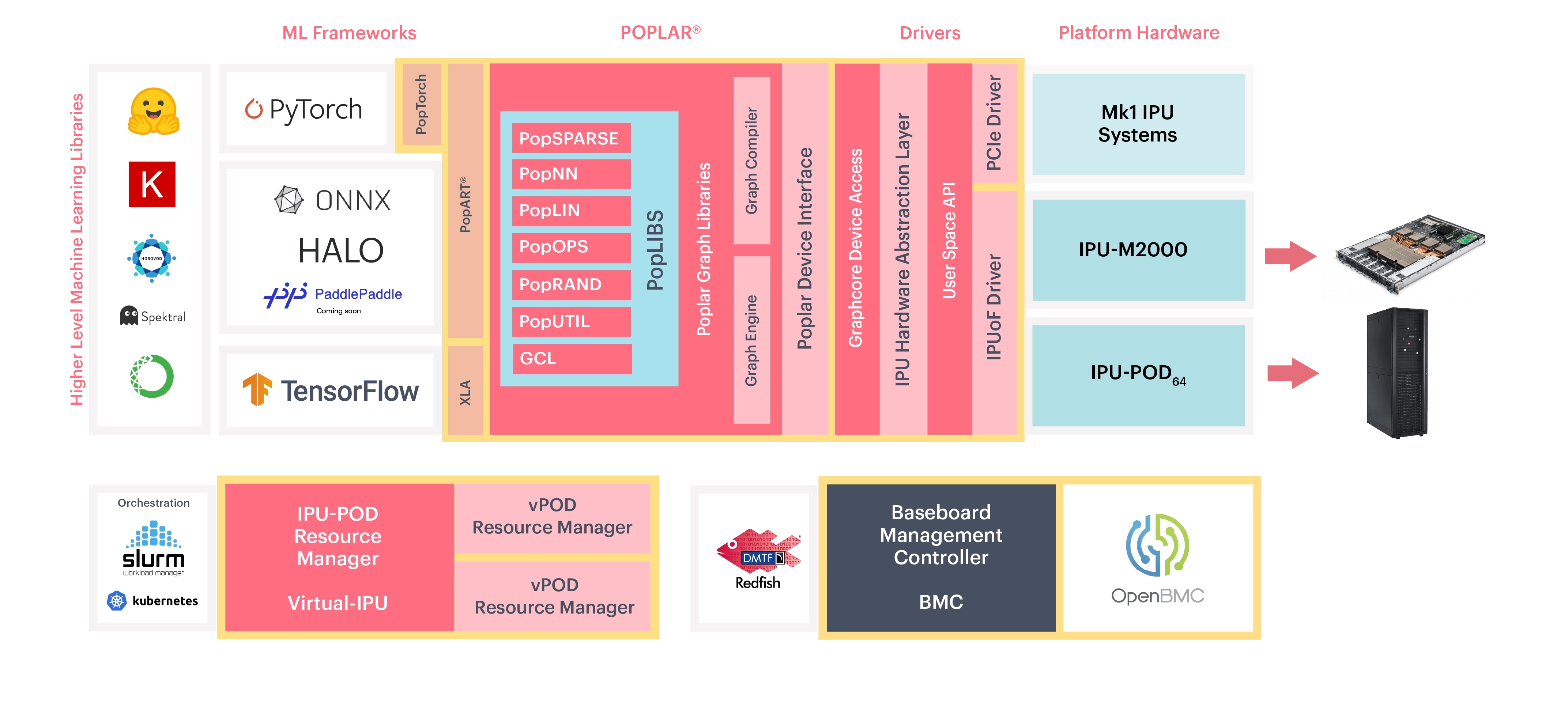

More About Poplar® Software from Graphcore

Poplar is Graphcore's easy-to-use, production-ready software, co-designed with the IPU to simplify development and deployment. It supports standard ML frameworks such as TensorFlow and PyTorch, and its PopLibs libraries and framework APIs are open source to encourage community contributions and to let you create new models and improve the performance of existing applications.

Have any questions?

Contact Exxact Today
