80GB Ampere GPU & New DGX Station Announced at SC20

Last week NVIDIA made a few big announcements during the SC20 Virtual Conference. The centerpiece was the NVIDIA DGX Station™ A100 which, as its name implies, is the successor to the Volta-powered variant. The new DGX Station comes loaded with four A100 GPUs, either the standard 40GB model or the newly announced 80GB version.

Exxact will offer and support the following NVIDIA DGX Station A100 Part Numbers:

- DGXS-2040D+P2CMI00 – NVIDIA DGX Station A100 for Commercial and Government institutions (40GB NVIDIA A100 GPUs)

- DGXS-2080D+P2CMI00 – NVIDIA DGX Station A100 for Commercial and Government institutions (80GB NVIDIA A100 GPUs)

- DGXS-2040D+P2EDI00 – NVIDIA DGX Station A100 for Educational Institutions (40GB NVIDIA A100 GPUs)

- DGXS-2080D+P2EDI00 – NVIDIA DGX Station A100 for Educational Institutions (80GB NVIDIA A100 GPUs)

Interested in upgrading to a new NVIDIA DGX system?

Shop NVIDIA DGX Systems

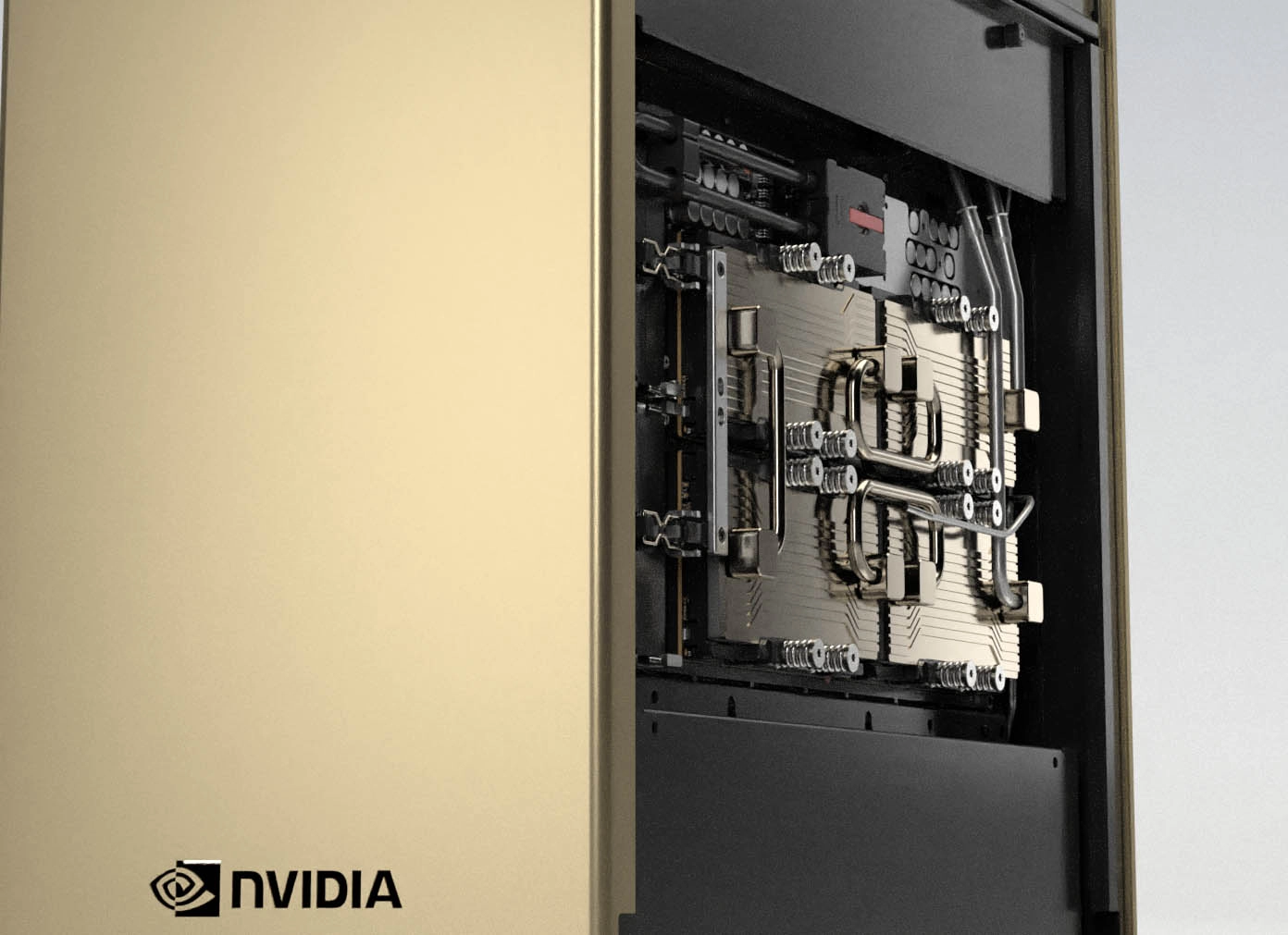

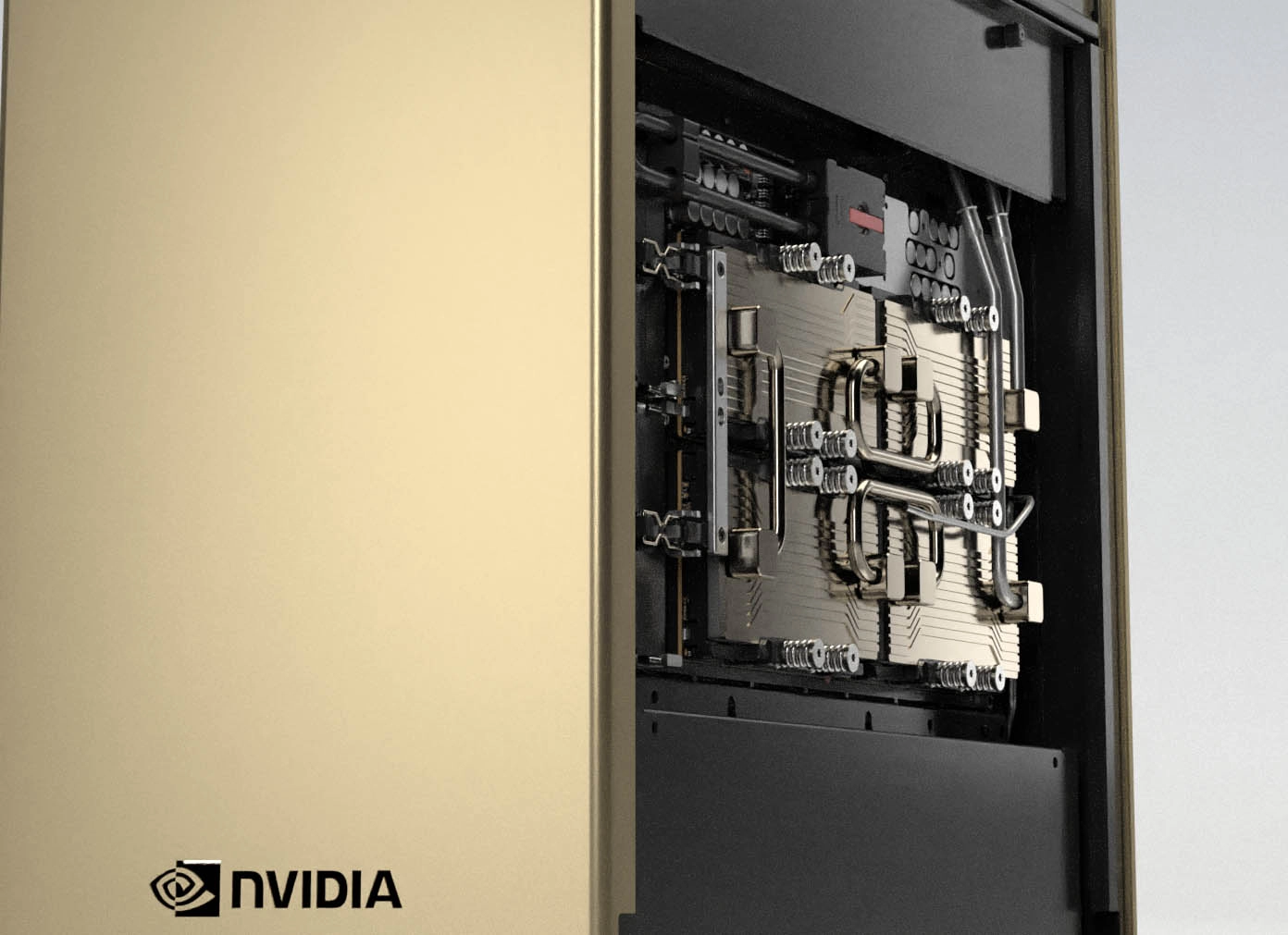

A Closer Look at the NVIDIA DGX Station A100

The DGX Station A100 is being hailed as offering “data center performance without a data center,” meaning that you can plug it into a standard wall outlet. It pairs a 64-core, server-class AMD EPYC processor with 512GB of memory and a 7.68TB NVMe drive. This system (as well as its larger cousin, the NVIDIA DGX A100) supports Multi-Instance GPU (MIG) technology. Because each A100 can be partitioned into up to seven MIG instances, the four GPUs can present up to 28 separate GPU instances to users.

Image source: NVIDIA

What can the DGX Station do for me?

- Supercharge Data Science Teams – Organizations can provide multiple users with a centralized AI resource for all workloads. With Multi-Instance GPU (MIG), it’s possible to allocate up to 28 separate GPU devices to individual users and jobs.

- Data Center Class Performance – The four NVIDIA A100 Tensor Core GPUs provide top-of-the-line, server-grade specs, performance, and accessibility without the need for data center power and cooling.

- Access AI Infrastructure from Anywhere – Designed for today’s mobile workforce, the DGX Station A100 requires no complicated installation or significant IT infrastructure. Simply plug it into any standard wall outlet to get up and running in minutes.

- Solve More Complex Models Faster – With up to 320GB of GPU memory across four MIG-capable NVIDIA A100 GPUs, fully interconnected with NVIDIA® NVLink®, this system can run parallel jobs and serve multiple users without impacting system performance.
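The headline capacity figures above follow from simple per-GPU arithmetic. The sketch below is only an illustration of that arithmetic: the `SystemConfig` class and its field names are hypothetical, and the per-GPU limit of seven MIG instances is taken from NVIDIA's stated A100 specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemConfig:
    """Hypothetical helper for capacity arithmetic; not an NVIDIA API."""
    name: str
    gpu_count: int
    gpu_memory_gb: int
    mig_instances_per_gpu: int = 7  # per-A100 MIG maximum stated by NVIDIA

    @property
    def total_gpu_memory_gb(self) -> int:
        # Aggregate GPU memory across all GPUs in the system
        return self.gpu_count * self.gpu_memory_gb

    @property
    def total_mig_instances(self) -> int:
        # Maximum number of isolated MIG instances across all GPUs
        return self.gpu_count * self.mig_instances_per_gpu


station_40 = SystemConfig("DGX Station A100 (40GB GPUs)", gpu_count=4, gpu_memory_gb=40)
station_80 = SystemConfig("DGX Station A100 (80GB GPUs)", gpu_count=4, gpu_memory_gb=80)

for cfg in (station_40, station_80):
    print(
        f"{cfg.name}: {cfg.total_gpu_memory_gb}GB GPU memory, "
        f"up to {cfg.total_mig_instances} MIG instances"
    )
```

The 80GB configuration yields the 320GB and 28-instance figures quoted in the list; the 40GB configuration has the same instance count with half the memory.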

NVIDIA DGX A100 Gets an Upgrade

The powerhouse behind the new DGX Station is the newly released 80GB version of the A100. NVIDIA also announced that the 80GB variant will power the NVIDIA DGX A100, yielding a 640GB version of the compute platform (eight 80GB GPUs). Furthermore, upgrade options will be made available to current NVIDIA DGX A100 customers who need the extra GPU memory.

Exxact will offer and support the following NVIDIA DGX A100 (640GB) Part Numbers:

- DGXA-2530F+P2CMI00 – NVIDIA DGX A100 System for Commercial or Government institutions (640GB)

- DGXA-2530F+P2EDI00 – NVIDIA DGX A100 System for Educational institutions (640GB)

What You Should Know About the New A100 80GB Version

Per NVIDIA, the A100 80GB retains the many groundbreaking features of the NVIDIA Ampere architecture, including:

- Third-Generation Tensor Cores: Provide up to 20x the AI throughput of the previous Volta generation with the new TF32 format, as well as 2.5x the FP64 performance for HPC, 20x the INT8 performance for AI inference, and support for the BF16 data format.

- Larger, Faster HBM2e GPU Memory: Doubles the memory capacity of the 40GB A100 and is the first in the industry to offer more than 2TB per second of memory bandwidth.

- MIG technology: Doubles the memory per isolated instance, providing up to seven MIG instances with 10GB each.

- Structural Sparsity: Delivers up to a 2x speedup when running inference on sparse models.

- Third-Generation NVLink® and NVSwitch™: Provide twice the GPU-to-GPU bandwidth of the previous-generation interconnect technology, accelerating data transfers to the GPU for data-intensive workloads to 600 gigabytes per second.
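To put the memory figures above in perspective, here is a rough back-of-the-envelope sketch. It assumes ideal peak rates, decimal units (1TB = 1000GB), and the quoted 2TB/s as a floor for memory bandwidth; the function and constant names are hypothetical, and real workloads will not sustain peak bandwidth.

```python
# Figures quoted in the feature list above
MIG_INSTANCES_PER_GPU = 7
MEMORY_PER_INSTANCE_80GB = 10      # GB per MIG instance on the A100 80GB
MEMORY_BANDWIDTH_TB_PER_S = 2.0    # "more than 2TB per second", used as a floor

# "Doubles the memory per isolated instance" implies half as much on the 40GB A100
memory_per_instance_40gb = MEMORY_PER_INSTANCE_80GB / 2


def seconds_to_read(memory_gb: float, bandwidth_tb_per_s: float = MEMORY_BANDWIDTH_TB_PER_S) -> float:
    """Idealized time to stream `memory_gb` once at the given peak bandwidth."""
    return memory_gb / (bandwidth_tb_per_s * 1000)  # assumes 1TB = 1000GB


print(f"Per-instance MIG memory: {memory_per_instance_40gb:g}GB (40GB A100) "
      f"vs {MEMORY_PER_INSTANCE_80GB}GB (80GB A100)")
print(f"Idealized time to sweep 80GB at 2TB/s: {seconds_to_read(80) * 1000:.0f} ms")
```

Under these assumptions, a full pass over the 80GB of HBM2e takes on the order of tens of milliseconds, which is why the bandwidth increase matters alongside the capacity increase.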

Have any questions about NVIDIA products?

Contact Exxact Today

Exxact to Support New NVIDIA DGX Station A100 & NVIDIA A100 80GB Platforms
