NVIDIA held GTC earlier this year in the spring, and now a second GTC brings a new round of announcements. Jensen Huang takes the stage again to introduce NVIDIA’s newest advancements and technologies.

Again and again, Huang connects new technologies to new products and new opportunities, with a strong emphasis on AI. That AI enables never-before-seen graphics and virtual proving grounds where the world’s biggest companies can refine their products. NVIDIA has set out to change the world with its accelerators.

GeForce RTX 40 Series GPUs and Ada Lovelace Generation

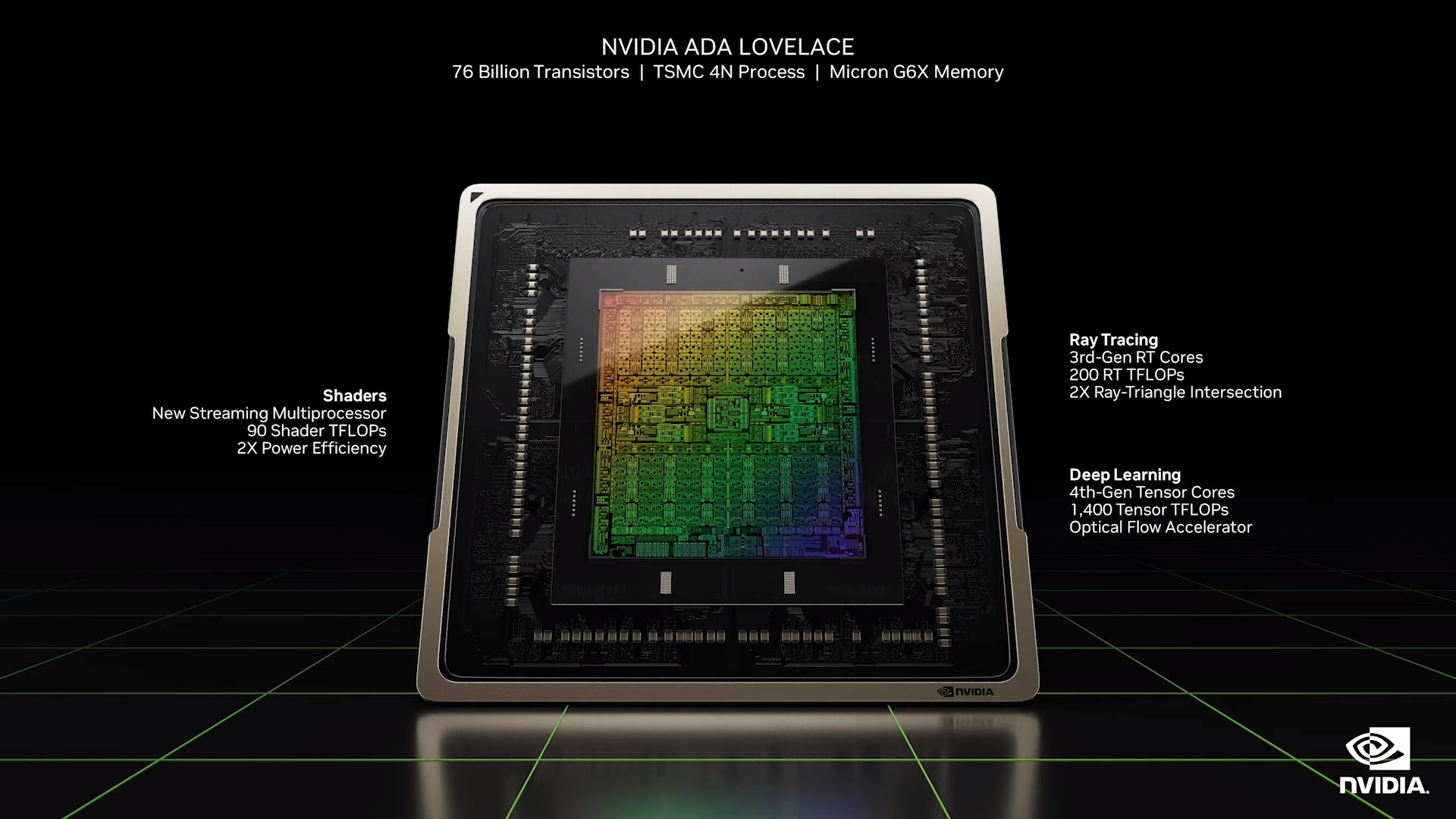

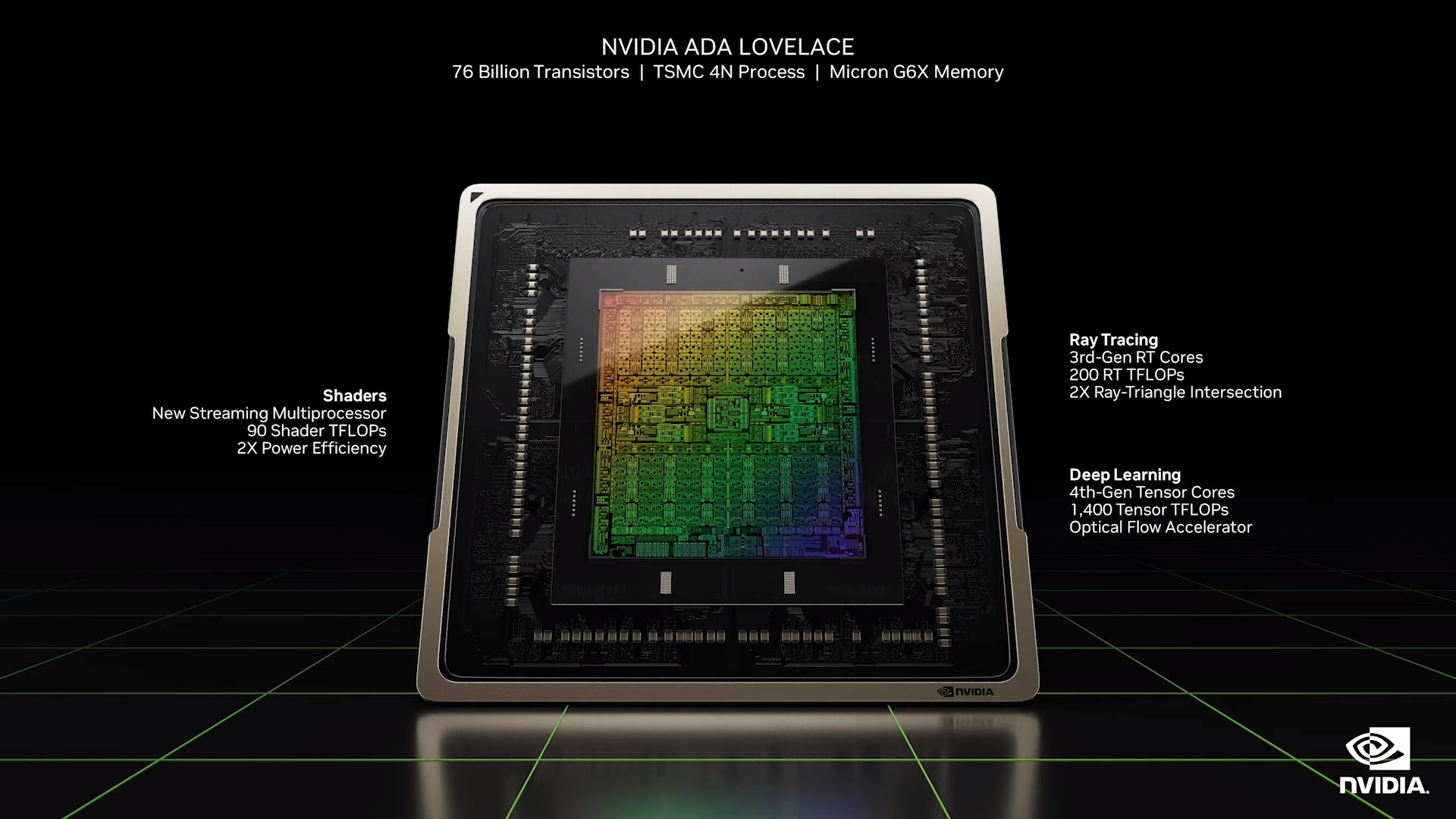

The Ada Lovelace generation of GPUs paves the way for a new lineup of flagship GPUs. The chips are built on TSMC’s 4N process and pack up to 76 billion transistors.

NVIDIA has implemented Shader Execution Reordering to reschedule rendering and ray tracing work on the fly. The new-generation Ray Tracing Cores deliver 2x the throughput, alongside the new Opacity Micromap and Displaced Micro-Mesh engines. With a new Tensor Core that adds the NVIDIA Hopper Transformer Engine and FP8 tensor processing, the Ada Lovelace generation delivers at least double the performance of Ampere.

DLSS 3, or Deep Learning Super Sampling, is a convolutional autoencoder model that lets games render at a low resolution yet display at a high resolution. Its advances over the previous iteration of DLSS make this process far more efficient, relieving both GPU and CPU resources and resulting in higher frame rates.

The GeForce RTX 4090 has 24GB of GDDR6X memory and 16,384 CUDA cores. Built on the Ada Lovelace platform, it performs 2x faster in rasterized games and 4x faster in ray-traced games than the previous champion, the RTX 3090 Ti. It is available October 12th with a base price of $1,599.
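To put the resolution trade-off in numbers, here is a minimal sketch of the pixel arithmetic behind upscaling. The 1080p render and 4K output resolutions are illustrative assumptions rather than figures from the keynote, and the function names are hypothetical.

```python
# Illustrative only: the article does not state which resolutions DLSS 3 uses.
# This just counts pixels to show why rendering low and displaying high saves work.

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given size."""
    return width * height


def upscale_factor(render: tuple[int, int], output: tuple[int, int]) -> float:
    """How many output pixels exist for every pixel the GPU actually renders."""
    return pixel_count(*output) / pixel_count(*render)


render_res = (1920, 1080)   # assumed internal render resolution (1080p)
output_res = (3840, 2160)   # assumed display resolution (4K)

factor = upscale_factor(render_res, output_res)
print(f"Rendered pixels per frame:  {pixel_count(*render_res):,}")
print(f"Displayed pixels per frame: {pixel_count(*output_res):,}")
print(f"Output pixels per rendered pixel: {factor:.1f}")
```

Under these assumed resolutions, the GPU shades a quarter of the pixels it ultimately displays; the rest are reconstructed by the model.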

The GeForce RTX 4080 16GB GDDR6X has 9,728 CUDA cores and is built on the Ada Lovelace platform. With DLSS 3, it performs twice as fast in today’s games as the GeForce RTX 3080 Ti, and it is more powerful than the GeForce RTX 3090 Ti at lower power. It is available in November with a base price of $1,199.

The GeForce RTX 4080 12GB GDDR6X has 7,680 CUDA cores and is built on the Ada Lovelace platform. With DLSS 3, it is faster than the RTX 3090 Ti, the previous-generation flagship GPU. It is priced at $899.
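For a rough side-by-side of the three cards, the sketch below divides each base price by its CUDA core count, using only the launch figures quoted above. Core count alone does not determine real-world performance, so treat this as simple arithmetic on the spec sheet, not a benchmark; the variable and function names are hypothetical.

```python
# Launch figures as quoted in this article: (CUDA cores, base price in USD).
cards = {
    "RTX 4090": (16_384, 1599),
    "RTX 4080 16GB": (9_728, 1199),
    "RTX 4080 12GB": (7_680, 899),
}


def dollars_per_thousand_cores(cores: int, price_usd: int) -> float:
    """Base price divided by CUDA core count, scaled to 1,000 cores."""
    return price_usd / cores * 1000


cost = {name: dollars_per_thousand_cores(*spec) for name, spec in cards.items()}

# Print the cards from lowest to highest price per 1,000 cores.
for name, value in sorted(cost.items(), key=lambda item: item[1]):
    print(f"{name:<14} ${value:6.2f} per 1,000 CUDA cores")
```

By this narrow measure the RTX 4090 has the lowest price per core of the three, even though it has the highest sticker price.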

Omniverse and Digital Twins: Design, Build, Operate.

Building on these advancements in ray tracing, NVIDIA teamed up with Valve to develop Portal RTX, a remaster of the beloved puzzle game. Through NVIDIA Omniverse and RTX Remix, NVIDIA aims to promote creativity with modding tools that enhance game assets, shaders, and more. RTX Remix is billed as the most advanced game modding tool, created to improve the visual appearance and fidelity of the games we love.

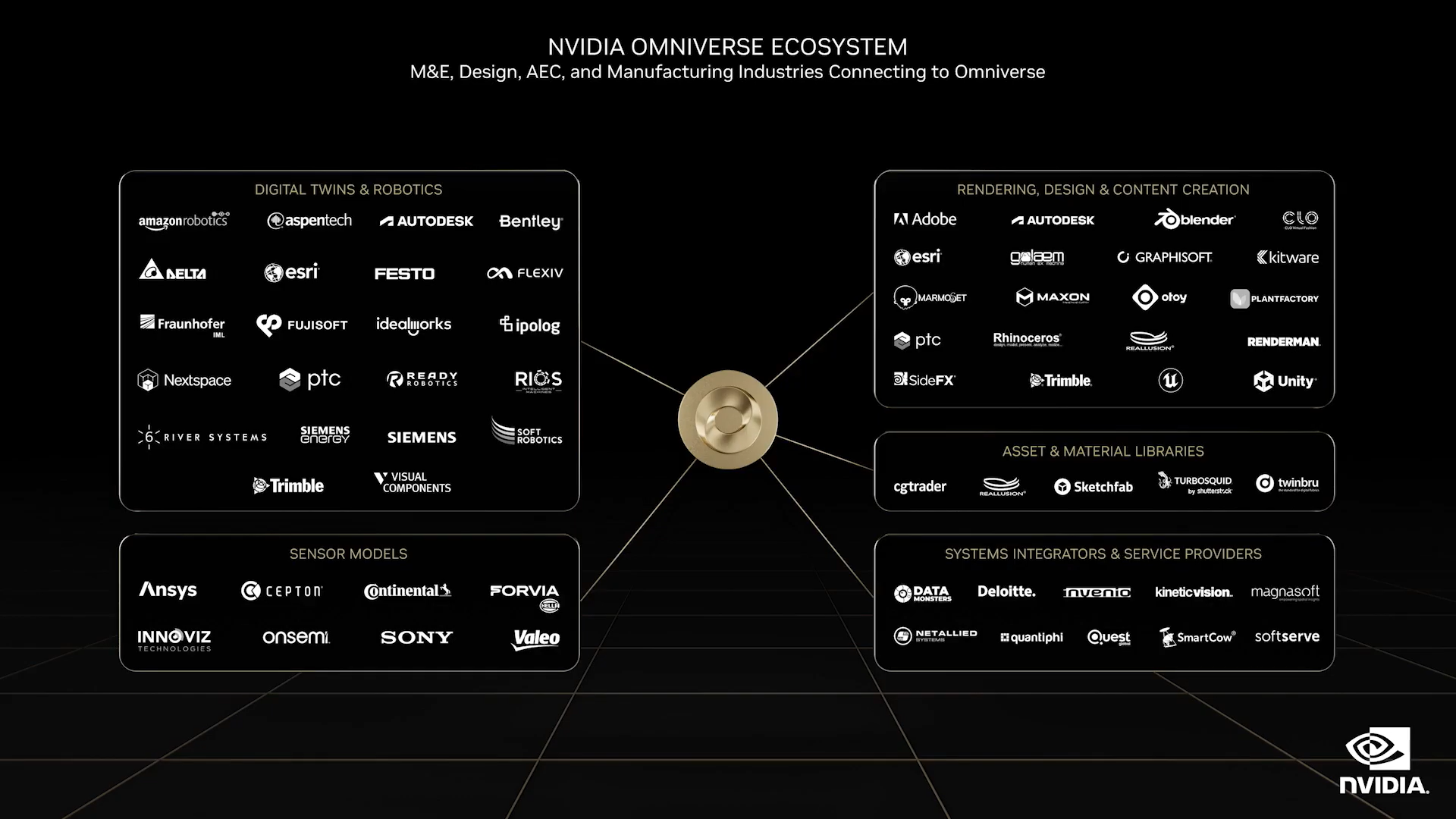

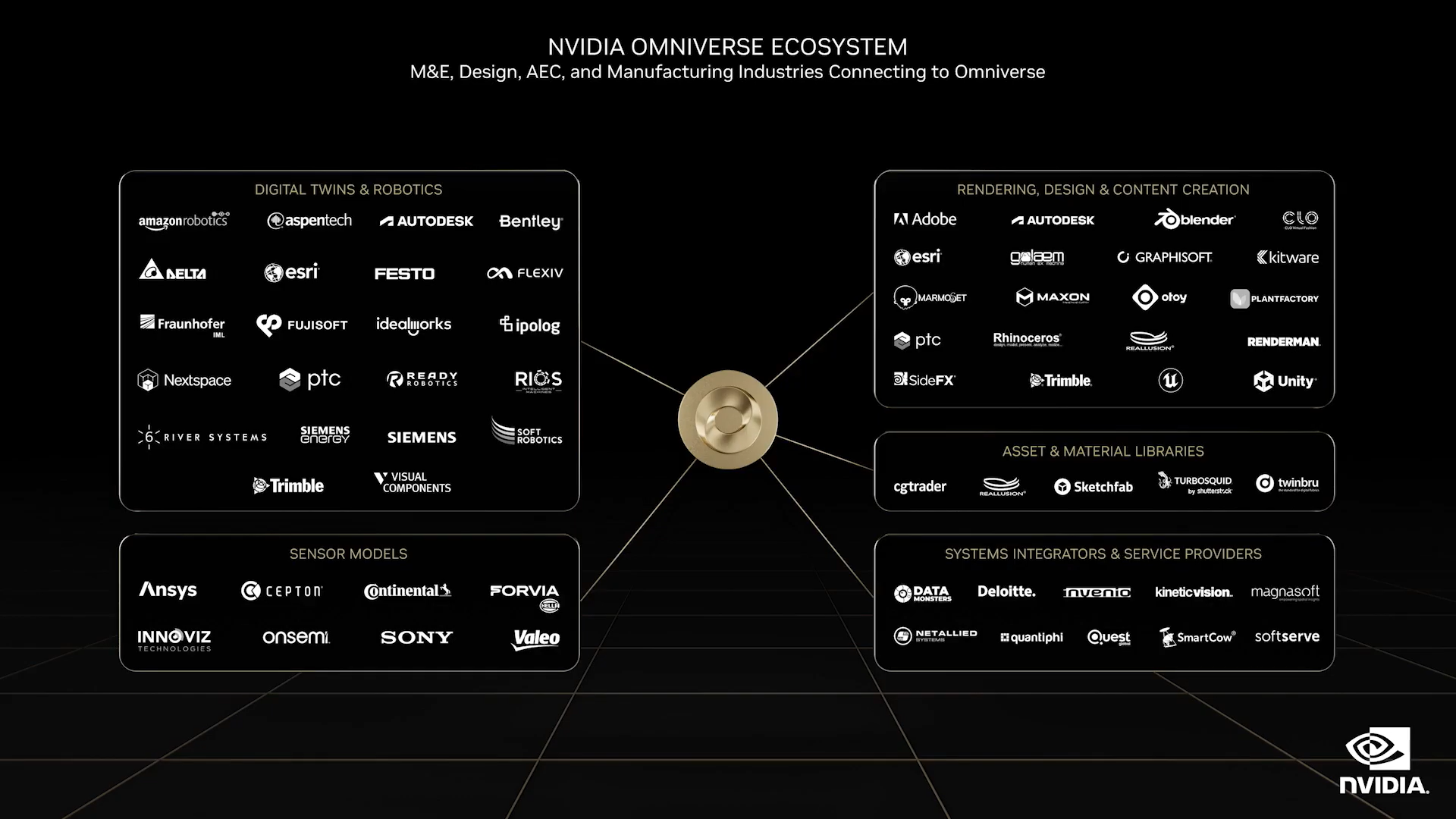

In Huang’s vision, no physical product will be deployed without intensive testing in an Omniverse simulation. Digital twins are being used by KPF, Amazon packing facilities, General Motors factory lines, Lowe’s department stores, and Deutsche Bahn railways to improve efficiency, surface valuable insights, and visualize the physical world digitally. Telecom companies such as Charter, along with HEAVY.AI, use NVIDIA Omniverse to map out wireless networks of 5G microcells and towers and to optimize cell tower placement. Every one of these companies recognizes the power of the digital twin simulation that NVIDIA Omniverse has to offer.

NVIDIA NeMo Large Language Models

Large language models are the most important AI models today. Based on the transformer architecture, these giant models can learn to understand meanings and languages without supervision or labeled datasets, unlocking remarkable new capabilities.

With large models such as OpenAI’s GPT-3 and DALL-E 2, Stable Diffusion, and more, a general AI that understands human language with less human intervention can prove valuable to the advancement of the field. From a single model, these systems can generate images, carry on conversations, translate languages, summarize and generate text, or even write programming code. Models can perform tasks they were never explicitly trained on.

To make it easier for researchers to apply this technology to their work, NVIDIA announced the NeMo LLM Service, an NVIDIA-managed cloud service for adapting pre-trained LLMs to perform specific tasks.

NVIDIA Hopper H100 and Grace Hopper Superchip

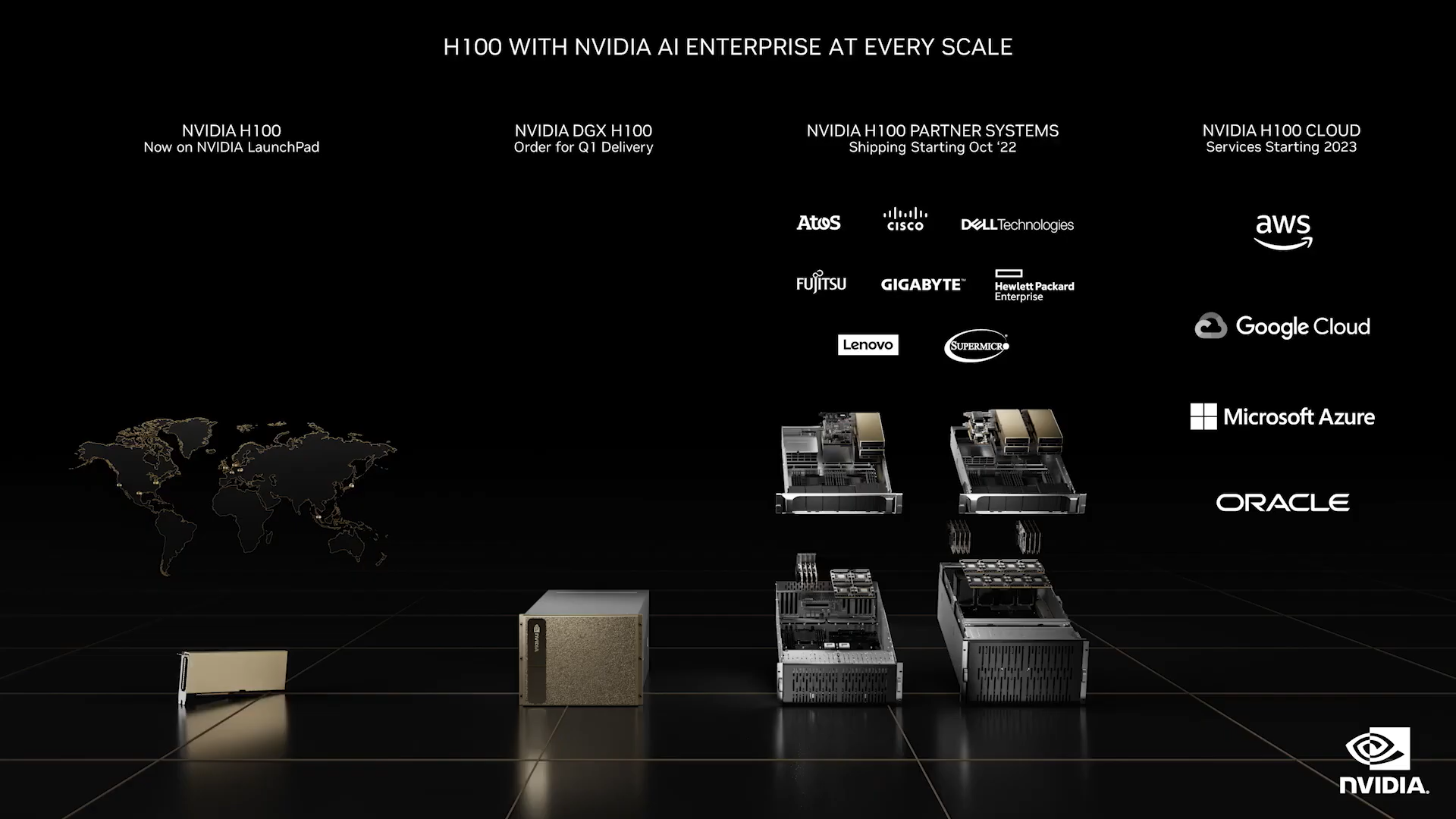

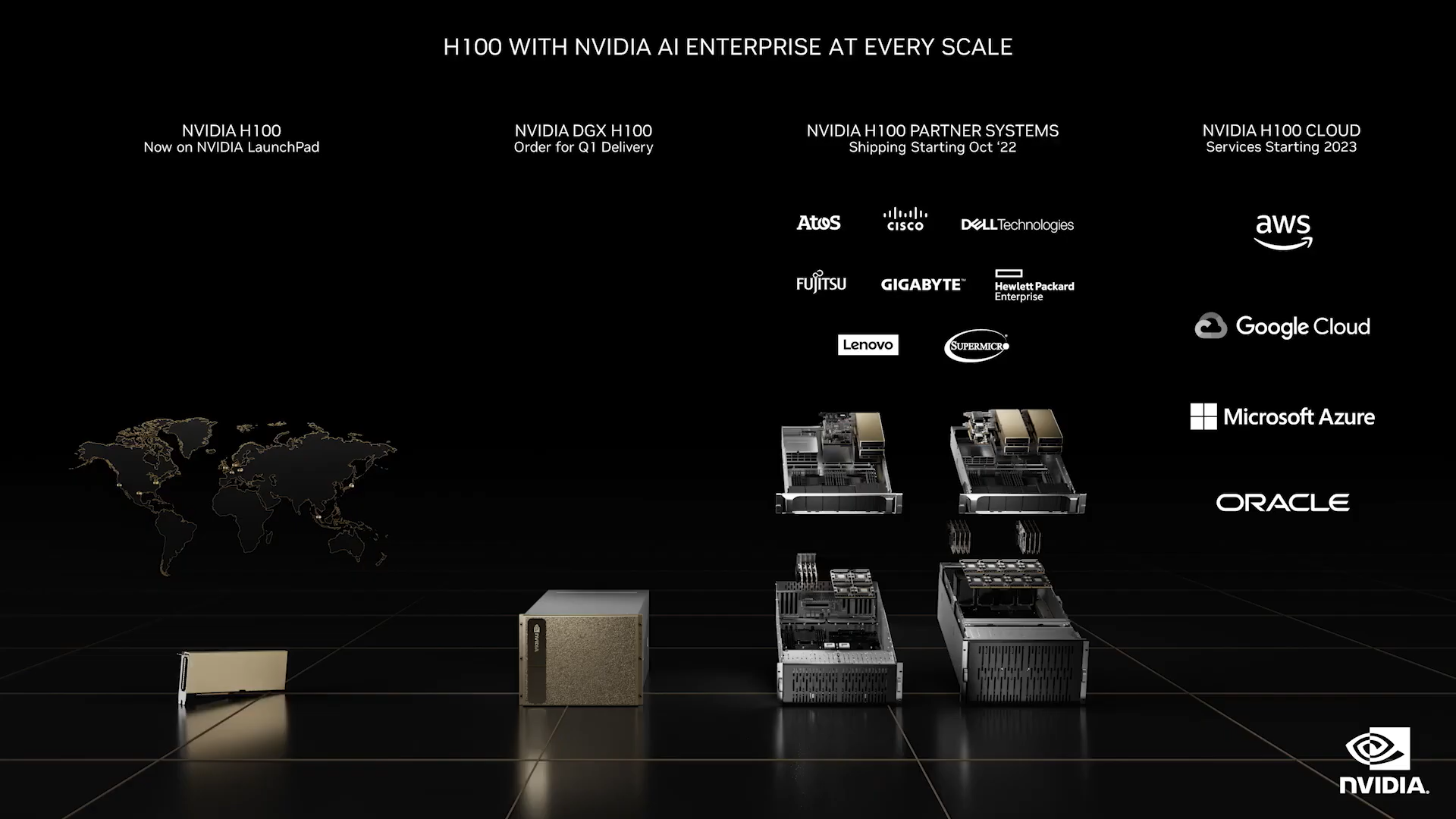

NVIDIA Hopper is NVIDIA’s data center GPU accelerator. It has the throughput to power large language models and delivers the AI performance needed to make these advancements accessible to anyone around the world.

Hopper is in full production and coming to power the world’s AI factories. H100 is currently available on NVIDIA LaunchPad for a test drive. DGX H100 systems ship in Q1 2023, with HGX systems from board partners starting in October 2022. NVIDIA H100 cloud services driven by NVIDIA’s supercomputers will be available in 2023.

The Hopper H100 boasts massive gains over the A100 with an advanced Transformer Engine that can serve over 30,000 people concurrently for inferencing.

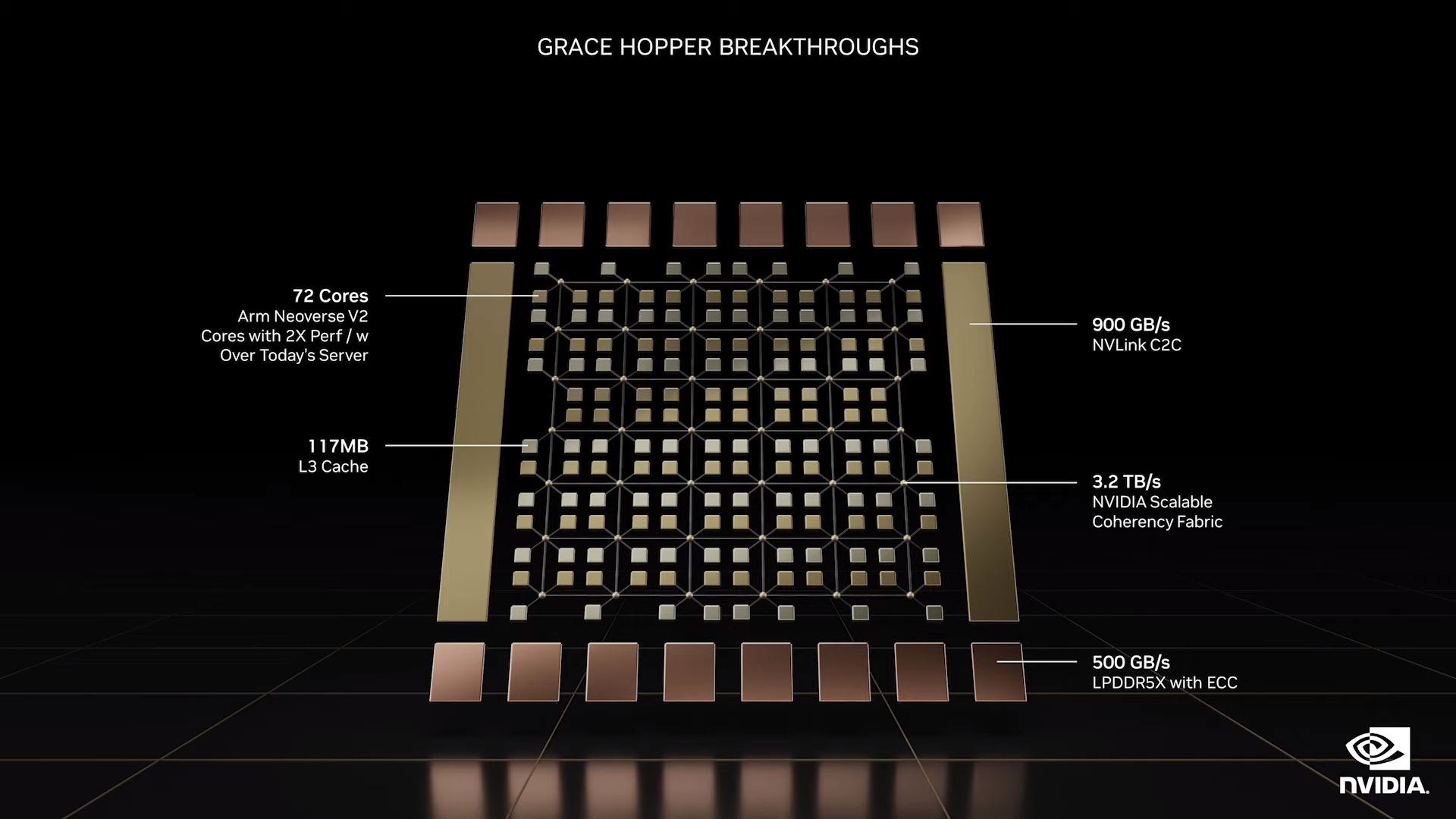

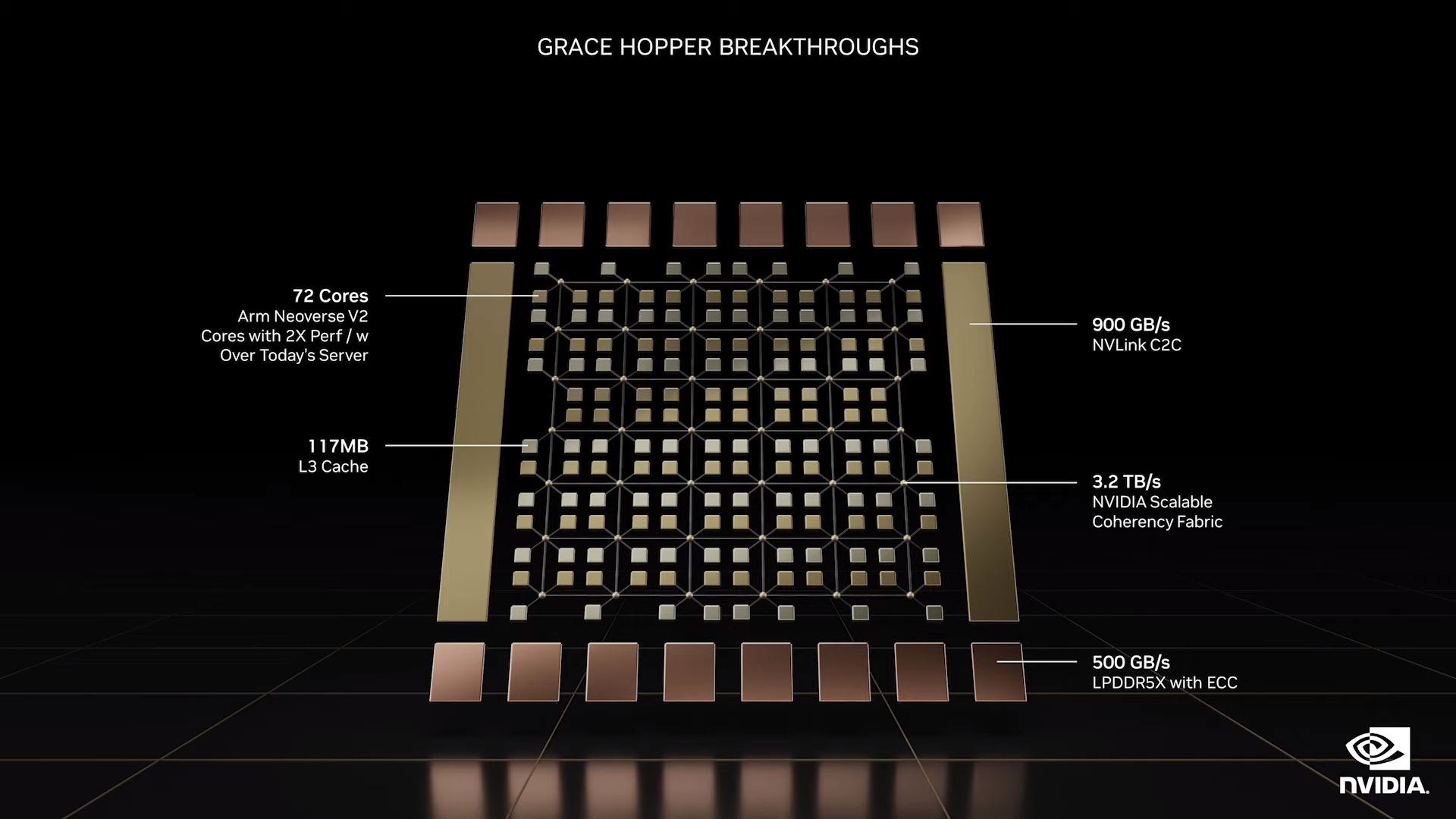

The Grace CPU has 72 Arm Neoverse cores, 117MB of L3 cache, 900GB/s of NVLink C2C bandwidth, and 3.2 TB/s of bisection bandwidth. It is built for LPDDR5X memory, which delivers 1.5x the bandwidth of DDR5 at an eighth of the power.

The Grace Hopper Superchip is NVIDIA’s greatest engineering marvel, combining an extremely dense, Arm-based Grace CPU with its flagship Hopper H100 GPU. Grace Hopper is built for modern data center workloads that process giant amounts of data, such as data analytics, recommender systems, and LLMs.

Grace Hopper solutions will be available in the first half of 2023.
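The LPDDR5X comparison combines two ratios, so it can help to work out what they imply together. The sketch below takes the quoted figures literally (1.5x the bandwidth at one eighth of the power) and computes the resulting bandwidth per watt relative to DDR5; the function name is hypothetical, and real-world efficiency will depend on the workload.

```python
from fractions import Fraction


def relative_bandwidth_per_watt(bandwidth_ratio: Fraction, power_ratio: Fraction) -> Fraction:
    """Bandwidth-per-watt of one memory type relative to a baseline.

    Both arguments are ratios against the same baseline (here, DDR5).
    """
    return bandwidth_ratio / power_ratio


# Figures as quoted in the article, taken at face value.
lpddr5x_bandwidth = Fraction(3, 2)   # 1.5x the bandwidth of DDR5
lpddr5x_power = Fraction(1, 8)       # one eighth of the power of DDR5

efficiency = relative_bandwidth_per_watt(lpddr5x_bandwidth, lpddr5x_power)
print(f"LPDDR5X bandwidth per watt relative to DDR5: {efficiency}x")
```

Taken at face value, the two claims multiply out to 12x the bandwidth per watt.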

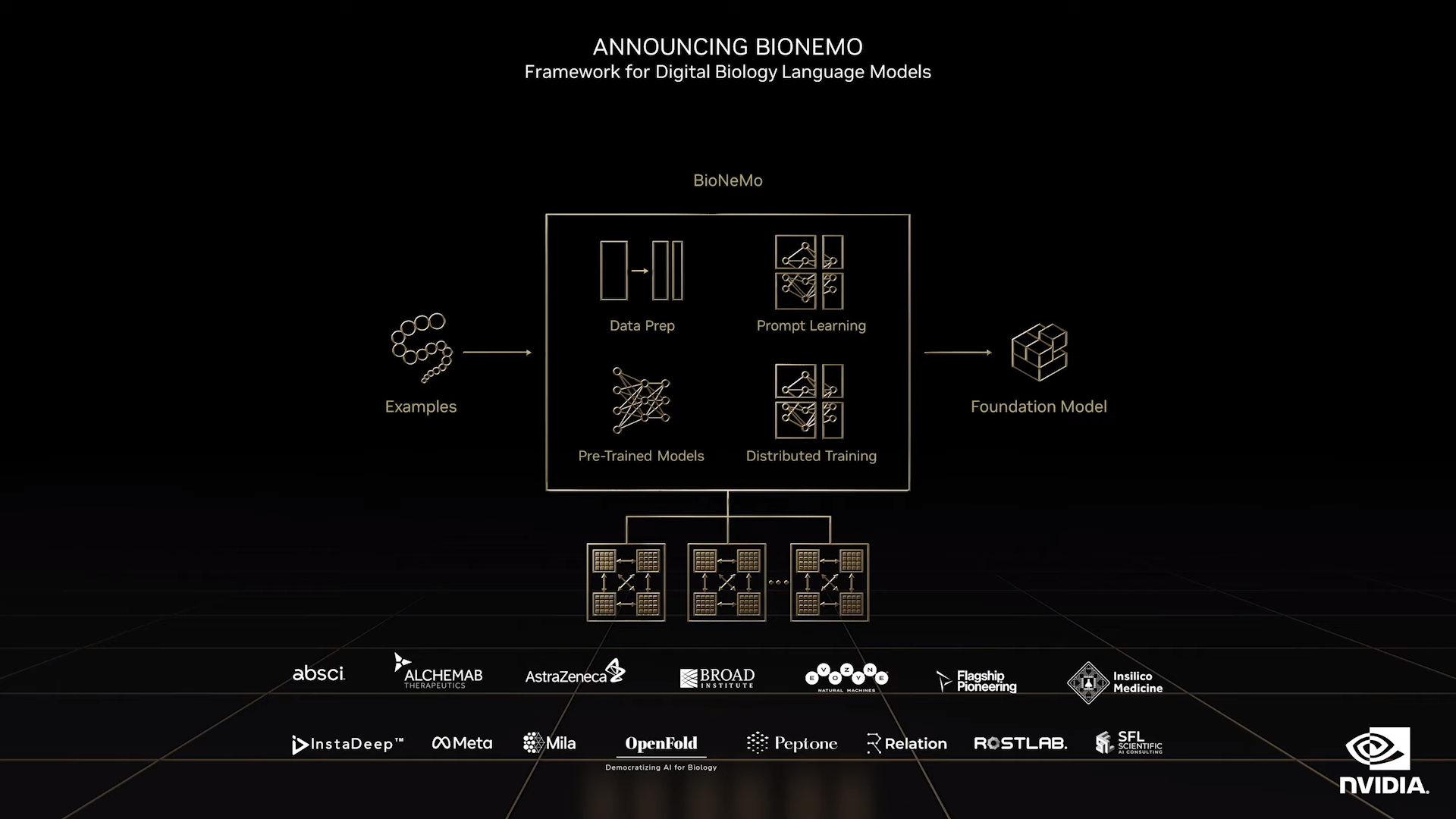

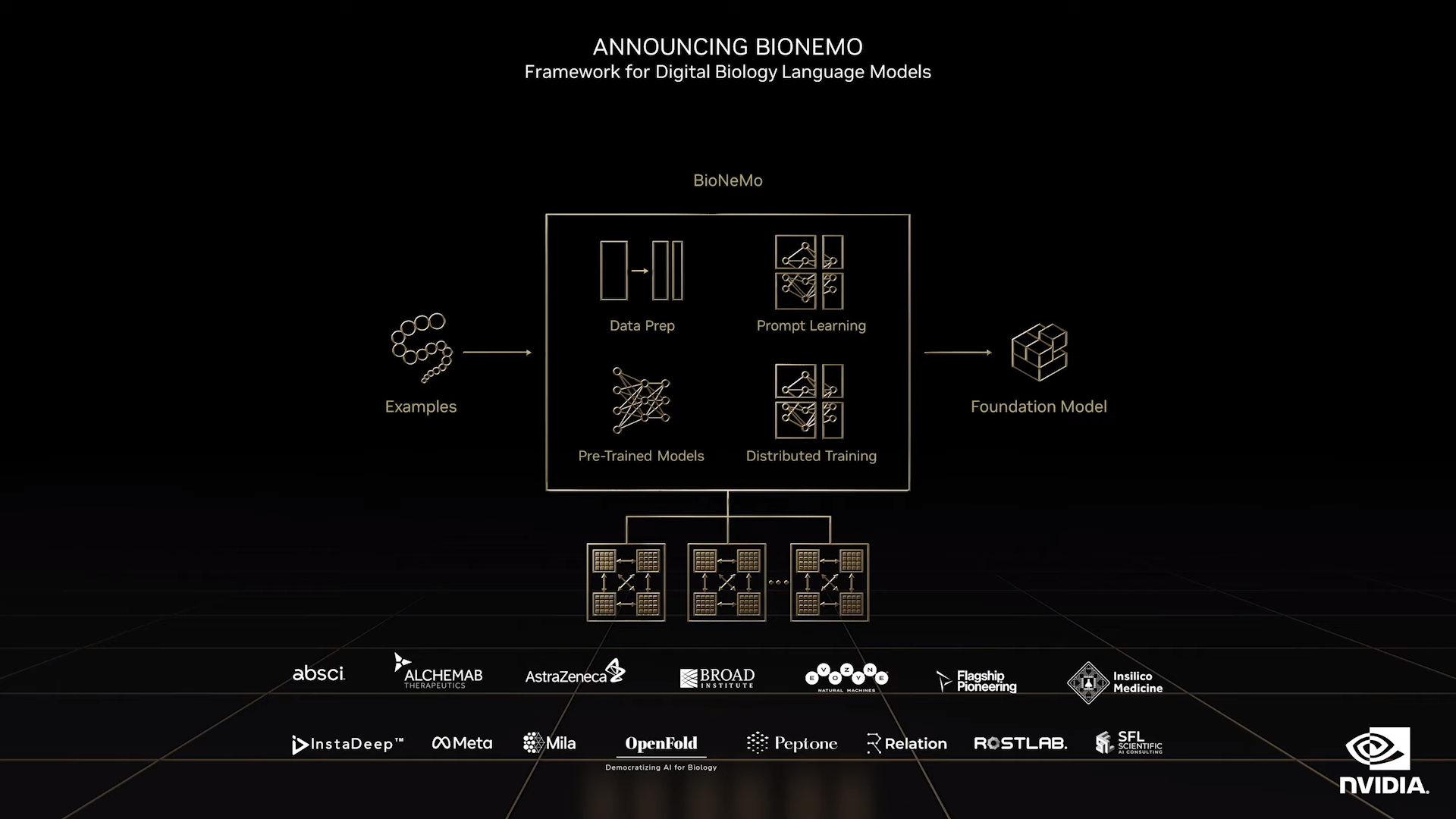

The Language Model for Biochemistry BioNeMo

If AI transformer engines and LLMs can be trained to understand human language, what’s to say they can’t learn the language of science? NVIDIA BioNeMo is an LLM service and digital biology framework for building models that can understand chemicals, proteins, DNA, and RNA sequences.

BioNeMo includes pretrained models such as ESM-1, ProtT5, and MegaMolBART, and can be used for both upstream and downstream tasks such as generating molecules or predicting structure, function, or reaction properties.

NVIDIA Omniverse Cloud Computer

Huang explained how connecting and simulating these worlds will require powerful, flexible new computers, and NVIDIA OVX servers are built for scaling out metaverse applications. With a cloud for Omniverse, computers around the world, including edge devices, can tap the power of Omniverse. By combining RTX computers, OVX servers interconnected through Omniverse Nucleus, and the GDN (graphics delivery network) for streaming high-resolution, high-fidelity graphics, the world can effectively be encompassed by an Omniverse computer that spans the entire planet.

NVIDIA’s 2nd-generation OVX systems will be powered by Ada Lovelace L40 data center GPUs, each with 48GB of memory. The Ada Lovelace L40 is now in full production. A single OVX server will house 8 L40s and be able to process extremely large Omniverse virtual worlds and simulations.

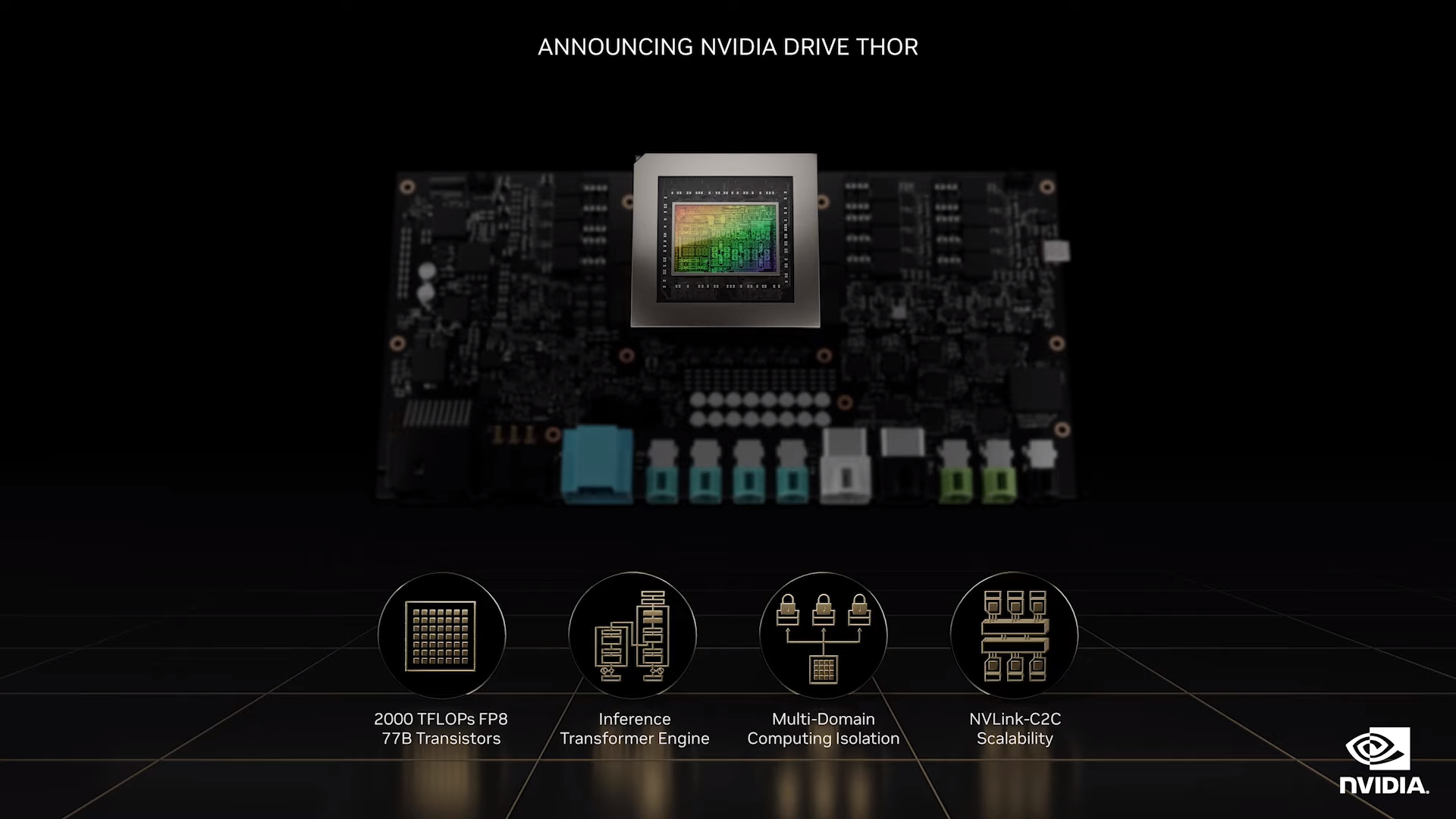

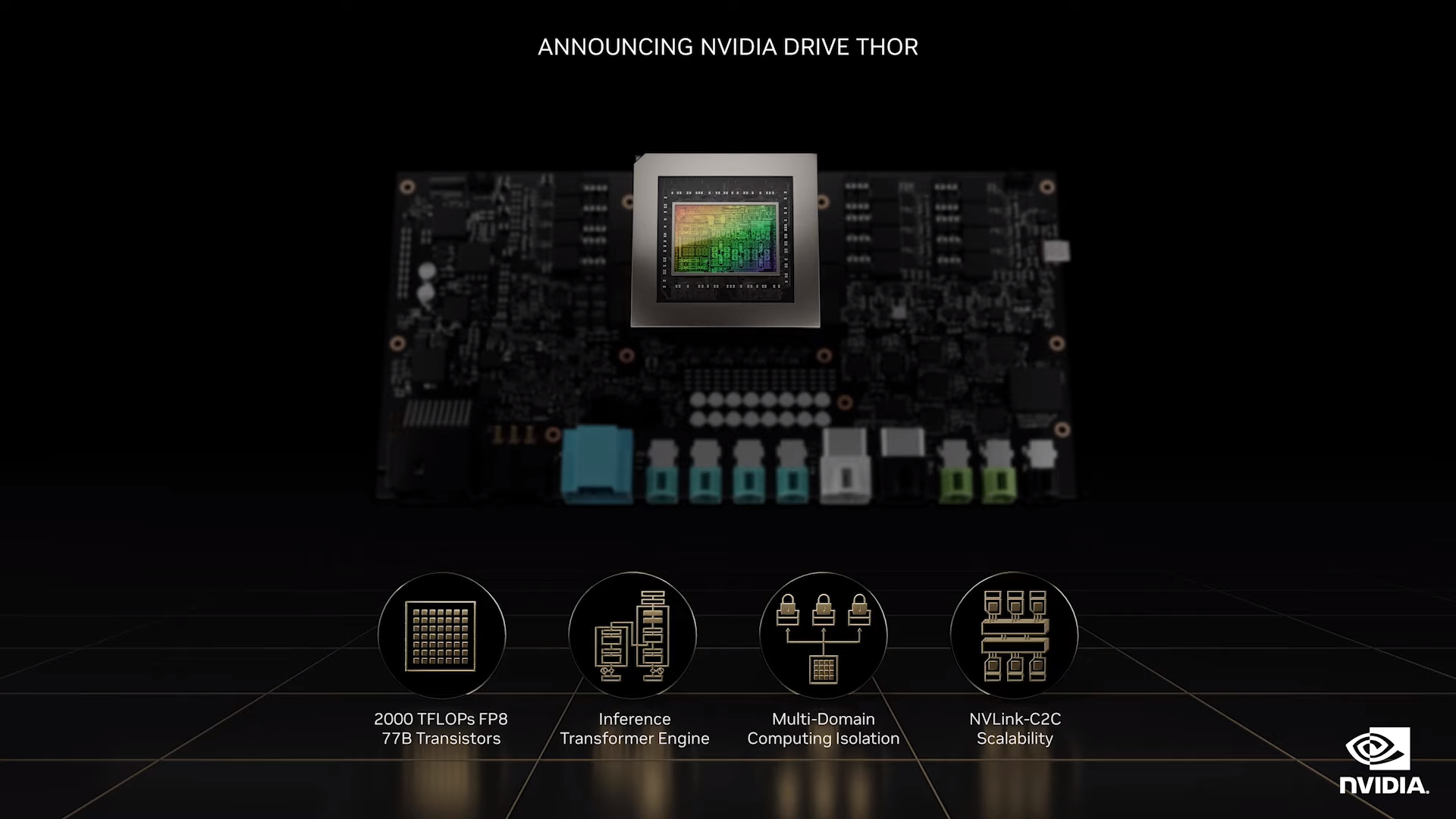

NVIDIA Thor Autonomous Vehicles, Robotics, and Medical Instruments

The new Thor superchip delivers 2,000 TOPS of performance, replacing Atlan on the DRIVE roadmap. It has 77 billion transistors and marks a major leap over DRIVE Orin, which delivers 254 TOPS and is currently in production vehicles. Thor will also be the processor for robotics, medical instruments, industrial automation, and edge AI systems.

It will replace the multiple chips a vehicle needs today and consolidate them into a single SoC. This means less silicon per car and a transition to software-based functions that can be updated over time. A consolidated chip also delivers a more interconnected understanding of the vehicle’s surroundings for AI advancements in cameras, motion sensors, lane detection, object detection, and more.

NVIDIA DRIVE Sim supports autonomous driving development with Earth-scale scene reconstruction, powered by a Neural Reconstruction Engine. An AI pipeline constructs 3D scenes from recorded sensor data, producing a digital world in which assets can be created manually or automatically by AI. This makes it possible to create simulation scenarios on a global scale and train the AI on real-world situations. Recorded data can now be turned into fully reactive, modifiable simulation environments to train autonomous vehicles more accurately.

The NVIDIA DRIVE central car computing platform is fueled by the Hyperion autonomous vehicle system, NVIDIA Omniverse, and NVIDIA AI. It comes with a slew of applications, including Replicator and sim-ready assets, DRIVE Sim, DRIVE Map, an active-safety stack, pre-trained models, and the Orin AI computer.
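To gauge the size of the jump from Orin to Thor, the quoted TOPS figures can simply be divided. This is a minimal sketch using only the two numbers above; the names are hypothetical, and the two figures may not be measured at the same numeric precision, so the ratio is a headline comparison and not a benchmark.

```python
# Headline performance figures as quoted in the article.
THOR_TOPS = 2000
ORIN_TOPS = 254


def generational_ratio(new_tops: float, old_tops: float) -> float:
    """Ratio of quoted peak TOPS between two chips."""
    return new_tops / old_tops


ratio = generational_ratio(THOR_TOPS, ORIN_TOPS)
print(f"Thor's quoted TOPS are about {ratio:.1f}x Orin's")
```

On paper, that puts Thor at a little under eight times the quoted throughput of the chip it succeeds.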

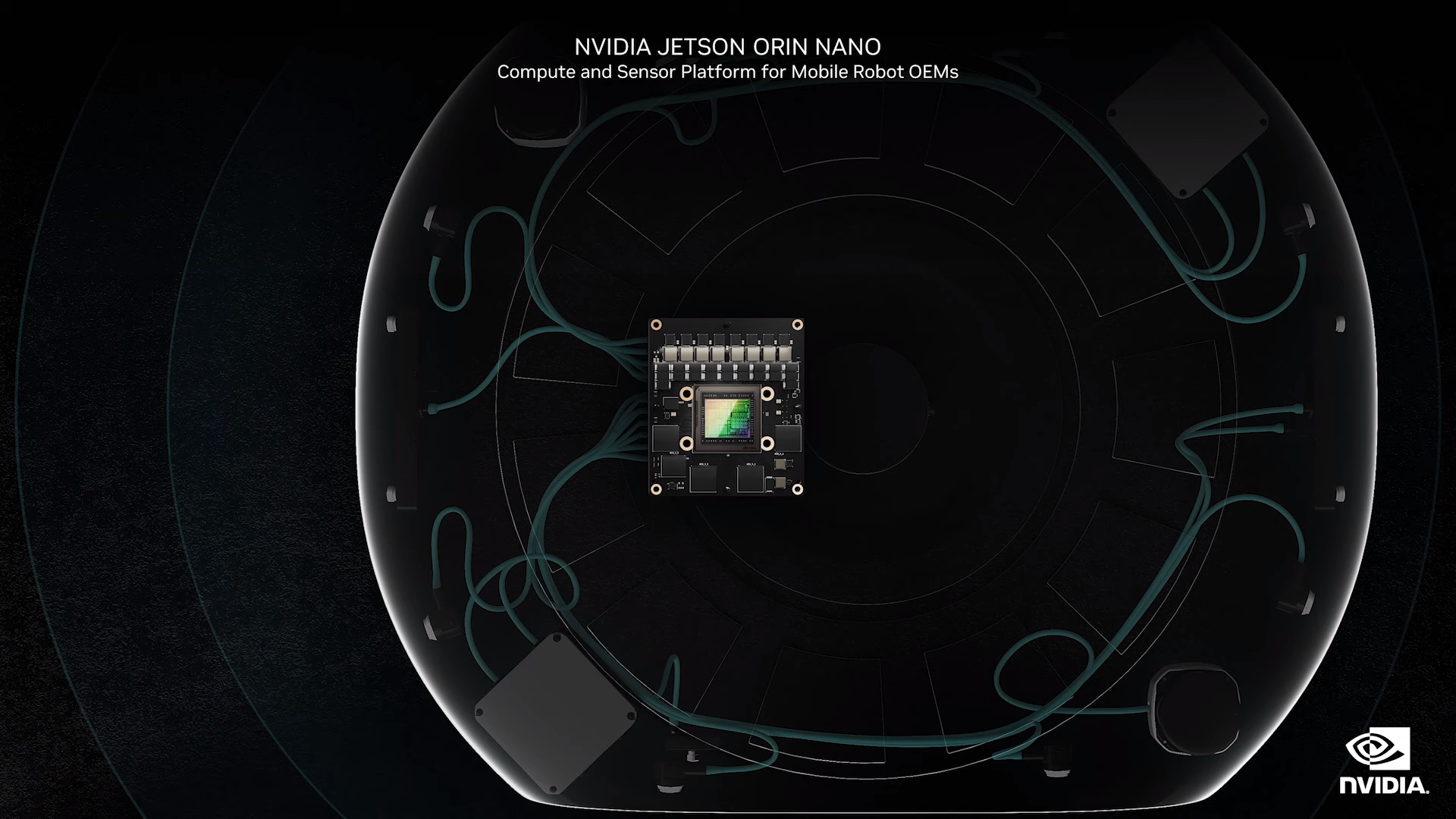

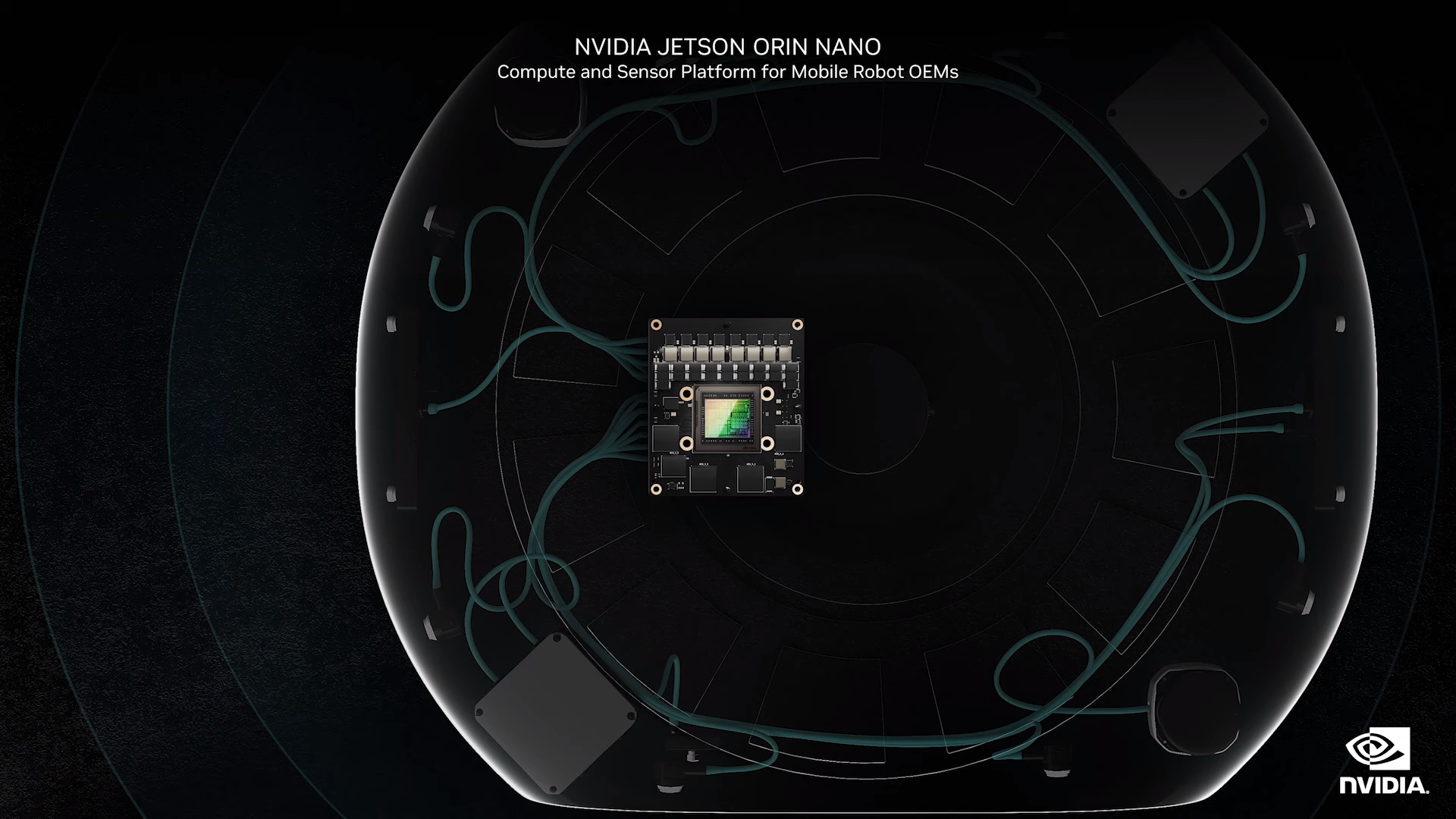

Robotics and Jetson Orin Nano

NVIDIA Isaac is the platform for AMRs (autonomous mobile robots), small autonomous robots that perform tasks in warehouses and factories. They are essentially autonomous cars that operate in unstructured areas: factories, distribution centers, automated cleaning, roaming security, and last-mile delivery. These robots must be able to analyze environments, make decisions, move along paths that are not predetermined, and operate in unstructured environments. NVIDIA DRIVE Orin is the computing engine of choice for autonomous vehicles and robotics, selected by over 40 companies.

Because NVIDIA Thor is not slated to arrive until 2024, NVIDIA DRIVE Orin will continue to be updated. To bring Orin to more markets, NVIDIA announced the Jetson Orin Nano, a tiny robotics computer that is 80x faster than the previous, hugely popular Jetson Nano. NVIDIA Isaac will also be available in the cloud via AWS.

NVIDIA Isaac consists of useful tools like sim-ready assets, NVIDIA Replicator for data, pre-trained models for easy start-up, Isaac ROS for AI vision, and cuOpt for fleet and route assignment.

With NVIDIA Isaac, Jensen Huang emphasizes the value of making dynamic, data-driven decisions in factory operations. By mapping physical worlds into digital twins, factory optimizations can be evaluated on NVIDIA OVX across thousands of generated environment variations.

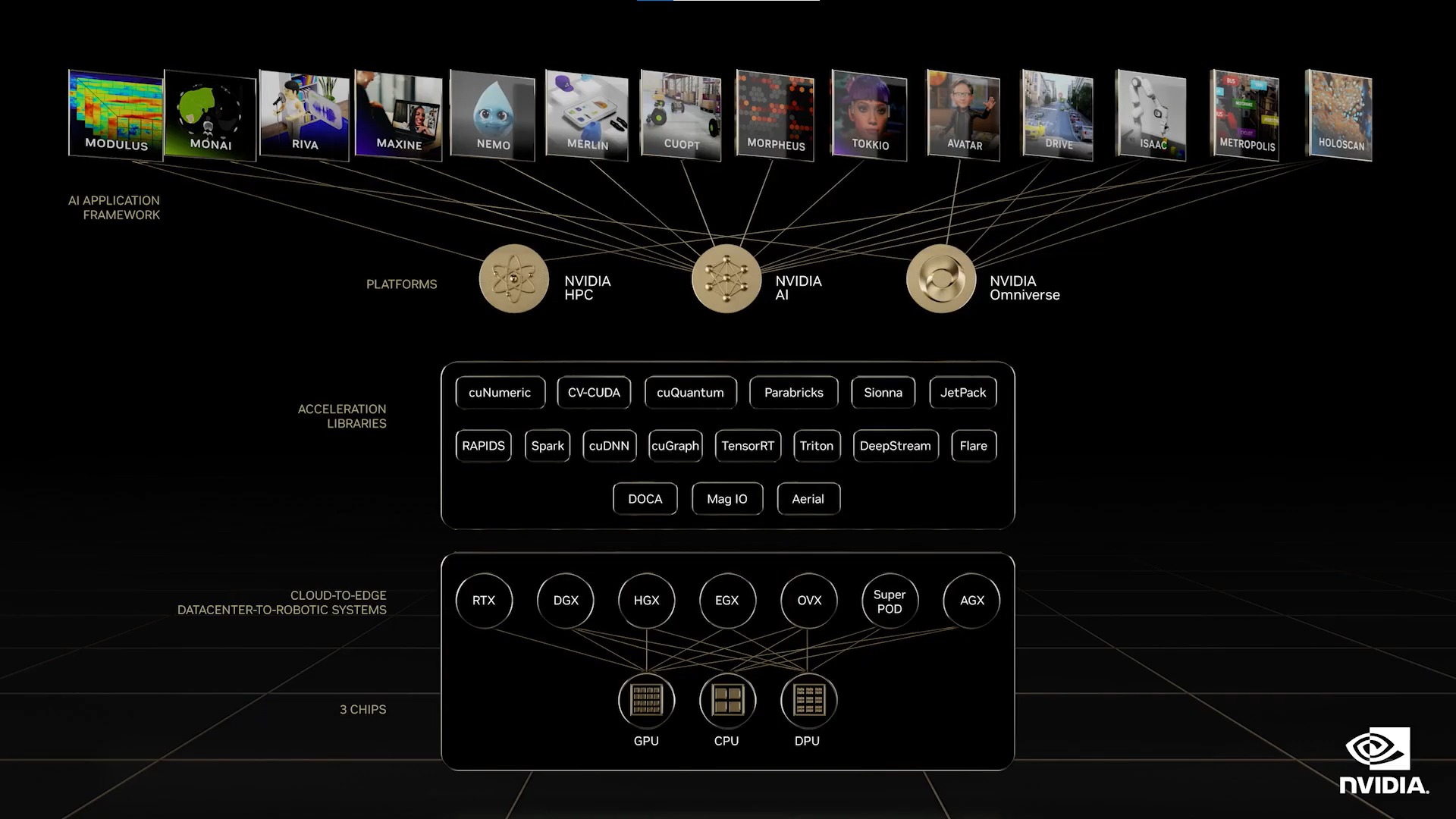

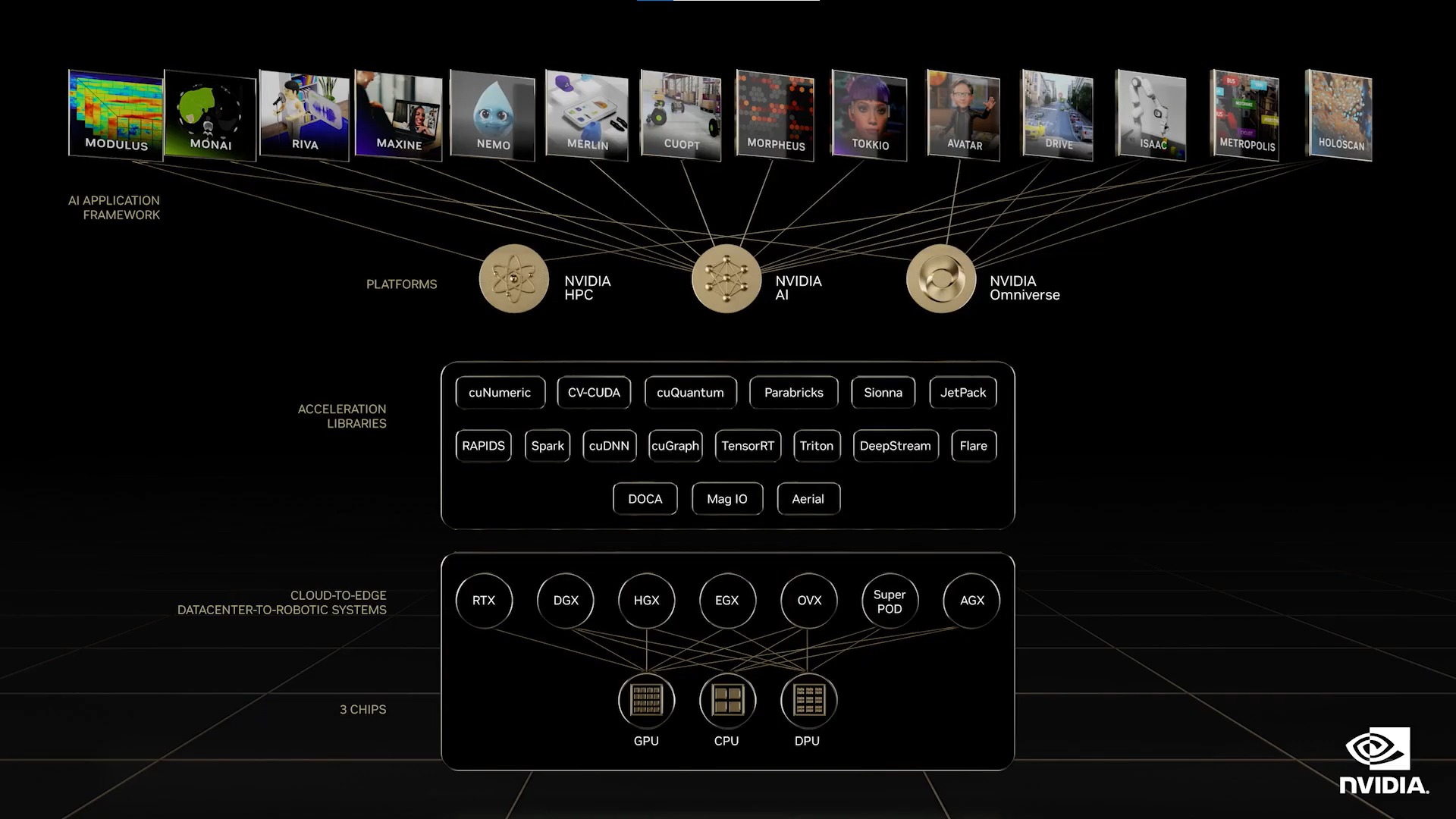

NVIDIA RAPIDS: 3.5 Million Users, 3000 Applications and Counting

NVIDIA’s systems, silicon, and advancements in accelerated computing deliver exceptional performance to industries around the world. They are backed by a software ecosystem of more than 3.5 million developers and 3,000 accelerated apps built with NVIDIA’s 550 software development kits (SDKs) and AI models.

And it’s growing fast. Over the past 12 months, NVIDIA has updated more than 100 SDKs and introduced 25 new ones. New updates enhance performance, and new frameworks enable solutions to new challenges.

NVIDIA RAPIDS is an open-source suite of SDKs that accelerates data science and data engineering. It processes DataFrames, SQL, arrays, machine learning, and graph analytics, cutting workloads from days to minutes.

More than 25% of the world’s top companies use frameworks and SDKs within NVIDIA RAPIDS, and there are over 100 integrations with RAPIDS. RAPIDS is expanding: over 10 million Python developers on Windows can now access NVIDIA RAPIDS through WSL. Furthermore, RAPIDS is now supported on Arm-based server architectures.

Interactive AI Models, Generations, and Avatars

Video is now the most consumed form of media, spanning webinars, conferences, and communications. Avatars are now built with LLMs combined with computer vision, speech AI, and language processing to deliver seamless interaction between the digital and real worlds. NVIDIA develops these AIs through acceleration libraries and cloud runtime engines called UCF (Unified Computing Framework) and Omniverse ACE (Avatar Cloud Engine).

Isaac Sim enables AI models for factory machines, such as cranes, stocking machines, and assembly machines, to train at extremely accelerated speeds. NVIDIA is on a roll when it comes to AI, and it won’t stop to look back.

Conclusion

NVIDIA has a lot up its sleeve. Its business model is no longer just about delivering high-performance GPUs to accelerate your graphics; it is about equipping the world’s smartest developers to solve the world’s most challenging problems.

From autonomous vehicles and conversational AI to LLMs and medical imaging devices, NVIDIA is going all in on artificial intelligence and its capabilities to change how humans interact with the real world.

It's all about optimization, efficiency, and innovation.

Have any questions?

Contact Exxact Today!

Watch the NVIDIA GTC 2022 Keynote here.
