Introduction

At NVIDIA GTC 2023, Jensen Huang emphasized the significance of the slowdown in Moore's Law, which has been the driving force behind computing power, while also acknowledging the rapid advancement of computing. This year has marked a new era in technology with the mainstream adoption of Artificial Intelligence.

NVIDIA has played a pivotal role in this technological revolution by developing and widely adopting AI while providing the necessary hardware and software to develop these models. As an example, ChatGPT relies on NVIDIA's data center accelerators to provide remarkably quick responses to our queries. It started with CUDA, then with DGX, and now with accelerators designed for various AI use cases.

We want to go over some highlights and announcements Jensen addressed at GTC 2023.

NVIDIA Advances Chipmaking and Lithography

NVIDIA announces cuLitho, a new tool to help optimize one of the major steps in the design of modern processors for major chip fabs like TSMC, ASML, and Synopsys. Creating patterns and masks used for the latest lithography processes is highly complex to create one stencil mask for a single CPU die.

With cuLitho a single reticle, which takes upwards of two weeks, can now be processed in eight hours running on 500 DGX systems compared to 40,000 CPU servers, cutting power costs from 35 megawatts (MW) to just 5MW. Accelerated computing contributes to reducing prototype cycle time, increasing throughput, and reducing carbon footprint while preparing for even more complex lithography for more capable silicon.

This not only impacts the very few chip fabs around the world but the entire economy built on technology. Without advancements in the computer chip manufacturing industry, the cost only increases. By reducing the time it takes to make current chips and continuing to make innovations for all silicon faster, more efficient, and increasingly cost-effective.

Grace CPU

The Grace CPU is comprised of 72 Arm cores that are interconnected by a high-speed on-chip coherent fabric, which delivers an impressive 3.2 TB/sec. By connecting two Grace CPUs, the Grace Superchip is created, which features enhanced LPDDR, or low-power RAM, found in smartphones. This configuration boasts a bandwidth of 1TB/s at 1/8th of the power. Grace is incredibly power-efficient, which enables slim form factor air cooling. With two Grace Superchips (4 Grace) in a single 1U air-cooled server, both performance and efficiency can be achieved at the same time.

NVIDIA's latest offering, the Grace CPU, has been specifically designed for high-throughput AI workloads that cannot be processed through parallelized computing on GPUs. This CPU is built for cloud data centers and is highly efficient in terms of TDP.

Even though the Grace CPU prioritizes power efficiency, it is still 1.3 times faster than the average x86 chips and 1.7 times more power efficient. Although Huang did not specify which CPUs, these Grace CPUs are definitely going to pack a punch.

NVIDIA DGX H100

Announced last year, NVIDIA DGX H100 has been unveiled and is in full production. Superseding DGX A100, the DGX H100 is NVIDIA’s new AI engine of the world. With ChatGPT being powered by the DGX-1 built back in 2016, NVIDIA has come a long way in providing accelerators to power the world's most innovative developments.

Jensen reaffirms the capabilities of DGX as an AI supercomputer and the blueprint for developing large and complex models. While previous DGX geared more towards research and development, the advancements in H100, such as an added transformer engine, enable around-the-clock operations and inferencing; one giant GPU to process data, train models, and deploy innovations. Microsoft Azure will be one of the first to leverage full-scale NVIDIA DGX H100 in their cloud data center for professionals to develop and compute on.

And to put these supercomputers in the hands of every corporation, NVIDIA launches NVIDIA DGX Cloud with Oracle Cloud Infrastructure (OCI). Access NVIDIA’s most powerful systems instantly from a browser via NVIDIA AI Enterprise, their most complete suite of AI libraries, enabling end-to-end training and deployment for anyone.

Speeding Deployment with New Platforms

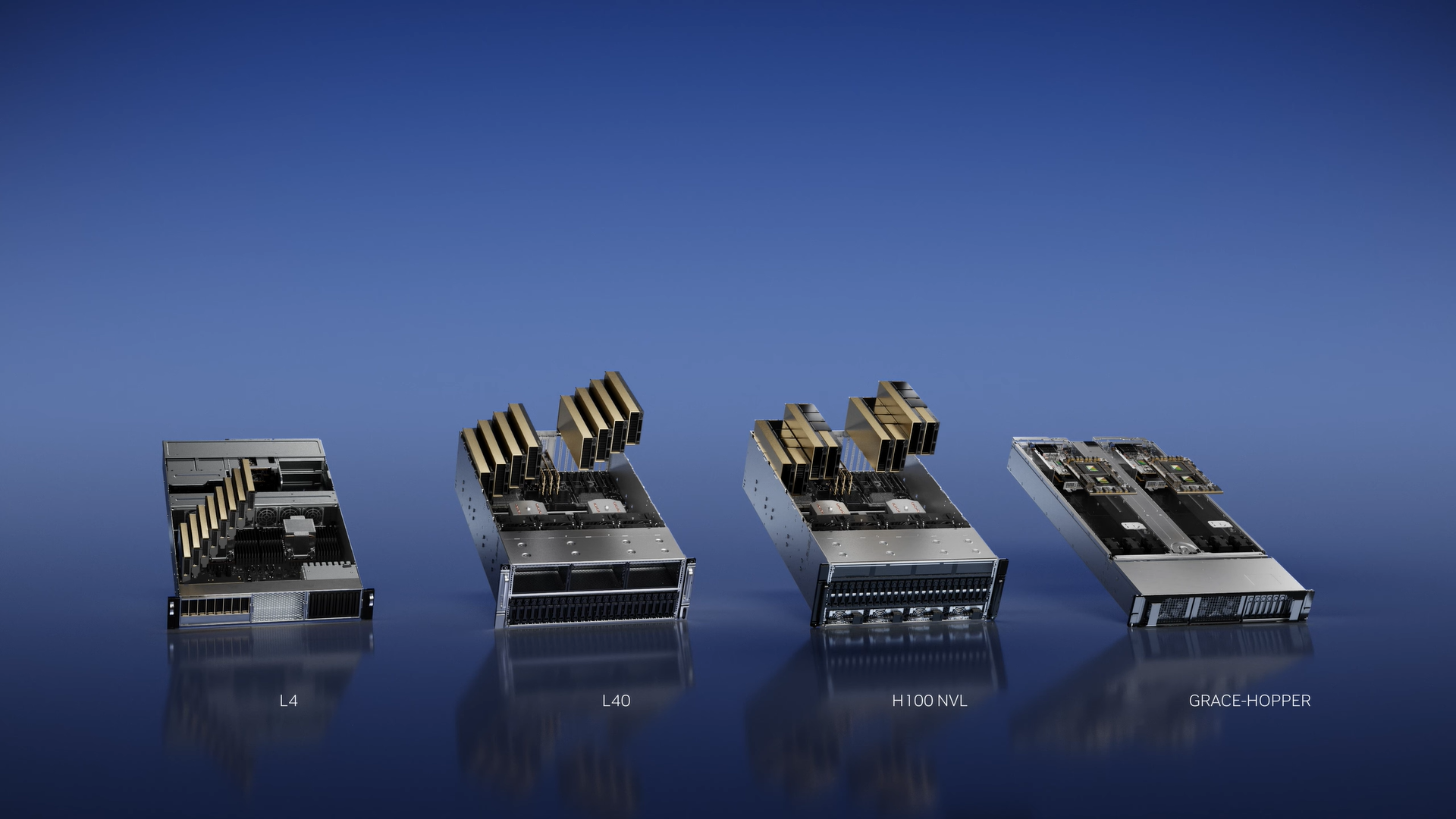

To drive the rapidly emerging generative AI models built by startups and tech companies alike, Huang announced inference platforms for AI video, image generation, LLM deployment, and recommender inference. They combine NVIDIA’s full stack of inference software with the latest NVIDIA Ada, Hopper, and Grace Hopper processors — including the NVIDIA L4 Tensor Core GPU and the NVIDIA H100 NVL GPU, both launched today.

NVIDIA L4

NVIDIA L4 for AI Video can deliver 120x more AI-powered video performance than CPUs, combined with 99% better energy efficiency. Most cloud video is processed on CPUs, a very compute-intensive task for the processors in charge of serial and complex tasks. An 8-GPU L4 server can replace over 100 dual-socket CPU servers for AI video.

These GPU servers are utilized by companies like Snap for AV1 video processing, generative AI, and AR. Cloud services that ingest large amounts of video data can leverage the computing prowess of GPUs.

NVIDIA L40

NVIDIA L40 was previously announced as the engine of NVIDIA Omiverse designed for Image Generation optimized graphics for AI-enabled 2D, video, and 3D image generation. Generative AI text-to-image and text-to-video can be powered to drive creative discovery for Omniverse creators.

AI tools can be used to assist the development of various projects without needing to start at ground zero. Revolutionizing the creative process with prompting to perform changes like adding backgrounds, applying special effects, or removing unwanted imagery with a single click.

NVIDIA H100 NVL

NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale. While NVIDIA H100 can be NVLinked the H100 NVL is a step-up replacing the 80GB HBM2e memory with 94GB of HBM3 memory per GPU for a total of 188GB of high bandwidth memory between the pair delivered at 7.8 TB/s. The NVIDIA H100 NVL can support the scaling and advancements of common PCIe servers found in data centers.

As A100 powers tools like ChatGPT, the NVIDIA H100 NVL is claimed to be 100 times faster, reducing the processing costs of LLMs by an order of magnitude. The H100 NVL has been designed to be the engine of OpenAI’s GPT-3 and future LLM models and other high-performance computing workloads.

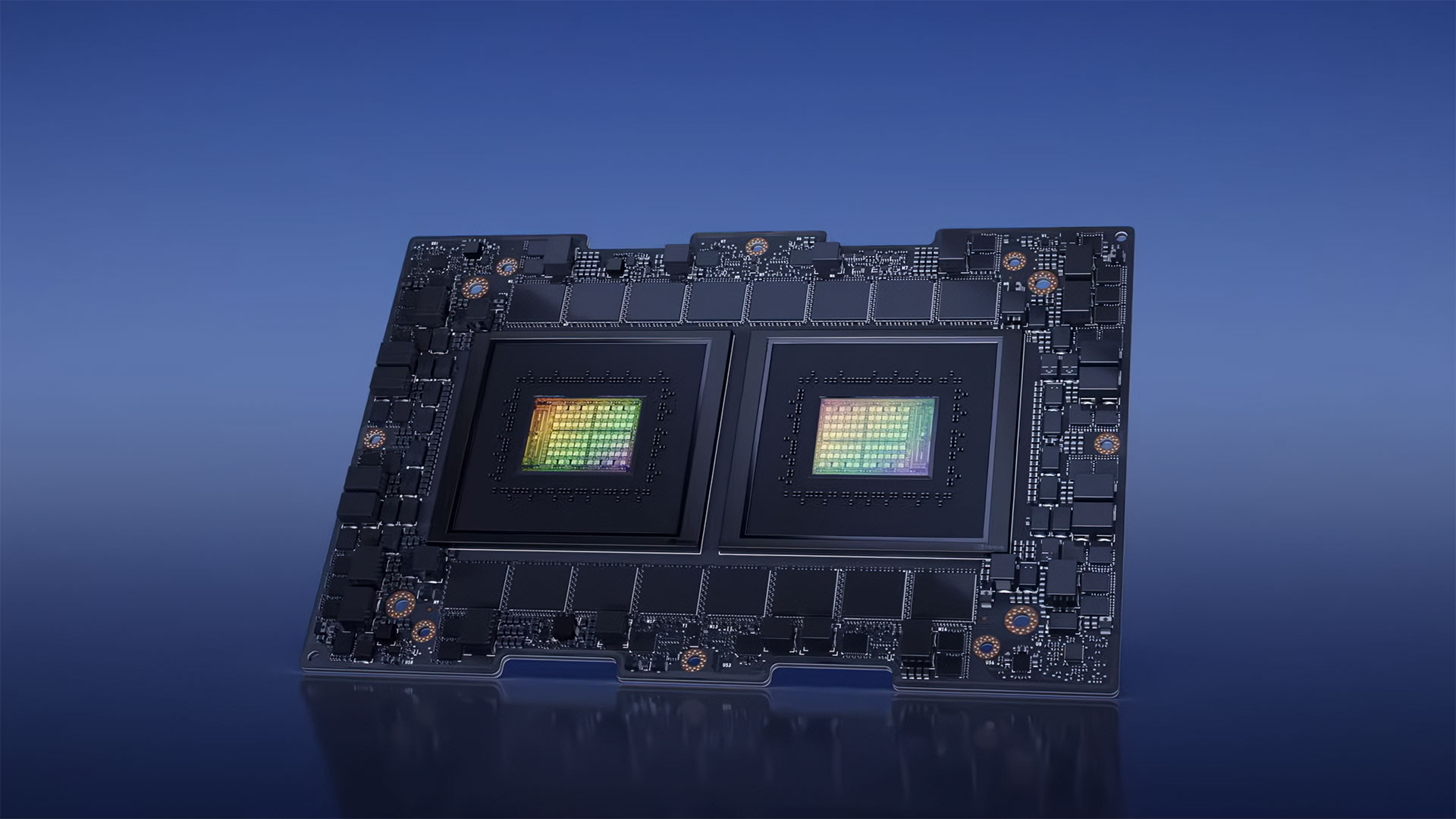

Grace Hopper Superchip

And NVIDIA Grace Hopper is NVIDIA’s new superchip connecting their Grace Arm CPU with their Hopper GPU architecture for the lowest latency between CPU and GPU. Grace Hopper is ideal for processing giant datasets like recommender systems, vector databases, and graph neural network AI models.

Modern CPUs store data in cache or RAM before transferring the GPU for inferencing. While the traditional process is fast, Grace Hopper is even faster with their 900GB C2C high-speed interface 7 times faster than PCIe.

Supercharging Generative AI

NVIDIA not only provides the platform but also the engine for individuals and corporations who want to develop generative AIs. Jensen Huang announced NVIDIA AI Foundations, a suite of cloud services that allow users to create, refine, and use custom language models and generative AI for specific tasks using their own data.

The AI Foundations services include NVIDIA NeMo, which lets users create custom language models, Picasso, which enables customers to build custom models using licensed or proprietary visual content, and BioNeMo, which is designed to assist researchers in the drug discovery industry with designing and screening molecules. All of these services rely on NVIDIA's pre-trained models, which have been developed using trillions of parameters and data, and users will have access to experts to assist them as needed.

NVIDIA Omniverse - The Digital to Physical

Huang continues with the push the Omniverse, their digital collaboration platform for creatives and manufacturing product design. He sentiments NVIDIA Omniverse will provide the ability for companies to feasibility plan production virtually and test efficiency prior to real-life implementation. Omniverse Digital Twins enable factories to test optimization processes between worlds without disrupting the flow of the other.

To showcase the extraordinary capabilities of Omniverse, NVIDIA’s open platform built for 3D design collaboration and digital twin simulation, it shows how Amazon is working to choreograph the movements of Proteus, Amazon’s first fully autonomous warehouse robot, as it moves bins of products from one place to another in the warehouse.

Companies like Lotus and BMW utilize 3D design collaboration and Digital Twins to build, optimize, and plan anything from small stations to entire factories. Mercedes-Benz uses Omniverse to build, optimize and plan assembly lines for new models and enhance self-driving capabilities. Rimac and Lucid Motors use Omniverse to build digital stores from actual design data that faithfully represent their cars.

Learn More about NVIDIA Omniverse Enterprise and how you can get started!

Helping Do the Impossible

NVIDIA has transitioned from its gaming hardware days to being the engine that drives AI models from start to finish. Cloud and software partners, researchers and scientists, prosumers and consumers alike are all impacted by the recent development and mainstream adoption of artificial intelligence, especially with Generative AI. We give our computers and machines the ability to be more creative than before and the world is changing, moving toward the next technological revolution.

The Era of AI is on the horizon and it's up to us to keep up. Without the acceleration in computing and the engines NVIDIA provides, our technology would not be the way it is today. Innovations are made possible not only with the hardware and software but those who harness it.

“Together,” Huang states, “We are helping the world do the impossible.”

Interested in scaling your computing infrastructure?

Want to bring your AI innovations to life?

Exxact is a leading solutions provider for GPU-accelerated AI and HPC.Contact us today for more information!

NVIDIA GTC 2023 Recap: What Mattered

Introduction

At NVIDIA GTC 2023, Jensen Huang emphasized the significance of the slowdown in Moore's Law, which has been the driving force behind computing power, while also acknowledging the rapid advancement of computing. This year has marked a new era in technology with the mainstream adoption of Artificial Intelligence.

NVIDIA has played a pivotal role in this technological revolution by developing and widely adopting AI while providing the necessary hardware and software to develop these models. As an example, ChatGPT relies on NVIDIA's data center accelerators to provide remarkably quick responses to our queries. It started with CUDA, then with DGX, and now with accelerators designed for various AI use cases.

We want to go over some highlights and announcements Jensen addressed at GTC 2023.

NVIDIA Advances Chipmaking and Lithography

NVIDIA announces cuLitho, a new tool to help optimize one of the major steps in the design of modern processors for major chip fabs like TSMC, ASML, and Synopsys. Creating patterns and masks used for the latest lithography processes is highly complex to create one stencil mask for a single CPU die.

With cuLitho a single reticle, which takes upwards of two weeks, can now be processed in eight hours running on 500 DGX systems compared to 40,000 CPU servers, cutting power costs from 35 megawatts (MW) to just 5MW. Accelerated computing contributes to reducing prototype cycle time, increasing throughput, and reducing carbon footprint while preparing for even more complex lithography for more capable silicon.

This not only impacts the very few chip fabs around the world but the entire economy built on technology. Without advancements in the computer chip manufacturing industry, the cost only increases. By reducing the time it takes to make current chips and continuing to make innovations for all silicon faster, more efficient, and increasingly cost-effective.

Grace CPU

The Grace CPU is comprised of 72 Arm cores that are interconnected by a high-speed on-chip coherent fabric, which delivers an impressive 3.2 TB/sec. By connecting two Grace CPUs, the Grace Superchip is created, which features enhanced LPDDR, or low-power RAM, found in smartphones. This configuration boasts a bandwidth of 1TB/s at 1/8th of the power. Grace is incredibly power-efficient, which enables slim form factor air cooling. With two Grace Superchips (4 Grace) in a single 1U air-cooled server, both performance and efficiency can be achieved at the same time.

NVIDIA's latest offering, the Grace CPU, has been specifically designed for high-throughput AI workloads that cannot be processed through parallelized computing on GPUs. This CPU is built for cloud data centers and is highly efficient in terms of TDP.

Even though the Grace CPU prioritizes power efficiency, it is still 1.3 times faster than the average x86 chips and 1.7 times more power efficient. Although Huang did not specify which CPUs, these Grace CPUs are definitely going to pack a punch.

NVIDIA DGX H100

Announced last year, NVIDIA DGX H100 has been unveiled and is in full production. Superseding DGX A100, the DGX H100 is NVIDIA’s new AI engine of the world. With ChatGPT being powered by the DGX-1 built back in 2016, NVIDIA has come a long way in providing accelerators to power the world's most innovative developments.

Jensen reaffirms the capabilities of DGX as an AI supercomputer and the blueprint for developing large and complex models. While previous DGX geared more towards research and development, the advancements in H100, such as an added transformer engine, enable around-the-clock operations and inferencing; one giant GPU to process data, train models, and deploy innovations. Microsoft Azure will be one of the first to leverage full-scale NVIDIA DGX H100 in their cloud data center for professionals to develop and compute on.

And to put these supercomputers in the hands of every corporation, NVIDIA launches NVIDIA DGX Cloud with Oracle Cloud Infrastructure (OCI). Access NVIDIA’s most powerful systems instantly from a browser via NVIDIA AI Enterprise, their most complete suite of AI libraries, enabling end-to-end training and deployment for anyone.

Speeding Deployment with New Platforms

To drive the rapidly emerging generative AI models built by startups and tech companies alike, Huang announced inference platforms for AI video, image generation, LLM deployment, and recommender inference. They combine NVIDIA’s full stack of inference software with the latest NVIDIA Ada, Hopper, and Grace Hopper processors — including the NVIDIA L4 Tensor Core GPU and the NVIDIA H100 NVL GPU, both launched today.

NVIDIA L4

NVIDIA L4 for AI Video can deliver 120x more AI-powered video performance than CPUs, combined with 99% better energy efficiency. Most cloud video is processed on CPUs, a very compute-intensive task for the processors in charge of serial and complex tasks. An 8-GPU L4 server can replace over 100 dual-socket CPU servers for AI video.

These GPU servers are utilized by companies like Snap for AV1 video processing, generative AI, and AR. Cloud services that ingest large amounts of video data can leverage the computing prowess of GPUs.

NVIDIA L40

NVIDIA L40 was previously announced as the engine of NVIDIA Omiverse designed for Image Generation optimized graphics for AI-enabled 2D, video, and 3D image generation. Generative AI text-to-image and text-to-video can be powered to drive creative discovery for Omniverse creators.

AI tools can be used to assist the development of various projects without needing to start at ground zero. Revolutionizing the creative process with prompting to perform changes like adding backgrounds, applying special effects, or removing unwanted imagery with a single click.

NVIDIA H100 NVL

NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale. While NVIDIA H100 can be NVLinked the H100 NVL is a step-up replacing the 80GB HBM2e memory with 94GB of HBM3 memory per GPU for a total of 188GB of high bandwidth memory between the pair delivered at 7.8 TB/s. The NVIDIA H100 NVL can support the scaling and advancements of common PCIe servers found in data centers.

As A100 powers tools like ChatGPT, the NVIDIA H100 NVL is claimed to be 100 times faster, reducing the processing costs of LLMs by an order of magnitude. The H100 NVL has been designed to be the engine of OpenAI’s GPT-3 and future LLM models and other high-performance computing workloads.

Grace Hopper Superchip

And NVIDIA Grace Hopper is NVIDIA’s new superchip connecting their Grace Arm CPU with their Hopper GPU architecture for the lowest latency between CPU and GPU. Grace Hopper is ideal for processing giant datasets like recommender systems, vector databases, and graph neural network AI models.

Modern CPUs store data in cache or RAM before transferring the GPU for inferencing. While the traditional process is fast, Grace Hopper is even faster with their 900GB C2C high-speed interface 7 times faster than PCIe.

Supercharging Generative AI

NVIDIA not only provides the platform but also the engine for individuals and corporations who want to develop generative AIs. Jensen Huang announced NVIDIA AI Foundations, a suite of cloud services that allow users to create, refine, and use custom language models and generative AI for specific tasks using their own data.

The AI Foundations services include NVIDIA NeMo, which lets users create custom language models, Picasso, which enables customers to build custom models using licensed or proprietary visual content, and BioNeMo, which is designed to assist researchers in the drug discovery industry with designing and screening molecules. All of these services rely on NVIDIA's pre-trained models, which have been developed using trillions of parameters and data, and users will have access to experts to assist them as needed.

NVIDIA Omniverse - The Digital to Physical

Huang continues with the push the Omniverse, their digital collaboration platform for creatives and manufacturing product design. He sentiments NVIDIA Omniverse will provide the ability for companies to feasibility plan production virtually and test efficiency prior to real-life implementation. Omniverse Digital Twins enable factories to test optimization processes between worlds without disrupting the flow of the other.

To showcase the extraordinary capabilities of Omniverse, NVIDIA’s open platform built for 3D design collaboration and digital twin simulation, it shows how Amazon is working to choreograph the movements of Proteus, Amazon’s first fully autonomous warehouse robot, as it moves bins of products from one place to another in the warehouse.

Companies like Lotus and BMW utilize 3D design collaboration and Digital Twins to build, optimize, and plan anything from small stations to entire factories. Mercedes-Benz uses Omniverse to build, optimize and plan assembly lines for new models and enhance self-driving capabilities. Rimac and Lucid Motors use Omniverse to build digital stores from actual design data that faithfully represent their cars.

Learn More about NVIDIA Omniverse Enterprise and how you can get started!

Helping Do the Impossible

NVIDIA has transitioned from its gaming hardware days to being the engine that drives AI models from start to finish. Cloud and software partners, researchers and scientists, prosumers and consumers alike are all impacted by the recent development and mainstream adoption of artificial intelligence, especially with Generative AI. We give our computers and machines the ability to be more creative than before and the world is changing, moving toward the next technological revolution.

The Era of AI is on the horizon and it's up to us to keep up. Without the acceleration in computing and the engines NVIDIA provides, our technology would not be the way it is today. Innovations are made possible not only with the hardware and software but those who harness it.

“Together,” Huang states, “We are helping the world do the impossible.”

Interested in scaling your computing infrastructure?

Want to bring your AI innovations to life?

Exxact is a leading solutions provider for GPU-accelerated AI and HPC.Contact us today for more information!

.jpg?format=webp)