As deep learning training systems feed ever larger data sets to GPUs, storage bottlenecks start to appear. GPUs keep getting faster, and data sets keep getting larger and richer. Given the massive amounts of data that deep learning workloads require, the storage behind them has to keep up. The goal is the right balance of storage performance, ease of management, and cost. Below are the storage system attributes that are essential to supporting these workloads.

Availability

Deep learning systems process huge amounts of data in a short time frame, because large data sets are needed to train accurate models. If storage is unavailable, the deep learning software cannot process its workloads. For enterprises adopting deep learning, high-availability storage with data replication may be required.
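To make the case for replication concrete, here is a minimal sketch of the usual availability arithmetic. It assumes that replicas fail independently, which real systems only approximate (shared power, network, or software faults break that assumption), and the function names are illustrative rather than taken from any product.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(single_copy_availability: float, replicas: int) -> float:
    """Probability that at least one of `replicas` copies is reachable.

    Assumes every copy has the same availability and that failures
    are independent of each other.
    """
    if not 0.0 <= single_copy_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    # All copies must be down at once for the data to be unreachable.
    return 1.0 - (1.0 - single_copy_availability) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours per year during which the data is unreachable."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for copies in (1, 2, 3):
        a = combined_availability(0.99, copies)
        print(f"{copies} copies: {a:.6f} available, "
              f"{downtime_hours_per_year(a):.3f} h/year down")
```

Under these assumptions, a second copy of a 99% available system cuts the expected unavailability from 1% to 0.01%.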

Deep Learning Storage Performance

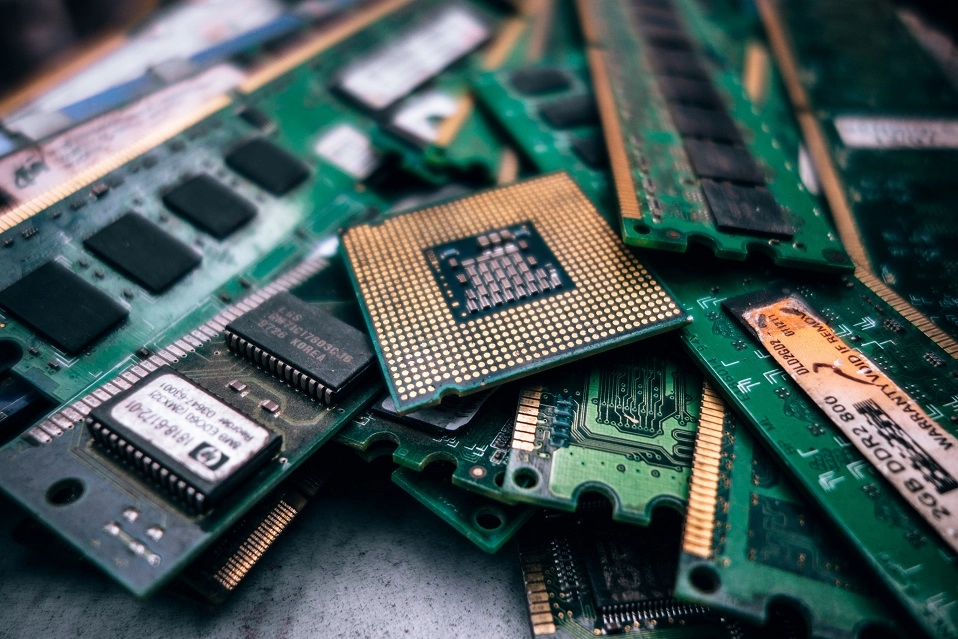

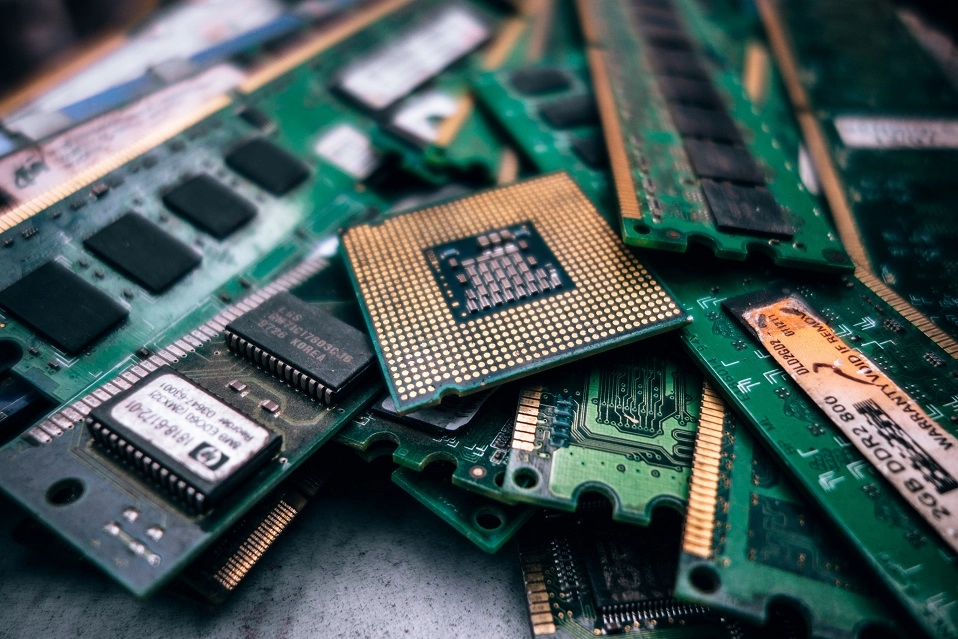

Deep learning workloads cover a broad array of data sources, which present different I/O load patterns depending on the model, parameters, and variables. Limiting potential bottlenecks when reading data from storage is essential to maximizing throughput. In environments that use GPUs, a steady flow of data from the storage subsystem into the GPU jobs is necessary for timely results. This holds for batch training workloads as well as for workloads that feed real-time decision making. A high-performance deep learning system requires equally high-performance storage: the ability to compute over large quantities of data is useless if the storage cannot deliver that data to the computing elements. To alleviate this challenge, deep learning practitioners look to all-flash storage, which is currently among the fastest storage technologies available at a reasonable price point.
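As a rough way to reason about whether storage can keep GPUs busy, the sketch below estimates the read throughput a training job needs and compares it with a simple sequential-read measurement. The GPU count, samples per second, and sample size are made-up illustrative numbers, the function names are hypothetical, and the measurement can be inflated by the operating system's page cache, so treat the result as an upper bound rather than a benchmark.

```python
import time


def required_read_throughput(num_gpus: int,
                             samples_per_sec_per_gpu: float,
                             avg_sample_bytes: int) -> float:
    """Bytes per second storage must deliver so that no GPU waits for data."""
    return num_gpus * samples_per_sec_per_gpu * avg_sample_bytes


def measure_read_throughput(path: str, block_size: int = 1024 * 1024) -> float:
    """Sequentially read `path` once and return the observed bytes per second."""
    total = 0
    start = time.perf_counter()
    # buffering=0 bypasses Python's own buffer; the OS cache may still apply.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return total / elapsed


if __name__ == "__main__":
    # Illustrative assumption: 8 GPUs, 1,000 samples/s each, 150 KB per sample.
    needed = required_read_throughput(8, 1000, 150_000)
    print(f"Required: {needed / 1e9:.2f} GB/s")
```

With the illustrative numbers above, the job needs about 1.2 GB/s of sustained reads. If `measure_read_throughput` on a representative data file reports less than that, storage rather than compute is likely to be the bottleneck.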

Reliability

If the data is corrupt, deep learning systems cannot produce trustworthy results. The reliability of the data depends on capabilities built into the software that runs the storage array, such as error checking. Another source of reliability is how dependably the storage hardware itself operates. Storage arrays that use flash memory are generally well suited to deep learning systems because solid-state drives have no moving parts to fail mechanically, although flash cells do have finite write endurance.
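Error checking at the storage layer can be complemented by a check at the application layer. The sketch below is one simple way to do that, not a description of how any particular storage array works: it assumes a hypothetical manifest that maps each file path to a SHA-256 digest recorded when the data set was written, and it reports the files whose current contents no longer match.

```python
import hashlib
from typing import Dict, List


def file_sha256(path: str, block_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, read in fixed-size blocks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(block_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_corrupt_files(manifest: Dict[str, str]) -> List[str]:
    """Return the paths whose current digest differs from the recorded one.

    `manifest` maps file path -> expected hex digest (a hypothetical format).
    A missing file raises FileNotFoundError instead of being reported here.
    """
    return [path for path, expected in manifest.items()
            if file_sha256(path) != expected]
```

Running such a check before a long training job is cheap compared with discovering corrupted samples after the fact.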

Ease of Use / Automation

The volume of storage that organizations need in their IT infrastructure continues to increase, even as budgets for hiring storage administrators have dropped. Storage therefore has to be easy for an IT specialist to install, deploy, and manage. With fewer people managing the infrastructure, automation also matters. A software control layer above the hardware can monitor and carry out more tasks on its own, which frees the storage manager for other duties. Automating storage features such as replication, tiering, and backups greatly reduces the management burden that storage places on the deep learning system. The more automated the system, the lower the cost of running it.

In addition to an extensive solution lineup for deep learning, Exxact offers numerous Storage Solutions for any organization's storage infrastructure needs, including storage meant for deep learning applications. Here are a few of our featured storage solutions:

- Tensor TS3-264514-NAS 3U All-Flash NAS Storage Server
  - Supports up to 300 TB of all-flash storage capacity
  - Features an OpenZFS Storage Controller that has been developed and optimized to meet the demand for more powerful, reliable, and flexible business storage
  - Combines the flexibility of unified storage, the performance and efficiency of solid-state flash drives, the capacity of hard disks, and the familiarity and simplified management of the FreeNAS user interface
  - Achieves high availability with redundant storage controllers
  - The OpenZFS file system supports snapshots, thin provisioning, self-healing, online capacity expansion, and stripe, mirror, RAID-Z, RAID-Z2, and RAID-Z3 layouts

- Tensor TS3-264513-NAS 3U Storage Server
  - Supports up to 4.8 PB of storage capacity
  - Supports popular file-based protocols such as CIFS, NFS, AFP, FTP, and WebDAV
  - Provides remote administration via HTTP/HTTPS and a REST API
  - Supports 10GbE and 8Gb Fibre Channel interfaces for optimizing bandwidth

What are your thoughts on storage for deep learning? Let us know on social media!

Facebook: https://www.facebook.com/exxactcorp/

Twitter: https://twitter.com/Exxactcorp

Other Deep Learning Blogs

Why High Performance Storage is Essential to Deep Learning Systems
