.jpg?format=webp)

Background on GPU Memory

When it comes to graphics processing units (GPUs), for time critical workloads, the type of memory has an impact on performance for your enterprise workloads. GPU memory is often referred to as VRAM which stands for Video Random Access Memory. GPU memory is similar to CPU memory and traditional RAM where data is stored locally for quick access and execution for future tasks that might leverage the same data. Larger VRAM capacity means more data can be stored on the GPU memory for fast short term memory access and less need to constantly access physical long-term memory from your HDD or SSD.

There are two prominent types of memory for GPUs: GDDR (found in consumer and professional GPUs) and HBM or high bandwidth memory (found in data center GPUs and specialized hardware). To help you choose the right GPU for your deployment, we define GDDR and HBM for GPU performance and offer our take on what makes sense for your workload.

What is GDDR Memory?

GDDR stands for Graphics Double Data Rate and is a type of memory that is specifically designed for use in graphics cards. GDDR memory is similar to DDR memory, which is used in most computers, but it has been optimized for use in graphics cards. GDDR memory is typically faster than DDR memory and has a higher bandwidth, which means that it can transfer more data at once.

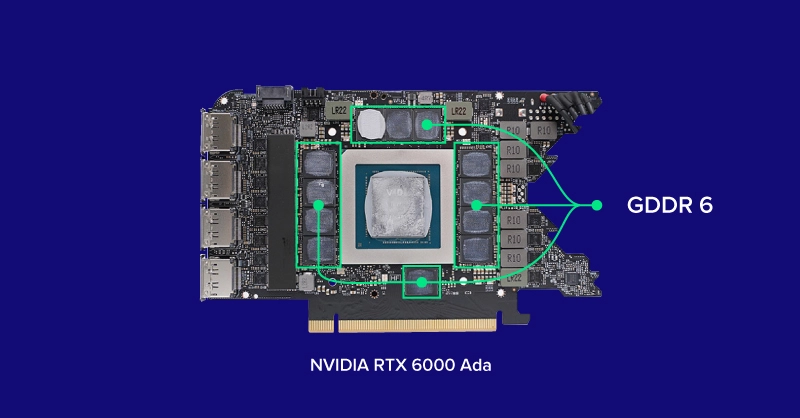

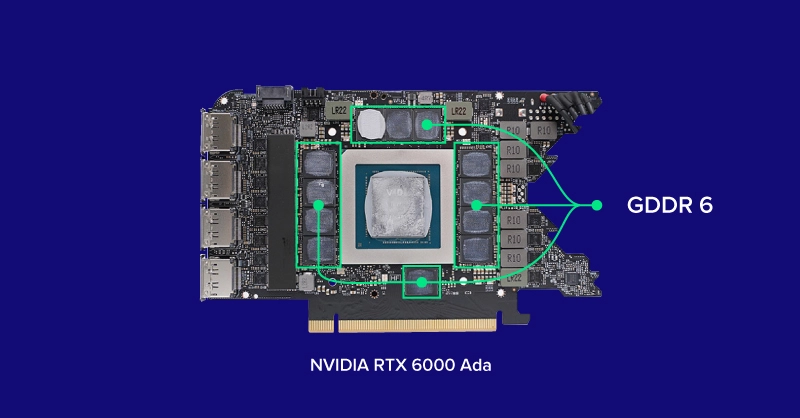

GDDR6 is the most recent memory standard for GPUs with a peak per-pin data rate of 16Gb/s and a max memory bus width of 384-bits. Found in the majority of GPUs including the NVIDIA RTX 6000 Ada and the AMD Radeon PRO W7900, GDDR6 is the memory standard for modern GPUs in 2024. The fastest mainstream GPUs equipped with GDDR6 is the RTX 6000 Ada with a peak memory bandwidth speed of 960GB/s, a near 1 TB of data per second capability.

GDDR memory are individual chips that are soldered to the PCB surrounding the GPU die. Some GPU die SKUs can have different memory capacities by either soldering more VRAM chips or using larger capacity chips. NVIDIA RTX 4090’s 24GB GDDR6X memory and RTX 6000 Ada’s 48GB GDDR6 ECC both use the AD102 GPU die but have different use cases based on their memory configurations. The RTX 6000 Ada doubles its memory by adding more VRAM chips on the back of the PCB for more memory size dependent productivity workloads such as CAD, 3D Design, and AI training, whereas the RTX 4090 uses faster GDDR6X chips for memory speed dependent productivity workloads and competitive gaming.

GDDR Memory Use Case

GDDR memory equipped GPUs are generally more accessible and less expensive since they are the mainstream GPU memory type. Since the complexity of GDDR is soldered directly on the PCB instead of the GPU die, various GPU dies can have different VRAM capacities. Consider the NVIDIA RTX 4060 Ti where there is an 8GB and a 16GB variant using the same chip.

As for workloads, GDDR memory is suitable for 90% of applications with most mainstream applications unable to max out or utilize the entire memory bandwidth. While this can limit the lower end of GPUs like the RTX 4060 Ti, top end models like the RTX 6000 Ada shouldn’t be bound by memory bandwidth in most use cases.

To reiterate, the majority of applications are optimized to use this common type of memory and should have no problem on a GDDR equipped GPU. Bandwidth speeds on top end RTX 6000 Ada, NVIDIA L40S, RTX 4090 and more can work wonders, and an increase in bandwidth can only result in minor speed improvements. But when those minor speed improvements need to be capitalized on, that's where high bandwidth memory shines.

What is HBM?

HBM stands for High Bandwidth Memory. HBM is designed to provide a larger memory bus width than GDDR memory, resulting in larger data packages transferred at once. A single HBM memory chip is slower than a single GDDR6 chip but the wider bus width, smaller capacity, and stackability/scalability of HBM chips makes it more powerful, efficient, and faster than GDDR memory. The latest adopted HBM standard is HBM3, found in NVIDIA H100 80GB SXM5 Tensor Core GPU with a 5120-bit bus and 3.35TB/s of memory bandwidth.

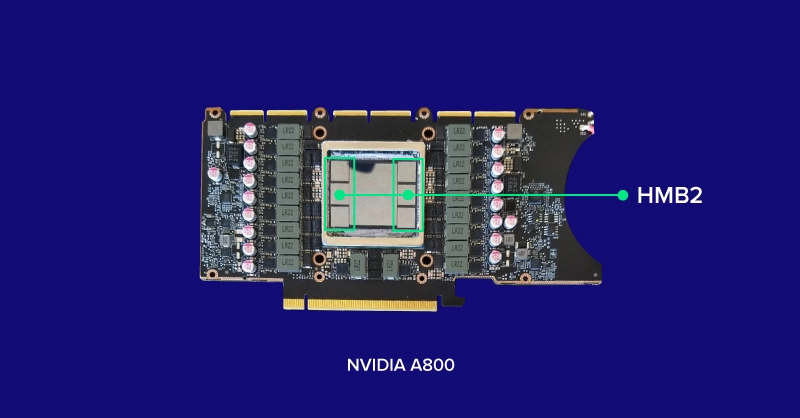

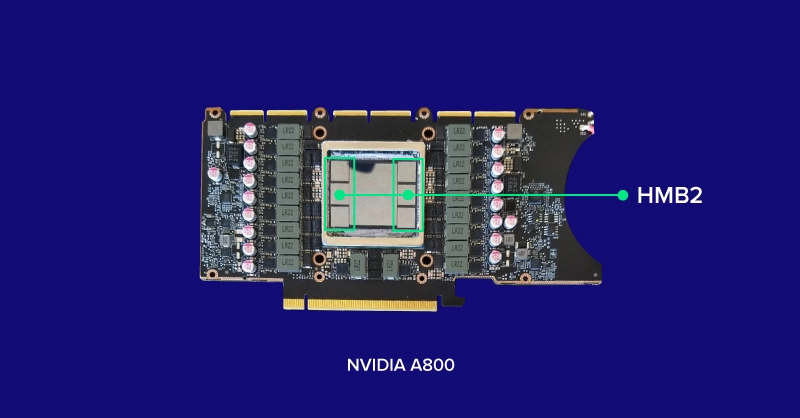

HBM sits inside the GPU die and is stacked – for example NVIDIA A800 40GB Active GPU has 5 active stacks of 8 HBM DRAM dies (8‑Hi) each with two 512‑bit channels per die for a total width of 5120-bits in total (5 active stacks * 2 channels * 512 bits). Because HBM is built into the GPU die, there is less room for error and modularity. For a GPU die SKU, HBM is not configurable like how GDDR equipped GPUs are, where each memory chip is soldered onto the PCB (but vendors can disable HBM stacks for stability and power target requirements).

HBM Use Case

HBM equipped GPU are generally less accessible, more niche, expensive, and only found in flagship accelerators like the H100, A800 40GB Active, and other data center GPUs. Since the memory is placed directly on the GPU die, GPUs won’t have different capacities (but can be disabled).

HBM is often only used in HPC and highly niche workloads that require the most bandwidth where the speed in accessing data is imperative. This can include simulation type workloads, AI training, analytics, edge computing and inferencing, and more. HBM is highly efficient and offer significantly larger bus-widths to parallelize the per pin rate. But most productivity applications won't be able to maximize the performance.

Another reason why most data center GPUs utilize HBM is the interconnectivity between the compute nodes. NVIDIA H100's NVLink technology requires fast GPU memory bandwidth speeds and it's the reason why HBM is prevalent in the NVIDIA DGX system. Stored and solved data need to be communicated from GPU to GPU quickly and effectively. Therefore, a fast, low latency, high bandwidth memory standard is needed to reduce the communication bottleneck.

GDDR6 vs HBM2

Most applications won’t ever need HBM. The fastest RTX Ada GPU equipped with GDDR6 can accelerate most workload. The NVIDIA RTX 6000 Ada is a highly capable flagship GPU that is perfect for small to medium scale AI training, rendering, analytics, simulation, and data intensive workloads with a high memory bandwidth of 960GB/s. Slot a server or workstation with a multi-GPU setup and the work can be parallelized and split for even more performance (if applicable in application/workload).

However, HBM equipped GPUs like the NVIDIA A800 40GB Active and NVIDIA H100 Tensor Core GPU can significantly boost productivity (though at a higher cost) for enterprise deployments that value the time saved. More performance and less waiting can enable faster breakthroughs. Simulation workloads for CFD, mechanical deformation, AI training, and real-time analytics where access to memory is imperative can utilize the NVIDIA A800 40GB Active’s fast access to HBM.

Exxact stocks the majority of high-performance GPUs which you can select when configurating a system with us. Here's a list of the top tier NVIDIA GPUs that you can put in an Exxact workstation and/or server when configuring a system and compare their bus-width and bandwidth speed:

| GPU | Memory Type | Memory Bus Width | Memory Bandwidth |

|---|---|---|---|

| RTX 6000 Ada | GDDR6 | 384-bits | 960 GB/s |

| GeForce RTX 4090 | GDDR6X | 384-bits | 1008 GB/s (1 TB/s) |

| NVIDIA L40S | GDDR6 | 384-bits | 864 GB/s |

| NVIDIA A800 40GB Active | HBM2 | 5120-bits | 1555 GB/s (1.5 TB/s) |

| NVIDIA H100 80GB PCIe | HBM2e | 5120-bits | 2039 GB/s (2 TB/s) |

| NVIDIA H100 80G SXM5 | HBM3 | 5120-bits | 3350 GB/s (3.35 TB/s) |

Conclusion

High level deployments, like ChatGPT, leverage the use of a cluster of H100s working in tandem to perform real time inferencing and generative AI capabilities to millions of users concurrently ingesting prompts and delivering real time outputs. Without the blisteringly fast HBM that enables GPU to GPU interconnectivity, a minimal slow down can build up and render an entire enterprise deployment near unusable. Consider the launch of ChatGPT when their computing infrastructure just couldn't hold the large influx of users even with the use of NVIDIA DGX systems. And once they were able to scale, it has been stable ever since. In retrospect, ChatGPT likely would not even be possible, without the use of these high bandwidth memory interconnected GPUs.

Both GDDR memory and HBM have their advantages and disadvantages. GDDR equipped GPUs are more accessible and less expensive (compared to HBM equipped GPUs) and is a good choice for applications that require high bandwidth but don’t require the absolute highest performance. HBM equipped GPUs are more niche and expensive but the performance it provides can be paramount for certain applications that require it. When choosing between these two types of memory, it’s important to consider the use case and cost, evaluating whether the time saved will make an impact in your workload.

We're Here to Deliver the Tools to Power Your Research

With access to the highest performing hardware, at Exxact, we can offer the platform optimized for your deployment, budget, and desired performance so you can make an impact with your research!

Talk to an Engineer Today.jpg?format=webp)

GDDR6 vs HBM - Defining GPU Memory Types

Background on GPU Memory

When it comes to graphics processing units (GPUs), for time critical workloads, the type of memory has an impact on performance for your enterprise workloads. GPU memory is often referred to as VRAM which stands for Video Random Access Memory. GPU memory is similar to CPU memory and traditional RAM where data is stored locally for quick access and execution for future tasks that might leverage the same data. Larger VRAM capacity means more data can be stored on the GPU memory for fast short term memory access and less need to constantly access physical long-term memory from your HDD or SSD.

There are two prominent types of memory for GPUs: GDDR (found in consumer and professional GPUs) and HBM or high bandwidth memory (found in data center GPUs and specialized hardware). To help you choose the right GPU for your deployment, we define GDDR and HBM for GPU performance and offer our take on what makes sense for your workload.

What is GDDR Memory?

GDDR stands for Graphics Double Data Rate and is a type of memory that is specifically designed for use in graphics cards. GDDR memory is similar to DDR memory, which is used in most computers, but it has been optimized for use in graphics cards. GDDR memory is typically faster than DDR memory and has a higher bandwidth, which means that it can transfer more data at once.

GDDR6 is the most recent memory standard for GPUs with a peak per-pin data rate of 16Gb/s and a max memory bus width of 384-bits. Found in the majority of GPUs including the NVIDIA RTX 6000 Ada and the AMD Radeon PRO W7900, GDDR6 is the memory standard for modern GPUs in 2024. The fastest mainstream GPUs equipped with GDDR6 is the RTX 6000 Ada with a peak memory bandwidth speed of 960GB/s, a near 1 TB of data per second capability.

GDDR memory are individual chips that are soldered to the PCB surrounding the GPU die. Some GPU die SKUs can have different memory capacities by either soldering more VRAM chips or using larger capacity chips. NVIDIA RTX 4090’s 24GB GDDR6X memory and RTX 6000 Ada’s 48GB GDDR6 ECC both use the AD102 GPU die but have different use cases based on their memory configurations. The RTX 6000 Ada doubles its memory by adding more VRAM chips on the back of the PCB for more memory size dependent productivity workloads such as CAD, 3D Design, and AI training, whereas the RTX 4090 uses faster GDDR6X chips for memory speed dependent productivity workloads and competitive gaming.

GDDR Memory Use Case

GDDR memory equipped GPUs are generally more accessible and less expensive since they are the mainstream GPU memory type. Since the complexity of GDDR is soldered directly on the PCB instead of the GPU die, various GPU dies can have different VRAM capacities. Consider the NVIDIA RTX 4060 Ti where there is an 8GB and a 16GB variant using the same chip.

As for workloads, GDDR memory is suitable for 90% of applications with most mainstream applications unable to max out or utilize the entire memory bandwidth. While this can limit the lower end of GPUs like the RTX 4060 Ti, top end models like the RTX 6000 Ada shouldn’t be bound by memory bandwidth in most use cases.

To reiterate, the majority of applications are optimized to use this common type of memory and should have no problem on a GDDR equipped GPU. Bandwidth speeds on top end RTX 6000 Ada, NVIDIA L40S, RTX 4090 and more can work wonders, and an increase in bandwidth can only result in minor speed improvements. But when those minor speed improvements need to be capitalized on, that's where high bandwidth memory shines.

What is HBM?

HBM stands for High Bandwidth Memory. HBM is designed to provide a larger memory bus width than GDDR memory, resulting in larger data packages transferred at once. A single HBM memory chip is slower than a single GDDR6 chip but the wider bus width, smaller capacity, and stackability/scalability of HBM chips makes it more powerful, efficient, and faster than GDDR memory. The latest adopted HBM standard is HBM3, found in NVIDIA H100 80GB SXM5 Tensor Core GPU with a 5120-bit bus and 3.35TB/s of memory bandwidth.

HBM sits inside the GPU die and is stacked – for example NVIDIA A800 40GB Active GPU has 5 active stacks of 8 HBM DRAM dies (8‑Hi) each with two 512‑bit channels per die for a total width of 5120-bits in total (5 active stacks * 2 channels * 512 bits). Because HBM is built into the GPU die, there is less room for error and modularity. For a GPU die SKU, HBM is not configurable like how GDDR equipped GPUs are, where each memory chip is soldered onto the PCB (but vendors can disable HBM stacks for stability and power target requirements).

HBM Use Case

HBM equipped GPU are generally less accessible, more niche, expensive, and only found in flagship accelerators like the H100, A800 40GB Active, and other data center GPUs. Since the memory is placed directly on the GPU die, GPUs won’t have different capacities (but can be disabled).

HBM is often only used in HPC and highly niche workloads that require the most bandwidth where the speed in accessing data is imperative. This can include simulation type workloads, AI training, analytics, edge computing and inferencing, and more. HBM is highly efficient and offer significantly larger bus-widths to parallelize the per pin rate. But most productivity applications won't be able to maximize the performance.

Another reason why most data center GPUs utilize HBM is the interconnectivity between the compute nodes. NVIDIA H100's NVLink technology requires fast GPU memory bandwidth speeds and it's the reason why HBM is prevalent in the NVIDIA DGX system. Stored and solved data need to be communicated from GPU to GPU quickly and effectively. Therefore, a fast, low latency, high bandwidth memory standard is needed to reduce the communication bottleneck.

GDDR6 vs HBM2

Most applications won’t ever need HBM. The fastest RTX Ada GPU equipped with GDDR6 can accelerate most workload. The NVIDIA RTX 6000 Ada is a highly capable flagship GPU that is perfect for small to medium scale AI training, rendering, analytics, simulation, and data intensive workloads with a high memory bandwidth of 960GB/s. Slot a server or workstation with a multi-GPU setup and the work can be parallelized and split for even more performance (if applicable in application/workload).

However, HBM equipped GPUs like the NVIDIA A800 40GB Active and NVIDIA H100 Tensor Core GPU can significantly boost productivity (though at a higher cost) for enterprise deployments that value the time saved. More performance and less waiting can enable faster breakthroughs. Simulation workloads for CFD, mechanical deformation, AI training, and real-time analytics where access to memory is imperative can utilize the NVIDIA A800 40GB Active’s fast access to HBM.

Exxact stocks the majority of high-performance GPUs which you can select when configurating a system with us. Here's a list of the top tier NVIDIA GPUs that you can put in an Exxact workstation and/or server when configuring a system and compare their bus-width and bandwidth speed:

| GPU | Memory Type | Memory Bus Width | Memory Bandwidth |

|---|---|---|---|

| RTX 6000 Ada | GDDR6 | 384-bits | 960 GB/s |

| GeForce RTX 4090 | GDDR6X | 384-bits | 1008 GB/s (1 TB/s) |

| NVIDIA L40S | GDDR6 | 384-bits | 864 GB/s |

| NVIDIA A800 40GB Active | HBM2 | 5120-bits | 1555 GB/s (1.5 TB/s) |

| NVIDIA H100 80GB PCIe | HBM2e | 5120-bits | 2039 GB/s (2 TB/s) |

| NVIDIA H100 80G SXM5 | HBM3 | 5120-bits | 3350 GB/s (3.35 TB/s) |

Conclusion

High level deployments, like ChatGPT, leverage the use of a cluster of H100s working in tandem to perform real time inferencing and generative AI capabilities to millions of users concurrently ingesting prompts and delivering real time outputs. Without the blisteringly fast HBM that enables GPU to GPU interconnectivity, a minimal slow down can build up and render an entire enterprise deployment near unusable. Consider the launch of ChatGPT when their computing infrastructure just couldn't hold the large influx of users even with the use of NVIDIA DGX systems. And once they were able to scale, it has been stable ever since. In retrospect, ChatGPT likely would not even be possible, without the use of these high bandwidth memory interconnected GPUs.

Both GDDR memory and HBM have their advantages and disadvantages. GDDR equipped GPUs are more accessible and less expensive (compared to HBM equipped GPUs) and is a good choice for applications that require high bandwidth but don’t require the absolute highest performance. HBM equipped GPUs are more niche and expensive but the performance it provides can be paramount for certain applications that require it. When choosing between these two types of memory, it’s important to consider the use case and cost, evaluating whether the time saved will make an impact in your workload.

We're Here to Deliver the Tools to Power Your Research

With access to the highest performing hardware, at Exxact, we can offer the platform optimized for your deployment, budget, and desired performance so you can make an impact with your research!

Talk to an Engineer Today