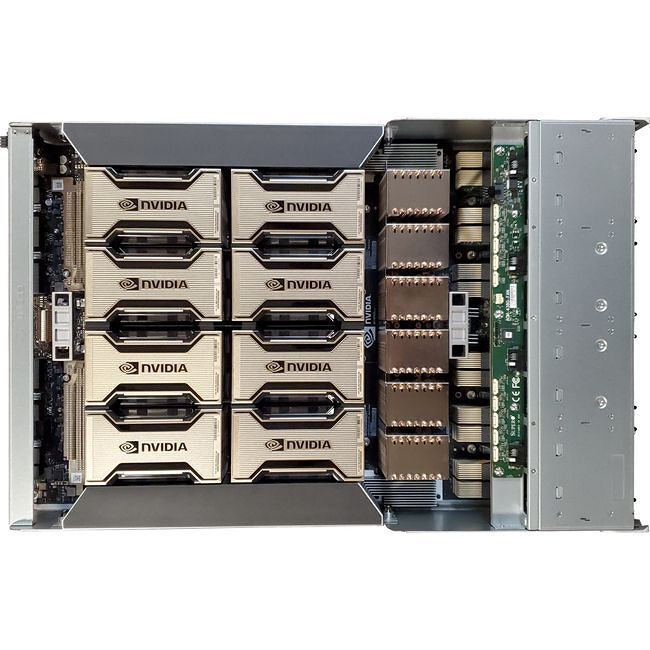

Exxact TensorEX 4U HGX A100 Server - 2x 3rd Gen Intel Xeon Scalable processor - TS4-168747704

The TensorEX TS4-168747704 is a 4U rack-mountable HGX A100 server supporting 2x 3rd Gen Intel Xeon Scalable family processors, 32x DDR4 memory slots, and 8x NVIDIA A100 Ampere GPUs (SXM4), with up to 600 GB/s NVLink interconnect.

Introducing 3rd Gen Intel® Xeon® Scalable Processors

The 3rd Gen Intel® Xeon® Scalable processors offer a balanced architecture with built-in AI acceleration and advanced security capabilities, letting you place your workloads more securely where they perform best, from edge to cloud.

The 3rd Gen Intel Xeon Scalable processor benefits from decades of innovation for the most common workload requirements, supported by close partnerships and deep integrations with the world’s software leaders. 3rd Gen Intel Xeon Scalable processors are optimized for many workload types and performance levels, all with the consistent, compatible, Intel architecture you know and trust.

Industry-Leading Performance

Core-for-core, 3rd Gen Intel® Xeon® Scalable processors offer industry-leading performance on popular databases, HPC workloads, virtualization, and AI.

The 3rd Gen Intel® Xeon® Scalable processors deliver 1.5X more performance than other CPUs across 20 popular machine and deep learning workloads.

3rd Gen Intel® Xeon® Scalable processors deliver, on average, 62% more performance on a range of broadly deployed network and 5G workloads over the prior generation, offering users large performance increases while maintaining the convenience and compatibility of their architecture.

For key AI workloads, 3rd Gen Intel® Xeon® Scalable processors deliver up to a 74% increase in AI performance on the deep learning topology BERT while maintaining full compatibility.

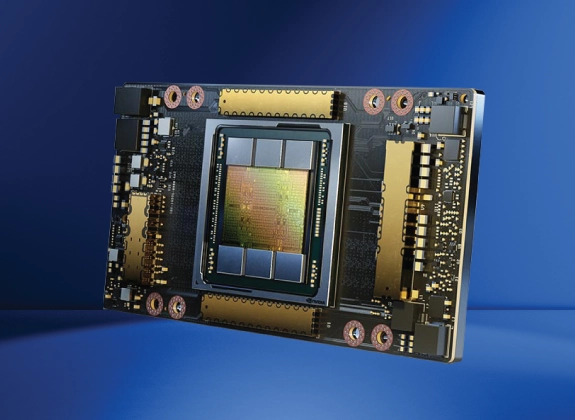

NVIDIA A100 Tensor Core GPU

Blistering Double Precision Accelerator for AI & HPC

NVIDIA A100 introduces double-precision Tensor Cores, providing the most significant milestone since the introduction of double-precision computing in GPUs for HPC. This enables researchers to reduce a 10-hour, double-precision simulation running on NVIDIA V100 Tensor Core GPUs to just four hours on A100.

- Accelerates and enables the most serious HPC and data center workloads

- With 80 GB of High Bandwidth Memory (HBM2e), A100 never skips a beat

A100 SXM4 GPU Options

| Model | Standard Memory | Memory Bandwidth (GB/s) | CUDA Cores | Tensor Cores | Single Precision (TFLOPS) | Double Precision (TFLOPS) | Power (W) |
|---|---|---|---|---|---|---|---|
| A100 80 GB SXM4 | 80 GB HBM2e | 2039 | 6912 | 432 | 19.5 | 9.7 | 400 |
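As a rough sizing aid, the per-GPU figures in the table can be multiplied out for the 8-GPU configuration this server supports. The sketch below is illustrative only: the names `GpuSpec`, `A100_80GB_SXM4`, `GPU_COUNT`, and `aggregate` are hypothetical, and the results are simple sums of per-GPU peak values from the table, not measured system performance. The power total covers the GPUs alone and excludes CPUs, memory, and cooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Per-GPU peak figures, copied from the A100 SXM4 table above."""
    memory_gb: int
    bandwidth_gbs: int
    fp32_tflops: float
    fp64_tflops: float
    power_w: int


# Values taken from the table row for the A100 80 GB SXM4.
A100_80GB_SXM4 = GpuSpec(
    memory_gb=80,
    bandwidth_gbs=2039,
    fp32_tflops=19.5,
    fp64_tflops=9.7,
    power_w=400,
)

# The server description lists 8x A100 GPUs.
GPU_COUNT = 8


def aggregate(spec: GpuSpec, count: int) -> dict:
    """Multiply per-GPU peaks by the GPU count (theoretical totals only)."""
    return {
        "memory_gb": spec.memory_gb * count,
        "bandwidth_gbs": spec.bandwidth_gbs * count,
        "fp32_tflops": round(spec.fp32_tflops * count, 1),
        "fp64_tflops": round(spec.fp64_tflops * count, 1),
        "gpu_power_w": spec.power_w * count,
    }


if __name__ == "__main__":
    for name, value in aggregate(A100_80GB_SXM4, GPU_COUNT).items():
        print(f"{name}: {value}")
```

Real workloads rarely reach these theoretical totals, so treat them as upper bounds when planning capacity and power budgets.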