Larger & More Performant Memory

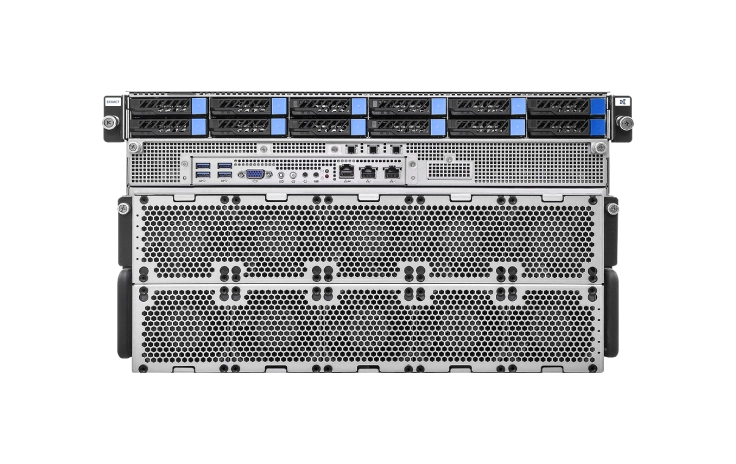

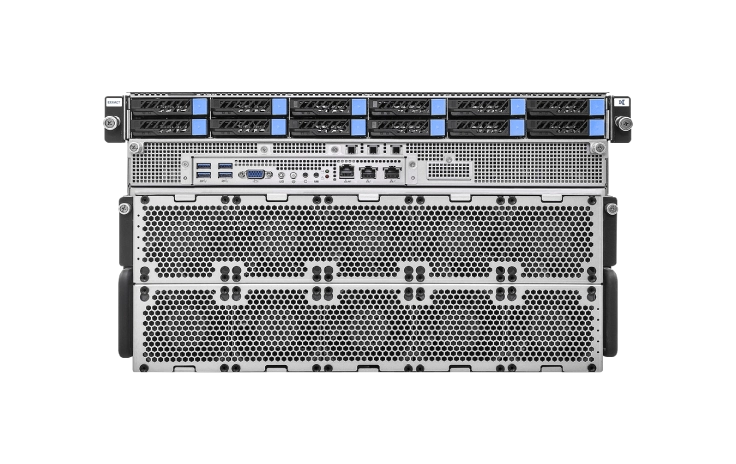

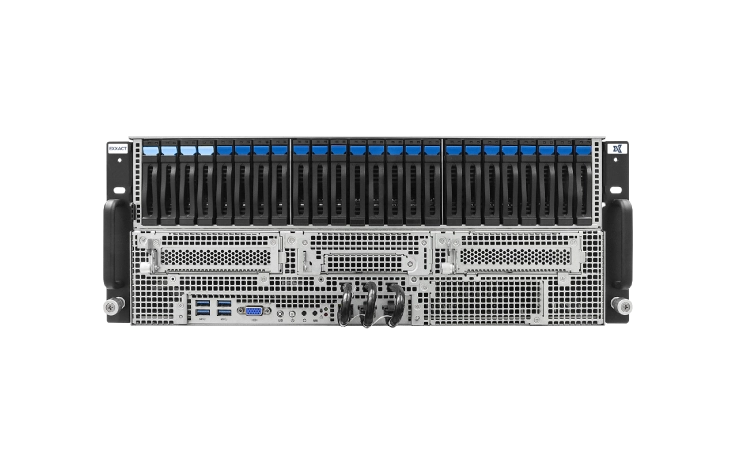

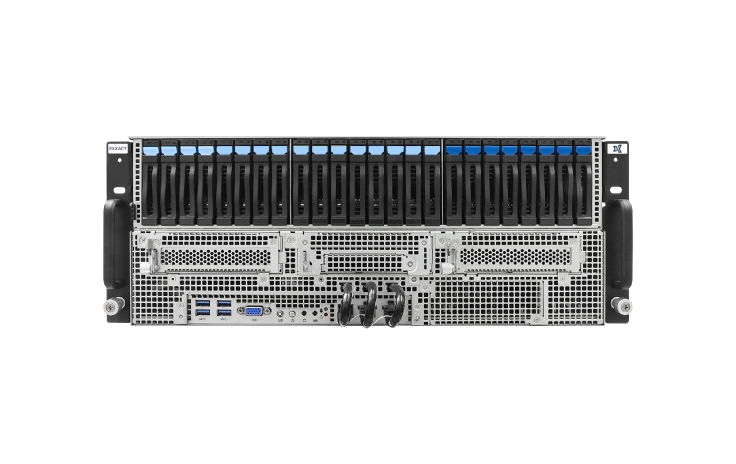

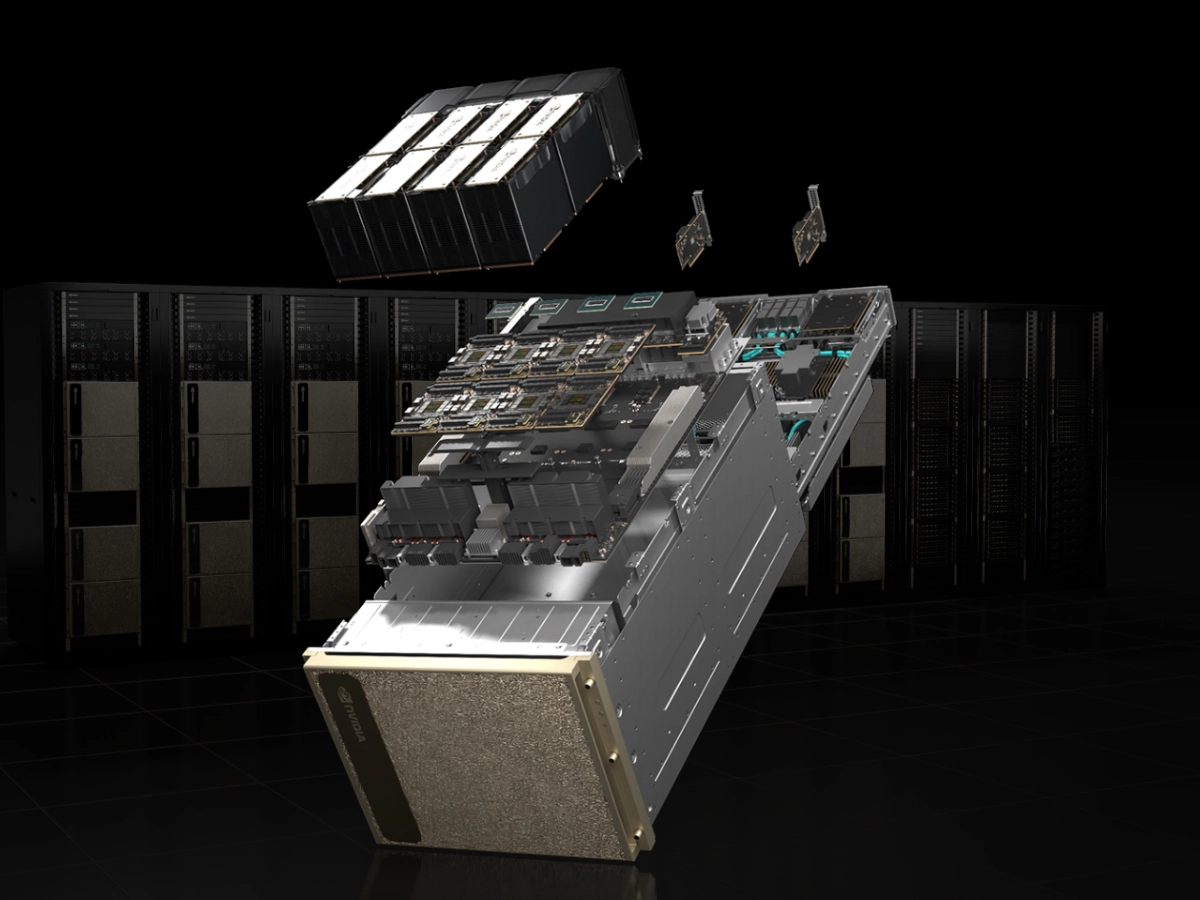

NVIDIA DGX H200

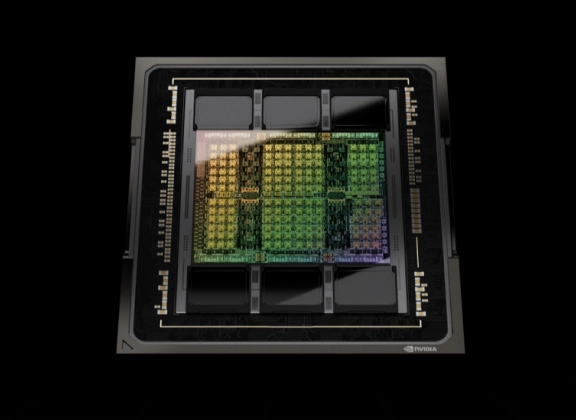

The NVIDIA DGX H200 features everything we love about the DGX H100, now with over 1.1TB of upgraded HBM3e memory running at 4.8TB/s of bandwidth per GPU. The DGX H200 brings out-of-this-world performance and exceptional scalability to any data center. Speed up AI and HPC workloads, from large-scale analytics to training and inference on huge LLMs.

Inquire about EDU discounts.