True Multi-Tenancy

WEKA uses a containerized microservice architecture that fully isolates tenants through dedicated container sets. This eliminates noisy neighbors and strengthens security boundaries at the container level.

Horizontal Scaling

The containerized architecture lets each service scale independently based on workload demands. WEKA NeuralMesh rebalances resources as your storage cluster grows, all without disrupting operations.

Operational Flexibility

Because each storage microservice runs in its own container, you can deploy updates, perform maintenance, and troubleshoot issues on individual services while maintaining quality of service, resulting in maximum uptime across the cluster.

WEKA NeuralMesh Data Platform

The Core component intelligently distributes data and metadata across NeuralMesh, a network of containerized microservices, and adds intelligent load balancing, built-in data protection, and automatic self-healing to create a highly available, fault-tolerant data platform.

Accelerate gives NeuralMesh microsecond latency and ultra-high throughput by fusing memory and flash into a unified pool. It intelligently distributes metadata, eliminates data duplication, and bypasses kernel overhead, delivering blistering performance and maximum GPU efficiency at any scale.

WEKA reinvents storage with a rebuilt distributed parallel file system that offers local snapshotting, automated tiering, backup redundancy, and more. The result is higher utilization, lower complexity, and more efficient data pipelines.

The Observe component provides real-time manageability and observability of your NeuralMesh storage environment. Optimize performance and prevent downtime using telemetry, logs, metrics, and change tracking, along with latency-aware rebalancing, anomaly detection, and AI-driven insights.

The Enterprise Services component provides mission-critical security, data protection, and isolation for NeuralMesh. Get high performance across tenants with NeuralMesh’s role-based access control, zero-copy efficiency, built-in erasure coding, and zero tuning. Every workload gets exactly what it needs, securely and efficiently.

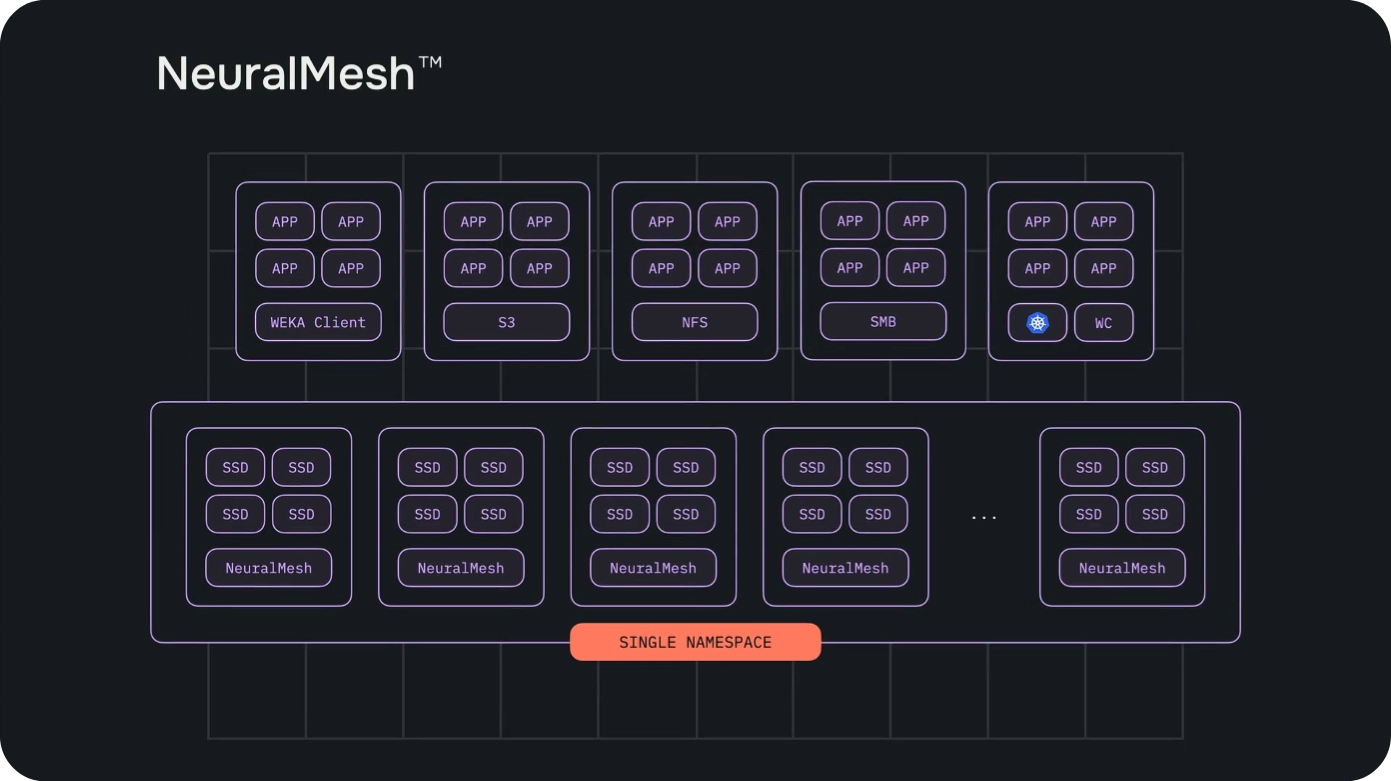

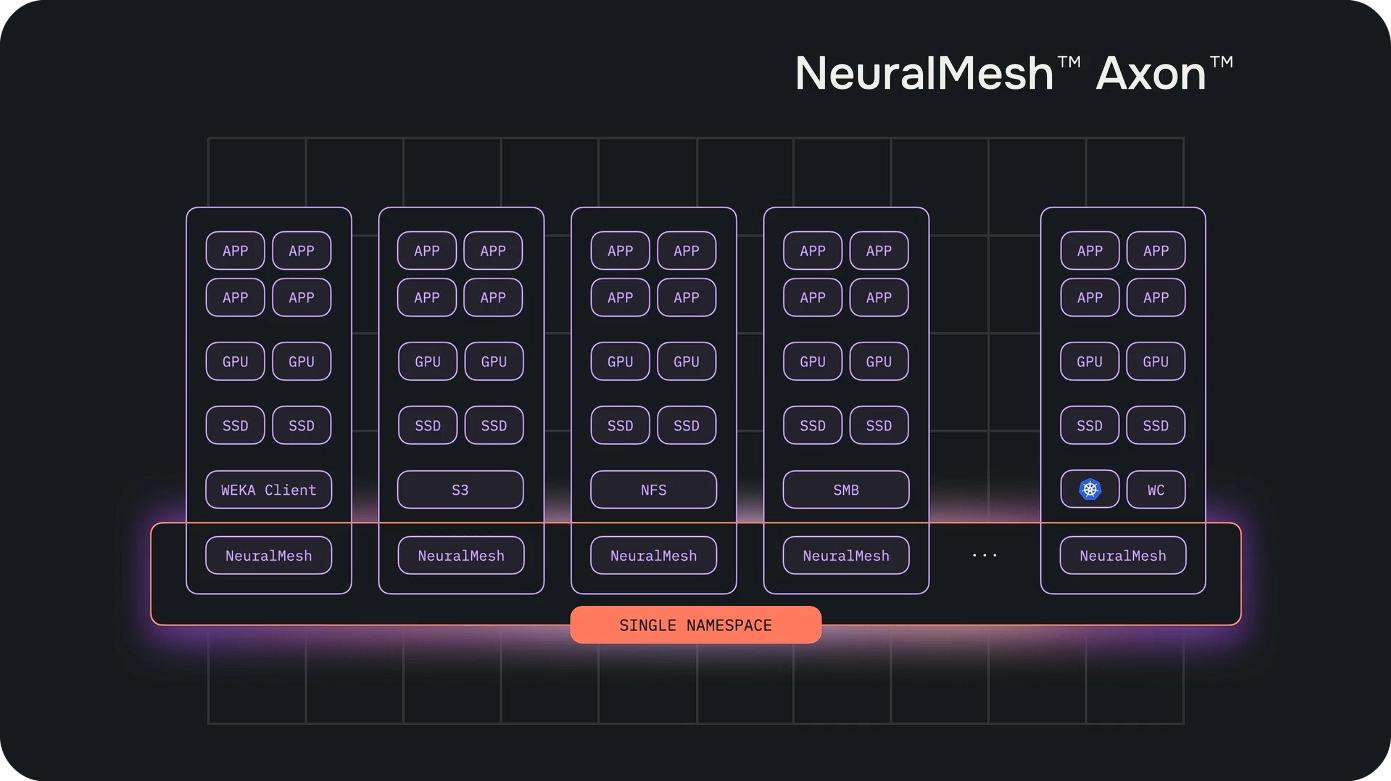

Traditional NeuralMesh vs. NeuralMesh Axon

WEKA NeuralMesh can be deployed on dedicated storage servers or, with Axon, directly on your GPU compute servers.

WEKA NeuralMesh

Deploy WEKA NeuralMesh for a flexible, AI-native data platform that scales across bare metal, cloud, and hybrid environments without hardware lock-in.

- Multi-protocol access (POSIX, S3, NFS, SMB) for diverse workloads

- Tiering and hierarchical storage for exceptional performance and cost optimization

- Flexible deployments via bare-metal, cloud, and hybrid storage infrastructure

- Exabyte-scale growth with microsecond latency, simply by adding storage nodes

WEKA NeuralMesh Axon

Reduce data transfer bottlenecks further by deploying WEKA NeuralMesh Axon directly on your GPU compute servers’ NVMe drives.

- Consolidate compute and storage infrastructure for lower TCO

- Leverage WEKA Augmented Memory Grid to overcome memory limitations

- Scale without limits, as WEKA NeuralMesh gets stronger as it grows

- Deliver ultra-low latency for GPU-heavy, high-throughput workloads

WEKA NeuralMesh Is Built for AI and Data-Heavy Industries

Artificial Intelligence

Deploy WEKA NeuralMesh Axon directly on your compute servers to lower TCO, eliminate data recall bottlenecks, and leverage ultra-low latency for peak AI training and inference throughput.

Design & Rendering

WEKA NeuralMesh delivers high-performance storage for design teams working with 3D models, rendering environments, and scene visualization. Enable real-time collaboration with ultra-low-latency access.

Engineering Simulation

WEKA NeuralMesh accelerates engineering simulation and collaboration with ultra-low-latency access to massive datasets. Run complex FEA, CFD, and multiphysics simulations on storage that scales seamlessly.

Scientific Computing

WEKA NeuralMesh accelerates healthcare and scientific breakthroughs with ultra-low-latency access to data, increased data security, and seamless scalability from terabytes to exabytes.
