Exxact TensorEX 4U HGX A100 Server - 1x Ampere Altra Max processor - TS4-107911847

The TensorEX TS4-107911847 is a 4U rack-mountable HGX A100 server supporting a single Ampere Altra Max ARM processor, 16x DDR4 memory slots, and 8x NVIDIA A100 Ampere GPUs (SXM4), with up to 600 GB/s of NVLink GPU-to-GPU bandwidth per GPU.

Deep learning and AI models can solve complex problems with less hand-written code, but training, building, and deploying them is compute-intensive. Whether it's data collection, annotation, training, or evaluation, leverage the immense parallelism of GPUs to parse, train, and evaluate at extremely high throughput. Process massive datasets faster with multi-GPU configurations and develop AI models more quickly than CPU-only systems allow.

Facilitate any stage of scientific research, from data preparation and 2D image processing to complex molecular dynamics (MD) simulations. Leverage GPUs to speed up calculations and research, and apply AI to complex problems such as protein folding, designing new molecules, and accelerating genome sequencing.

Engineering and product design applications are notorious for their compute-intensive requirements. Your system should never hold you back from designing the next big thing. Leverage high-core-count CPUs, ample RAM, and top-of-the-line GPUs for a responsive design experience in CAD applications like SOLIDWORKS and simulation applications like ANSYS.

3D design, rendering, and real-time engines have solidified their place in media and entertainment, manufacturing, and architectural design. As digital assets grow larger and more complex, so should your system. Exxact configures the right workstation or server for your workload so you can focus on being creative with your designs.

Ampere Altra & Altra Max ARM Processors

High Density, High Efficiency, Lower TCO

Power consumption is one of the critical factors in data centers and HPC. Ampere CPUs are built for highly dense workloads with extremely efficient cores. Workloads are distributed across a large number of small, efficient cores for higher performance per watt. Ampere-based servers power numerous cloud applications that require around-the-clock operation, such as web hosting, database management, and edge computing, while keeping power targets low.

- Better thermals and power efficiency deliver the same cloud services at a lower TCO.

- High core density and scalability suit performant cloud-native deployments.

- Don’t skimp on accelerators, storage, and I/O: leverage 128 lanes of PCIe 4.0 per CPU socket.
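For a rough sense of what 128 lanes of PCIe 4.0 means in bandwidth terms, the theoretical ceiling can be worked out from the PCIe 4.0 signaling rate of 16 GT/s per lane with 128b/130b line encoding. This is a back-of-the-envelope sketch, not a measured figure for this server: it ignores packet and protocol overhead, so real transfers will land below these numbers, and the function name is purely illustrative.

```python
def pcie_bandwidth_gb_per_s(lanes: int, gt_per_s: float = 16.0) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s (illustrative helper).

    Assumes 128b/130b line encoding (PCIe 3.0 and later) and ignores packet
    (TLP/DLLP) overhead, so achievable throughput is lower in practice.
    """
    encoding_efficiency = 128 / 130  # 128 payload bits per 130 transmitted bits
    bits_per_s_per_lane = gt_per_s * 1e9 * encoding_efficiency
    # Convert bits/s to bytes/s, then to GB/s (decimal gigabytes)
    return lanes * bits_per_s_per_lane / 8 / 1e9


if __name__ == "__main__":
    print(f"x16 slot:  {pcie_bandwidth_gb_per_s(16):.1f} GB/s per direction")
    print(f"128 lanes: {pcie_bandwidth_gb_per_s(128):.1f} GB/s per direction")
```

Under these assumptions a single x16 slot tops out around 31.5 GB/s per direction, and a full 128 lanes around 252 GB/s per direction.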

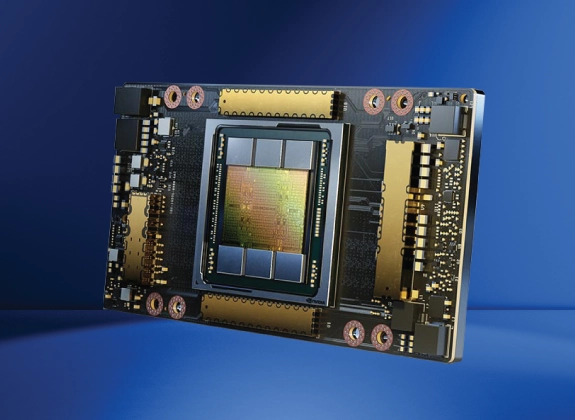

NVIDIA A100 Tensor Core GPU

Blistering Double Precision Accelerator for AI & HPC

NVIDIA A100 introduces double-precision Tensor Cores, the biggest milestone for HPC since GPUs first introduced double-precision computing. This enables researchers to reduce a 10-hour double-precision simulation on NVIDIA V100 Tensor Core GPUs to just four hours on A100.

- Accelerates and enables the most demanding HPC and data center workloads

- With 80 GB of HBM2e high-bandwidth memory and over 2 TB/s of memory bandwidth, A100 never skips a beat

A100 SXM4 GPU Options

| Model | Standard Memory | Memory Bandwidth (GB/s) | CUDA Cores | Tensor Cores | Single Precision (TFLOPS) | Double Precision (TFLOPS) | Power (W) |
|---|---|---|---|---|---|---|---|
| A100 80 GB SXM4 | 80 GB HBM2e | 2039 | 6912 | 432 | 19.5 | 9.7 | 400 |
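To put the per-GPU table in the context of a fully populated 8-GPU node, the sketch below multiplies each per-GPU figure by eight. It assumes all eight SXM4 positions hold the A100 80 GB model listed above; the totals are naive sums of peak datasheet numbers, not measured application throughput, and the power total covers the GPUs only (not the CPU, memory, storage, or cooling). The `GpuSpec` class and `aggregate` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Per-GPU figures copied from the A100 SXM4 options table."""
    name: str
    memory_gb: int
    bandwidth_gb_s: int
    cuda_cores: int
    tensor_cores: int
    fp32_tflops: float
    fp64_tflops: float
    power_w: int


A100_80GB_SXM4 = GpuSpec("A100 80 GB SXM4", 80, 2039, 6912, 432, 19.5, 9.7, 400)


def aggregate(spec: GpuSpec, count: int) -> dict:
    """Naive node totals: per-GPU peak figures times GPU count (hypothetical helper)."""
    return {
        "memory_gb": spec.memory_gb * count,
        "cuda_cores": spec.cuda_cores * count,
        "tensor_cores": spec.tensor_cores * count,
        "fp32_tflops": round(spec.fp32_tflops * count, 1),
        "fp64_tflops": round(spec.fp64_tflops * count, 1),
        "gpu_power_w": spec.power_w * count,  # GPUs only, not whole-system power
    }


if __name__ == "__main__":
    for key, value in aggregate(A100_80GB_SXM4, 8).items():
        print(f"{key}: {value}")
```

Run as a script, it reports 640 GB of HBM2e and 77.6 TFLOPS of peak FP64 (non-Tensor-Core) across the eight GPUs, with 3,200 W of combined GPU power.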

GPUs have delivered groundbreaking performance for deep learning research, with thousands of computational cores and up to 100x the application throughput of CPUs alone. Exxact's deep learning servers pair NVIDIA GPUs with state-of-the-art NVLink GPU-to-GPU interconnect technology and a full pre-installed suite of leading deep learning software, so developers can jump-start their research with the best tools available.

Features:

- NVIDIA DIGITS software providing powerful design, training, and visualization of deep neural networks for image classification

- Standard Ubuntu 18.04/20.04 pre-installed with the Exxact Machine Learning Image (EMLI)

- Google TensorFlow software library

- Automatic software update tool included

- Turnkey server with NVLink GPU-to-GPU interconnect topology

An EMLI Environment for Every Developer

Conda EMLI

For developers who want pre-installed deep learning frameworks and their dependencies in separate Python environments installed natively on the system.

Container EMLI

For developers who want pre-installed frameworks built on the latest NGC containers, GPU drivers, and libraries, in ready-to-deploy DL environments with the flexibility of containerization.

DIY EMLI

For experienced developers who want a minimalist install to set up their own private deep learning repositories or custom builds of deep learning frameworks.