AI Across Industries

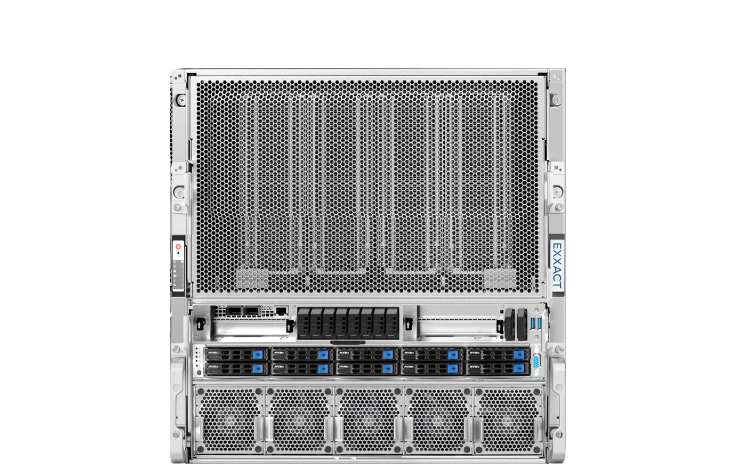

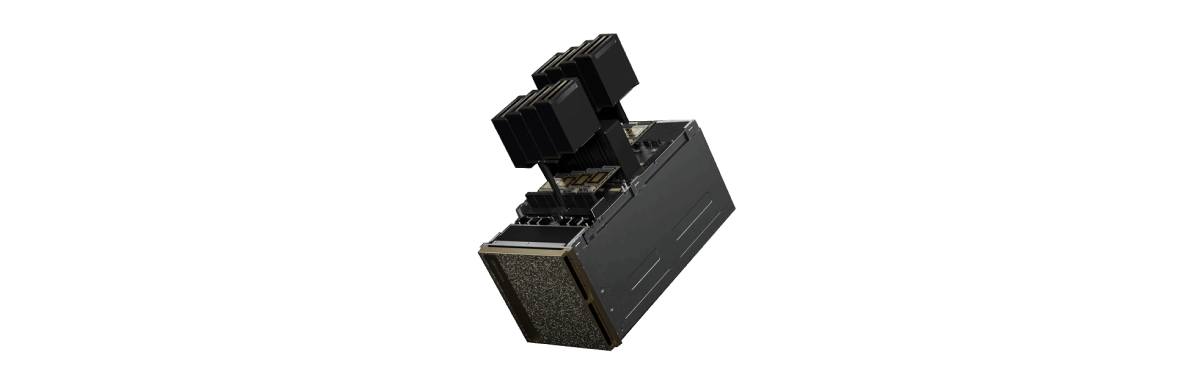

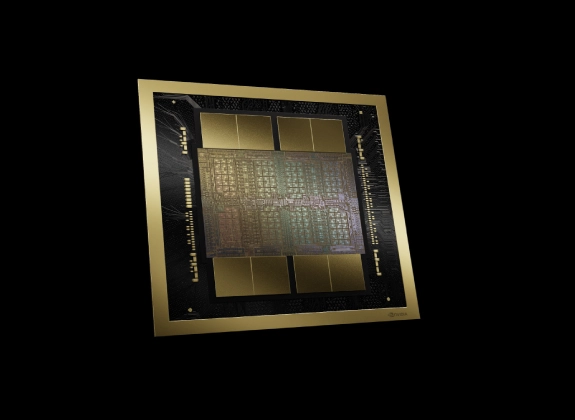

NVIDIA DGX B200 & H200

NVIDIA DGX is the gold standard for AI infrastructure: an all-in-one solution for training, fine-tuning, and inference that provides a seamless experience for enterprise AI workflows. As the building block for BasePOD and SuperPOD, DGX is designed to scale with your business needs. DGX H200 is available now, and DGX B200 is shipping soon.