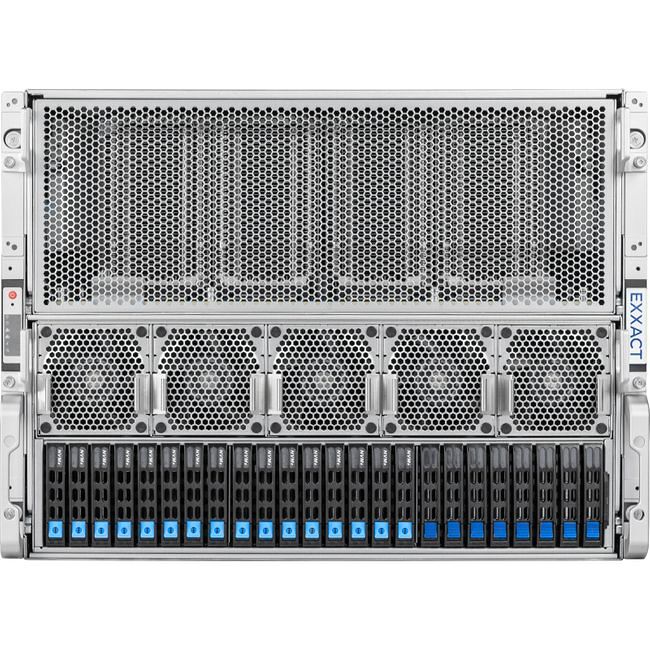

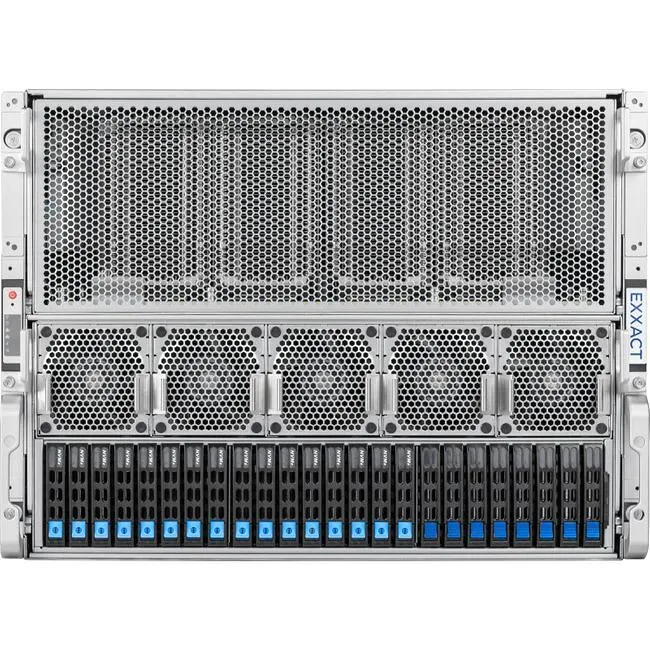

Exxact TensorEX 8U HGX H200 Server - 2x AMD EPYC 9004-Series processor - TS4-118380266

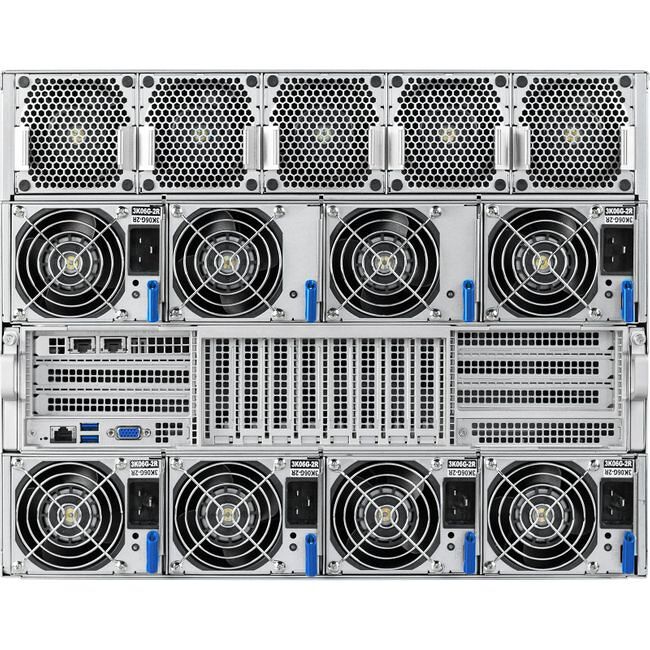

The TensorEX TS4-118380266 is an 8U rack-mountable HGX H200 server supporting 2x AMD EPYC 9004 Series processors, 24x DDR5 memory, and 8x NVIDIA H200 GPUs (SXM), with up to 900 GB/s NVLink interconnect.

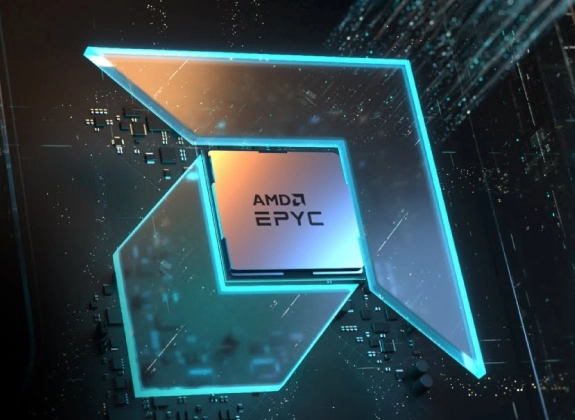

4th Generation AMD EPYC™ 9004 Series CPU

Up to 96 Cores of Unprecedented Performance and Efficiency

Data centers requiring the best speed, security, and scalability gravitate to AMD EPYC™. The 4th Generation AMD EPYC™ 9004 delivers leadership memory bandwidth and capacity, plus next-generation I/O with up to 160 PCIe 5.0 lanes and CXL. Train dense AI models, execute highly complex computations, or deploy thousands of VMs with confidence.

- Average 2x generational performance uplift in Enterprise, Cloud, and HPC

- Various SKUs optimized for core density, core performance, or TCO

- Consolidate your data center and reduce your carbon footprint with exceptional performance-per-watt leadership and core density

GPUs accelerate deep learning research with thousands of computational cores and up to 100x application throughput compared to CPUs alone. Exxact has developed the Deep Learning server, which combines NVIDIA GPU technology with NVLink GPU-to-GPU interconnect and a full pre-installed suite of leading deep learning software, so developers can get a jump-start on deep learning research.

Features:

- NVIDIA DIGITS software providing powerful design, training, and visualization of deep neural networks for image classification

- Pre-installed standard Ubuntu 18.04/20.04 with Exxact Machine Learning Image (EMLI)

- Google TensorFlow software library

- Automatic software update tool included

- A turn-key server with NVLink GPU-to-GPU interconnect topology

An EMLI Environment for Every Developer

Conda EMLI

For developers who want pre-installed deep learning frameworks and their dependencies in separate Python environments installed natively on the system.

Container EMLI

For developers who want pre-installed frameworks utilizing the latest NGC containers, GPU drivers, and libraries in ready-to-deploy DL environments with the flexibility of containerization.

DIY EMLI

For experienced developers who want a minimalist install to set up their own private deep learning repositories or custom builds of deep learning frameworks.
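Whichever EMLI variant is installed, a quick sanity check is to confirm that the driver reports all eight GPUs. The following is a minimal sketch, not part of EMLI itself: it assumes `nvidia-smi` (which ships with the NVIDIA driver) is on the `PATH`, and the function names `parse_gpu_list` and `query_gpus` are hypothetical helpers introduced here for illustration.

```python
import shutil
import subprocess

EXPECTED_GPUS = 8  # this system is configured with 8x H200 SXM GPUs


def parse_gpu_list(csv_text):
    """Parse `nvidia-smi --format=csv,noheader` output into (name, memory) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, memory = (field.strip() for field in line.split(",", 1))
        gpus.append((name, memory))
    return gpus


def query_gpus():
    """Ask the driver for the name and total memory of every visible GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; is the NVIDIA driver installed?")
    else:
        try:
            gpus = query_gpus()
        except subprocess.CalledProcessError as err:
            print(f"nvidia-smi failed with exit code {err.returncode}")
        else:
            for name, memory in gpus:
                print(f"{name}: {memory}")
            if len(gpus) != EXPECTED_GPUS:
                print(f"Warning: expected {EXPECTED_GPUS} GPUs, found {len(gpus)}")
```

The script uses only the Python standard library, so it should run the same way in a Conda environment, inside a container with GPU access, or on a minimal DIY install.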

H200 SXM GPU Options

| Model | Standard Memory | Memory Bandwidth (TB/s) | CUDA Cores | Tensor Cores | Single Precision (TFLOPS) | Double Precision (TFLOPS) | Power (W) |
|---|---|---|---|---|---|---|---|
| H200 141 GB SXM | 141 GB HBM3e | 4.8 | N/A | N/A | 67 | 34 | 700 |
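To put the per-GPU figures in the table above in system terms, the sketch below multiplies them by the eight GPUs in this chassis. These are simple theoretical peaks derived from the table, not measured results; the power figure covers the GPUs only and excludes CPUs, memory, and cooling. The function name `system_totals` is a hypothetical helper.

```python
# Per-GPU figures copied from the H200 SXM row of the table above.
GPU_COUNT = 8          # 8x H200 SXM in this chassis
MEMORY_GB = 141        # HBM3e capacity per GPU
BANDWIDTH_TB_S = 4.8   # memory bandwidth per GPU
FP32_TFLOPS = 67       # single precision per GPU
FP64_TFLOPS = 34       # double precision per GPU
POWER_W = 700          # power per GPU


def system_totals(count=GPU_COUNT):
    """Return peak aggregate figures for `count` GPUs (plain multiplication)."""
    return {
        "memory_gb": count * MEMORY_GB,
        "bandwidth_tb_s": round(count * BANDWIDTH_TB_S, 1),
        "fp32_tflops": count * FP32_TFLOPS,
        "fp64_tflops": count * FP64_TFLOPS,
        "gpu_power_w": count * POWER_W,
    }


if __name__ == "__main__":
    for name, value in system_totals().items():
        print(f"{name}: {value}")
```

For the full eight-GPU configuration this works out to 1,128 GB of HBM3e and 5,600 W of GPU power at peak.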